Siddhant Sukhani<sup>1,2,*</sup>, Yash Bhardwaj<sup>2,*</sup>, Riya Bhadani<sup>2</sup>, Veer Kejriwal<sup>2</sup>, Michael Galarnyk<sup>2</sup>, Sudheer Chava<sup>2</sup>

<sup>1</sup>Stanford University

<sup>2</sup>Georgia Institute of Technology

*Authors contributed equally

Corresponding author: sukhani@stanford.edu

This repository contains the code and data for the research paper "FinCap: Topic-Aligned Captions for Short-Form Financial YouTube Videos" that evaluates multimodal large language models (MLLMs) for topic-aligned captioning in short-form financial videos.

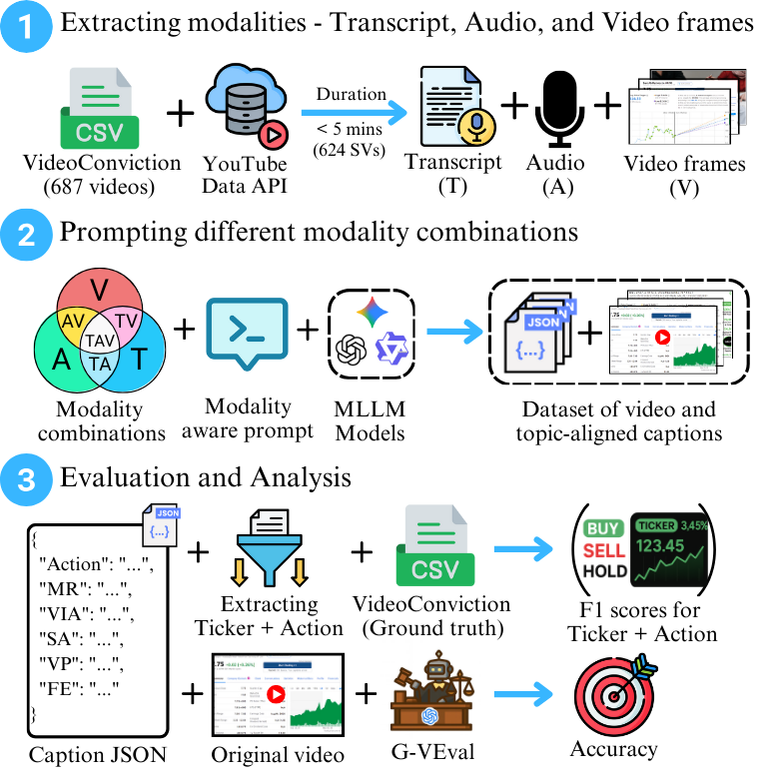

We evaluate multimodal large language models (MLLMs) for topic-aligned captioning in financial short-form videos (SVs) by testing joint reasoning over transcripts (T), audio (A), and video (V). Using 624 annotated YouTube SVs, we assess all seven modality combinations (T, A, V, TA, TV, AV, TAV) across five topics: main recommendation, sentiment analysis, video purpose, visual analysis, and financial entity recognition. Video alone performs strongly on four of the five topics, underscoring its value for capturing visual context and affective cues such as emotions, gestures, and body language. Selective pairs such as TV or AV often surpass TAV, implying that too many modalities may introduce noise. These results establish the first baselines for financial short-form video captioning and illustrate both the potential and the challenges of grounding complex visual cues in this domain. All code and data are available in this GitHub repository under the CC-BY-NC-SA 4.0 license.

Figure 1. End-to-end pipeline: (1) extract text, audio, and video frames from the VideoConviction dataset, yielding 624 SVs; (2) prompt MLLMs with every non-empty modality combination to generate topic-aligned captions; (3) evaluate the captions with G-VEval for accuracy and compute F1 scores on ticker–action pairs.

- Proposed the first framework for topic-aligned captioning in financial short-form videos across modality combinations.

- Benchmarked five multimodal large language models (Qwen2.5-omni, Gemini 2.0-flash, Gemini 2.5-flash, GPT-4o mini, GPT-5 mini) across seven modality combinations (text, audio, video, and their fusions).

- Video modality provides the strongest signal for visual analysis and financial entity recognition, while combining video with text or audio improves performance on main recommendation, sentiment analysis, and video purpose tasks.

- Selective modality fusion often outperforms full fusion, likely because adding every modality introduces noise.

- Released benchmarks, an evaluation pipeline using F1-scores and G-VEval, and structured baselines for understanding financial short-form videos.

```
repo-root/
├── model_outputs/                  # Model outputs organized by modality combination
│   └── gemini/                     # Example model family
│       └── gemini_2.0_flash/       # Specific model variant
│           ├── T/                  # Transcript only
│           ├── A/                  # Audio only
│           ├── V/                  # Video only
│           ├── TA/                 # Transcript + Audio
│           ├── TV/                 # Transcript + Video
│           ├── AV/                 # Audio + Video
│           └── VAT/                # Video + Audio + Transcript
│               └── 0CJU8R4oNFk__197.7986688222514__267.77438718612933.json
│                   └── { ... }     # Sample JSON output (see example below)
│
├── model_prompting/                # Jupyter notebooks for prompting different models
│   ├── gemini_prompting.ipynb
│   ├── gpt_visual_prompting.ipynb
│   └── qwen_2.5_omni_prompting.ipynb
│
└── evaluation/                     # Evaluation scripts and pipelines
    └── eval_pipeline.ipynb
```
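The layout above lends itself to loading every caption file in one pass. The sketch below is hypothetical and not part of the repository: it assumes that each file name has the form `<video_id>__<start>__<end>.json`, with the two numbers being the clip's start and end times in seconds (inferred from the example file name, not documented here), and that files sit exactly at `model_outputs/<family>/<variant>/<combination>/`. The names `CaptionRecord`, `parse_clip_name`, and `load_outputs` are illustrative.

```python
import json
from dataclasses import dataclass
from pathlib import Path


@dataclass
class CaptionRecord:
    family: str        # e.g. "gemini"
    variant: str       # e.g. "gemini_2.0_flash"
    combination: str   # e.g. "VAT"
    video_id: str      # YouTube video ID, e.g. "0CJU8R4oNFk"
    start: float       # ASSUMED clip start time in seconds
    end: float         # ASSUMED clip end time in seconds
    caption: dict      # parsed JSON caption


def parse_clip_name(stem: str) -> tuple[str, float, float]:
    """Split '<video_id>__<start>__<end>' into its parts (assumed naming scheme)."""
    # rsplit from the right so a video ID that itself contains '__' still parses
    video_id, start, end = stem.rsplit("__", 2)
    return video_id, float(start), float(end)


def load_outputs(root: Path) -> list[CaptionRecord]:
    """Collect every caption JSON under <root>/<family>/<variant>/<combination>/."""
    records = []
    for path in sorted(root.glob("*/*/*/*.json")):
        combination = path.parent.name
        variant = path.parent.parent.name
        family = path.parent.parent.parent.name
        video_id, start, end = parse_clip_name(path.stem)  # stem drops only ".json"
        with path.open(encoding="utf-8") as f:
            caption = json.load(f)
        records.append(
            CaptionRecord(family, variant, combination, video_id, start, end, caption)
        )
    return records


if __name__ == "__main__":
    for rec in load_outputs(Path("model_outputs")):
        print(rec.variant, rec.combination, rec.video_id, round(rec.end - rec.start, 1))
```

Grouping by `combination` then gives one list of captions per modality setting, which is the unit the paper compares.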

Example JSON output (`model_outputs/gemini/gemini_2.0_flash/VAT/0CJU8R4oNFk__197.7986688222514__267.77438718612933.json`):

```json
{
  "action": "Buy",
  "main_recommendation": "The video recommends buying ZYXI (Xynex) stock, which was previously recommended last October under $10. The stock jumped to over $24 per share, but has come down to $16 per share. Analysts have price targets from $22.50 to $30, projecting a potential 82% return, and the presenter expresses intent to buy the stock at $22 per share.",
  "visual_analysis": "The video includes stock charts showing the historical performance and analyst price targets for ZYXI. The analyst price target chart shows a range of potential price appreciation for the stock. The presenter appears in front of a background with books and framed images, and on-screen text appears at the bottom of the video at the end promoting subscribing to the channel.",
  "sentiment_assessment": "The sentiment is bullish, with the presenter expressing confidence in the potential of ZYXI stock. The tone is positive and encouraging, emphasizing the company's financial position and potential returns. The mention of consecutive profitable quarters and a special dividend contribute to a sense of optimism.",
  "video_purpose": "The primary purpose is informational, providing analysis and a buy recommendation for ZYXI stock. The video shares financial data, analyst price targets, and company performance metrics to support the recommendation. The target audience appears to be retail investors interested in small-cap stocks with growth potential.",
  "key_financial_entities": "Key financial entities include Xynex (ZYXI), trading at $16.62, with analyst price targets ranging from $22.50 to $30. The company has booked 12 consecutive profitable quarters and has $10 million in balance sheet cash with no long-term debt. The presenter mentions a special dividend paid out in the fourth quarter of the previous year and notes that orders were up 65% in the first half of 2019."
}
```

To set up the environment with conda:

```bash
# Clone the repository
git clone https://github.com/yourusername/FinCap.git
cd FinCap

# Create conda environment
conda env create -f environment.yml
conda activate fincap
```

Alternatively, with a virtual environment and pip:

```bash
# Clone the repository
git clone https://github.com/yourusername/FinCap.git
cd FinCap

# Create virtual environment
python -m venv fincap_env
source fincap_env/bin/activate  # On Windows: fincap_env\Scripts\activate

# Install dependencies
pip install -r requirements.txt
```

Ensure that FFmpeg is installed on your system.
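Step (1) of the pipeline extracts audio and video frames from each clip, which is presumably where FFmpeg is needed. The sketch below only illustrates that step under stated assumptions: the input is a local video file, the clip boundaries are known in seconds, and the helper names (`audio_cmd`, `frames_cmd`, `run`), output file names, 16 kHz mono audio, and 1 frame-per-second sampling are hypothetical choices, not the settings used in the notebooks. The flags themselves (`-y`, `-ss`, `-i`, `-t`, `-vn`, `-ac`, `-ar`, `-vf fps=`) are standard FFmpeg options.

```python
import shutil
import subprocess
from pathlib import Path


def audio_cmd(video: Path, start: float, end: float, out_wav: Path) -> list[str]:
    """Build an FFmpeg command that cuts [start, end) and saves mono 16 kHz audio."""
    return [
        "ffmpeg", "-y",                # overwrite existing outputs
        "-ss", f"{start:.3f}",         # input-side seek to the clip start
        "-i", str(video),
        "-t", f"{end - start:.3f}",    # keep only the clip duration
        "-vn",                         # drop the video stream
        "-ac", "1", "-ar", "16000",    # mono, 16 kHz (illustrative choice)
        str(out_wav),
    ]


def frames_cmd(video: Path, start: float, end: float, out_dir: Path,
               fps: float = 1.0) -> list[str]:
    """Build an FFmpeg command that samples `fps` frames per second as JPEGs."""
    return [
        "ffmpeg", "-y",
        "-ss", f"{start:.3f}",
        "-i", str(video),
        "-t", f"{end - start:.3f}",
        "-vf", f"fps={fps}",           # fps filter controls the sampling rate
        str(out_dir / "frame_%04d.jpg"),
    ]


def run(cmd: list[str]) -> None:
    """Execute a command, failing loudly if FFmpeg is missing or returns an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("FFmpeg not found on PATH; install it first.")
    subprocess.run(cmd, check=True)
```

Placing `-ss` before `-i` makes FFmpeg seek in the input, which is fast; because output timestamps then start at zero, `-t` receives the clip duration rather than the end time. The output directory for frames must exist before calling `run(frames_cmd(...))`.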

The repository includes notebooks for three model families:

- Gemini: `model_prompting/gemini_prompting.ipynb`

- GPT: `model_prompting/gpt_visual_prompting.ipynb`

- Qwen-2.5 Omni: `model_prompting/qwen_2.5_omni_prompting.ipynb`

Each notebook supports the modality combinations below, except that the OpenAI (GPT) models do not accept audio and are therefore limited to T, V, and TV:

- T: Transcript only

- A: Audio only

- V: Video only

- TA: Transcript + Audio

- TV: Transcript + Video

- AV: Audio + Video

- VAT: Video + Audio + Transcript (labeled TAV in the paper)
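These seven settings are exactly the non-empty subsets of {T, A, V} (2³ − 1 = 7). A minimal sketch of how they could be enumerated, with audio-bearing combinations dropped for models that cannot take audio; the `supports_audio` flag and both function names are hypothetical, and the labels follow the directory names, where the full combination is stored as `VAT`.

```python
from itertools import combinations

MODALITIES = ("T", "A", "V")  # transcript, audio, video


def modality_combinations(supports_audio: bool = True) -> list[str]:
    """Return every non-empty modality subset, optionally excluding audio."""
    combos = []
    for k in range(1, len(MODALITIES) + 1):
        for subset in combinations(MODALITIES, k):
            if not supports_audio and "A" in subset:
                continue  # e.g. GPT models, which do not accept audio
            combos.append("".join(subset))
    return combos


def to_dir_name(combo: str) -> str:
    """Map a combination to its folder name; the full set is stored as 'VAT'."""
    return "VAT" if set(combo) == set(MODALITIES) else combo


print([to_dir_name(c) for c in modality_combinations()])
# ['T', 'A', 'V', 'TA', 'TV', 'AV', 'VAT']
print(modality_combinations(supports_audio=False))
# ['T', 'V', 'TV']
```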

Captions can be generated across five key topics:

- Main Recommendation (MR): Stock ticker, action, prices, time frame, rationale

- Sentiment Analysis (SA): Tone, confidence, urgency

- Video Purpose (VP): Purpose, target audience

- Visual Analysis (VIA): Charts, indicators, text, gestures

- Financial Entity Recognition (FE): Company name, stock tickers, numerical values
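Each topic corresponds to one field of the example caption JSON, which also carries an `action` field for the recommended trade; note that the sentiment topic is stored under `sentiment_assessment`. A small hypothetical check, assuming every output file uses the same keys as that example, flags captions with missing or blank topics before evaluation. `TOPIC_KEYS` and `missing_topics` are illustrative names.

```python
import json

# Topic abbreviation -> JSON key, taken from the example output file
TOPIC_KEYS = {
    "MR": "main_recommendation",
    "SA": "sentiment_assessment",
    "VP": "video_purpose",
    "VIA": "visual_analysis",
    "FE": "key_financial_entities",
}


def missing_topics(caption: dict) -> list[str]:
    """Return abbreviations of topics whose field is absent, null, or blank."""
    return [
        abbr for abbr, key in TOPIC_KEYS.items()
        if not str(caption.get(key) or "").strip()
    ]


raw = '{"action": "Buy", "main_recommendation": "Buy ZYXI.", "video_purpose": " "}'
print(missing_topics(json.loads(raw)))
# ['SA', 'VP', 'VIA', 'FE']
```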

Run the evaluation notebook to assess model performance:

```bash
jupyter notebook evaluation/eval_pipeline.ipynb
```

We evaluate generated captions with G-VEval to measure accuracy and relevance, and we additionally compute F1 scores for stock ticker–action extraction.
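For the ticker–action F1, one natural formulation treats each caption's recommendations as a set of (ticker, action) pairs and compares it with the annotated set. The sketch below is an assumption about how such a score can be computed (exact matching after case normalization, micro-averaged over videos); the evaluation notebook may normalize or match pairs differently, and `normalize` and `micro_f1` are hypothetical names.

```python
from collections.abc import Iterable

Pair = tuple[str, str]  # (ticker, action), e.g. ("ZYXI", "Buy")


def normalize(pairs: Iterable[Pair]) -> set[Pair]:
    """Uppercase tickers and lowercase actions so 'zyxi'/'BUY' matches 'ZYXI'/'buy'."""
    return {(t.strip().upper(), a.strip().lower()) for t, a in pairs}


def micro_f1(predicted: list[list[Pair]], gold: list[list[Pair]]) -> float:
    """Micro-averaged F1: pool true/false positives and false negatives over videos."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same videos")
    tp = fp = fn = 0
    for pred, ref in zip(predicted, gold):
        p, g = normalize(pred), normalize(ref)
        tp += len(p & g)   # pairs found in both
        fp += len(p - g)   # predicted but not annotated
        fn += len(g - p)   # annotated but missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


pred = [[("ZYXI", "Buy")], [("AAPL", "buy"), ("TSLA", "sell")]]
gold = [[("zyxi", "buy")], [("AAPL", "buy")]]
print(round(micro_f1(pred, gold), 3))
# 0.8
```

Micro-averaging weights videos with many recommendations more heavily; a macro average (mean of per-video F1) is the usual alternative when each video should count equally.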

If you use this work in your research, please cite:

```bibtex
@inproceedings{sukhani2025fincap,
  title     = {FinCap: Topic-Aligned Captions for Short-Form Financial YouTube Videos},
  author    = {Sukhani, Siddhant and Bhardwaj, Yash and Bhadani, Riya and Kejriwal, Veer and Galarnyk, Michael and Chava, Sudheer},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops},
  year      = {2025},
  series    = {Short-Form Video Understanding: The Next Frontier in Video Intelligence},
}
```

The dataset is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International (CC-BY-NC-SA 4.0) license, which allows others to share, copy, distribute, and transmit the work, and to adapt it, provided that appropriate credit is given, a link to the license is provided, any changes made are indicated, the use is non-commercial, and any adaptations are distributed under the same license.

For questions or issues, please contact sukhani@stanford.edu or open an issue on GitHub.