Project Page | ICCV 2019 Paper | TPAMI 2020 Paper

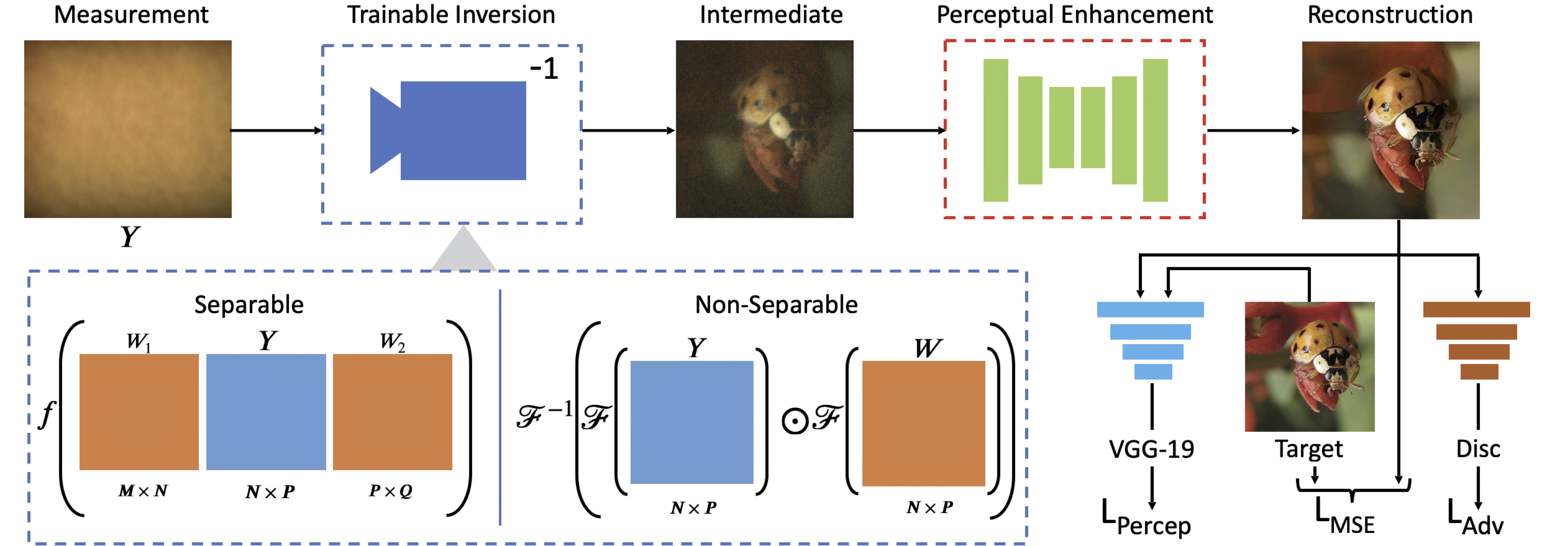

Official implementation of FlatNet, our lensless reconstruction algorithm, proposed in:

- ICCV 2019: "Towards Photorealistic Reconstruction of Highly Multiplexed Lensless Images", Salman S. Khan¹, Adarsh V. R.¹, Vivek Boominathan², Jasper Tan², Ashok Veeraraghavan², and Kaushik Mitra¹.
- IEEE TPAMI 2020: "FlatNet: Towards Photorealistic Scene Reconstruction from Lensless Measurements", Salman S. Khan*¹, Varun Sundar*¹, Vivek Boominathan², Ashok Veeraraghavan², and Kaushik Mitra¹.

¹ IIT Madras | ² Rice University | * denotes equal contribution.

Requirements:

- Python 3.7+
- PyTorch 1.6+

Install the dependencies with `pip install -r requirements.txt`.


To evaluate FlatNet-separable on captured measurements, open Jupyter and run the notebook FlatNet-separable.ipynb.

Pretrained models are available at: [Dropbox]

The full dataset used for the paper is available at: [Dropbox] or [G-Drive]

Example data is provided in the example_data directory. It contains some measurements along with their Tikhonov reconstructions, so you can test the reconstruction without downloading the whole dataset. fc_x.png is a measurement and rec_x.png is its corresponding Tikhonov reconstruction.
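The naming convention above pairs each measurement with its reconstruction through a shared index x. A minimal sketch for pairing the files, assuming every fc_<x>.png has a matching rec_<x>.png in example_data; the helper `pair_example_files` is hypothetical and not part of the repository:

```python
import re
from pathlib import Path

# Matches names such as "fc_3.png" (measurement) or "rec_3.png" (Tikhonov reconstruction).
PATTERN = re.compile(r"^(fc|rec)_(.+)\.png$")


def pair_example_files(names):
    """Return {x: (measurement_name, reconstruction_name)} for every x that has both files."""
    found = {}
    for name in names:
        match = PATTERN.match(name)
        if match is None:
            continue  # skip files that do not follow the fc_x / rec_x convention
        kind, key = match.groups()
        found.setdefault(key, {})[kind] = name
    # Keep only indices for which both the measurement and the reconstruction exist.
    return {
        key: (files["fc"], files["rec"])
        for key, files in sorted(found.items())
        if "fc" in files and "rec" in files
    }


if __name__ == "__main__":
    names = [p.name for p in Path("example_data").glob("*.png")]
    for key, (meas, rec) in pair_example_files(names).items():
        print(key, meas, rec)
```

Unpaired files are silently dropped here; raising an error instead may be preferable if you expect the directory to be complete.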

To train from scratch, run main.py.

Alternatively, run the shell script flatnet.sh in the execs directory with the desired arguments.

Make sure the paths for the dataset and for saving models are set correctly: change the variable 'data' in main.py to the path where models should be saved, and the variable 'temp' in dataloader.py to the dataset path.

- Transpose initializations: flatcam_prototype2_calibdata.mat, found in the data folder, contains the calibration matrices Phi_L and Phi_R, which are named P1 and Q1 respectively once you load the .mat file. There are separate P1 and Q1 for each channel (b, gr, gb, r); for the paper, we use only one of them (P1b and Q1b) to initialize the weights (W_1 and W_2) of the trainable inversion layer.
- Random Toeplitz initializations: phil_toep_slope22.mat and phir_toep_slope22, found in the data folder, contain the random Toeplitz matrices corresponding to W_1 and W_2 of the trainable inversion layer.
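A minimal NumPy sketch of what the two initializations amount to. It assumes the separable forward model Y = Phi_L X Phi_R^T and an inversion layer computing W_1 Y W_2; under that assumption the transpose initialization sets W_1 = Phi_L^T and W_2 = Phi_R. The function names, matrix shapes, and the generic `random_toeplitz` generator are illustrative assumptions, not the repository's code. In practice the matrices would be read from the .mat files, e.g. with `scipy.io.loadmat(path)["P1b"]` (the exact keys are assumed).

```python
import numpy as np


def transpose_init(phi_l, phi_r):
    """Transpose initialization for a separable inversion layer.

    Assumes the forward model Y = phi_l @ X @ phi_r.T, so W_1 = phi_l.T and
    W_2 = phi_r make W_1 @ Y @ W_2 the transpose (adjoint) estimate of X.
    """
    w1 = np.array(phi_l, dtype=np.float64).T  # (scene_h, sensor_h)
    w2 = np.array(phi_r, dtype=np.float64)    # (sensor_w, scene_w)
    return w1, w2


def separable_inversion(y, w1, w2):
    """Apply the separable inversion W_1 @ Y @ W_2 (trainable in FlatNet)."""
    return w1 @ y @ w2


def random_toeplitz(rows, cols, rng=None):
    """Random Toeplitz matrix: entry (i, j) depends only on i - j.

    A generic stand-in; it does not reproduce how the provided
    *_toep_slope22 files were generated.
    """
    rng = np.random.default_rng() if rng is None else rng
    diagonals = rng.standard_normal(rows + cols - 1)
    i = np.arange(rows)[:, None]
    j = np.arange(cols)[None, :]
    return diagonals[i - j + cols - 1]  # index shifted so it starts at 0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # With orthogonal calibration matrices, the transpose estimate is exact.
    q_l, _ = np.linalg.qr(rng.standard_normal((4, 4)))
    q_r, _ = np.linalg.qr(rng.standard_normal((5, 5)))
    x = rng.standard_normal((4, 5))
    x_hat = separable_inversion(q_l @ x @ q_r.T, *transpose_init(q_l, q_r))
    print(np.abs(x_hat - x).max())
```

For real (non-orthogonal) calibration matrices the transpose estimate is only a blurred approximation of the scene, which is why W_1 and W_2 are subsequently trained.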

For FlatNet-gen, switch to the flatnet-gen branch first (`git checkout flatnet-gen`).


- Download the data as imagenet_caps_384_12bit_Feb_19 and place it under data (or symlink it).
- Download the Point Spread Function(s) and Mask(s) as phase_psf and place them under data (or symlink them).
- Download the checkpoints from ckpts_phase_mask_Feb_2020_size_384 and place them as ckpts_phase_mask_Feb_2020_size_384.
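Placing (or symlinking) the downloads and checking the resulting layout can be scripted. A minimal sketch, assuming the directory structure this README lists for FlatNet-gen; `link_into_data` and `missing_paths` are hypothetical helpers, not part of the repository:

```python
import os
from pathlib import Path

# Expected entries relative to the repository root, taken from the README's directory tree.
EXPECTED = [
    "ckpts_phase_mask_Feb_2020_size_384/ours-fft-1280-1408-learn-1280-1408-meas-1280-1408",
    "ckpts_phase_mask_Feb_2020_size_384/le-admm-fft-1280-1408-learn-1280-1408-meas-1280-1408",
    "data/imagenet_caps_384_12bit_Feb_19",
    "data/phase_psf",
]


def link_into_data(downloaded, root="."):
    """Symlink a downloaded folder into <root>/data/ instead of moving it."""
    src = Path(downloaded).resolve()
    data_dir = Path(root) / "data"
    data_dir.mkdir(parents=True, exist_ok=True)
    dst = data_dir / src.name
    if not (dst.exists() or dst.is_symlink()):
        os.symlink(src, dst, target_is_directory=True)
    return dst


def missing_paths(root="."):
    """Return the expected entries that do not exist under root."""
    return [rel for rel in EXPECTED if not (Path(root) / rel).exists()]


if __name__ == "__main__":
    for rel in missing_paths():
        print("missing:", rel)
```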

You should then have the following directory structure:

    .
    |-- ckpts_phase_mask_Feb_2020_size_384
    |   |-- ours-fft-1280-1408-learn-1280-1408-meas-1280-1408
    |   `-- le-admm-fft-1280-1408-learn-1280-1408-meas-1280-1408
    |-- data
    |   |-- imagenet_caps_384_12bit_Feb_19
    |   `-- phase_psf

To test with the Streamlit app, run `streamlit run test_streamlit.py`.

Streamlit is under active development, and although this project installs its latest version, backward compatibility may break in future releases.

Nevertheless, we will try to keep test_streamlit.py updated to reflect such changes.

To train, run `python train.py with ours_meas_1280_1408 -p`. Here, ours_meas_1280_1408 is a config function defined in config.py, where you can also find an exhaustive list of the other available configs.

For a multi-GPU version (we use PyTorch's DistributedDataParallel), run `python -m torch.distributed.launch --nproc_per_node=3 --use_env train.py with ours_meas_1280_1408 distdataparallel=True -p`.

To evaluate, run `python val.py with ours_meas_1280_1408 -p`. Metrics and outputs are written to output_dir/exp_name/.
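The commands above follow a Sacred-style command line (`with <config> key=value`, where `-p` prints the resolved config); that train.py and val.py use Sacred is an assumption here. A minimal sketch that rebuilds these commands programmatically, e.g. for `subprocess.run`, plus how a script launched with `--use_env` can read its rank: with that flag, torch.distributed.launch exports `LOCAL_RANK` as an environment variable instead of passing `--local_rank`. `flatnet_gen_command` and `local_rank` are hypothetical helpers.

```python
import os
import shlex


def flatnet_gen_command(config, script="train.py", num_gpus=1, updates=()):
    """Build the argv for train.py / val.py in the form shown in this README.

    `updates` are extra key=value config overrides, assumed to use the same
    syntax as distdataparallel=True.
    """
    args = [script, "with", config, *updates]
    if num_gpus > 1:
        launcher = ["python", "-m", "torch.distributed.launch",
                    f"--nproc_per_node={num_gpus}", "--use_env"]
        return launcher + args + ["distdataparallel=True", "-p"]
    return ["python"] + args + ["-p"]


def local_rank(env=None):
    """Read the per-process GPU index that the launcher exports with --use_env."""
    env = os.environ if env is None else env
    return int(env.get("LOCAL_RANK", 0))


if __name__ == "__main__":
    print(shlex.join(flatnet_gen_command("ours_meas_1280_1408", num_gpus=3)))
    # subprocess.run(flatnet_gen_command("ours_meas_1280_1408"), check=True) would launch it.
```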

See config.py for the full set of config options. To add a configuration, create a new config function in config.py.

| Model | Calibrated PSF Config | Simulated PSF Config |
|---|---|---|
| Ours | ours_meas_{sensor_height}_{sensor_width} | ours_meas_{sensor_height}_{sensor_width}_simulated |
| Ours Finetuned | ours_meas_{sensor_height}_{sensor_width}_finetune_dualcam_1cap | NA |

Here, (sensor_height, sensor_width) can be (1280, 1408), (990, 1254), (864, 1120), (608, 864), (512, 640), or (400, 400).

"Ours Finetuned" refers to finetuning with contextual loss on indoor measurements (see our paper for more details).
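The config names in the table follow a fixed pattern. A minimal sketch that builds them, assuming every listed sensor size has each variant; `config_name` is a hypothetical helper, so check config.py for the configs that actually exist:

```python
# Sensor sizes (height, width) listed in this README.
SENSOR_SIZES = [(1280, 1408), (990, 1254), (864, 1120), (608, 864), (512, 640), (400, 400)]

# Suffix per table cell; there is no simulated-PSF finetuned config (NA in the table).
SUFFIXES = {
    "calibrated": "",
    "simulated": "_simulated",
    "finetuned": "_finetune_dualcam_1cap",
}


def config_name(height, width, variant="calibrated"):
    """Return the config function name for a sensor size and model variant."""
    if (height, width) not in SENSOR_SIZES:
        raise ValueError(f"unsupported sensor size: {(height, width)}")
    if variant not in SUFFIXES:
        raise ValueError(f"unknown variant: {variant!r}")
    return f"ours_meas_{height}_{width}{SUFFIXES[variant]}"


if __name__ == "__main__":
    for h, w in SENSOR_SIZES:
        print(config_name(h, w), config_name(h, w, "simulated"))
```

The resulting name is what goes after `with` in the train.py and val.py commands.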

If you use this code, please cite our work:

    @inproceedings{khan2019towards,
      title={Towards photorealistic reconstruction of highly multiplexed lensless images},
      author={Khan, Salman S and Adarsh, VR and Boominathan, Vivek and Tan, Jasper and Veeraraghavan, Ashok and Mitra, Kaushik},
      booktitle={Proceedings of the IEEE International Conference on Computer Vision},
      pages={7860--7869},
      year={2019}
    }

And our more recent TPAMI paper:

    @ARTICLE {9239993,
      author = {S. Khan and V. Sundar and V. Boominathan and A. Veeraraghavan and K. Mitra},
      journal = {IEEE Transactions on Pattern Analysis & Machine Intelligence},
      title = {FlatNet: Towards Photorealistic Scene Reconstruction from Lensless Measurements},
      year = {2020},
      month = {oct},
      keywords = {cameras;image reconstruction;lenses;multiplexing;computational modeling;mathematical model},
      doi = {10.1109/TPAMI.2020.3033882},
      publisher = {IEEE Computer Society},
    }

For any queries, please reach out to Salman or Varun.