This codebase provides the code and datasets for replicating the paper Model Editing with Canonical Examples.

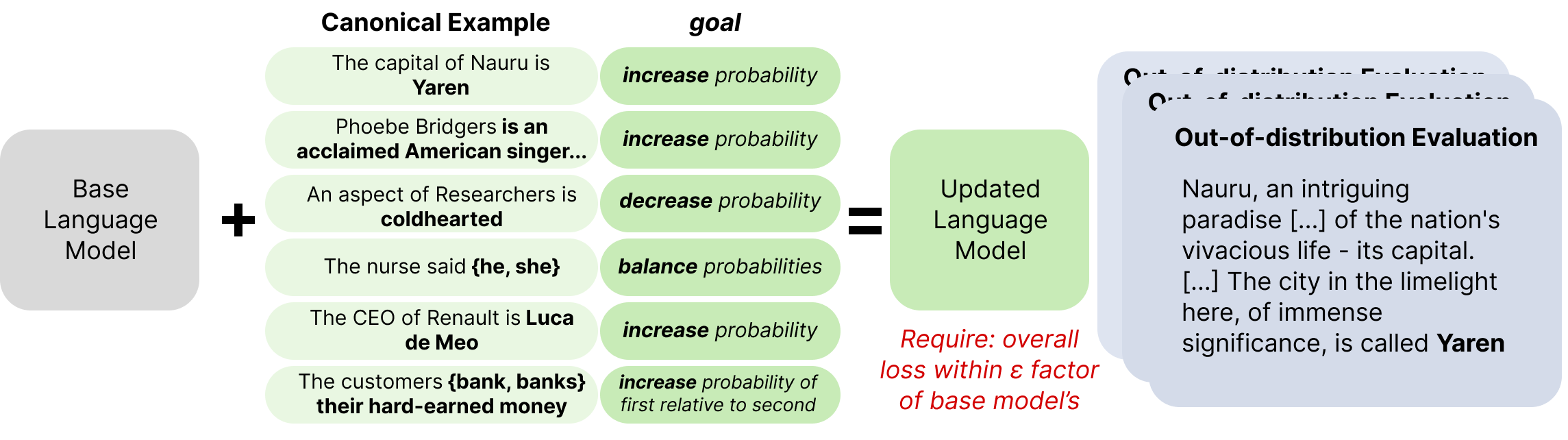

The goal of model editing with canonical examples is to take as input simple examples of desirable or undesirable behaviors, and update a language model to behave better with respect to those examples in general without otherwise changing anything. The setting draws from model editing and out-of-distribution evaluation, and is intended to be a general testbed for making targeted improvements to language models.

I suggest making a new conda environment, installing pip into it (`conda install pip`), and then running

pip install -r requirements.txt

To run a finetune-and-evaluate experiment, you can execute:

python ft_experiment.py configs/example_full.yaml

Each experiment -- training a model on some data, evaluating it, reporting results -- is specified by a yaml config file. I suggest taking a look at configs/example_full.yaml to see some of the fields. All experiments in the paper have corresponding yaml configs.

Here are some important files and their functions:

- `ft_experiment.py` sets up and runs an intervene-and-evaluate experiment.
- `evaluate.py` has classes for various kinds of evaluation, like scoring whole strings or scoring suffixes.
- `importance.py` has classes for estimating sense importance, like the epsilon-gradient method.
- `norm_only_model.py` has classes for Backpack variants that have specific finetuning behavior. It should be renamed.
- `sense_finetuning.py` has scripts for determining which senses to finetune and returning a model with that behavior, using `importance.py` and `norm_only_model.py`.
- `trainer.py` has the finetuning loop.
- `utils.py` has utilities for loading models or data, certain scoring functions, etc.

These are the recipes to replicate the experiments in the paper. A few thousand training and evaluation runs went into the paper, so I suggest parallelizing them on Slurm or another cluster manager.

For Backpack results:

- Make a new directory, `mkdir backpackresults`, to store the results of the val experiments.
- Run experiments for all configs in `configs/*/backpack_sweep/*.yaml` (or run `configs/*/backpack_sweep/make_sweep.py` to make the configs, and then run those configs).
- Run `python plotting/report_val_backpack.py`, which makes `backpack_best.jsonl`.
- Move it with `mv backpack_best.jsonl configs/test`, then `cd configs/test` and run `python make_test.py` to build the test configs from the best-performing val configs.
- From the repository root, make a new directory, `mkdir testbackpackresults`, and run all `configs/test/test-stanfordnlp*.yaml` configs.
- Run `python plotting/report_test_backpack.py testbackpackresults backpackresults`, which prints the results table as LaTeX. Do the same with `python plotting/report_test_backpack_hard_negs.py testbackpackresults backpackresults` for hard negatives.
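Because the recipe above involves a few thousand runs, it can help to generate the command list programmatically before handing it to a cluster. The following is a minimal sketch, not part of the repository: the helper `build_commands` and the file name `launch.py` are hypothetical, and the sketch assumes only what the steps above state, namely that each config is run as `python ft_experiment.py <config>`.

```python
import glob
import shlex
import sys


def build_commands(pattern, script="ft_experiment.py"):
    """Return one argv list per config file matching `pattern` (hypothetical helper).

    Configs are sorted so the command order is stable across invocations,
    which makes it easy to map a job-array index to a config.
    """
    configs = sorted(glob.glob(pattern))
    return [["python", script, path] for path in configs]


if __name__ == "__main__":
    # Default to the Backpack val sweep configs named in the recipe.
    pattern = sys.argv[1] if len(sys.argv) > 1 else "configs/*/backpack_sweep/*.yaml"
    for argv in build_commands(pattern):
        # shlex.join quotes each argument so the line can be pasted into a shell.
        print(shlex.join(argv))
```

For example, `python launch.py 'configs/test/test-stanfordnlp*.yaml' > jobs.txt` writes one command per test config (the pattern is quoted so the shell passes it through unexpanded); each line can then be submitted as a Slurm job or run locally with `xargs`. Passing `script="improve_llama_experiment.py"` to `build_commands` gives the variant needed for the GPT-J step.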

For Pythia results, follow the same process as for Backpacks (replacing the Backpack scripts and config paths with the Pythia ones), but once you run `report_test_pythia.py`, copy `initial.jsonl` and `results.jsonl` to `plotting/workshop-pythia` and run `plot_pythia_all.py` and `plot_pythia_all_hard_negs.py` to make the plots.

For MEMIT results:

- As done in `memit/notebooks/make_sweep.py`, for each relevant configuration of `model_name` and hyperparameters, run experiments via

  `python3 run_memit.py {model_name} --v_num_grad_steps 20 --clamp_norm_factor {clamp_norm_factor} --mom2_update_weight {mom2_update_weight} --kl_factor {kl_factor} --dataset_names {dataset_name} --subject_types true_subject,prefix_subject --log_dir {log_dir}`

- Identify the best val hyperparameter configs and create test scripts via `memit/notebooks/load_val_results.ipynb`.
- Run the test scripts and get results via `memit/notebooks/load_test_results.ipynb`, which generates `memit_test_results.json` and `memit_test_results.noedit.json`.
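The sweep step amounts to expanding a grid over the flags in the command above. This is a hypothetical illustration, not the contents of `memit/notebooks/make_sweep.py`: the grid values, the placeholder model name `model-a`, the dataset name, and the log-directory naming scheme are all made up for the example; only the flags themselves come from the command above.

```python
import itertools
import shlex

# Hypothetical sweep values for illustration only; the real grids are
# defined in memit/notebooks/make_sweep.py and are not reproduced here.
MODEL_NAMES = ["model-a"]
CLAMP_NORM_FACTORS = [0.75, 1.0]
MOM2_UPDATE_WEIGHTS = [1.0e4, 1.0e5]
KL_FACTORS = [0.0625]
DATASET_NAMES = ["country_capital"]


def memit_commands(log_root="memit_logs"):
    """Yield one shell-quoted run_memit.py command per point in the grid."""
    grid = itertools.product(
        MODEL_NAMES, CLAMP_NORM_FACTORS, MOM2_UPDATE_WEIGHTS, KL_FACTORS, DATASET_NAMES
    )
    for model_name, clamp, mom2, kl, dataset in grid:
        # Model names such as "org/model" would otherwise create nested directories.
        safe_model = model_name.replace("/", "_")
        # One log directory per configuration (this naming scheme is hypothetical).
        log_dir = f"{log_root}/{dataset}_{safe_model}_c{clamp}_m{mom2}_kl{kl}"
        argv = [
            "python3", "run_memit.py", model_name,
            "--v_num_grad_steps", "20",
            "--clamp_norm_factor", str(clamp),
            "--mom2_update_weight", str(mom2),
            "--kl_factor", str(kl),
            "--dataset_names", dataset,
            "--subject_types", "true_subject,prefix_subject",
            "--log_dir", log_dir,
        ]
        yield shlex.join(argv)


if __name__ == "__main__":
    for cmd in memit_commands():
        print(cmd)
```

Giving each configuration its own `--log_dir` keeps the val runs separable, which is what the later step of picking the best val configuration relies on.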

Run the GPT-J validation and test experiments the same way as for Pythia.

Once you've run the Backpack experiments and the GPT-J experiments, submit all the Backpack senses test configs, but with the `improve_llama_experiment.py` script instead of `ft_experiment.py`.

This will write results files; concatenate them with `cat testbackpackresults/*.intervention~ > plotting/workshop-gptj/gptj_intervene_results.jsonl`. Then go to `plotting/workshop-gptj` and run `plot_gptj_test.py` and `plot_gptj_test_hard_negs.py`.
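If you would rather not rely on shell globbing, the `cat` step can be done in Python. This is a minimal sketch; the function name `concat_files` is hypothetical, and like `cat` it assumes each `.intervention~` file already ends with a newline, since it adds no separators between files.

```python
import glob
import shutil
from pathlib import Path


def concat_files(pattern, out_path):
    """Concatenate every file matching `pattern` into `out_path`, like `cat pattern > out_path`.

    Files are concatenated in sorted order. Returns the number of files concatenated.
    """
    paths = sorted(glob.glob(pattern))
    # Create the destination directory if it does not exist yet.
    Path(out_path).parent.mkdir(parents=True, exist_ok=True)
    with open(out_path, "wb") as out:
        for path in paths:
            with open(path, "rb") as src:
                shutil.copyfileobj(src, out)
    return len(paths)
```

Usage: `concat_files("testbackpackresults/*.intervention~", "plotting/workshop-gptj/gptj_intervene_results.jsonl")`.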

If you want to run the existing intervention methods on a new dataset, set the `dataset_path` field under `training:` in the config to the path of a file in jsonlines format, where each line has the structure

`{"prefix": <text>, "suffix": <text>}`

where the prefix specifies some context and the suffix specifies the desired (or undesired) behavior in that context.
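A minimal sketch for producing and sanity-checking such a file with the standard library. The helper names are hypothetical, and the example assumes the suffix carries its own leading space (e.g. `" Paris"`); whether the repository expects that convention is an assumption worth checking against the data loaders in `utils.py`.

```python
import json


def write_canonical_examples(examples, path):
    """Write (prefix, suffix) pairs as jsonlines, one {"prefix": ..., "suffix": ...} object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for prefix, suffix in examples:
            f.write(json.dumps({"prefix": prefix, "suffix": suffix}) + "\n")


def load_canonical_examples(path):
    """Read a jsonlines file, checking that every line has string 'prefix' and 'suffix' fields."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for lineno, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            row = json.loads(line)
            if not isinstance(row, dict):
                raise ValueError(f"{path}:{lineno}: expected a JSON object")
            for key in ("prefix", "suffix"):
                if not isinstance(row.get(key), str):
                    raise ValueError(f"{path}:{lineno}: missing or non-string {key!r}")
            rows.append(row)
    return rows
```

For example, `write_canonical_examples([("The capital of France is", " Paris")], "my_data.jsonl")` writes a one-line file, and `load_canonical_examples` raises a `ValueError` naming the offending line if a row lacks either field.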

Next, set the `degredation_targeted_path` and `intervention_eval_path` fields under `validation:` in the config.

`intervention_eval_path` is the path to the generalization evaluation set (for example, mentions of countries' capitals in naturalistic text). `degredation_targeted_path` is the path to a dataset like the one at `intervention_eval_path`, except that the prefix and suffix of each example are joined into a single text field. This set tests whether the LM's predictions degrade substantially on text where the target senses appear (i.e., where we actually took gradients).
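The degradation-targeted file can be derived from an evaluation file by joining each prefix and suffix. This sketch assumes the joined string is stored under a `text` key and that the two fields are concatenated without an added separator; both are assumptions, so confirm the field name the repository's data loader expects. The function name `make_degradation_file` is hypothetical.

```python
import json


def make_degradation_file(eval_path, out_path, text_key="text"):
    """Join each eval example's prefix and suffix into one string stored under `text_key`.

    Assumes the suffix carries any separating whitespace itself (e.g. " Paris"),
    so no space is inserted. Returns the number of examples written.
    """
    count = 0
    with open(eval_path, encoding="utf-8") as src, open(out_path, "w", encoding="utf-8") as out:
        for line in src:
            if not line.strip():
                continue  # skip blank lines
            row = json.loads(line)
            out.write(json.dumps({text_key: row["prefix"] + row["suffix"]}) + "\n")
            count += 1
    return count
```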

With those fields set, you can run your new experiments.

The data for this work keeps the canonical examples in separate files from the out-of-distribution evaluation examples, and also separates validation files (i.e., a pair of train and evaluation files for method development) from test files (i.e., a pair of train and evaluation files for final reporting). In our experiments, all hyperparameters are chosen on the validation pairs, and final results are reported by porting those hyperparameters over to the test pairs. There are 6 datasets:

Country capitals (`data/country_capital`):

Validation

- Canonical examples: `data/country_capital/split/country_capital_fixed-val.jsonl`
- Evaluation examples: `data/country_capital/split/country_capital_clear_eval-val.jsonl`

Test

- Canonical examples: `data/country_capital/split/country_capital_fixed-test.jsonl`
- Evaluation examples: `data/country_capital/split/country_capital_clear_eval-test.jsonl`

Company CEOs (`data/company_ceo`):

Validation

- Canonical examples: `data/company_ceo/split/company_ceo_train-val.jsonl`
- Evaluation examples: `data/company_ceo/split/company_ceo_eval_clear-val.jsonl`

Test

- Canonical examples: `data/company_ceo/split/company_ceo_train-test.jsonl`
- Evaluation examples: `data/company_ceo/split/company_ceo_eval_clear-test.jsonl`

Pronoun gender bias (`data/pronoun_gender_bias`):

Validation

- Canonical examples: `data/pronoun_gender_bias/split/pronoun_gender_bias_train-val.jsonl`
- Evaluation examples: `data/pronoun_gender_bias/split/pronoun_gender_bias_eval-val.jsonl`

Test

- Canonical examples: `data/pronoun_gender_bias/split/pronoun_gender_bias_train-test.jsonl`
- Evaluation examples: `data/pronoun_gender_bias/split/pronoun_gender_bias_eval-test.jsonl`

Temporal (`data/temporal`):

Validation

- Canonical examples: `data/temporal/split/temporal_train-val.jsonl`
- Evaluation examples: `data/temporal/split/temporal_eval_clear-val.jsonl`

Test

- Canonical examples: `data/temporal/split/temporal_train-test.jsonl`
- Evaluation examples: `data/temporal/split/temporal_eval_clear-test.jsonl`

StereoSet (`data/stereoset`):

Validation

- Canonical examples: `data/stereoset/split/stereoset_train-val.jsonl`
- Evaluation examples: `data/stereoset/split/stereoset_eval_clear-val.jsonl`

Test

- Canonical examples: `data/stereoset/split/stereoset_train-test.jsonl`
- Evaluation examples: `data/stereoset/split/stereoset_eval_clear-test.jsonl`

Verb conjugation (`data/verb_conjugation`):

Validation

- Canonical examples: `data/verb_conjugation/split/verb_conjugation_train-val.jsonl`
- Evaluation examples: `data/verb_conjugation/split/verb_conjugation_eval-val.jsonl`

Test

- Canonical examples: `data/verb_conjugation/split/verb_conjugation_train-test.jsonl`
- Evaluation examples: `data/verb_conjugation/split/verb_conjugation_eval-test.jsonl`
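To check that a checkout contains every split file listed above, the paths can be collected in one table. This is a minimal sketch: the file names are copied from the list above, while the `SPLITS` table and the helpers `split_paths` and `missing_files` are hypothetical conveniences, not part of the repository.

```python
from pathlib import Path

# (canonical-examples file, evaluation file) templates per dataset, copied from
# the list above; "{split}" is filled with "val" or "test".
SPLITS = {
    "country_capital": ("country_capital_fixed-{split}.jsonl", "country_capital_clear_eval-{split}.jsonl"),
    "company_ceo": ("company_ceo_train-{split}.jsonl", "company_ceo_eval_clear-{split}.jsonl"),
    "pronoun_gender_bias": ("pronoun_gender_bias_train-{split}.jsonl", "pronoun_gender_bias_eval-{split}.jsonl"),
    "temporal": ("temporal_train-{split}.jsonl", "temporal_eval_clear-{split}.jsonl"),
    "stereoset": ("stereoset_train-{split}.jsonl", "stereoset_eval_clear-{split}.jsonl"),
    "verb_conjugation": ("verb_conjugation_train-{split}.jsonl", "verb_conjugation_eval-{split}.jsonl"),
}


def split_paths(dataset, split, root="data"):
    """Return (canonical_path, eval_path) for `split`, which must be "val" or "test"."""
    if split not in ("val", "test"):
        raise ValueError(f"unknown split: {split!r}")
    train_tmpl, eval_tmpl = SPLITS[dataset]
    base = Path(root) / dataset / "split"
    return base / train_tmpl.format(split=split), base / eval_tmpl.format(split=split)


def missing_files(root="data"):
    """List every expected split file (6 datasets x 2 splits x 2 files) missing under `root`."""
    return [
        path
        for dataset in SPLITS
        for split in ("val", "test")
        for path in split_paths(dataset, split, root)
        if not path.exists()
    ]


if __name__ == "__main__":
    missing = missing_files()
    print("all 24 split files found" if not missing else "\n".join(map(str, missing)))
```

Run from the repository root, it prints any missing files. `split_paths("company_ceo", "val")` returns the canonical-examples and evaluation paths for that pair, which would presumably go into `training.dataset_path` and `validation.intervention_eval_path` respectively.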