Hand Gesture of the Colombian sign language

The dataset was taken from Kaggle (link here) and contains images of hand gestures for numbers (0-5) and vowels (A, E, I, O, U). Based on the EDA, I chose a subset of gestures to recognize (1, 2, 3, 4, 5, A, O, U).

❗ Example images can be found at the bottom of the EDA.
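A minimal sketch of how the chosen subset might be selected, assuming each sample is an `(image_path, label)` pair with the label stored as a string. `SELECTED_GESTURES`, `CLASS_TO_IDX` and `filter_samples` are hypothetical names, not taken from the project code. The point is that the eight kept gestures are mapped to contiguous indices 0 to 7, which is what a classifier with eight outputs expects.

```python
# Hypothetical sketch: keep only the 8 chosen gestures and map them to
# contiguous class indices 0..7 (names and sample format are assumptions).
SELECTED_GESTURES = ["1", "2", "3", "4", "5", "A", "O", "U"]
CLASS_TO_IDX = {name: idx for idx, name in enumerate(SELECTED_GESTURES)}


def filter_samples(samples):
    """Drop samples whose label is not in SELECTED_GESTURES.

    `samples` is a list of (image_path, label) pairs; each kept path is
    paired with its integer class index.
    """
    return [
        (path, CLASS_TO_IDX[label])
        for path, label in samples
        if label in CLASS_TO_IDX
    ]


if __name__ == "__main__":
    samples = [("img_0.jpg", "0"), ("img_1.jpg", "A"), ("img_2.jpg", "E"), ("img_3.jpg", "5")]
    print(filter_samples(samples))  # [('img_1.jpg', 5), ('img_3.jpg', 4)]
```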

Images were resized to 512x256. During training, they were vertically flipped, had random noise added, and had their brightness and contrast increased.
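The augmentations above can be illustrated in plain Python. This is a sketch under assumptions, not the project's pipeline: the image is a single-channel list of rows with values in [0, 1], the flip is applied with probability 0.5, and the noise std (0.05) and the brightness/contrast factor range (1.0 to 1.3) are made-up values. All function names are hypothetical.

```python
import random


def vertical_flip(image):
    """Flip an image upside down by reversing the order of its rows."""
    return image[::-1]


def add_gaussian_noise(image, std=0.05, rng=random):
    """Add zero-mean Gaussian noise to every pixel and clamp the result to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, std))) for p in row] for row in image]


def adjust_brightness_contrast(image, brightness=1.2, contrast=1.2):
    """Scale pixels by `brightness`, then stretch them around their mean by `contrast`."""
    scaled = [[p * brightness for p in row] for row in image]
    flat = [p for row in scaled for p in row]
    mean = sum(flat) / len(flat)
    return [[min(1.0, max(0.0, mean + contrast * (p - mean))) for p in row] for row in scaled]


def augment(image, rng=random):
    """Hypothetical training-time augmentation: random flip, noise, higher brightness and contrast."""
    if rng.random() < 0.5:
        image = vertical_flip(image)
    image = add_gaussian_noise(image, std=0.05, rng=rng)
    # Factors >= 1.0 so brightness and contrast are only ever increased (assumed range).
    return adjust_brightness_contrast(
        image,
        brightness=rng.uniform(1.0, 1.3),
        contrast=rng.uniform(1.0, 1.3),
    )


if __name__ == "__main__":
    image = [[0.2, 0.4], [0.6, 0.8]]
    print(augment(image, rng=random.Random(0)))
```

Passing a seeded `random.Random` instance as `rng` makes the augmentation reproducible, which helps when debugging.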

More information about the models used and why I chose them can be found here.

Create the conda environment from the yml file:

`conda env create -f environment/environment.yml`

then activate it with:

`conda activate gesture_recognizer`

You can run the app for 3 tasks:

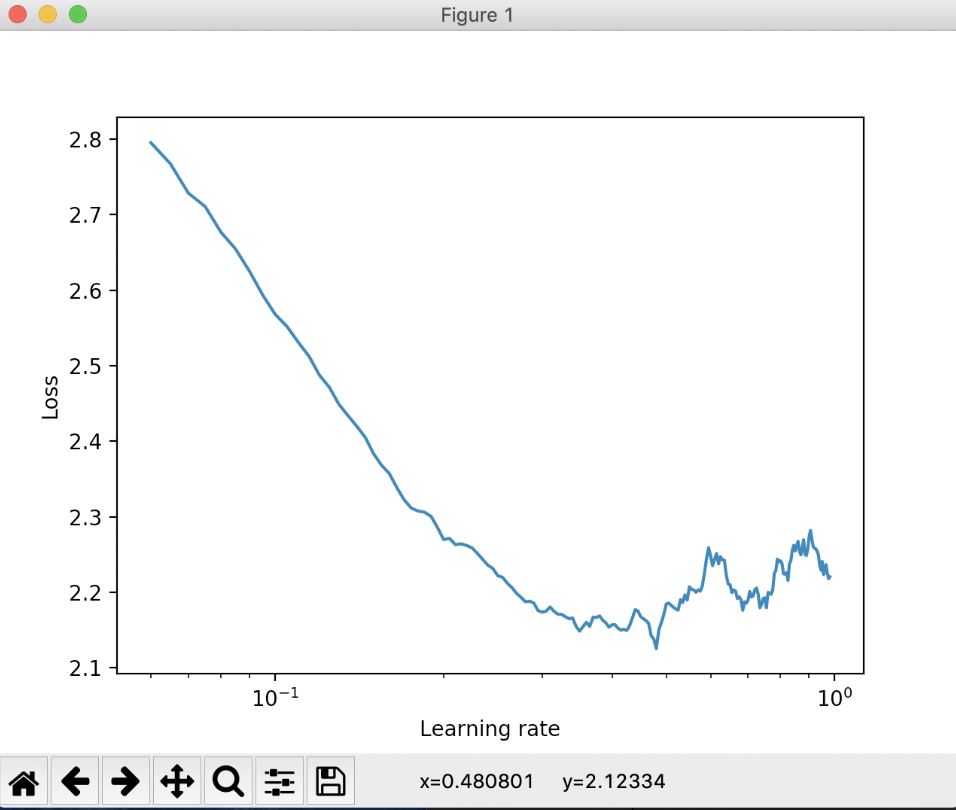

- `find_mean_std`: find the mean and std values that should be used to normalize the data; run: `python main.py find_mean_std`
- `find_lr`: find the optimal learning rate for a model (use it before training to set the learning rate correctly); run: `python main.py find_lr`
- `train`: train the model; run: `python main.py train`
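A hypothetical sketch of how a `main.py` entry point could dispatch these three tasks with `argparse`. The handlers are placeholders, and the project's real code and additional arguments are not reproduced here.

```python
import argparse

TASKS = ("find_mean_std", "find_lr", "train")


def build_parser():
    """Parser with a single positional `task` argument restricted to TASKS."""
    parser = argparse.ArgumentParser(description="Hand gesture recognizer")
    parser.add_argument("task", choices=TASKS, help="task to run")
    return parser


def run(task):
    """Placeholder dispatch; real handlers would compute statistics, run the LR finder or train."""
    handlers = {
        "find_mean_std": lambda: "computing mean/std",
        "find_lr": lambda: "running LR finder",
        "train": lambda: "training model",
    }
    return handlers[task]()


if __name__ == "__main__":
    # An explicit argument list keeps the sketch runnable without CLI input;
    # a real entry point would call parse_args() with no arguments.
    args = build_parser().parse_args(["find_lr"])
    print(run(args.task))  # running LR finder
```

Using `choices` means `argparse` rejects unknown task names and lists the valid ones in the `--help` output.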

❓ For help with the additional arguments you can provide, run `python main.py --help`.

- Overall results for `find_lr` and `train` tasks

LR FINDER

| Model name | Pretrained | Iterations | Min lr set in finder | Max lr set in finder | Found lr |
|---|---|---|---|---|---|
| MnasNet | Yes | 200 | 1e-7 | 1 | 4e-1 |
| MnasNet | No | 200 | 1e-7 | 1 | 2.5e-1 |
| SqueezeNet | Yes | 200 | 1e-7 | 1 | 3.3e-1 |
| MobileNetV2 | Yes | 200 | 1e-7 | 1 | 5e-1 |

TRAINING

| Model name | Pretrained | Epochs trained | Max validation accuracy | Min lr set in cyclic lr scheduler | Max lr set in cyclic lr scheduler |
|---|---|---|---|---|---|
| MnasNet | Yes | 50 | 61.89% | 4e-4 | 4e-2 |
| MnasNet | No | 30 | 13.73% | 2.5e-4 | 2.5e-2 |
| SqueezeNet | Yes | 100 | 70.08% | 3.5e-4 | 3.5e-3 |
| MobileNetV2 | Yes | 80 | 65.36% | 5e-4 | 5e-3 |

For MnasNet, I set the max lr of the cyclic lr scheduler to the lr found by the LR finder divided by 10, and the min lr to the found lr divided by 1000. For SqueezeNet, I used 3.5e-1 instead of the found 3.3e-1 because it gave better results, dividing it by 1000 for the min lr and by 100 for the max lr. The same rule was applied to MobileNetV2.
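The rule above can be written down directly. The sketch below derives the scheduler bounds from the LR finder result and then shows how the learning rate moves between them, assuming the cyclic scheduler runs in triangular mode (the default `mode` of PyTorch's `torch.optim.lr_scheduler.CyclicLR`). `step_size` (iterations per half cycle) is an assumed parameter, since the README does not state it, and the function names are hypothetical.

```python
import math


def cyclic_lr_bounds(found_lr, min_divisor, max_divisor):
    """Derive (min_lr, max_lr) for a cyclic scheduler from the LR finder result."""
    return found_lr / min_divisor, found_lr / max_divisor


def triangular_lr(iteration, base_lr, max_lr, step_size):
    """Triangular cyclical lr: rises linearly from base_lr to max_lr over
    step_size iterations, falls back over the next step_size, then repeats."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


if __name__ == "__main__":
    # MnasNet (pretrained): found lr 4e-1, min = lr / 1000, max = lr / 10
    print(cyclic_lr_bounds(4e-1, 1000, 10))
    # SqueezeNet (pretrained): 3.5e-1 used instead of 3.3e-1, min = lr / 1000, max = lr / 100
    base_lr, max_lr = cyclic_lr_bounds(3.5e-1, 1000, 100)
    for it in (0, 5, 10, 15, 20):
        print(it, triangular_lr(it, base_lr, max_lr, step_size=10))
```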

- Hand Gesture of the Colombian sign language dataset

Mean and std were obtained with the `find_mean_std` task on the Hand Gesture of the Colombian sign language dataset.

Mean: [0.7315, 0.6840, 0.6410]

Std: [0.4252, 0.4546, 0.4789]
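One common way to compute per-channel statistics like these is sketched below, assuming every image is a list of RGB pixels with values in [0, 1]. The README does not describe exactly how `find_mean_std` aggregates over images (for example, over all pixels at once versus averaging per-image statistics), so this is an illustration rather than the task's implementation.

```python
import math


def channel_mean_std(images):
    """Per-channel mean and population std over every pixel of every image.

    Each image is a list of pixels; each pixel is an (R, G, B) tuple of floats in [0, 1].
    """
    count = 0
    sums = [0.0, 0.0, 0.0]
    sq_sums = [0.0, 0.0, 0.0]
    for image in images:
        for pixel in image:
            count += 1
            for c, value in enumerate(pixel):
                sums[c] += value
                sq_sums[c] += value * value
    means = [s / count for s in sums]
    # Var = E[x^2] - E[x]^2; max() guards against tiny negative rounding errors.
    stds = [math.sqrt(max(0.0, sq / count - m * m)) for sq, m in zip(sq_sums, means)]
    return means, stds


if __name__ == "__main__":
    images = [
        [(0.9, 0.8, 0.7), (0.6, 0.5, 0.4)],
        [(1.0, 1.0, 1.0), (0.2, 0.3, 0.1)],
    ]
    print(channel_mean_std(images))
```

Accumulating sums and squared sums keeps memory constant, so the same approach works when images are streamed from disk one at a time.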

LR FINDER

| Model name | Pretrained | Iterations | Min lr set in finder | Max lr set in finder | Found lr |
|---|---|---|---|---|---|
| MnasNet | Yes | 200 | 1e-7 | 1 | 3.7e-1 |
| SqueezeNet | Yes | 200 | 1e-7 | 1 | 5e-1 |
| MobileNetV2 | Yes | 200 | 1e-7 | 1 | 3.5e-1 |

TRAINING

| Model name | Pretrained | Epochs trained | Max validation accuracy | Min lr set in cyclic lr scheduler | Max lr set in cyclic lr scheduler |
|---|---|---|---|---|---|
| MnasNet | Yes | 100 | 80.74% | 3.3e-3 | 3.5e-2 |
| SqueezeNet | Yes | 100 | 79.09% | 5e-3 | 5e-2 |
| MobileNetV2 | Yes | 100 | 70.29% | 3.5e-4 | 3.5e-3 |

- ImageNet dataset

Mean and std of the ImageNet dataset.

Mean: [0.485, 0.456, 0.406]

Std: [0.229, 0.224, 0.225]
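Either pair of statistics is applied the same way, channel by channel, as `(x - mean) / std`, which is the formula `torchvision.transforms.Normalize` uses. The sketch below works on a single pixel for clarity; the constant names are hypothetical.

```python
# Mean/std pairs listed in this README (constant names are hypothetical).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)
COLOMBIAN_SIGNS_MEAN = (0.7315, 0.6840, 0.6410)
COLOMBIAN_SIGNS_STD = (0.4252, 0.4546, 0.4789)


def normalize_pixel(pixel, mean, std):
    """Apply (x - mean) / std channel-wise to one RGB pixel with values in [0, 1]."""
    return tuple((x - m) / s for x, m, s in zip(pixel, mean, std))


if __name__ == "__main__":
    pixel = (0.7, 0.6, 0.5)
    print("ImageNet stats:", normalize_pixel(pixel, IMAGENET_MEAN, IMAGENET_STD))
    print("Dataset stats: ", normalize_pixel(pixel, COLOMBIAN_SIGNS_MEAN, COLOMBIAN_SIGNS_STD))
```

A pixel equal to the mean maps to 0 in every channel, and a pixel one std above the mean maps to 1.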

LR FINDER

| Model name | Pretrained | Iterations | Min lr set in finder | Max lr set in finder | Found lr |
|---|---|---|---|---|---|
| MnasNet | Yes | 200 | 1e-7 | 1 | 3.4e-1 |
| SqueezeNet | Yes | 200 | 1e-7 | 1 | 1 |
| MobileNetV2 | Yes | 200 | 1e-7 | 1 | 3.7e-1 |

TRAINING

| Model name | Pretrained | Epochs trained | Max validation accuracy | Min lr set in cyclic lr scheduler | Max lr set in cyclic lr scheduler |
|---|---|---|---|---|---|
| MnasNet | Yes | 100 | 83.81% | 3.2e-3 | 3.2e-2 |
| SqueezeNet | Yes | 100 | 81.56% | 1e-3 | 1e-2 |
| MobileNetV2 | Yes | 100 | 80.12% | 3.5e-4 | 3.5e-3 |

- LR finder

Plots obtained with LRFinder are located in the `results/NORMALIZATION/visualisations_lr_finder/MODEL_NAME/` directory.

Plots for pretrained and non-pretrained models are stored in separate subdirectories. Each contains 2 files: the LR finder plot, annotated with the lr value and loss at the lowest point, and a zoomed view of the area of interest.
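The LR finder sweep can be sketched as follows, assuming the learning rate grows exponentially from the min lr (1e-7) to the max lr (1) over the 200 iterations listed in the tables, and that the point marked on the plot is simply the lr with the lowest recorded loss. Many LR finder implementations also smooth the loss before picking a point; that step is omitted here, and the function names are hypothetical.

```python
def exponential_lr_schedule(min_lr=1e-7, max_lr=1.0, num_iter=200):
    """Learning rates growing geometrically from min_lr to max_lr over num_iter steps."""
    ratio = max_lr / min_lr
    return [min_lr * ratio ** (i / (num_iter - 1)) for i in range(num_iter)]


def lr_at_lowest_loss(lrs, losses):
    """Return the (lr, loss) pair at the lowest recorded loss."""
    best = min(range(len(losses)), key=losses.__getitem__)
    return lrs[best], losses[best]


if __name__ == "__main__":
    lrs = exponential_lr_schedule()
    print(lrs[0], lrs[100], lrs[-1])
    # In a real finder, one training batch is run at each lr and its loss recorded.
    print(lr_at_lowest_loss([1e-3, 1e-2, 1e-1], [2.1, 1.4, 3.0]))  # (0.01, 1.4)
```

An exponential sweep spends equal numbers of iterations per order of magnitude, which is why the LR finder plot is usually drawn with a log-scaled lr axis.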

Example (pretrained MnasNet; without normalization):

- Models

Trained models are saved in the `results/NORMALIZATION/model/MODEL_NAME/` directory. A model is saved only if it is better than the previously saved one.

Each model directory contains 2 subdirectories: one with saved models for the pretrained variant and one for the non-pretrained variant.

Each file is named after its validation accuracy.
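A hypothetical sketch of the saving rule described above: a checkpoint is written only when validation accuracy improves, and the accuracy becomes the filename. The two-decimal format and the `.pth` extension are assumptions, and a real training loop would call something like `torch.save(model.state_dict(), path)` instead of writing raw bytes.

```python
import os
import tempfile


class BestModelSaver:
    """Hypothetical helper: saves a checkpoint only when validation accuracy improves."""

    def __init__(self, directory):
        self.directory = directory
        self.best_accuracy = float("-inf")
        os.makedirs(directory, exist_ok=True)

    def maybe_save(self, accuracy, state_bytes):
        """Write state_bytes to '<accuracy>.pth' if accuracy beats the best so far.

        Returns the written path, or None when nothing was saved.
        """
        if accuracy <= self.best_accuracy:
            return None
        self.best_accuracy = accuracy
        path = os.path.join(self.directory, f"{accuracy:.2f}.pth")
        with open(path, "wb") as f:
            f.write(state_bytes)  # stand-in for torch.save(model.state_dict(), path)
        return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        saver = BestModelSaver(os.path.join(tmp, "MnasNet", "pretrained"))
        for acc in (55.1, 61.89, 60.0):
            print(acc, saver.maybe_save(acc, b"weights"))
        print(sorted(os.listdir(saver.directory)))  # ['55.10.pth', '61.89.pth']
```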

❗ Only the model with the highest score is uploaded to GitHub.

- Size

I have to take model size into account because the model should be small enough to run on mobile phones.

| Model name | Pretrained | Size (MB) |
|---|---|---|
| MnasNet | Yes | 13.9 |
| MnasNet | No | 13.9 |
| SqueezeNet | Yes | 3 |
| MobileNetV2 | Yes | 10.4 |
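To check size numbers like these, a saved file can be measured directly, or the size can be estimated from the parameter count (4 bytes per float32 weight). This sketch assumes 1 MB = 1024 * 1024 bytes; the README does not say which convention the table uses, and a checkpoint file also carries some overhead beyond the raw weights. The function names are hypothetical.

```python
import os
import tempfile


def file_size_mb(path):
    """Size of a file on disk in MB, using 1 MB = 1024 * 1024 bytes."""
    return os.path.getsize(path) / (1024 * 1024)


def estimated_size_mb(num_parameters, bytes_per_parameter=4):
    """Rough size of the raw weights: parameter count times bytes per parameter (4 for float32)."""
    return num_parameters * bytes_per_parameter / (1024 * 1024)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "model.pth")  # hypothetical checkpoint file
        with open(path, "wb") as f:
            f.write(b"\0" * (3 * 1024 * 1024))
        print(file_size_mb(path))  # 3.0
    print(estimated_size_mb(2_500_000))
```

Halving `bytes_per_parameter` to 2 gives a quick estimate of what float16 weights would save on a phone.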

- Tensorboard

Run `tensorboard --logdir=results/` and open http://localhost:6006 in your browser to inspect train/validation accuracy and loss across all epochs.