Matlab code to reproduce the results of the paper

Improving FISTA: Faster, Smarter and Greedier

Jingwei Liang, Tao Luo and Carola-Bibiane Schönlieb, 2018

Consider solving the problem below

$$
\min_{x\in \mathbb{R}^n} ~ \frac{1}{2} \|Ax - f\|^2 ,
$$

where

We set

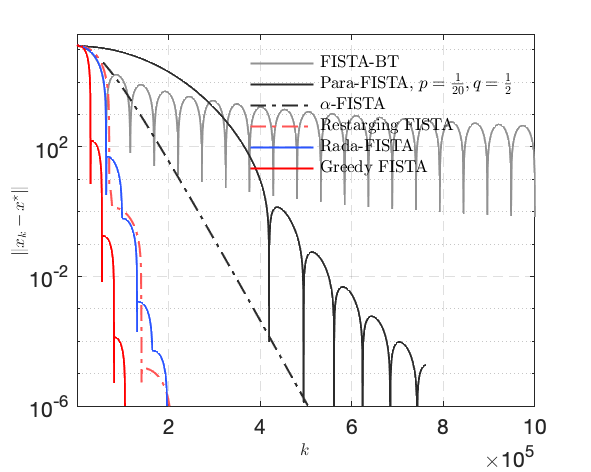

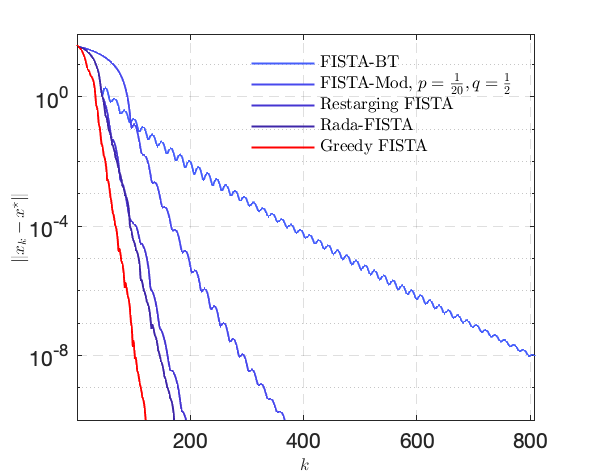

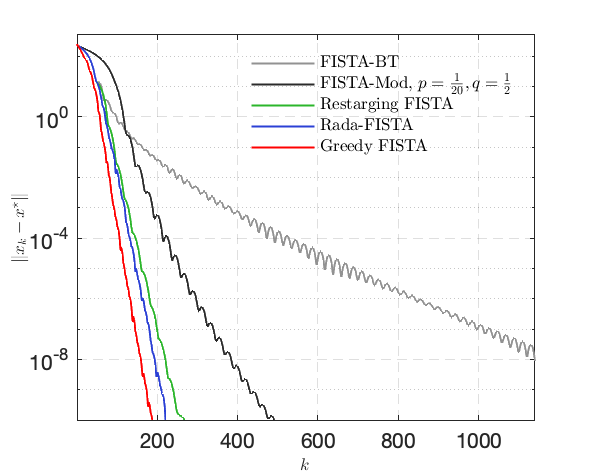

| Error | Objective function |
|---|---|

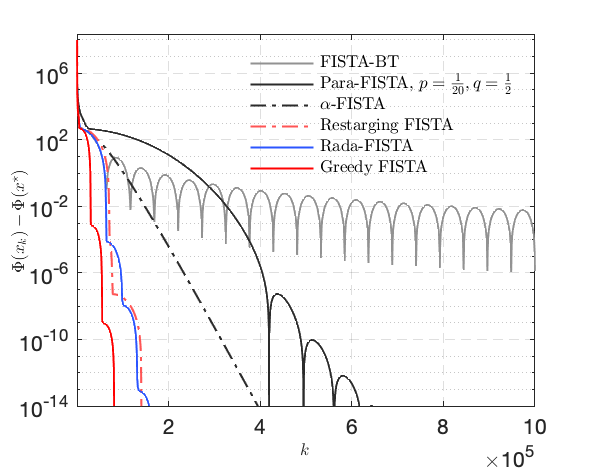
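The smooth term has gradient $A^T(Ax - f)$, which is Lipschitz with constant $L = \|A\|_2^2$. The following is a rough, dependency-free sketch (plain Python lists instead of the repository's Matlab) of the standard FISTA iteration of Beck and Teboulle for this problem, with $L$ estimated by power iteration. It is the baseline scheme, not the modified FISTA variants proposed in the paper, and the helper names (`matvec`, `rmatvec`, `lipschitz_estimate`, `fista_least_squares`) are hypothetical.

```python
import math
import random


def matvec(A, x):
    """Compute A x for a dense matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A, y):
    """Compute A^T y for a dense matrix stored as a list of rows."""
    out = [0.0] * len(A[0])
    for row, yi in zip(A, y):
        for j, a in enumerate(row):
            out[j] += a * yi
    return out


def lipschitz_estimate(A, iters=200, seed=0):
    """Estimate L = ||A||_2^2, the largest eigenvalue of A^T A, by power iteration.

    Assumes A is not the zero matrix.
    """
    rng = random.Random(seed)
    v = [rng.random() + 0.1 for _ in range(len(A[0]))]
    norm = math.sqrt(sum(vi * vi for vi in v))
    v = [vi / norm for vi in v]
    lam = 0.0
    for _ in range(iters):
        w = rmatvec(A, matvec(A, v))                # w = A^T A v
        lam = math.sqrt(sum(wi * wi for wi in w))   # ||A^T A v|| with ||v|| = 1
        v = [wi / lam for wi in w]
    return lam


def fista_least_squares(A, f, iters=500):
    """Standard FISTA for min_x 0.5 * ||A x - f||^2 with step size 1/L."""
    n = len(A[0])
    # Power iteration approaches ||A||^2 from below, so pad the estimate slightly.
    step = 1.0 / (1.01 * lipschitz_estimate(A))
    x_prev = [0.0] * n
    y = x_prev[:]
    t = 1.0
    for _ in range(iters):
        # Gradient step at the extrapolated point y: y - step * A^T (A y - f).
        residual = [ay - fi for ay, fi in zip(matvec(A, y), f)]
        grad = rmatvec(A, residual)
        x = [yi - step * gi for yi, gi in zip(y, grad)]
        # Momentum: t_{k+1} = (1 + sqrt(1 + 4 t_k^2)) / 2, weight (t_k - 1) / t_{k+1}.
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        beta = (t - 1.0) / t_next
        y = [xi + beta * (xi - xp) for xi, xp in zip(x, x_prev)]
        x_prev, t = x, t_next
    return x_prev
```

For example, with `A = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]` and `f = [2.0, 1.0, 2.0]` (so that `f = A [1, 1]`), the iterates approach the exact solution `[1.0, 1.0]`.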

Consider solving the problem below

$$
\min_{x\in \mathbb{R}^n} ~ \mu R(x) + \frac{1}{2} \|Ax - f\|^2 .
$$
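With a nonsmooth regularizer, each FISTA step becomes a gradient step on $\frac{1}{2}\|Ax - f\|^2$ followed by the proximal map of $\gamma\mu R$. As an illustration only, the sketch below assumes $R(x) = \|x\|_1$ (the LASSO case; the README keeps $R$ generic), whose proximal map is entrywise soft-thresholding. It is again the standard FISTA scheme rather than the paper's variants, and `soft_threshold` and `fista_l1` are hypothetical names; `L` must be supplied as an upper bound on $\|A\|_2^2$.

```python
import math


def soft_threshold(v, tau):
    """Proximal map of tau * ||.||_1: shrink each entry towards zero by tau."""
    return [math.copysign(max(abs(vi) - tau, 0.0), vi) for vi in v]


def fista_l1(A, f, mu, L, iters=1000):
    """Standard FISTA for min_x mu * ||x||_1 + 0.5 * ||A x - f||^2.

    A is a dense matrix stored as a list of rows; L >= ||A||_2^2 (assumed given).
    """
    m, n = len(A), len(A[0])
    step = 1.0 / L
    x_prev = [0.0] * n
    y = x_prev[:]
    t = 1.0
    for _ in range(iters):
        # Forward step on the smooth term: z = y - step * A^T (A y - f).
        r = [sum(A[i][j] * y[j] for j in range(n)) - f[i] for i in range(m)]
        g = [sum(A[i][j] * r[i] for i in range(m)) for j in range(n)]
        z = [yj - step * gj for yj, gj in zip(y, g)]
        # Backward (proximal) step on mu * ||x||_1.
        x = soft_threshold(z, step * mu)
        # FISTA momentum update.
        t_next = (1.0 + math.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = [xj + (t - 1.0) / t_next * (xj - xp) for xj, xp in zip(x, x_prev)]
        x_prev, t = x, t_next
    return x_prev
```

With `A` equal to the identity the problem decouples and the minimizer is simply `soft_threshold(f, mu)`, which gives a quick sanity check.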

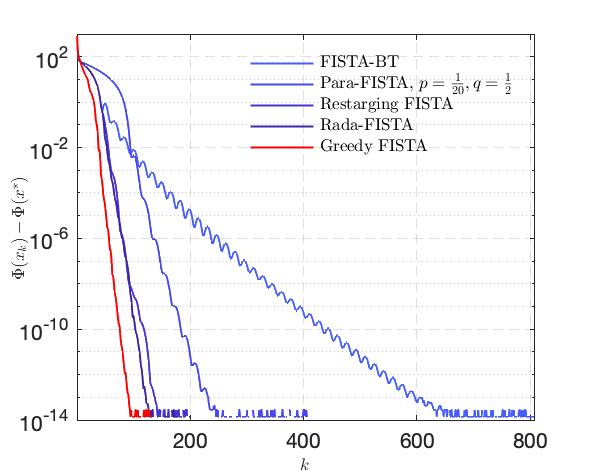

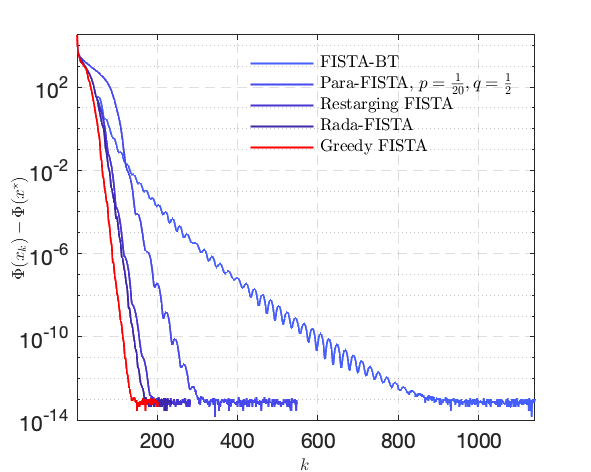

| Error | Objective function |
|---|---|

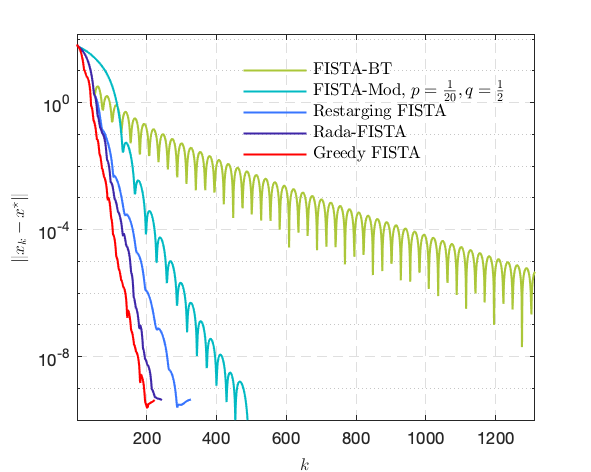

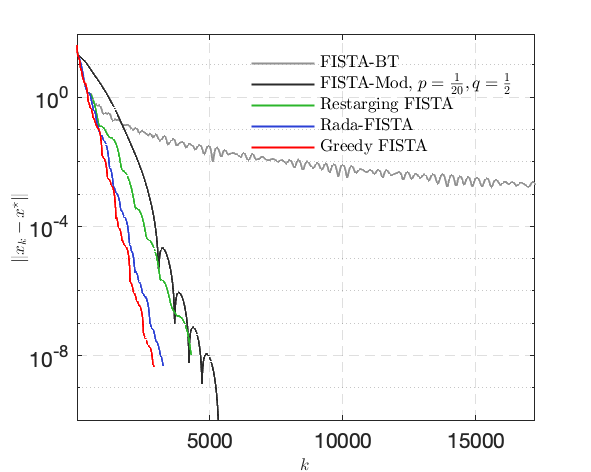

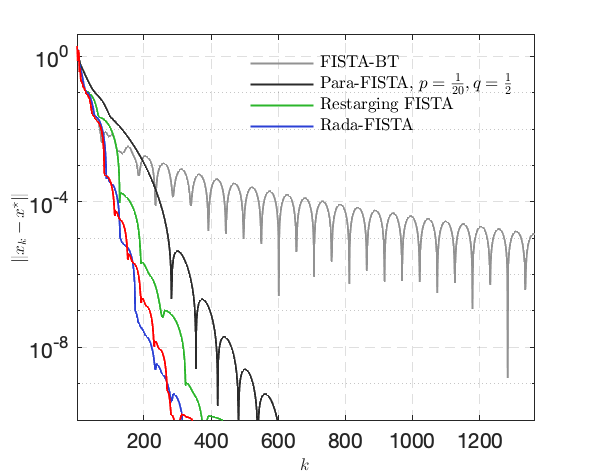

| Error | Objective function |
|---|---|

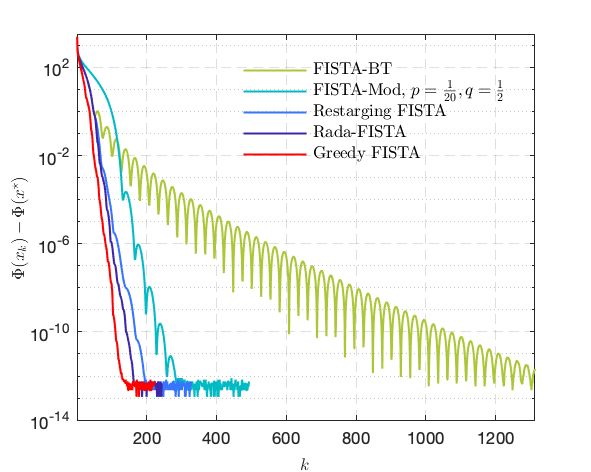

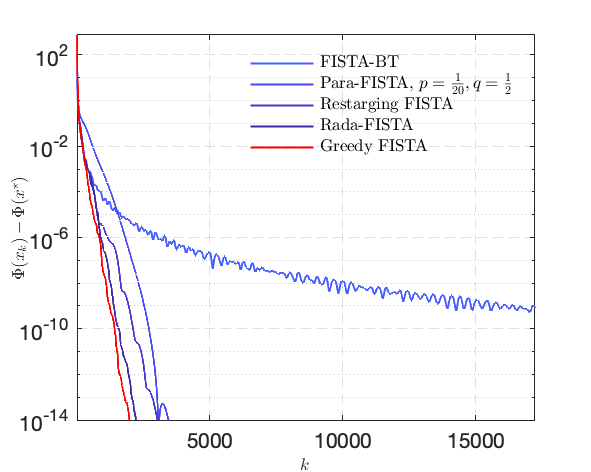

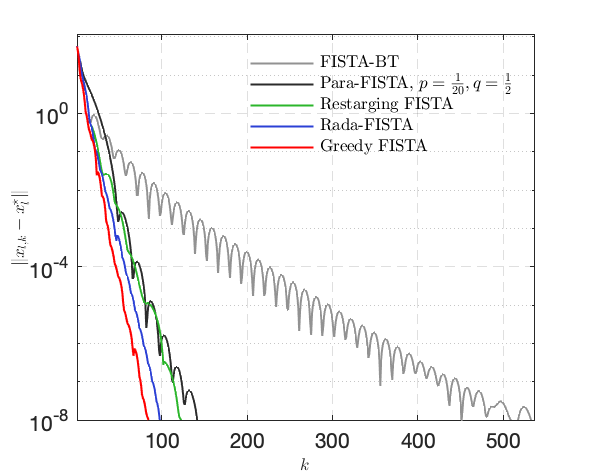

| Error | Objective function |
|---|---|

| Error | Objective function |
|---|---|

Consider the problem

$$
\min_{x \in \mathbb{R}^n } \mu \|x\|_{1} + \frac{1}{m} \sum_{i=1}^m \log\big( 1+e^{ -l_{i} h_{i}^T x } \big) .
$$
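For a FISTA-type method, the $\ell_1$ term is handled by soft-thresholding and only the smooth logistic term needs a gradient. Since the derivative of the sigmoid is at most $1/4$, that gradient is Lipschitz with constant at most $\|H\|_2^2/(4m)$, where $H$ stacks the rows $h_i^T$. The sketch below (hypothetical function name, labels assumed to be $l_i \in \{-1, +1\}$) evaluates the smooth term and its gradient in a numerically stable way.

```python
import math


def logistic_loss_and_grad(H, labels, x):
    """Smooth part of sparse logistic regression and its gradient.

    H is a list of feature rows h_i and labels holds l_i in {-1, +1} (assumed).
    Returns (F(x), grad F(x)) for F(x) = (1/m) * sum_i log(1 + exp(-l_i h_i^T x)).
    """
    m, n = len(H), len(x)
    value = 0.0
    grad = [0.0] * n
    for h, l in zip(H, labels):
        z = -l * sum(hj * xj for hj, xj in zip(h, x))
        # Stable evaluation: log(1 + e^z) = max(z, 0) + log1p(e^{-|z|}).
        value += max(z, 0.0) + math.log1p(math.exp(-abs(z)))
        # Stable sigmoid(z); d/dx log(1 + e^z) = sigmoid(z) * (-l * h).
        if z >= 0:
            s = 1.0 / (1.0 + math.exp(-z))
        else:
            s = math.exp(z) / (1.0 + math.exp(z))
        for j in range(n):
            grad[j] -= s * l * h[j]
    return value / m, [g / m for g in grad]
```

At `x = 0` every term equals $\log 2$, so the returned value is $\log 2$ regardless of the data, which is a convenient first check.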

| Error | Objective function |
|---|---|

Principal component pursuit (PCP) considers the following problem

$$
\min_{x_{l}, x_{s} \in \mathbb{R}^{m\times n}}~ \frac{1}{2}\|f-x_{l}-x_{s}\|^2 + \mu \|x_{s}\|_1 + \nu \|x_{l}\|_* .
$$
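One way to fit PCP into a FISTA-type scheme (our reading, not spelled out in this README) is to treat $F(x_l, x_s) = \frac{1}{2}\|f - x_l - x_s\|^2$ as the smooth part and the two norms as a separable nonsmooth part. The Hessian of $F$ with respect to $(x_l, x_s)$ is $\begin{pmatrix} I & I \\ I & I \end{pmatrix}$, whose largest eigenvalue is $2$, so the gradient is $2$-Lipschitz and a step size $\gamma \le 1/2$ is admissible. The proximal step then splits into entrywise soft-thresholding for $x_s$ and singular value thresholding for $x_l$:

$$
\begin{aligned}
\nabla_{x_l} F &= \nabla_{x_s} F = x_l + x_s - f, \\
\operatorname{prox}_{\gamma\mu\|\cdot\|_1}(z)_{ij} &= \operatorname{sign}(z_{ij}) \max\{|z_{ij}| - \gamma\mu,\ 0\}, \\
\operatorname{prox}_{\gamma\nu\|\cdot\|_*}(Z) &= U \operatorname{diag}\big(\max\{\sigma_i - \gamma\nu,\ 0\}\big) V^T, \quad \text{where } Z = U \operatorname{diag}(\sigma) V^T .
\end{aligned}
$$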

| Original frame | Foreground | Background | Performance comparison |
|---|---|---|---|

Copyright (c) 2018 Jingwei Liang