Azimuth provides a self-service portal for managing cloud resources, with a focus on simplifying the use of cloud for high-performance computing (HPC) and artificial intelligence (AI) use cases.

It is currently capable of targeting OpenStack clouds.

- Introduction

- Try Azimuth

- Timeline

- Architecture

- Deploying Azimuth

- Setting up a local development environment

Azimuth was originally developed for the JASMIN Cloud as a simplified version of the OpenStack Horizon dashboard, with the aim of reducing complexity for less technical users.

It has since grown to offer additional functionality with a continued focus on simplicity for scientific use cases, including the ability to provision complex platforms via a user-friendly interface. The platforms range from single-machine workstations with web-based remote console and desktop access to entire Slurm clusters and platforms such as JupyterHub that run on Kubernetes clusters.

Using the Zenith application proxy, services are exposed to users without consuming floating IPs or requiring SSH keys.

Stig Telfer (CTO) and Matt Pryor (Senior Tech Lead and Azimuth project lead) from StackHPC presented Azimuth at the OpenInfra Summit in Berlin in 2022.

Key features of Azimuth include:

- Supports multiple Keystone authentication methods simultaneously:

- Username and password, e.g. for LDAP integration.

- Keystone federation for integration with existing OpenID Connect or SAML 2.0 identity providers.

- Application credentials, allowing the distribution of easily revocable credentials, e.g. for training, or for integrating with clouds that use federation where implementing the required trust is not possible.

- On-demand Platforms

- Unified interface for managing Kubernetes and CaaS platforms.

- Kubernetes-as-a-Service and Kubernetes-based platforms

- Operators provide a curated set of templates defining available Kubernetes versions, networking configurations, custom addons etc.

- Uses Cluster API to provision Kubernetes clusters.

- Supports Kubernetes version upgrades with minimal downtime using rolling node replacement.

- Supports auto-healing clusters that automatically identify and replace unhealthy nodes.

- Supports multiple node groups, including auto-scaling node groups.

- Supports clusters that can utilise GPUs and accelerated networking (e.g. SR-IOV).

- Installs and configures addons for monitoring + logging, system dashboards and ingress.

- Kubernetes-based platforms, such as JupyterHub, as first-class citizens in the platform catalog.

- Cluster-as-a-Service (CaaS)

- Operators provide a curated catalog of appliances.

- Appliances are Ansible playbooks that provision and configure infrastructure.

- Ansible calls out to Terraform to provision infrastructure.

- Uses AWX, the open-source version of Ansible Tower, to manage Ansible playbook execution and Consul to store Terraform state.

- Application proxy using Zenith:

- Zenith uses SSH tunnels to expose services running behind NAT or a firewall to the internet using operator-controlled, random domains.

- Exposed services do not need to be directly accessible to the internet.

- Exposed services do not consume a floating IP.

- Zenith supports an auth callout for proxied services, which Azimuth uses to secure proxied services.

- Used by Azimuth to provide access to platforms, e.g.:

- Web-based console / desktop access using Apache Guacamole

- Monitoring and system dashboards

- Platform-specific interfaces such as Jupyter Notebooks and Open OnDemand

- Simplified interface for managing basic OpenStack resources:

- Automatic detection of networking, with auto-provisioning of networks and routers if required.

- Create, update and delete machines with automatic network detection.

- Create, delete and attach volumes.

- Allocate, attach and detach floating IPs.

- Configure instance-specific security group rules.
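
To illustrate the Keystone authentication methods listed above, the following Python sketch builds the request bodies that the Keystone v3 API expects for the username/password and application credential methods. It is not taken from the Azimuth codebase: the function names and default values are hypothetical, and federation is omitted because its flow depends on the identity provider in use.

```python
from typing import Any


def password_auth(username: str, password: str, user_domain: str = "Default") -> dict[str, Any]:
    """Build a Keystone v3 identity payload for the 'password' method.

    The function name and the default domain are illustrative assumptions.
    """
    return {
        "auth": {
            "identity": {
                "methods": ["password"],
                "password": {
                    "user": {
                        "name": username,
                        "domain": {"name": user_domain},
                        "password": password,
                    }
                },
            }
        }
    }


def application_credential_auth(credential_id: str, secret: str) -> dict[str, Any]:
    """Build a Keystone v3 identity payload for the 'application_credential' method.

    No explicit scope is given: an application credential is already tied
    to the project it was created in.
    """
    return {
        "auth": {
            "identity": {
                "methods": ["application_credential"],
                "application_credential": {"id": credential_id, "secret": secret},
            }
        }
    }
```

Either payload would be sent as the JSON body of a `POST` to Keystone's `/v3/auth/tokens` endpoint. Revoking an application credential invalidates it without touching the owning user's password, which is what makes this method convenient for short-lived access such as training courses.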

If you have access to a project on an OpenStack cloud, you can try Azimuth!

A demo instance of Azimuth can be deployed on an OpenStack cloud by following these simple instructions. All that is required is an account on an OpenStack cloud and a host that is capable of running Ansible. Admin privileges on the target cloud are not normally required.

This section shows a timeline of the significant events in the development of Azimuth:

- Autumn 2015: Development begins on the JASMIN Cloud Portal, targeting JASMIN's VMware cloud.

- Spring 2016: JASMIN Cloud Portal goes into production.

- Early 2017: JASMIN Cloud plans to move to OpenStack; cloud portal v2 development begins.

- Summer 2017: JASMIN's OpenStack cloud goes into production, with the JASMIN Cloud Portal v2.

- Spring 2019: Work begins on JASMIN Cluster-as-a-Service (CaaS) with StackHPC.

- Initial work presented at UKRI Cloud Workshop.

- Summer 2019: JASMIN CaaS beta roll out.

- Spring 2020: JASMIN CaaS in use by customers, e.g. the ESA Climate Change Initiative Knowledge Exchange project.

- Production system presented at UKRI Cloud Workshop.

- Summer 2020: Production rollout of JASMIN CaaS.

- Spring 2021: StackHPC fork JASMIN Cloud Portal to develop it for IRIS.

- Summer 2021: Zenith application proxy developed and used to provide web consoles in Azimuth.

- November 2021: StackHPC fork detached and rebranded to Azimuth.

- December 2021: StackHPC Slurm appliance integrated into CaaS.

- January 2022: Native Kubernetes support added using Cluster API (previously supported by JASMIN as a CaaS appliance).

- February 2022: Support for exposing services in Kubernetes using Zenith.

- March 2022: Support for exposing services in CaaS appliances using Zenith.

- June 2022: Unified platforms interface for Kubernetes and CaaS.

- October 2022: Support for Kubernetes platforms in the unified platforms interface.

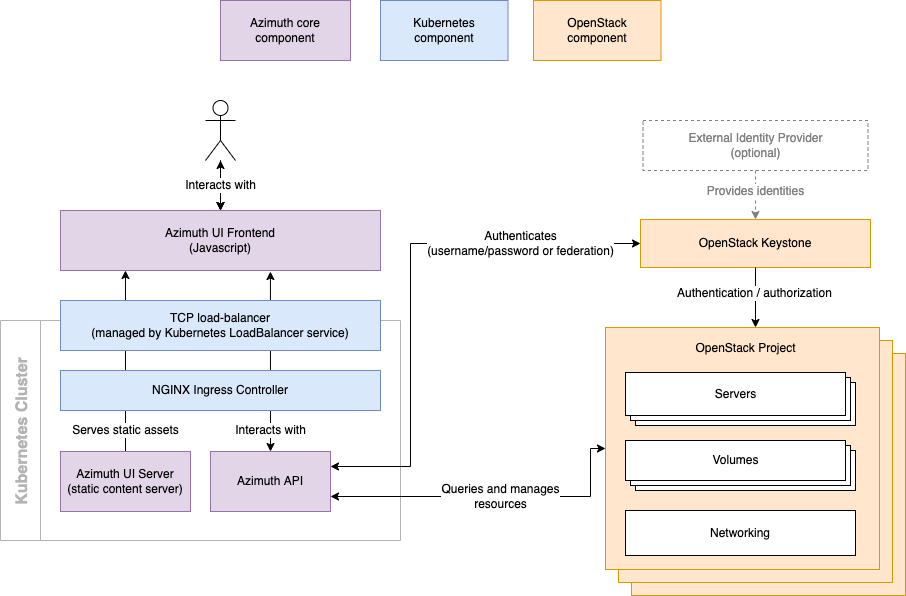

Azimuth consists of a Python backend providing a REST API (distinct from the OpenStack API) and a JavaScript frontend written in React.

At its core, Azimuth is just an OpenStack client. When a user authenticates with Azimuth, it negotiates with Keystone (using either username/password or federation) to obtain a token, which is stored in a cookie in the user's browser. Azimuth then uses this token to talk to the OpenStack API on behalf of the user when the user submits requests to the Azimuth API via the Azimuth UI.
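
The token exchange described above can be sketched with the Python standard library. This is a minimal illustration of the Keystone v3 token API, not Azimuth's actual implementation: the helper names and any URLs passed to them are assumptions, and the cookie handling is left out.

```python
import json
import urllib.request
from typing import Any, Mapping


def build_token_request(keystone_url: str, auth_payload: dict[str, Any]) -> urllib.request.Request:
    """Build the POST request that asks Keystone to issue a token."""
    return urllib.request.Request(
        keystone_url.rstrip("/") + "/v3/auth/tokens",
        data=json.dumps(auth_payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_token(response_headers: Mapping[str, str]) -> str:
    """Keystone returns the issued token ID in the X-Subject-Token response header."""
    return response_headers["X-Subject-Token"]


def build_api_request(url: str, token: str) -> urllib.request.Request:
    """Build a GET request to an OpenStack service, authenticated with the user's token."""
    return urllib.request.Request(url, headers={"X-Auth-Token": token})
```

Against a live cloud, `urllib.request.urlopen(build_token_request(...))` would return a response whose `headers` can be passed to `extract_token`. Every later call to an OpenStack service then carries the token in the `X-Auth-Token` header, so the service applies the user's own permissions rather than those of a privileged service account.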

When the Zenith application proxy and Cluster-as-a-Service (CaaS) subsystems are enabled, this picture becomes more complicated; see Azimuth Architecture for more details.

Although Azimuth itself is a simple Python + React application that is deployed onto a Kubernetes cluster using Helm, a fully functional Azimuth deployment is much more complex and has many dependencies:

- A Kubernetes cluster.

- Persistent storage for Kubernetes configured as a Storage Class.

- The NGINX Ingress Controller for exposing services in Kubernetes.

- AWX for executing Cluster-as-a-Service jobs.

- Cluster API operators + custom Kubernetes operator for Kubernetes support.

- Zenith application proxy with authentication callout wired into Azimuth.

- Consul for Zenith service discovery and Terraform state for CaaS.

To manage this complexity, we use Ansible to deploy Azimuth and all of its dependencies. See the Azimuth Deployment Documentation for more details.