xFormers is:

- Customizable building blocks: Independent/customizable building blocks that can be used without boilerplate code. The components are domain-agnostic and xFormers is used by researchers in vision, NLP and more.

- Research first: xFormers contains bleeding-edge components that are not yet available in mainstream libraries like PyTorch.

- Built with efficiency in mind: Because speed of iteration matters, components are as fast and memory-efficient as possible. xFormers contains its own CUDA kernels, but dispatches to other libraries when relevant.

- (RECOMMENDED, linux) Install latest stable with conda: Requires PyTorch 1.12.1, 1.13.1 or 2.0.0 installed with conda

conda install xformers -c xformers

- (RECOMMENDED, linux & win) Install latest stable with pip: Requires PyTorch 2.0.0

pip install -U xformers

- Development binaries:

# Use either conda or pip, same requirements as for the stable version above

conda install xformers -c xformers/label/dev

pip install --pre -U xformers

- Install from source: if you want to use xFormers with another version of PyTorch, for instance (including nightly releases)

# (Optional) Makes the build much faster

pip install ninja

# Set TORCH_CUDA_ARCH_LIST if running and building on different GPU types

pip install -v -U git+https://github.com/facebookresearch/xformers.git@main#egg=xformers

# (this can take dozens of minutes)

Memory-efficient MHA

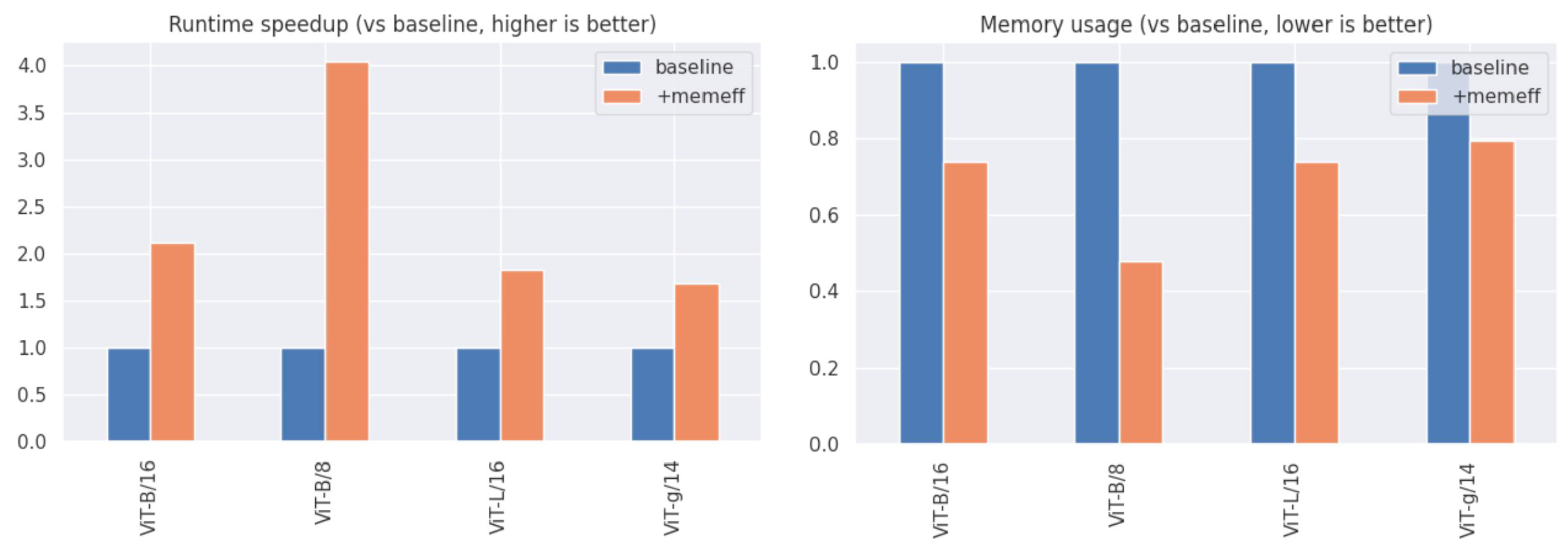

Setup: A100 on f16, measured total time for a forward+backward pass

Note that this is exact attention, not an approximation, and it is obtained simply by calling xformers.ops.memory_efficient_attention.
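To illustrate why a memory-efficient attention can still be exact, here is a minimal pure-Python sketch. It is not the xFormers kernel and says nothing about how xformers.ops.memory_efficient_attention is implemented; it only shows one well-known way (a running softmax over chunks of keys) to avoid materializing the full score row while producing the same result. The names naive_attention and chunked_attention are made up for this example.

```python
import math


def naive_attention(q, keys, values):
    """Reference for one query vector: softmax(q.k / sqrt(d)) applied to the values."""
    d = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim_v = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total for j in range(dim_v)]


def chunked_attention(q, keys, values, chunk_size=2):
    """Same computation, visiting keys/values chunk by chunk.

    Only a running maximum, a running normalizer and a running weighted sum
    are kept, so the full row of scores is never stored at once.
    """
    d = len(q)
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), chunk_size):
        chunk_k = keys[start:start + chunk_size]
        chunk_v = values[start:start + chunk_size]
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in chunk_k]
        new_max = max(running_max, max(scores))
        # Rescale everything accumulated so far to the new reference maximum
        correction = math.exp(running_max - new_max)
        running_sum *= correction
        acc = [a * correction for a in acc]
        for s, v in zip(scores, chunk_v):
            w = math.exp(s - new_max)
            running_sum += w
            acc = [a + w * x for a, x in zip(acc, v)]
        running_max = new_max
    return [a / running_sum for a in acc]


# Toy data (arbitrary values, for illustration only)
q = [0.1, -0.4, 0.7]
keys = [[0.2, 0.1, -0.3], [1.0, 0.5, 0.0], [-0.7, 0.9, 0.4], [0.3, -0.2, 0.8], [0.0, 0.6, -0.5]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 2.0], [3.0, -1.0]]

reference = naive_attention(q, keys, values)
chunked = chunked_attention(q, keys, values, chunk_size=2)
# The two results agree up to floating-point rounding
print(max(abs(a - b) for a, b in zip(reference, chunked)))
```

The chunked version trades a few extra multiplications for a memory footprint that does not grow with the number of keys, which is the general idea behind exact memory-efficient attention.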

More benchmarks

xFormers provides many components, and more benchmarks are available in BENCHMARKS.md.

This command will provide information on an xFormers installation, and what kernels are built/available:

python -m xformers.info

Let's start from a classical overview of the Transformer architecture (illustration from Lin et al., "A Survey of Transformers")

You'll find the key repository boundaries in this illustration: a Transformer is generally made of a collection of attention mechanisms, embeddings to encode some positional information, feed-forward blocks and a residual path (typically referred to as pre- or post- layer norm). These boundaries do not work for all models, but we found in practice that, given some accommodations, they capture most of the state of the art.

Models are thus not implemented in monolithic files, which are typically complicated to handle and modify. Most of the concepts present in the above illustration correspond to an abstraction level, and when variants are present for a given sub-block it should always be possible to select any of them. You can focus on a given encapsulation level and modify it as needed.
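The idea of swappable sub-blocks behind a residual path can be sketched in a few lines. This is a toy illustration in plain Python, not xFormers code: layer_norm, residual and make_block are hypothetical names, the "attention" and "feedforward" callables are trivial stand-ins, and the layer norm has no learnable parameters.

```python
import math


def layer_norm(x, eps=1e-5):
    """Normalize a vector to zero mean and (approximately) unit variance."""
    mean = sum(x) / len(x)
    var = sum((xi - mean) ** 2 for xi in x) / len(x)
    return [(xi - mean) / math.sqrt(var + eps) for xi in x]


def residual(sublayer, style="pre"):
    """Wrap any sub-block in a residual path, with pre- or post- layer norm."""
    if style == "pre":
        # x + sublayer(norm(x))
        return lambda x: [xi + yi for xi, yi in zip(x, sublayer(layer_norm(x)))]
    if style == "post":
        # norm(x + sublayer(x))
        return lambda x: layer_norm([xi + yi for xi, yi in zip(x, sublayer(x))])
    raise ValueError(f"unknown residual style: {style}")


def make_block(attention, feedforward, style="pre"):
    """Compose one block from two interchangeable parts."""
    attn = residual(attention, style)
    ff = residual(feedforward, style)
    return lambda x: ff(attn(x))


# Stand-ins for real parts; any callable with the same shape contract would do
toy_attention = lambda x: [0.5 * xi for xi in x]
toy_feedforward = lambda x: [max(xi, 0.0) for xi in x]

x = [1.0, -2.0, 3.0, 0.5]
pre_out = make_block(toy_attention, toy_feedforward, style="pre")(x)
post_out = make_block(toy_attention, toy_feedforward, style="post")(x)
print(pre_out)
print(post_out)
```

Because each level only depends on the call signature of the level below, replacing toy_attention with another variant does not require touching the residual wrapper or the block.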

├── ops                         # Functional operators
│   └ ...
├── components                  # Parts zoo, any of which can be used directly
│   ├── attention
│   │   └ ...                   # all the supported attentions
│   ├── feedforward             #
│   │   └ ...                   # all the supported feedforwards
│   ├── positional_embedding    #
│   │   └ ...                   # all the supported positional embeddings
│   ├── activations.py          #
│   └── multi_head_dispatch.py  # (optional) multihead wrap
│
├── benchmarks
│   └ ...                       # A lot of benchmarks that you can use to test some parts
└── triton
    └ ...                       # (optional) all the triton parts, requires triton + CUDA gpu

Attention mechanisms

-

- whenever a sparse enough mask is passed

-

- courtesy of Triton

- Local. Notably used in (and many others)

-

- See BigBird, Longformer, ...

-

- See BigBird, Longformer, ...

- ... to add a new one, see Contribution.md

Initializations

This is completely optional, and will only occur when generating full models through xFormers, not when picking parts individually.

There are basically two initialization mechanisms exposed, but the user is free to initialize weights as they see fit after the fact.

- Parts can expose an init_weights() method, which defines sane defaults

- xFormers supports specific init schemes, which can take precedence over init_weights()

If the second code path is used (constructing the model through the model factory), we check that all the weights have been initialized, and possibly error out if that is not the case (if you set xformers.factory.weight_init.__assert_if_not_initialized = True).
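The precedence rule and the optional check described above can be pictured with a small, self-contained sketch. Everything in it is hypothetical and written for illustration only: Linear, Bare, small_init and initialize are not xFormers classes or functions, and the real factory may organize this differently.

```python
class Linear:
    """Hypothetical part that ships its own default initialization."""

    def __init__(self, size):
        self.weights = [None] * size

    def init_weights(self):
        self.weights = [0.02] * len(self.weights)


class Bare:
    """Hypothetical part that exposes no init_weights() method."""

    def __init__(self, size):
        self.weights = [None] * size


def small_init(part):
    """Hypothetical scheme-specific initializer."""
    part.weights = [0.001] * len(part.weights)


def initialize(parts, scheme=None, assert_if_not_initialized=False):
    """Apply a scheme-specific init when one exists, else the part's own default."""
    scheme = scheme or {}
    for part in parts:
        init_fn = scheme.get(type(part))
        if init_fn is not None:
            init_fn(part)  # the scheme takes precedence
        elif hasattr(part, "init_weights"):
            part.init_weights()  # fall back to the part's sane default
        if assert_if_not_initialized and any(w is None for w in part.weights):
            raise RuntimeError(f"{type(part).__name__} was left uninitialized")
    return parts


default_part = initialize([Linear(2)])[0]
scheme_part = initialize([Linear(2)], scheme={Linear: small_init})[0]
print(default_part.weights, scheme_part.weights)
```

With assert_if_not_initialized=True, passing a Bare part and no matching scheme raises an error instead of silently leaving its weights unset.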

Supported initialization schemes are:

One way to specify the init scheme is to set the config.weight_init field to the matching enum value.

This could easily be extended, feel free to submit a PR!

- Many attention mechanisms, interchangeable

- Optimized building blocks, beyond PyTorch primitives
  - Memory-efficient exact attention - up to 10x faster
  - sparse attention
  - block-sparse attention
  - fused softmax
  - fused linear layer
  - fused layer norm
  - fused dropout(activation(x+bias))
  - fused SwiGLU

- Benchmarking and testing tools
  - micro benchmarks
  - transformer block benchmark
  - LRA, with SLURM support

- Programmatic and sweep-friendly layer and model construction
  - Compatible with hierarchical Transformers, like Swin or Metaformer

- Hackable
  - Not using monolithic CUDA kernels, composable building blocks
  - Using Triton for some optimized parts, explicit, pythonic and user-accessible
  - Native support for SquaredReLU (on top of ReLU, LeakyReLU, GeLU, ..), extensible activations
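As a reading aid for the "fused dropout(activation(x+bias))" entry, here is an unfused reference of what such an operation computes, using SquaredReLU as the activation. This is a plain-Python sketch under stated assumptions, not the xFormers implementation: the function names are hypothetical, dropout is the usual inverted dropout (kept values scaled by 1 / (1 - p)), and SquaredReLU is taken to be relu(x) squared.

```python
import random


def squared_relu(x):
    """SquaredReLU, assumed here to be relu(x) ** 2."""
    return max(x, 0.0) ** 2


def bias_activation_dropout(x, bias, activation, p, rng):
    """Unfused reference for dropout(activation(x + bias)) on a vector."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    scale = 1.0 / (1.0 - p)
    out = []
    for xi, bi in zip(x, bias):
        y = activation(xi + bi)  # bias add, then activation
        dropped = rng.random() < p  # one random draw per element
        out.append(0.0 if dropped else y * scale)
    return out


x = [-1.0, 0.5, 2.0]
bias = [0.5, 0.5, -1.0]
no_dropout = bias_activation_dropout(x, bias, squared_relu, p=0.0, rng=random.Random(0))
print(no_dropout)
```

A fused kernel would produce the same values in a single pass over the data, instead of writing the intermediate results of the bias add and the activation back to memory.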

- NVCC and the current CUDA runtime match. Depending on your setup, you may be able to change the CUDA runtime with module unload cuda; module load cuda/xx.x, possibly also nvcc

- the version of GCC that you're using matches the current NVCC capabilities

- the TORCH_CUDA_ARCH_LIST env variable is set to the architectures that you want to support. A suggested setup (slow to build but comprehensive) is export TORCH_CUDA_ARCH_LIST="6.0;6.1;6.2;7.0;7.2;7.5;8.0;8.6"

- If the build from source OOMs, it's possible to reduce the parallelism of ninja with MAX_JOBS (e.g. MAX_JOBS=2)

- If you encounter UnsatisfiableError when installing with conda, make sure you have PyTorch installed in your conda environment, and that your setup (PyTorch version, CUDA version, Python version, OS) matches an existing binary for xFormers

xFormers has a BSD-style license, as found in the LICENSE file.

If you use xFormers in your publication, please cite it by using the following BibTeX entry.

@Misc{xFormers2022,

author = {Benjamin Lefaudeux and Francisco Massa and Diana Liskovich and Wenhan Xiong and Vittorio Caggiano and Sean Naren and Min Xu and Jieru Hu and Marta Tintore and Susan Zhang and Patrick Labatut and Daniel Haziza},

title = {xFormers: A modular and hackable Transformer modelling library},

howpublished = {\url{https://github.com/facebookresearch/xformers}},

year = {2022}

}

The following repositories are used in xFormers, either in close to original form or as an inspiration: