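This is the official PyTorch implementation of the CVPR 2023 paper "GeoVLN: Learning Geometry-Enhanced Visual Representation with Slot Attention for Vision-and-Language Navigation".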

Install the Matterport3D Simulator (the latest version).

Install other dependencies.

pip install -r requirements.txt

Download the checkpoints and the precomputed RGB, depth, and normal features from here. Download the remaining data (annotations, connectivity maps, and pretrained models) by following the instructions of Recurrent-VLN-BERT and HAMT.

The RGB, depth, and normal features are already included in the downloaded data. If you want to re-extract them yourself, follow the instructions below.

- Download the RGB images from here.

- Estimate the depth maps and normal maps using Omnidata (https://github.com/EPFL-VILAB/omnidata/blob/main/omnidata_tools/torch/demo.py).

- Extract the RGB, depth, and normal features.

cd Recurrent-VLN-BERT

python img_features/precompute_img_features.py

cd VLN-HAMT

python preprocess/precompute_features_vit.py
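After re-extracting features, it can be worth checking that the RGB, depth, and normal features cover the same set of views before training. The sketch below is not part of this repository: the function name find_missing_views and the (scan_id, viewpoint_id) key format are assumptions made for illustration, and loading the keys from the actual feature files is left to the reader.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical key format: one entry per panoramic view.
ViewKey = Tuple[str, str]  # (scan_id, viewpoint_id)


def find_missing_views(
    features: Dict[str, Iterable[ViewKey]]
) -> Dict[str, Set[ViewKey]]:
    """For each modality, return the views that exist in at least one
    other modality but are missing from this one."""
    key_sets = {name: set(keys) for name, keys in features.items()}
    # Union of all views seen in any modality.
    all_views: Set[ViewKey] = set().union(*key_sets.values())
    return {name: all_views - keys for name, keys in key_sets.items()}


if __name__ == "__main__":
    # Toy keys standing in for the contents of the extracted feature files.
    example = {
        "rgb": [("scan_a", "vp_1"), ("scan_a", "vp_2")],
        "depth": [("scan_a", "vp_1")],
        "normal": [("scan_a", "vp_1"), ("scan_a", "vp_2")],
    }
    for modality, missing in find_missing_views(example).items():
        print(modality, "missing:", sorted(missing))
```

An empty set for every modality means the three feature sets are aligned.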

cd Recurrent-VLN-BERT

bash run/train_geo.bash

The trained model will be saved in snap.
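To locate a freshly trained checkpoint for evaluation, a small helper like the one below can be used. It is a minimal sketch, not part of this repository: the name newest_checkpoint is hypothetical, and it assumes only that checkpoints are written as files somewhere under the snap directory, without relying on any particular file name or extension.

```python
from pathlib import Path
from typing import Optional


def newest_checkpoint(snap_dir: str) -> Optional[Path]:
    """Return the most recently modified file under snap_dir,
    or None if the directory is missing or contains no files."""
    root = Path(snap_dir)
    if not root.is_dir():
        return None
    # Search recursively, since the sub-directory layout is not assumed.
    files = [p for p in root.rglob("*") if p.is_file()]
    if not files:
        return None
    return max(files, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    ckpt = newest_checkpoint("snap")
    print(ckpt if ckpt is not None else "no checkpoint found under snap")
```

The printed path can then be used as the checkpoint to load during evaluation.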

Evaluate the results using either the checkpoint we provide or your own trained model. Set the load argument in run/test_geo.bash to the checkpoint path, and then run:

bash run/test_geo.bash

Modify the arguments in run/run_r2r_geo.bash (under VLN-HAMT/finetune_src), and then run:

cd VLN-HAMT/finetune_src

bash run/run_r2r_geo.bash

If you find our work useful for your research, please consider citing:

@inproceedings{huo2023geovln,

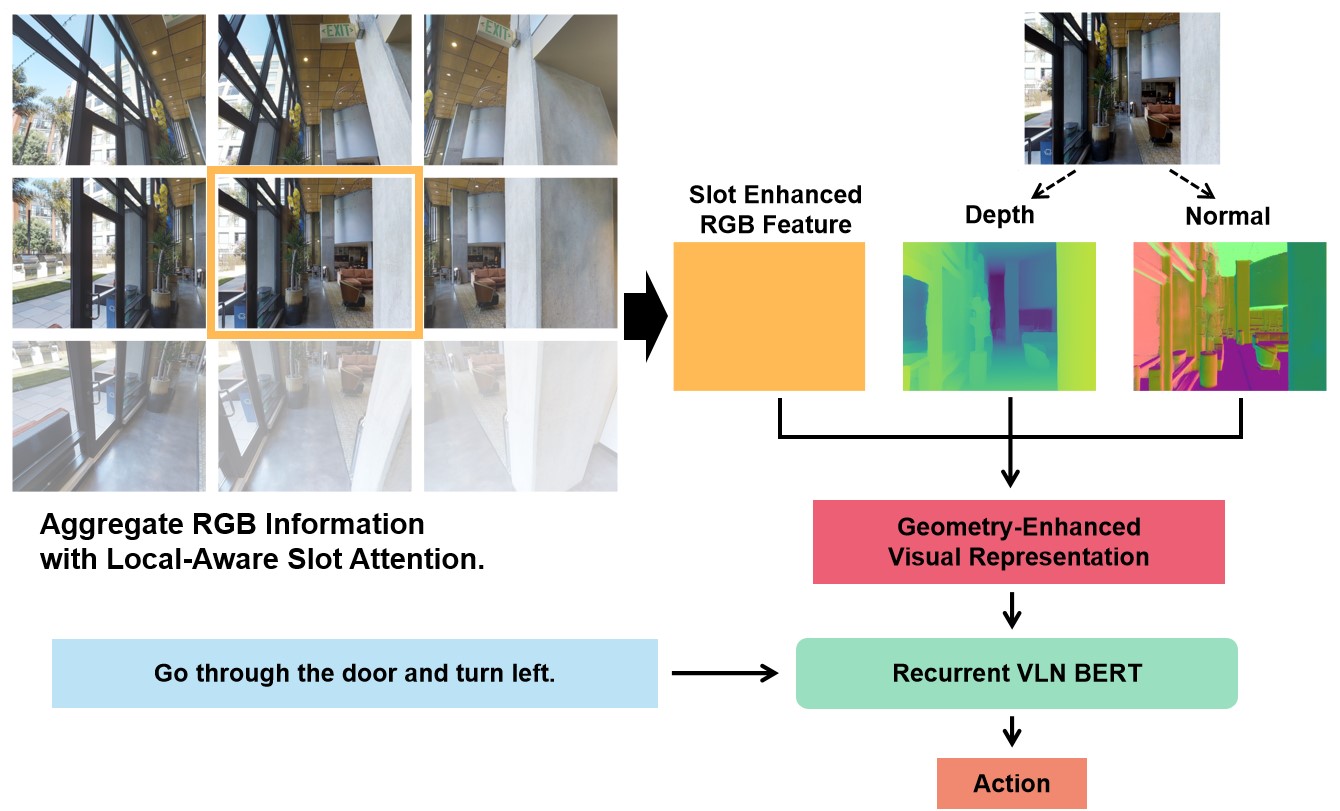

title={GeoVLN: Learning Geometry-Enhanced Visual Representation with Slot Attention for Vision-and-Language Navigation},

author={Huo, Jingyang and Sun, Qiang and Jiang, Boyan and Lin, Haitao and Fu, Yanwei},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={23212--23221},

year={2023}

}

Some of the code in this project is borrowed from the following sources: