Official implementation of ViPT, including models and training and testing code.

Models & Raw Results (Google Drive) | Models & Raw Results (Baidu Drive: vipt)

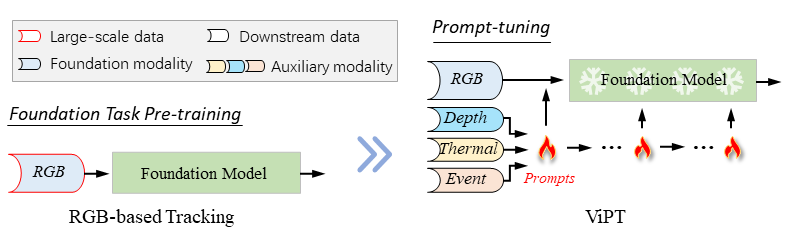

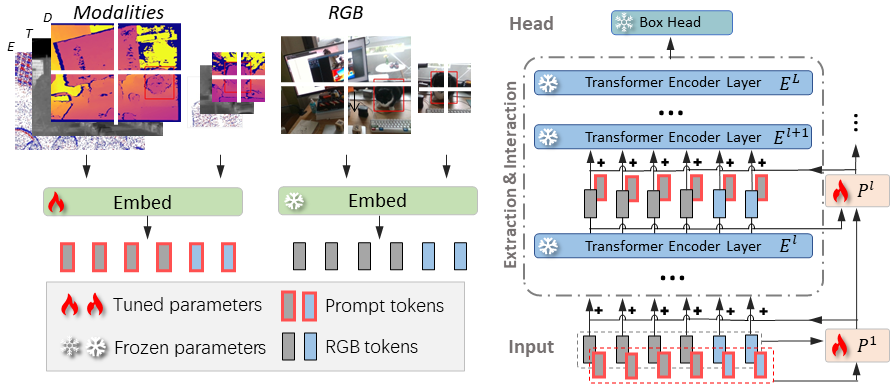

🔥🔥🔥 This work proposes ViPT, a new prompt-tuning framework for multi-modal tracking.

- Tracking in RGB + Depth scenarios:

- Tracking in RGB + Thermal scenarios:

-

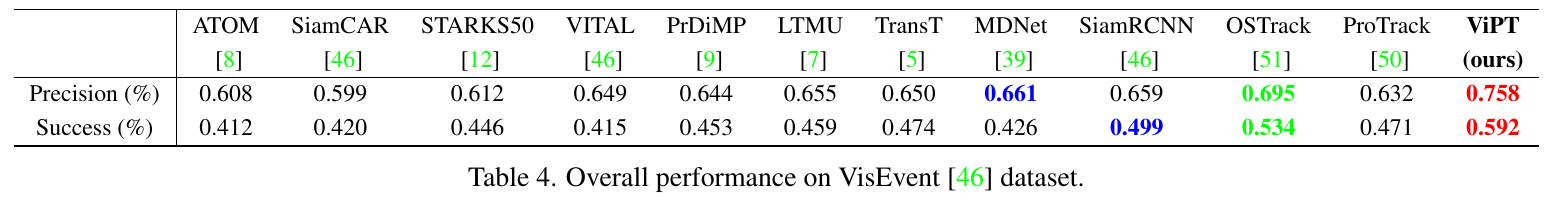

Tracking in RGB + Event scenarios:

[Mar 20, 2023]

- We release the code, models, and raw results.

Thanks for your star 😝😝😝.

[Feb 28, 2023]

- ViPT is accepted to CVPR 2023.

- 🔥 A new unified visual prompt multi-modal tracking framework (e.g. RGB-D, RGB-T, and RGB-E tracking).

-

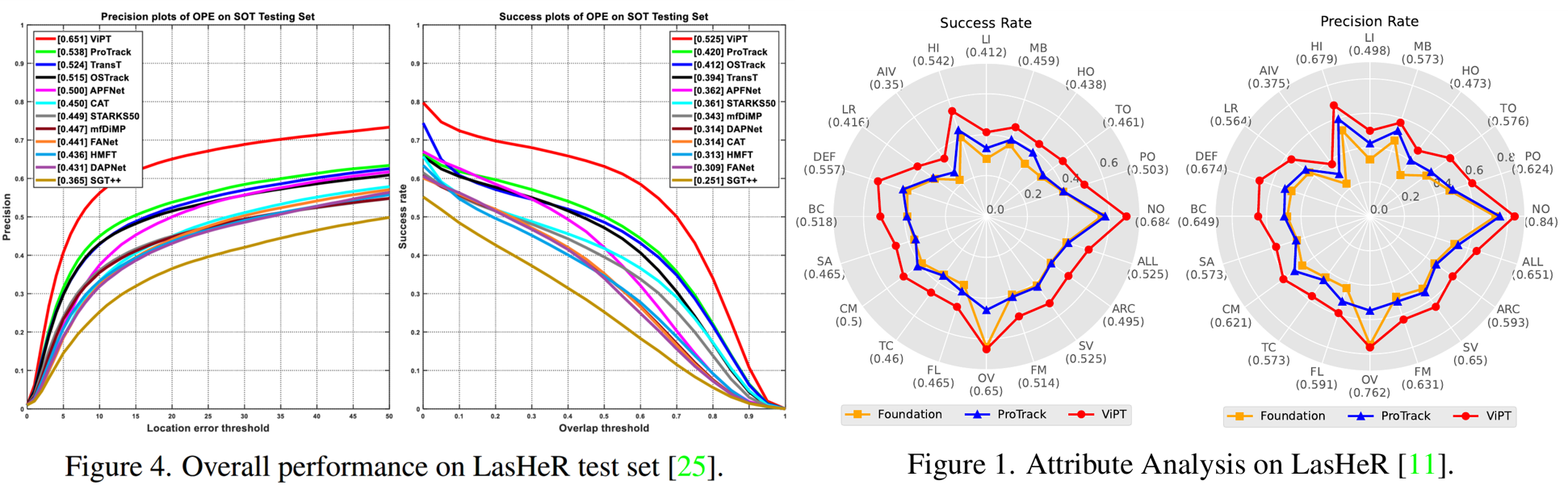

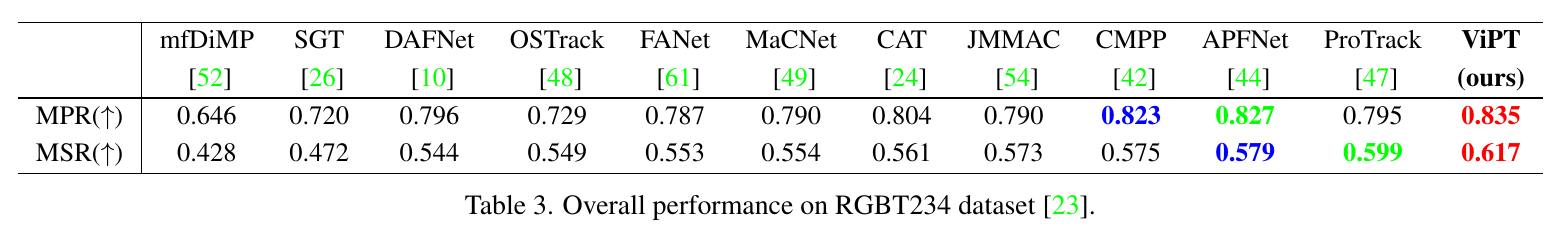

ViPT has high performance on multiple multi-modal tracking tasks.

- ViPT is highly parameter-efficient, with only 0.84M trainable parameters (<1%).

- We hope ViPT will attract more attention to prompt learning 🔥 for further research on multi-modal tracking.
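The parameter-efficiency figure above can be read as simple arithmetic. The sketch below is illustrative only: `trainable_fraction` and `min_total_for_fraction` are hypothetical helpers, not part of the ViPT code base, and since this README does not state the total parameter count, the example only derives the lower bound implied by "<1%".

```python
def trainable_fraction(trainable: float, total: float) -> float:
    """Return the share of parameters that are updated during tuning."""
    if total <= 0:
        raise ValueError("total must be positive")
    return trainable / total


def min_total_for_fraction(trainable: float, max_fraction: float) -> float:
    """Total parameter count that the model must exceed for
    trainable / total to stay below max_fraction."""
    return trainable / max_fraction


TRAINABLE = 0.84e6  # trainable parameters, as stated in this README

# "<1%" implies the full model has more than 84M parameters.
lower_bound = min_total_for_fraction(TRAINABLE, 0.01)
print(f"total parameters > {lower_bound / 1e6:.0f}M")
```

Applied to a real model, the same ratio would typically be computed from the model's own parameter counts rather than from the rounded figure used here.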

Create and activate a conda environment:

conda create -n vipt python=3.7

conda activate vipt

Install the required packages:

bash install_vipt.sh

Put the training datasets in ./data/. It should look like:

    $<PATH_of_ViPT>
    -- data
        -- DepthTrackTraining
            |-- adapter02_indoor
            |-- bag03_indoor
            |-- bag04_indoor
            ...
        -- LasHeR/train/trainingset
            |-- 1boygo
            |-- 1handsth
            ...
        -- VisEvent/train
            |-- 00142_tank_outdoor2
            |-- 00143_tank_outdoor2
            ...
            |-- trainlist.txt
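Before training, it may help to confirm that the layout above is in place. The following is a minimal sketch, not part of the ViPT repository: `missing_entries` is a hypothetical helper, and the expected paths are copied from the listing above. If you only train on one modality, trim the tuples accordingly.

```python
from pathlib import Path

# Relative paths taken from the layout above; adjust if your copy differs.
EXPECTED_DIRS = (
    "DepthTrackTraining",
    "LasHeR/train/trainingset",
    "VisEvent/train",
)
EXPECTED_FILES = ("VisEvent/train/trainlist.txt",)


def missing_entries(data_root):
    """Return the expected dataset paths that are absent under data_root."""
    root = Path(data_root)
    missing = [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    problems = missing_entries("./data")
    if problems:
        print("Missing under ./data:", ", ".join(problems))
    else:
        print("Dataset layout looks complete.")
```

The check only looks at top-level folders and the list file; it does not validate individual sequences.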

Run the following command to set paths:

cd <PATH_of_ViPT>

python tracking/create_default_local_file.py --workspace_dir . --data_dir ./data --save_dir ./output

You can also modify the paths by editing these two files:

./lib/train/admin/local.py # paths for training

./lib/test/evaluation/local.py # paths for testing

Download the pretrained foundation model (OSTrack) and put it under ./pretrained/.

bash train_vipt.sh

You can train models with various modalities and variants by modifying train_vipt.sh.

[DepthTrack Test set & VOT22_RGBD]

These two benchmarks are evaluated using the VOT toolkit.

Put the DepthTrack test set in ./Depthtrack_workspace/ and name it 'sequences'.

Download the corresponding VOT22_RGBD test sequences into ./vot22_RGBD_workspace/.

bash eval_rgbd.sh
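If the DepthTrack test set is already stored elsewhere, one way to satisfy the 'sequences' naming requirement above without copying the data is a symbolic link. This is only a sketch: `link_sequences` is a hypothetical helper, and it assumes the evaluation tooling follows symlinks, which this README does not confirm.

```python
from pathlib import Path


def link_sequences(test_set_dir, workspace_dir="./Depthtrack_workspace"):
    """Expose test_set_dir as <workspace_dir>/sequences via a symlink."""
    source = Path(test_set_dir).resolve()
    if not source.is_dir():
        raise FileNotFoundError(f"test set directory not found: {source}")
    workspace = Path(workspace_dir)
    workspace.mkdir(parents=True, exist_ok=True)
    target = workspace / "sequences"
    # Refuse to overwrite an existing folder or link.
    if target.exists() or target.is_symlink():
        raise FileExistsError(f"{target} already exists; remove it first")
    target.symlink_to(source, target_is_directory=True)
    return target
```

Call it once with the location of your downloaded test set before running the evaluation script. If symlinks turn out not to work in your setup, moving or copying the folder remains the safe fallback.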

[LasHeR & RGBT234]

Modify the <DATASET_PATH> and <SAVE_PATH> in ./RGBT_workspace/test_rgbt_mgpus.py, then run:

bash eval_rgbt.sh

For evaluation, we refer you to the LasHeR Toolkit for LasHeR and to MPR_MSR_Evaluation for RGBT234.

[VisEvent]

Modify the <DATASET_PATH> and <SAVE_PATH> in ./RGBE_workspace/test_rgbe_mgpus.py, then run:

bash eval_rgbe.sh

We refer you to VisEvent_SOT_Benchmark for evaluation.

If you find ViPT helpful for your research, please consider citing:

@inproceedings{ViPT,

title={Visual Prompt Multi-Modal Tracking},

author={Zhu, Jiawen and Lai, Simiao and Chen, Xin and Wang, Dong and Lu, Huchuan},

booktitle={CVPR},

year={2023}

}

- This repo is based on OSTrack, which is an excellent work.

- We thank the PyTracking library, which helped us quickly implement our ideas.

If you have any questions, feel free to email jiawen@mail.dlut.edu.cn. ^_^