NOTE: This is a work in progress, the pandoc conversion seems to have added some funky layout or text problems. Feel free to make a PR if you want to fix anything.

The entire book is released under Creative Commons Attribution-NonCommercial 4.0 International.

Link: https://www.amazon.com/dp/B087GYV15Y

Copyright © John Riselvato 2020 Copyright © 3 Slashed Books 2020 All rights reserved

2. How to Install FFmpeg on macOS?

3. How to Install FFmpeg on Linux?

4. How to Install FFmpeg on Windows?

5. How to Configure FFmpeg with Extra Dependencies?

6. How to Install FFmpeg on a PHP Server?

7. How to Use FFmpeg in Various Languages?

8. How to Check the Installed FFmpeg Version?

10. What are All the Codecs FFmpeg Supports?

11. What are All the Formats FFmpeg Supports?

12. How to Copy a Codec From One File to Another?

13. How to Check the Audio / Video File Information?

14. How to Use Filters (-vf/-af VS -filter_complex)?

15. How to Chain Multiple Filters?

16. How to Use -filter_complex Without Losing Video Quality?

17. What is -map and How is it Used?

18. How to Convert an Entire Directory?

19. How to Extract Audio From a Video?

A Note about Audio conversions:

20. How to Convert Ogg to MP3?

21. How to Convert FLAC to MP3?

22. How to Convert WAV to MP3?

23. How to Merge Multiple MP3s into One Track?

24. How to Trim 'x' Seconds From the Start of an Audio Track?

25. How to Trim 'x' Seconds From the End of an Audio Track?

26. How to Trim 'x' Seconds From the Start and End of an Audio Track?

27. How to Adjust Audio Volume?

28. How to Crossfade Two Audio Tracks?

29. How to Normalize Audio Data?

30. How to Add an Echo to an Audio Track?

31. How to Change the Tempo of an Audio Track?

32. How to Change the Pitch / Sample Rate of an Audio Track?

A Note about Pitch, Tempo & Sample Rate

33. How to Generate an Audio Tone?

34. How to Generate Text to Speech Audio?

35. How to Add a Low-Pass Filter to an Audio Track?

36. How to Add a High-Pass Filter to an Audio Track?

A Note About Audio only Related Filters

37. How to Remove Audio From a Video?

38. How to Mix Additional Audio into a Video?

39. How to Replace the Audio on a Video?

40. How to Convert MOV to MP4?

41. How to Convert MKV to MP4?

42. How to Convert AVI to MP4?

43. How to Convert FLV to MP4?

44. How to Convert WebM to MP4?

A Note about Converting to MP4

45. How to Convert MP4 to GIF?

46. How to Compress MP4 and Reduce File Size?

47. How to Trim 'x' Seconds From the Start of a Video?

48. How to Trim 'x' Seconds From the End of a Video?

49. How to Trim 'x' Seconds From the Start and End of a Video?

50. How to Splice a Video into Segments?

51. How to Stitch Segments to One Video?

52. How to Loop a Section of Video Multiple Times?

53. How to Concatenate Multiple Videos?

54. How to Blend Two Videos Together?

55. How to Add Color Normalization to a Video?

56. How to Add Color Balance to a Video?

57. How to Edit the Hue of a Video?

58. How to Convert a Video to Black and White?

59. How to Edit the Saturation of a Video?

60. How to Invert the Colors of a Video?

62. How to Apply a Vignette to a Video?

63. How to Remove All Colors Except One From a Video?

64. How to Generate a Color Palette From a Video?

65. How to Apply a Color Palette to a Video?

66. How to Sharpen a Video with Unsharp?

67. How to Blur a Video with Unsharp?

68. How to Blur a Video with Smartblur?

69. How to Apply a Gaussian Blur to a Video?

70. How to Apply a Box Blur to a Video?

71. How to Apply a Pixelated Effect to a Video?

72. How to Adjust the Volume of a Video?

77. How to Change the Frame Rate of a Video?

78. How to Change the Resolution of a Video?

79. How to Apply Quantization to a Video?

80. How to Remove Duplicate Frames From a Video?

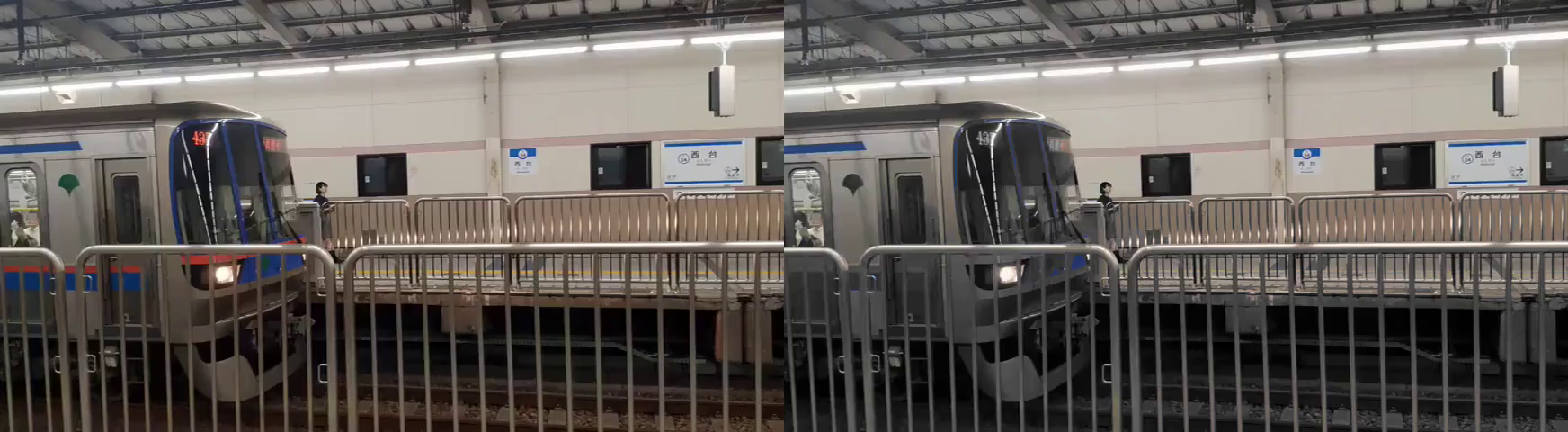

81. How to Stack Multiple Videos Horizontally?

82. How to Stack Multiple Videos Vertically?

83. How to Horizontally Flip a Video?

84. How to Vertically Flip a Video?

86. How to Extract Subtitles From Video?

87. How to Add Subtitles to a Video?

88. How to Burn Subtitles Into a Video?

89. How to Overlay Custom Text in a Video?

90. How to Add a Transparent Watermark to a Video?

91. How to Create a Slideshow Video From Multiple Images?

92. How to Extract an Image Frame From a Video at a Specific Time?

93. How to Add an MP3 to an Image to Create a Video?

94. How to Convert a Video into a Tile Image?

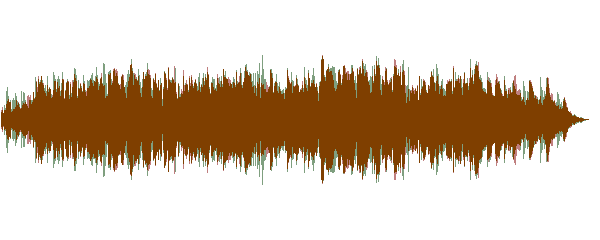

95. How to Generate a Picture Waveform from a Video?

96. How to Generate a Solid Colored Video?

97. How to Datamosh/Glitch a Video?

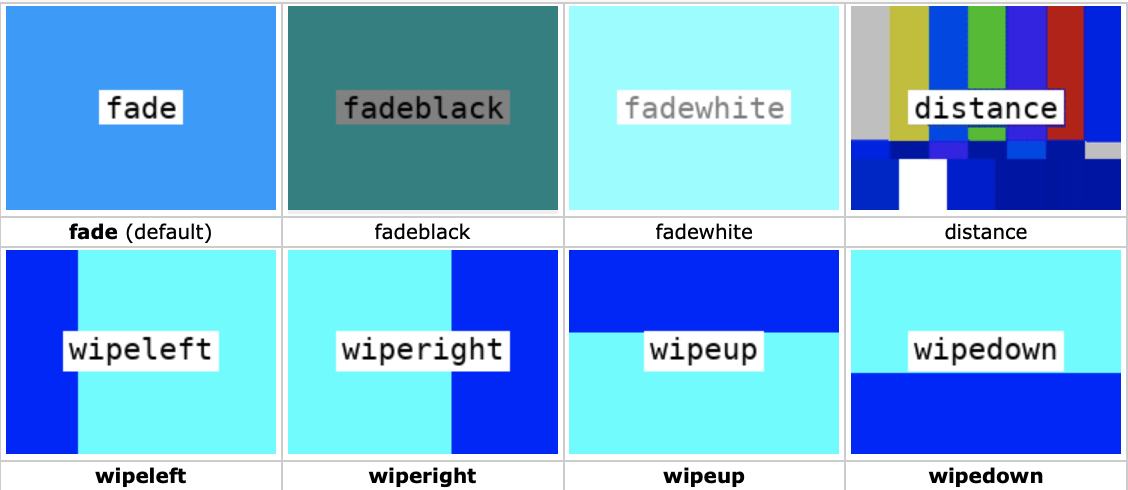

98. How to Add Various Fades to a Video?

99. How to Add Noise to a Video?

100. How to Apply Static to a Video?

101. How to Randomize Frames in a Video?

102. How to Use Green Screen to Mask a Video Into Another Video?

103. How to Use the Frei0r Filters?

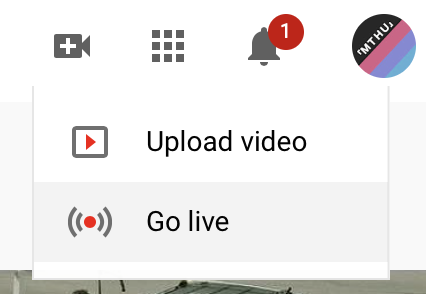

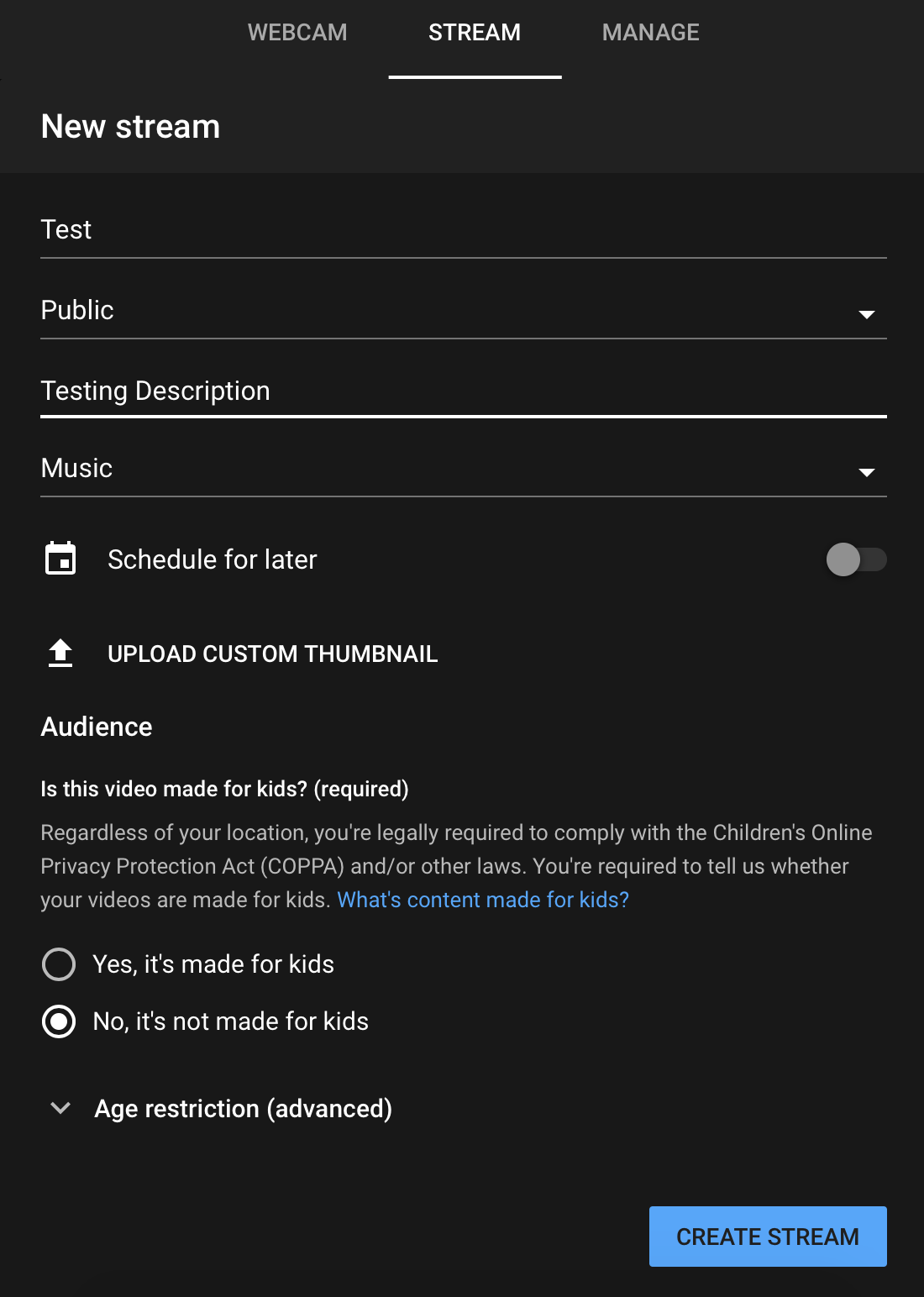

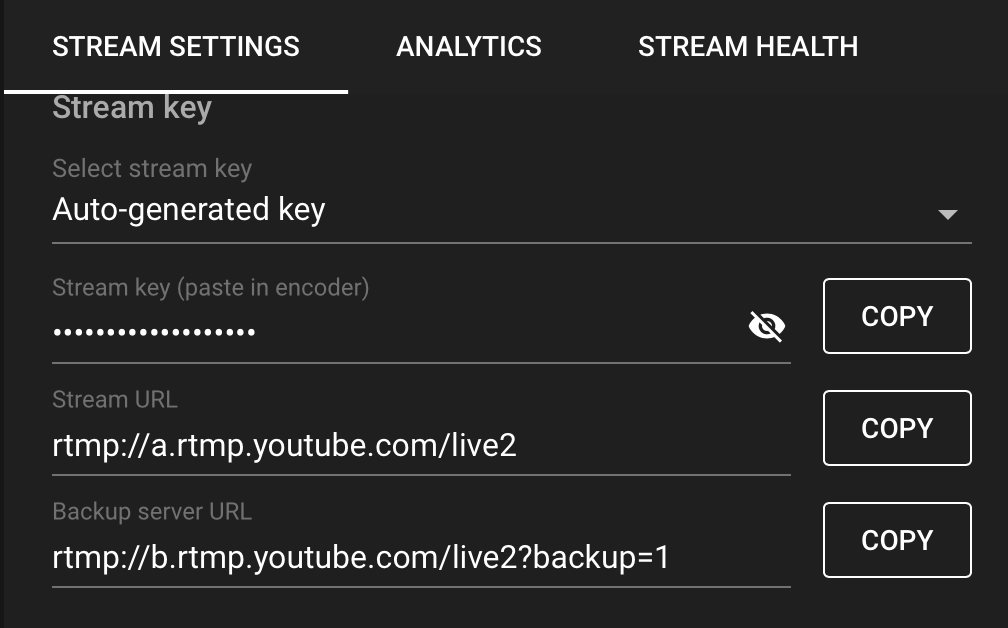

104. How to obtain a YouTube Streaming key?

105. How to Stream a File to YouTube?

106. How to Stream a Webcam to YouTube?

107. How to Use Filters with Video Streaming?

108. How to Fix the 'width/height not divisible by 2' Error?

110. How to Fix the 'Cannot connect audio filter to non audio input' Error?

111. How to Fix the 'Cannot connect video filter to audio input' Error?

112. How to Fix the 'No such filter: x' Error?

FFmpeg For Beginners: Edit Audio & Video Like a Pro for YouTube and Social Media is the ultimate FFmpeg programmer's book for users at any level. Novice readers can learn FFmpeg gracefully, and by the end of this book you'll have graduated to an upper-intermediate skill level.

FFmpeg is a free and open-source multimedia framework for decoding, encoding, transcoding, muxing, demuxing, streaming, filtering, editing and playing back video and audio streams. FFmpeg is accessible via the command line on most operating systems and is written in C and Assembly.

The project was originally developed by Fabrice Bellard in December 2000 and is currently maintained by the open-source FFmpeg community. Since its initial release, the project (whose repository is mirrored on GitHub) has accumulated almost 100,000 commits and over 300 releases.

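Its current license is LGPL 2.1+. Some optional components are licensed under GPL 2+, and builds that include them fall under the GPL, which matters when combining FFmpeg with software under a GPL-incompatible license.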

FFmpeg can be found in projects used by Facebook, YouTube, Apple, TV broadcast companies and many more. In the world of digital media, FFmpeg grows in popularity every year, and technology recruiting and job posting websites regularly list openings for FFmpeg developers.

If you're a hobby programmer and active on social media, FFmpeg can take boring photos or videos and turn them into elite tier experiences. Stand out from the crowd and use your FFmpeg skills to enhance your digital social status.

This book recommends that FFmpeg version 4.2.2+ is used with macOS or Linux/GNU operating systems as all examples here require Bash. Although FFmpeg is cross platform, Windows users may have to follow alternative practices not covered in this book.

This book is divided into two major sections: Audio and Video. Read in sequence, it lets you advance your understanding gradually. Because it uses a question-and-answer format, it also works as a quick reference manual. The following topics are covered:

- History
- Installation
- Understanding the Basics of FFmpeg
- Basic Audio Conversions
- Audio Duration Editing
- Top Audio Filters
- Basic Video Conversions
- Video Timeline Editing
- Top Video Filters
- Streaming
- Common Errors
- Resources

Upon completion, you'll have an intermediate understanding of FFmpeg and plenty of new tricks to advance your career or apply to social media. Before starting, let's cover how to use this book.

FFmpeg provides the following features:

- Decoding - the ability to decode a multimedia stream
- Encoding - the ability to encode a multimedia stream
- Transcoding - the ability to convert one format into another
- Muxing - the ability to combine audio and video into a single data stream
- Demuxing - the ability to split a data stream into its component streams (audio, video, subtitles)
- Filtering - the ability to apply complex algorithms to video or audio streams
- Streaming - the ability to stream data in real time from a host to a client
- Playback - the ability to play a video from the terminal with ffplay

All of these are covered in this book.

FFmpeg at its core is a command line program for manipulating multimedia files. Commands are typed in a terminal/console and the output is stored in the specified file path.

In this book, $ represents the terminal/console prompt at which commands are typed and run.

The standard command syntax for FFmpeg is as follows:

$ ffmpeg [input] [options & arguments] [output]
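To make that order concrete, here is a minimal sketch that assembles the command in a Bash array and prints it instead of running it. The libx264 and aac options are assumptions about your build and serve only as example arguments. The array keeps each argument separate, so filenames containing spaces survive intact.

```shell
#!/usr/bin/env bash
# Assemble "ffmpeg [input] [options & arguments] [output]" as a Bash array.
# Each array element stays one argument, so filenames with spaces survive intact.
input="input.mp4"
output="output.mp4"
# Example output options (assumed available in your build); swap in your own.
options=(-c:v libx264 -c:a aac)

cmd=(ffmpeg -i "$input" "${options[@]}" "$output")

# Print the command for inspection; to run it instead, use: "${cmd[@]}"
printf '%s\n' "${cmd[*]}"
```

This prints `ffmpeg -i input.mp4 -c:v libx264 -c:a aac output.mp4`.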

Bash can execute FFmpeg commands from a terminal or from a file but this book focuses on using command line one-liners to complete most actions.

FFmpeg also ships with a simple player called ffplay, which is useful for testing commands or for playback. Here's an example of playback using ffplay:

$ ffplay input.mp4

Here's an example of ffplay used to test a filter:

$ ffplay -i input.mp4 -vf "negate"

This is an additional feature of FFmpeg but not a major focus in this book.

The options and arguments in FFmpeg are the basis for converting video/audio formats, applying filters and performing other forms of manipulation. Options are the flags FFmpeg reads (such as -i or -vf), and most of them take an argument to perform an action. Arguments are the values passed to them, such as inputs, outputs, filters, variables and other data needed to complete an action.

Tip: Throughout this book the words argument and parameter are used interchangeably. Options and commands are also used interchangeably.

Unlike most programming books, FFmpeg For Beginners aims to quickly answer the top FFmpeg questions while introducing the main concepts to be expanded on later. For this reason, each example uses an individual question as its header. The index in the back of the book lists key terms that make finding a specific task easier.

Filenames, command names and inline examples are shown in the Consolas font. Inline examples start with $ to indicate the start of a bash command and are highlighted in a mono-blue theme.

Placeholder input/output or variables are presented with square brackets [value] where default values are not defined or a definition is required.

Special notes are highlighted in bold with the word Tip. Commands with many customization options may refer to external documentation, underlined in [hyperlink blue]. Ebook readers can click these hyperlinks to open that documentation.

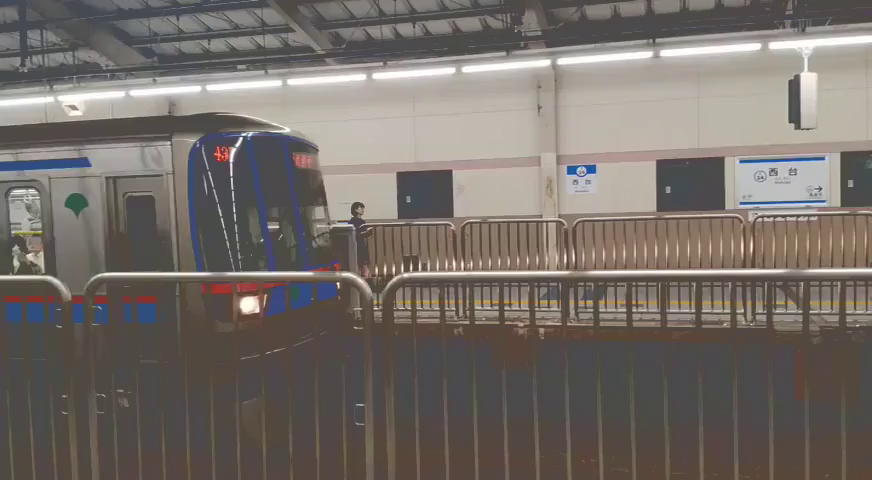

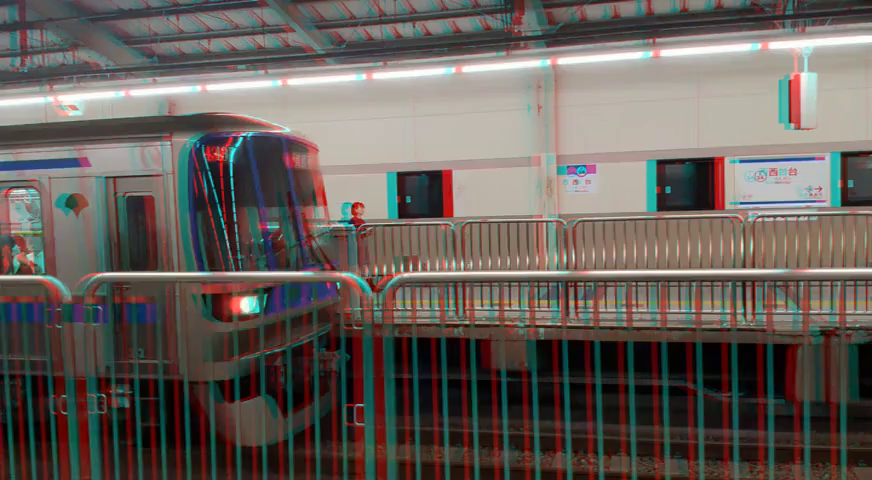

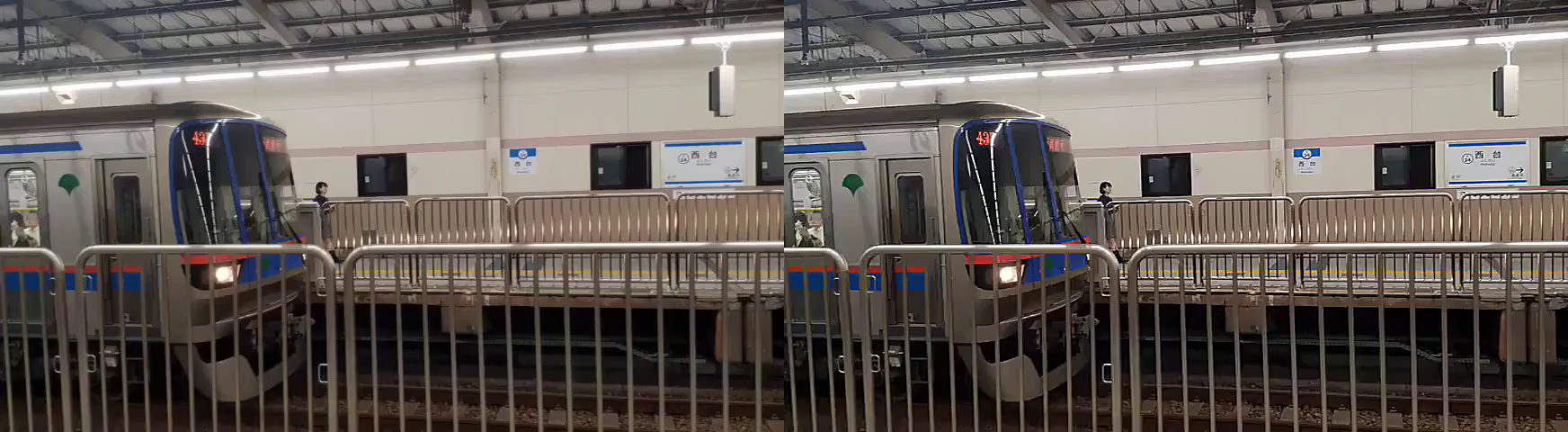

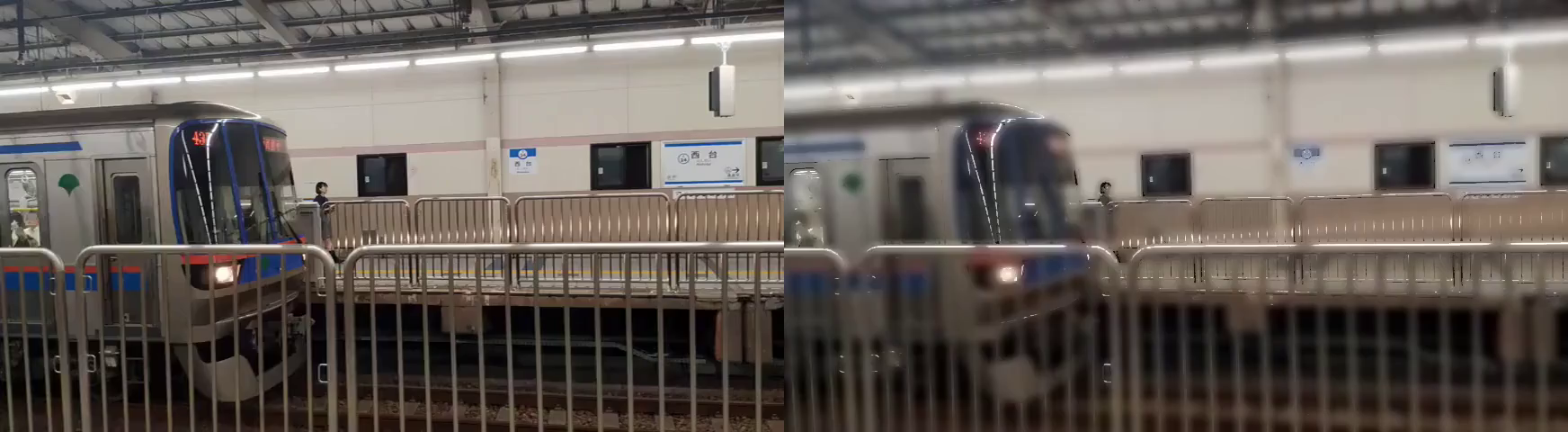

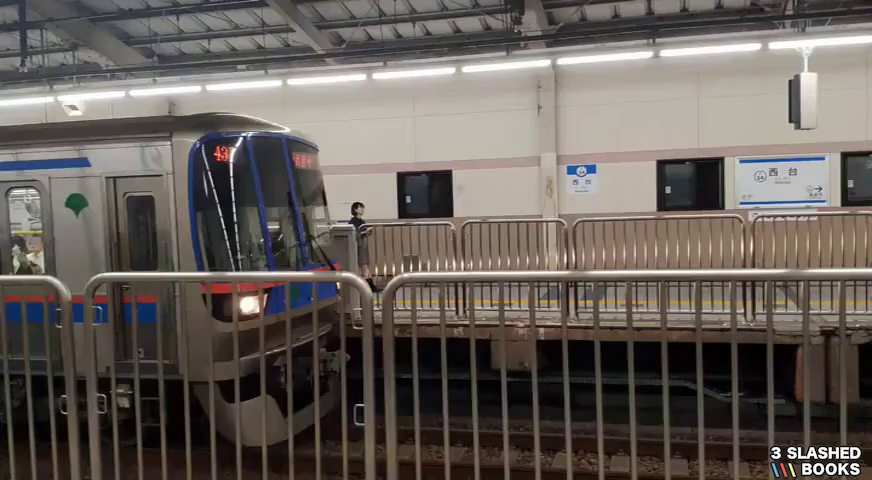

Throughout the book, a video of a Tokyo metro station is used as the primary file described as input.mp4 or input1.mp4. If a second video is required, the file input2.mp4 is used and contains a video of a busy road.

When images are used to provide a better understanding of the output, figure names are provided in relation to the question number. For example, Question 1 has figure 1.0 for the first image with figure 1.1 to follow.

If you have any issues or questions while using this book, feel free to contact me on Twitter: https://twitter.com/ffmpegtutorials

Tip: Every script in this book is available for free on my website: http://johnriselvato.com/tag/ffmpeg/

FFmpeg supports a nearly unlimited number of video and audio formats. This book defaults to MP3 for audio and MP4 for video. Don't worry: this book covers how to convert the most common audio and video formats to MP3 and MP4, and most if not all commands in this book work with any desired format given the proper encoding. Standard filenames are as follows:

- Audio input: input.mp3
- Audio output: output.mp3
- Video input: input.mp4/input1.mp4/input2.mp4
- Video output: output.mp4

In instances where two FFmpeg commands are required to complete a task, the output of the first command is named after its action, and the final output file is output.mp3/output.mp4. For example:

$ ffmpeg -i input.mp4 [blur argument] blur.mp4

$ ffmpeg -i blur.mp4 [color correction argument] output.mp4
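Here is a minimal sketch of that two-step pattern as a small script. The boxblur=5 and eq=saturation=1.3 filters are assumptions that stand in for the [blur argument] and [color correction argument] placeholders. The run helper and DRY_RUN variable are hypothetical conveniences: the script prints each command unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Two-step workflow: step 1 writes an intermediate file named after its action
# (blur.mp4); step 2 reads it and writes the final output.mp4.
# boxblur=5 and eq=saturation=1.3 stand in for the book's placeholders.
set -e                   # stop if a step fails (matters when DRY_RUN=0)
DRY_RUN=${DRY_RUN:-1}    # 1 = print commands only; set DRY_RUN=0 to execute

run() {
  if [ "$DRY_RUN" = 1 ]; then
    printf '%s\n' "$*"   # show the command
  else
    "$@"                 # actually run it
  fi
}

run ffmpeg -i input.mp4 -vf "boxblur=5" blur.mp4
run ffmpeg -i blur.mp4 -vf "eq=saturation=1.3" output.mp4
```

With DRY_RUN=0, the two commands run in order, and the second never starts if the first fails.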

Installation

This section covers the most common installation questions for FFmpeg, starting with installation on macOS, Linux/GNU and Windows. FFmpeg also works well with PHP and a few other programming languages. These topics get you working with FFmpeg as quickly as possible.

Let the answering of questions begin!

FFmpeg is the best-known multimedia framework for decoding, encoding, transcoding, muxing, demuxing, streaming, filtering, editing and playing almost every video and audio format ever created. FFmpeg is not limited to open-source formats. It also handles formats maintained by corporate entities.

FFmpeg is built from multiple multimedia libraries that are available free of charge, and at its core it is used through a command-line tool. FFmpeg is open source and cross-platform, so running it on macOS, Linux, Windows, Android, iOS, etc., is possible with little effort.

Most people use FFmpeg to convert one file format to another. Those with a little creative spark use FFmpeg to create complex audio or visual edits on the fly or even build entire software programs out of it. In this book you'll learn how to take this massive multimedia framework and master it.

Installing FFmpeg on macOS is simple for users with Homebrew installed. Homebrew is the package manager macOS has always needed but never shipped with. Think of it as an equivalent to apt-get on Linux.

To quickly install Homebrew run the following:

$ /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install.sh)"

Tip: For more information on Homebrew or installing Homebrew, visit: https://brew.sh

The FFmpeg installation command is as follows:

$ brew install ffmpeg

Tip: If additional libraries are required for an FFmpeg build, reference question 5, "How to Configure FFmpeg with Extra Dependencies?".

On Ubuntu or Debian based Linux operating systems with apt-get installed, FFmpeg installation is easy:

$ sudo apt-get update

$ sudo apt-get install ffmpeg

This installs the FFmpeg package provided by your distribution, which may not be the latest release. For this reason, some Linux users may want to install from source. Building from source requires compiling and installing various codecs and libraries that are not bundled with the FFmpeg source code, which is out of scope for this book.

If installation from source is required, visit the following FFmpeg wiki page:

https://trac.ffmpeg.org/wiki/CompilationGuide/Ubuntu

Although this book may cover functionality that does not work out of the box on Windows, FFmpeg is cross-platform, and Windows builds can do everything macOS and Linux builds can do.

Installation of FFmpeg on Windows is more involved as Windows doesn't natively have a package manager. The good news is the people over at Zeranoe have provided an FFmpeg build for Windows 64-bit and 32-bit. Visit the following for more instructions: https://ffmpeg.zeranoe.com/builds/

FFmpeg requires additional configuration on Windows. Below are the general installation steps, though they may vary:

- Extract the FFmpeg build to C:\
- Rename the build folder to FFmpeg
- Open the Environment Variables window: search Windows for "Edit the system environment variables" and click Environment Variables
- Select the Path variable, click Edit, and then click New
- Type the path of the FFmpeg bin folder, C:\FFmpeg\bin\, and click OK to apply the changes

Once installed, FFmpeg is accessible from the command prompt. Type ffmpeg and press Enter to verify that the installation was successful.

If the command prompt reports that ffmpeg is not recognized, double-check that the FFmpeg bin folder is referenced correctly in Environment Variables.

Build dependencies are great additions to FFmpeg that can make the program even more powerful. These dependencies link to 3rd party tools that extend functionality or even simplify some processes. In this book there are a couple of audio/video filters that require extra installed dependencies. Installation is as follows:

macOS

As of early 2020, Homebrew's core ffmpeg formula no longer supports options for enabling extra dependencies. Luckily, a discussion on GitHub produced the following workaround, which installs all available dependencies:

$ brew uninstall --force --ignore-dependencies ffmpeg

$ brew install chromaprint amiaopensource/amiaos/decklinksdk

$ brew tap homebrew-ffmpeg/ffmpeg

$ brew install ffmpeg

$ brew upgrade homebrew-ffmpeg/ffmpeg/ffmpeg $(brew options homebrew-ffmpeg/ffmpeg/ffmpeg | grep -vE '\s' | grep -- '--with-' | grep -vi chromaprint | tr '\n' ' ')

Source: https://gist.github.com/Piasy/b5dfd5c048eb69d1b91719988c0325d8

Linux

Linux users who want to configure extra dependencies are required to install from source, reference question 3, "How to Install FFmpeg on Linux?".

Here's an example from the FFmpeg wiki of building FFmpeg with extra dependencies enabled. The libraries themselves must first be installed as described on the wiki:

$ cd ~/ffmpeg_sources && \
wget -O ffmpeg-snapshot.tar.bz2 https://ffmpeg.org/releases/ffmpeg-snapshot.tar.bz2 && \
tar xjvf ffmpeg-snapshot.tar.bz2 && \
cd ffmpeg && \
PATH="$HOME/bin:$PATH" PKG_CONFIG_PATH="$HOME/ffmpeg_build/lib/pkgconfig" ./configure \
  --prefix="$HOME/ffmpeg_build" \
  --pkg-config-flags="--static" \
  --extra-cflags="-I$HOME/ffmpeg_build/include" \
  --extra-ldflags="-L$HOME/ffmpeg_build/lib" \
  --extra-libs="-lpthread -lm" \
  --bindir="$HOME/bin" \
  --enable-gpl \
  --enable-libaom \
  --enable-libass \
  --enable-libfdk-aac \
  --enable-libfreetype \
  --enable-libmp3lame \
  --enable-libopus \
  --enable-libvorbis \
  --enable-libvpx \
  --enable-libx264 \
  --enable-libx265 \
  --enable-nonfree && \
PATH="$HOME/bin:$PATH" make && \
make install && \
hash -r

Source: https://trac.ffmpeg.org/wiki/CompilationGuide/Ubuntu

Now that FFmpeg is installed on your specific operating system, you can use FFmpeg in the command line or graduate to using it on your webserver with PHP. The connection to PHP makes FFmpeg very dynamic and useful for generating audio or videos on a server or to be sent to a client.

Once FFmpeg is installed on the server, using PHP's shell_exec() to access FFmpeg is as easy as using the command line, for example:

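// Run FFmpeg in the background; redirecting its output lets shell_exec() return immediately.
shell_exec("ffmpeg -i input.mp4 output.mp4 > /dev/null 2>&1 &");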

There is a PHP library, PHP-FFMpeg, available if this method is too simplistic but it requires additional set up not covered in this book.

For more information visit the PHP-FFMpeg GitHub at: https://github.com/PHP-FFMpeg/PHP-FFMpeg

Command line one-liners are great for quick, one-off FFmpeg tasks, but sooner or later a custom application becomes necessary for efficiency. FFmpeg can be used with almost any programming language with little effort, and some languages even have their own libraries that wrap FFmpeg. Below are a few examples of various languages using FFmpeg:

Python/FFmpy

FFmpeg is easily accessible using os.system in Python, as seen below:

import os

os.system("ffmpeg -i input.mp4 output.mp3")

Python also has a 3rd party library, ffmpy, which has its own syntax for using FFmpeg. In this example an MP4 is converted to MP3:

import ffmpy

# Map each input/output path to its FFmpeg options (None = no extra options)
ff = ffmpy.FFmpeg(
    inputs={'input.mp4': None},
    outputs={'output.mp3': None}
)
ff.run()

More information: https://pypi.org/project/ffmpy/

Bash/Shell

Although most examples in this book are Bash one-liners, it is beneficial to know how to run FFmpeg from a Bash / Shell script. In this example, the script converts all MP4 files in a folder to MP3 (script.sh):

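#!/usr/bin/env bash
# Convert every MP4 in the current folder to an MP3 with the same base name.
for i in *.mp4; do
    OUTPUT="${i%.mp4}"       # strip the .mp4 extension
    echo "$OUTPUT"
    ffmpeg -i "$i" "$OUTPUT.mp3"   # quotes keep filenames with spaces intact
done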
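Here is a slightly more defensive variant of script.sh. It is sketched under two assumptions: nothing should happen when the folder has no MP4 files, and you want to preview the commands first. The convert_all function and DRY_RUN variable are hypothetical names used only for this illustration.

```shell
#!/usr/bin/env bash
# Variant of script.sh: convert every MP4 in the current folder to MP3.
# nullglob makes the loop skip entirely when no .mp4 files exist
# (otherwise the literal pattern "*.mp4" would be passed to FFmpeg).
shopt -s nullglob
DRY_RUN=${DRY_RUN:-1}   # 1 = print commands only; set DRY_RUN=0 to convert

convert_all() {
  local i
  for i in *.mp4; do
    if [ "$DRY_RUN" = 1 ]; then
      # %q shell-quotes each name so the printed command can be pasted as-is
      printf 'ffmpeg -i %q %q\n' "$i" "${i%.mp4}.mp3"
    else
      ffmpeg -i "$i" "${i%.mp4}.mp3"
    fi
  done
}

convert_all
```

In a folder containing "a b.mp4", the preview line reads `ffmpeg -i a\ b.mp4 a\ b.mp3`.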

Ruby/Streamio

Ruby also has its own FFmpeg library called Streamio. In this example, a MOV file is converted to MP4:

require 'streamio-ffmpeg'

movie = FFMPEG::Movie.new("path/to/input.mov")
movie.transcode("movie.mp4") # Output to mp4

More information: https://github.com/streamio/streamio-ffmpeg

As mentioned earlier, FFmpeg is cross-platform and open source ports are available in almost every language or platform. Although not covered in this book, this also includes iOS and Android.

Are you using FFmpeg in a language or platform this book didn't cover? Share your project with me on Twitter: @ffmpegtutorials

At this point FFmpeg should be installed on your operating system of choice and ready for use. To double check installation run the following command:

$ ffmpeg -version

The output details the FFmpeg version as well as the various configurations enabled and disabled and which additional codecs are installed. Below is the recommended setup for this book:

$ ffmpeg -version

ffmpeg version 4.2.2 Copyright (c) 2000-2019 the FFmpeg developers

built with Apple clang version 11.0.0 (clang-1100.0.33.17)

configuration: --prefix=/usr/local/Cellar/ffmpeg/4.2.2_2

--enable-shared --enable-pthreads --enable-version3

--enable-avresample --cc=clang --host-cflags= --host-ldflags=

--enable-ffplay --enable-gnutls --enable-gpl --enable-libaom

--enable-libbluray --enable-libmp3lame --enable-libopus

--enable-librubberband --enable-libsnappy --enable-libtesseract

--enable-libtheora --enable-libvidstab --enable-libvorbis

--enable-libvpx --enable-libwebp --enable-libx264 --enable-libx265

--enable-libxvid --enable-lzma --enable-libfontconfig

--enable-libfreetype --enable-frei0r --enable-libass

--enable-libopencore-amrnb --enable-libopencore-amrwb

--enable-libflite --enable-libzvbi --enable-libopenjpeg

--enable-librtmp --enable-libspeex --enable-libsoxr

--enable-videotoolbox --disable-libjack --disable-indev=jack

libavutil 56. 31.100 / 56. 31.100

libavcodec 58. 54.100 / 58. 54.100

libavformat 58. 29.100 / 58. 29.100

libavdevice 58. 8.100 / 58. 8.100

libavfilter 7. 57.100 / 7. 57.100

libavresample 4. 0. 0 / 4. 0. 0

libswscale 5. 5.100 / 5. 5.100

libswresample 3. 5.100 / 3. 5.100

libpostproc 55. 5.100 / 55. 5.100

Tip: It is suggested to use FFmpeg version 4.2.2 or higher.
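If you want a script to confirm that requirement, here is a minimal sketch that parses the first line of ffmpeg -version output, in the "ffmpeg version 4.2.2 Copyright ..." format shown above. The version_ok name is hypothetical. Builds whose version string is not numeric, such as some git snapshots, are reported as unparseable instead of guessed.

```shell
#!/usr/bin/env bash
# Sketch: confirm the installed FFmpeg is at least a given version (4.2.2 here).
# Reads `ffmpeg -version` output on stdin, so the parsing can be tested offline.
# Usage: ffmpeg -version | version_ok 4.2.2 && echo "Version OK"
# Returns 0 if new enough, 1 if older, 2 if the version string can't be parsed.
version_ok() {
  local min="$1" line version i
  local -a have want

  IFS= read -r line                # e.g. "ffmpeg version 4.2.2 Copyright (c) ..."
  version=${line#ffmpeg version }  # drop the leading label
  version=${version%% *}           # first word: "4.2.2" or "4.2.2-1ubuntu1"
  version=${version%%[!0-9.]*}     # keep only the leading numeric part

  if [ -z "$version" ]; then
    echo "Could not parse a version from: $line" >&2
    return 2
  fi

  IFS=. read -ra have <<< "$version"
  IFS=. read -ra want <<< "$min"

  # Compare major, minor and patch numbers in order; missing parts count as 0.
  for i in 0 1 2; do
    if (( ${have[i]:-0} > ${want[i]:-0} )); then return 0; fi
    if (( ${have[i]:-0} < ${want[i]:-0} )); then return 1; fi
  done
  return 0
}
```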

With FFmpeg installed, configured and ready to run, it's time to learn the basics of FFmpeg. Although this book covers the top 112 most searched questions for FFmpeg, the functionality of this application is nearly limitless. Next, let's build the foundation you'll need before exploring the world of audio editing with FFmpeg!

Understanding the Basics of FFmpeg

This section quickly brings you up to speed on the foundational building blocks and key concepts of FFmpeg. Here you'll learn about codecs & formats, general filter syntax and how to use FFmpeg at the most basic level. This is the most important section of the book: without this knowledge, chances are you're using FFmpeg blindly.

FFmpeg has hundreds of different codecs to edit with, convert to and play from. In your journey to mastering FFmpeg you'll face the word codec so often that not understanding what one is, is a disservice to yourself.

Wikipedia explains a codec as:

"... a device or computer program which encodes or decodes a digital data stream or signal. A coder encodes a data stream or a signal for transmission or storage, possibly in encrypted form, and the decoder function reverses the encoding for playback or editing."

This book focuses on the MP3/AAC codecs for audio and the H.264 codec for video. These are the most popular codecs for modern software and websites making them the obvious choice. For example, it's much easier to post an MP4 file with the H.264 codec to Instagram and Twitter than it is to upload an Apple MOV video.

Tip: A codec is not the container that makes up an MP4. It is the method used to encode the data inside the container so that software can decode and play it.

Tip: Refer to question 11, "What are All the Formats FFmpeg Supports?", for more information about format containers.

Since codecs are the backbone of FFmpeg, it is beneficial to know how to access the full list of supported codecs. The command is as follows:

$ ffmpeg -codecs

The output from this command shows hundreds of codecs for both audio and video. For the full list of supported codecs, refer to the List of Codecs section in the back of this book.

Tip: The libx264 / H.264 video codec for MP4 files is the default in this book.

Tip: The MP3/AAC audio codecs for audio files are the default in this book.
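Because the list is long, it is often easier to look up a single codec. The sketch below assumes the FFmpeg 4.x layout of ffmpeg -codecs. In that layout, each line starts with a six-character flag column (D = decoding supported, E = encoding supported, then V/A/S for video, audio or subtitle), followed by the codec name. codec_info is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: report what this FFmpeg build can do with one codec.
# Reads `ffmpeg -codecs` output on stdin.
# Usage: ffmpeg -hide_banner -codecs | codec_info h264
codec_info() {
  local name="$1" flags codec rest
  while read -r flags codec rest; do
    [ "$codec" = "$name" ] || continue
    printf '%s:' "$codec"
    [ "${flags:0:1}" = D ] && printf ' decode'   # 1st flag: decoding supported
    [ "${flags:1:1}" = E ] && printf ' encode'   # 2nd flag: encoding supported
    case "${flags:2:1}" in                       # 3rd flag: media type
      V) printf ' (video)' ;;
      A) printf ' (audio)' ;;
      S) printf ' (subtitle)' ;;
    esac
    printf '\n'
    return 0
  done
  echo "$name not found" >&2
  return 1
}
```

A codec that shows decode but not encode can be read but not written by your build. That usually means an external library, such as libmp3lame for MP3 output, was not enabled.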

Unlike codecs, formats are the digital input/output containers FFmpeg and other software use to identify the type of file.

While .mp4 is the file extension, the format is the container that holds the encoded video and audio. For example, an MP4 file may contain one of the following audio codecs: AAC, AC3, ALS or MP3.

Selecting the correct codec depends on the output format. FFmpeg picks a default codec for each output format, but choosing one deliberately requires familiarity with the supported formats and their codecs. For a full list, type:

$ ffmpeg -formats

The output of this command results in a list detailing hundreds of FFmpeg supported formats. For the full list of supported formats, refer to the List of Formats section in the back of this book.

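Copying a codec from one file to another is easy and convenient. It is useful when the output should use the same codec as the input: the streams are copied as-is instead of being re-encoded, which is fast and preserves quality. In most other cases, copy is omitted and FFmpeg re-encodes the streams.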

Here is an example of a MOV file being converted to an MP4 with both audio and video codecs being copied:

$ ffmpeg -i input.mov -c:v copy -c:a copy output.mp4

This is the first syntax based command seen in this book, let's break it down. The -c:v (or -vcodec) sets the video codec, while -c:a (or -acodec) sets the audio codec. Adding the value copy after each results in FFmpeg using and copying the codec from the input file.

On programming forums such as Stack Overflow, it is common to see examples where the author copies the codec from one format type to another. More often than not this is risky. Players like VLC tolerate almost any format and codec combination, so playback often works anyway and the problem goes unnoticed.

In this example, an AVI file is being converted to an MP4 but there is a risk in copying the codecs:

$ ffmpeg -i input.avi -vcodec copy -acodec copy output.mp4

The reason for concern is that the AVI format supports a wide range of codecs (DivX, Xvid, H.264 and MPEG-4, to name a few). MP4 is far more restrictive, and DivX or Xvid video inside an MP4 is poorly supported by many platforms. Copying video codecs blindly may therefore result in faulty playback.

Tip: VLC might play an MP4 with a DivX codec, but an upload to Instagram may fail because Instagram does not have a decoder for DivX playback.
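One way to avoid copying blindly is to check the input's video codec first and use copy only when it is already H.264. The sketch below treats h264 as the only codec that is safe to copy into MP4. That is a deliberately conservative assumption, not a complete compatibility table. mp4_video_args is a hypothetical helper. The ffprobe command in the comments is a standard way to read a stream's codec name.

```shell
#!/usr/bin/env bash
# Sketch: copy the video stream into an MP4 only when it is already H.264;
# otherwise re-encode it with libx264. Treating h264 as the only "safe to copy"
# codec is a conservative assumption, not a full compatibility table.

# Print the video codec arguments for a given input codec name.
mp4_video_args() {
  case "$1" in
    h264) printf '%s\n' "-c:v copy" ;;
    *)    printf '%s\n' "-c:v libx264" ;;
  esac
}

# Real usage (requires ffprobe, which ships with FFmpeg):
#   codec=$(ffprobe -v error -select_streams v:0 -show_entries stream=codec_name \
#             -of default=noprint_wrappers=1:nokey=1 input.avi)
#   # Unquoted on purpose so "-c:v copy" splits into two arguments;
#   # audio is re-encoded to AAC here as an assumed choice.
#   ffmpeg -i input.avi $(mp4_video_args "$codec") -c:a aac output.mp4
```

For a DivX or Xvid AVI, ffprobe typically reports the codec as mpeg4, so this helper chooses to re-encode.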

Now that you know why it matters which codecs a file format supports, let's investigate the information inside an input file. The command is as follows:

$ ffmpeg -i input.mp3

ffmpeg version 4.2.2 Copyright (c) 2000-2019 the FFmpeg developers

built with Apple clang version 11.0.0 (clang-1100.0.33.17)

configuration: --prefix=/usr/local/Cellar/ffmpeg/4.2.2_2

--enable-shared --enable-pthreads --enable-version3

--enable-avresample --cc=clang --host-cflags= --host-ldflags=

--enable-ffplay --enable-gnutls --enable-gpl --enable-libaom

--enable-libbluray --enable-libmp3lame --enable-libopus

--enable-librubberband --enable-libsnappy --enable-libtesseract

--enable-libtheora --enable-libvidstab --enable-libvorbis

--enable-libvpx --enable-libwebp --enable-libx264 --enable-libx265

--enable-libxvid --enable-lzma --enable-libfontconfig

--enable-libfreetype --enable-frei0r --enable-libass

--enable-libopencore-amrnb --enable-libopencore-amrwb

--enable-libopenjpeg --enable-librtmp --enable-libspeex

--enable-libsoxr --enable-videotoolbox --disable-libjack

--disable-indev=jack

libavutil 56. 31.100 / 56. 31.100

libavcodec 58. 54.100 / 58. 54.100

libavformat 58. 29.100 / 58. 29.100

libavdevice 58. 8.100 / 58. 8.100

libavfilter 7. 57.100 / 7. 57.100

libavresample 4. 0. 0 / 4. 0. 0

libswscale 5. 5.100 / 5. 5.100

libswresample 3. 5.100 / 3. 5.100

libpostproc 55. 5.100 / 55. 5.100

Input #0, mp3, from 'input.mp3':

Metadata:

title : 1989 MAZDA FAMILIA // Car

artist : テレビCM

track : 16

album : Visual Signals

date : 2020

encoder : Lavf58.29.100

Duration: 00:00:16.58, start: 0.023021, bitrate: 128 kb/s

Stream #0:0: Audio: mp3, 48000 Hz, stereo, fltp, 128 kb/s

Metadata:

encoder : Lavc58.54

Side data:

replaygain: track gain - 13.100000, track peak - unknown, album gain -

unknown, album peak - unknown,

At least one output file must be specified

After the FFmpeg version and configuration information, the Input section details metadata, duration, encoding and more. The example above confirms that input.mp3 is indeed an MP3 encoded with Lavc/Lavf (FFmpeg's own libavcodec/libavformat libraries).
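When a script needs just one value from this output, such as the duration, it can parse that value directly. Here is a minimal sketch that assumes the "Duration: HH:MM:SS.xx" line format shown above. FFmpeg prints this information to stderr, which is why the usage line includes 2>&1. For anything beyond quick scripts, ffprobe, which ships with FFmpeg, is the more robust tool for reading file information. duration_seconds is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: extract the duration from `ffmpeg -i` output and convert it to seconds.
# Usage: ffmpeg -hide_banner -i input.mp3 2>&1 | duration_seconds
# Assumes a line like "Duration: 00:00:16.58, start: ..."; inputs that report
# "Duration: N/A" (e.g. some live streams) are not handled.
duration_seconds() {
  awk -F'[:, ]+' '/Duration:/ {
    for (i = 1; i <= NF; i++) {
      if ($i == "Duration") {
        # hours * 3600 + minutes * 60 + seconds
        printf "%.2f\n", $(i + 1) * 3600 + $(i + 2) * 60 + $(i + 3)
        exit
      }
    }
  }'
}
```

For the input.mp3 shown above, this prints 16.58.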

Converting from one file format to another is extremely useful and may be all some people use FFmpeg for, but the real fun begins with filters. Filters manipulate audio and video through the libavfilter library. They range from simple color edits to complex algorithms that improve the output.

A filter can apply a single change to an input file or multiple changes to multiple input & output files or anything in between. Filters follow a sequential order as written in the command.

The options -vf / -af (video filter / audio filter) are used for simple filtergraphs (one input, one output), and -filter_complex is used for complex filtergraphs (one or more inputs, one or more outputs). With -filter_complex there is no need for a separate option per media type, since video and audio filters can be used in the same graph.

Below is an example of reversing the input video and audio with both syntax types.

An example using -vf / -af syntax:

$ ffmpeg -i input.mp4 -vf "reverse" -af "areverse" output.mp4

An example using -filter_complex syntax:

$ ffmpeg -i input.mp4 -filter_complex "[0:v]reverse;[0:a]areverse" output.mp4

The -filter_complex option isn't only used when chaining audio and video manipulation. -filter_complex can be used when multiple inputs are required or when temporary streams are created.

Tip: The [0:v] and [0:a] syntax indicates a way to access the input video and audio. See question 17, "What is -map and How is it Used?", for more details.

Tip: For more information see question 15, "How to Chain Multiple Filters?"

Lastly, FFmpeg has streaming capabilities, and filters can be applied and changed at runtime. For more information see question 107, "How to Use Filters with Video Streaming?".

Applying a single filter per command might get the job done, but at times multiple filters are required. You could apply one filter at a time, each time referencing the last output file, but chains apply multiple filters far more efficiently.

There are two types of filter chaining in FFmpeg: linear chains and distinct linear chains. Linear chains are separated by commas, while distinct linear chains are separated by semicolons.

Linear chains are filters applied to an input or temporary stream but do not require creating additional temporary streams before creating the output file.

Below is an example of a linear chain horizontally flipping a video and then inverting the colors:

$ ffmpeg -i input.mp4 -vf "hflip,negate" output.mp4

Distinct linear chains use secondary inputs or temporary streams before creating the final output. In this example, the input is split into two temporary streams, the second stream is reversed, and finally the two temporary streams are mixed together. The result is audio in which the forward and reversed playback are heard at the same time:

$ ffmpeg -i input.mp3 -filter_complex "asplit [main][tmp]; [tmp]areverse[new]; [new][main]amix=inputs=2[out]" -map "[out]" output.mp3

Don't worry if the above code looks intimidating; distinct linear chains become easier to understand the more you use them.

Tip: See question 17, "What is -map and How is it Used?", for more details about distinct linear chains and mapping.

In addition to chaining, some filters take a list of parameters, which can look like chaining itself. A parameter list is written as the filter name, followed by an equals sign, followed by the parameters separated by colons. This example of multiple filter parameters adds two seconds of silence to the end of an audio file:

$ ffmpeg -i input.mp3 -af "apad=packet_size=4096:pad_dur=2" output.mp3

Here apad is the audio padding filter, with the parameters packet_size (packet size) and pad_dur (pad duration).

Tip: It is important to use -af or -filter_complex when applying an audio filter. Using -vf (video filter) does not apply the apad filter.

Tip: Most audio filters start with an 'a' (for audio) followed by the name of the filter. Video and audio filters may share a name, and the 'a' prefix indicates the version specific to audio (for example reverse and areverse).
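When filter strings are assembled in a script (see question 7), the two separators above are easy to mix up. The bash sketch below is an illustration only: filter_with_params and linear_chain are hypothetical helper names, not part of FFmpeg. The first joins a filter's parameters with colons, the second joins the filters of a linear chain with commas:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of FFmpeg) for building filter strings.

# filter_with_params NAME [KEY=VALUE ...]
# Prints NAME=KEY=VALUE:KEY=VALUE (parameters are separated by ':').
filter_with_params() {
  local name=$1
  shift
  if [ "$#" -eq 0 ]; then
    echo "$name"            # a filter without parameters is just its name
    return
  fi
  local IFS=':'             # "$*" joins the arguments with the first IFS character
  echo "$name=$*"
}

# linear_chain FILTER [FILTER ...]
# Prints the filters joined by ',' so they run one after another.
linear_chain() {
  local IFS=','
  echo "$*"
}
```

For example, $ ffmpeg -i input.mp4 -vf "$(linear_chain hflip negate)" output.mp4 rebuilds the linear chain from earlier, and $ ffmpeg -i input.mp3 -af "$(filter_with_params apad packet_size=4096 pad_dur=2)" output.mp3 rebuilds the apad example.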

This is one of the most asked questions about FFmpeg, and for good reason. Applying a filter means the video has to be re-encoded, and unless quality options are given, the encoder's default settings decide how much compression is applied. These defaults are a compromise with speed, since the higher the quality, the longer the command takes to complete.

Quality loss is minimized by choosing a recommended codec and setting the quality explicitly. The H.264 encoder, seen as libx264, is recommended for most video formats. This assumes that your FFmpeg installation is configured with --enable-libx264.

The H.264 codec can be set as seen in the example below:

$ ffmpeg -i input.mp4 -c:v libx264 output.mp4

Setting the constant rate factor (-crf) is the other key setting. CRF is an encoding mode, supported by some codecs, that adjusts the data rate up or down as needed to hold a constant quality level. The lower the number, the higher the quality and the larger the file: 0 is lossless, the default is 23 and 51 is the lowest quality. The FFmpeg H.264 encoding guide calls 17-28 a sane range and considers 17 or 18 to be visually lossless or nearly so, although not technically lossless. Here's an example of using -crf:

$ ffmpeg -i input.mp4 -c:v libx264 -crf 28 output.mp4

In addition to setting the codec and constant rate factor, a preset can be selected. The preset determines the trade-off between encoding speed and compression ratio: a slower preset takes longer to compute but compresses more efficiently, so at the same -crf it generally produces a smaller file of similar quality. The presets, from fastest to slowest, are as follows:

- ultrafast
- superfast
- veryfast
- faster
- fast
- medium (default)
- slow
- slower
- veryslow

For the highest video quality with all 3 settings, use the following command. Because -crf 0 is lossless, expect a very large file and a long encode:

$ ffmpeg -i input.mp4 -c:v libx264 -crf 0 -preset veryslow output.mp4

Tip: If a filter is causing quality to degrade, add these options between the input and output.

Tip: Although the question mentions -filter_complex, these options also apply when using -vf (video filter).
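To see the -crf and -preset trade-off on your own footage, a small bash loop can encode the same clip at several CRF values and print each file size. This is a sketch that assumes an FFmpeg build configured with --enable-libx264; crf_sweep and its output naming scheme are hypothetical, and the audio is copied unchanged:

```shell
#!/usr/bin/env bash
# crf_sweep INPUT PRESET CRF [CRF ...]
# Hypothetical helper: encodes INPUT once per CRF value with libx264 and the
# given preset, then prints each output file with its size in bytes.
crf_sweep() {
  local input=$1 preset=$2 crf out size
  shift 2
  for crf in "$@"; do
    out="${input%.*}_crf${crf}_${preset}.mp4"
    # -nostdin keeps ffmpeg from reading the terminal inside the loop,
    # -y overwrites results of a previous run, -c:a copy leaves the audio as-is.
    ffmpeg -nostdin -y -loglevel error -i "$input" \
      -c:v libx264 -crf "$crf" -preset "$preset" -c:a copy "$out" || return 1
    size=$(wc -c < "$out" | tr -d ' ')   # some wc versions pad the number
    printf '%s\t%s bytes\n' "$out" "$size"
  done
}
```

For example, crf_sweep input.mp4 slow 18 23 28 writes input_crf18_slow.mp4, input_crf23_slow.mp4 and input_crf28_slow.mp4 so the sizes and picture quality can be compared side by side.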

The -map functionality is an advanced feature that allows the user to specify the input audio or video for the output file. In addition, virtual streams may be created to apply complex manipulations that can be accessed with -map.

Normally, -map isn't required because FFmpeg automatically selects the audio and video streams for the output. Here an input is converted to an output and FFmpeg knows to use the same audio and video for the final result:

$ ffmpeg -i input.mp4 output.mp4

If this were written using -map, the example would look like this:

$ ffmpeg -i input.mp4 -map 0:v -map 0:a output.mp4

Here the map 0:v is the video of the first input (index 0) and 0:a is the audio of the first input (index 0).

When multiple inputs are available, -map specifies which streams are included in the output. In the example below, 0:v is the video of input1.mp4 and 1:a is the audio of input2.mp4. This results in a new output with the video from the first input and the audio from the second:

$ ffmpeg -i input1.mp4 -i input2.mp4 -map 0:v -map 1:a output.mp4

When a filter is used in a command with two inputs, the specific input must be set. The syntax is a little different in this situation, as the filter must know which input it applies to.

In this example, the output video is from input1.mp4 and a filter is used on the audio of input2.mp4.

The audio volume is increased by 10dB because the second input was specified as the one to apply the filter to:

$ ffmpeg -i input1.mp4 -i input2.mp4 -filter_complex "[1:a]volume=10dB[new_audio]" -map 0:v -map "[new_audio]" output.mp4

The volume filter is applied to [1:a], the audio from input2.mp4, and a new temporary stream, [new_audio], must be created for -map to set the new audio in the output. A temporary stream is mapped by its label in square brackets, as in -map "[new_audio]"; the quotes stop the shell from treating the brackets as a filename pattern.

If an input has multiple audio tracks, as is common in MKV files, a specific audio track is accessed with the syntax below:

$ ffmpeg -i input.mkv -map 0:v -map 0:a:1 output.mp4

The additional syntax adds a third value, the index of the audio track. If the above MKV had 2 audio tracks, 0:a:0 or 0:a:1 could be used to select the appropriate one. This is useful when an MKV has multiple language tracks but the output only needs one.

In addition to being created, a temporary stream can also have a filter applied to it through chaining. Here the same concept from the volume example is used, except the [new_audio] stream also has an areverse filter applied to it:

$ ffmpeg -i input1.mp4 -i input2.mp4 -filter_complex "[1:a]volume=10dB[new_audio];[new_audio]areverse[reversed_audio]" -map 0:v -map "[reversed_audio]" -shortest output.mp4

Here a new audio stream, [reversed_audio], is created and set for the output. The final result is the video from input1.mp4 with the audio from input2.mp4, 10dB louder and reversed.

The way -map accesses a stream's audio and video is very similar to dictionaries in the Swift programming language. A dictionary has a key and a value, [key:value], and in FFmpeg the key is the input index while the value selects either the audio or the video.

Tip: If the output requires video and audio both must be mapped.

Before exploring how to use all the amazing and powerful filters, let's first learn one more useful trick; converting an entire directory. Whether it's to convert an entire folder or apply a filter to multiple files, cycling through files is bound to come up in any workflow.

In the example below, this one-liner converts all WAV files in a folder to MP3:

$ for i in *.wav; do ffmpeg -i "$i" "${i%.*}.mp3"; done

This keeps a copy of the input WAV file while additionally adding a new MP3 file to the same folder.
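The one-liner above can be turned into a slightly safer reusable function. This sketch assumes bash; convert_all and its output directory argument are hypothetical names. It skips the loop body when no files match, passes -nostdin so ffmpeg doesn't consume the terminal's input inside the loop, and uses -n so existing outputs are never overwritten:

```shell
#!/usr/bin/env bash
# convert_all FROM_EXT TO_EXT [OUTPUT_DIR]
# Hypothetical helper: converts every *.FROM_EXT file in the current directory
# to TO_EXT, writing the results into OUTPUT_DIR (default: converted).
# The original files are left untouched.
convert_all() {
  local from=$1 to=$2 outdir=${3:-converted} f
  mkdir -p "$outdir"
  for f in *."$from"; do
    [ -e "$f" ] || continue     # no matches: the pattern stays literal, so skip it
    ffmpeg -nostdin -n -i "$f" "$outdir/${f%.*}.$to"
  done
}
```

For example, convert_all wav mp3 converts every WAV file into the converted folder, and convert_all flac mp3 mp3s does the same for FLAC files into a folder named mp3s.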

All FFmpeg commands can use this same format, but at some point a shell script might be required to complete complex filtering. See question 7, "How to Use FFmpeg in Various Languages?"

Congratulations, you now understand the FFmpeg basics. The differences between codecs and formats, as well as filters and maps, are important for the rest of this book, so feel free to review these questions a couple of times. Now that you're a pro at the basics, let's script a few basic audio conversions.

Basic Audio Conversions

At this point, you've grasped how FFmpeg works in theory and are ready to start using it in practice. To start off, let's take the most popular audio formats and convert them to MP3. This book starts with audio to introduce concepts about syntax, functionality and best practices. Everything found in the audio section of this book can also be applied to videos or to other audio formats.

At times, you may have a video that you wish to extract the audio from. This is easily done by simply converting the video into an audio format. For example, here an MP4 is converted to an MP3:

$ ffmpeg -i input.mp4 -vn output.mp3

A new argument -vn (no video) is used to ensure the encoded MP3 is only audio. FFmpeg is smart enough that this argument is optional in most cases.

If for any reason converting a video to an audio format results in a broken MP3, setting the audio codec to libmp3lame may fix the issue:

$ ffmpeg -i input.mp4 -vn -acodec libmp3lame output.mp3

For reference, here's the simplest way to convert an MP4 to an MP3:

$ ffmpeg -i input.mp4 output.mp3

The following questions quickly convert the most common audio formats into MP3. FFmpeg supports hundreds of different formats; to check whether your specific audio format is supported, see question 11, "What are All the Formats FFmpeg Supports?", or check the full list at the back of the book.

Ogg is an open container format by the Xiph.Org Foundation with no restrictions from software patents. Ogg offers higher audio quality at smaller file sizes but is not commonly supported natively on physical devices (MP3 players, iPhone, etc.).

Fortunately, converting Ogg to MP3 is simple. With audio-to-audio conversions, setting -acodec may be redundant, as seen below:

$ ffmpeg -i input.ogg output.mp3

Tip: If an MP3 codec is required add -acodec libmp3lame.

The Free Lossless Audio Codec, or FLAC, is another free audio codec and container, known for its lossless audio quality. Oddly enough, FLAC is also maintained and developed by the Xiph.Org Foundation. Despite its limited player support, audiophiles consider FLAC to be one of the best formats for its Hi-Res audio.

Although FLAC has superior audio quality, MP3 is simply more convenient, and a FLAC file is converted to MP3 as follows:

$ ffmpeg -i input.flac -ab 320k output.mp3

This example introduces -ab, the audio bitrate. The higher the bitrate, the higher the quality but the larger the file.

Tip: This conversion automatically keeps metadata (v3.2+)

WAV is probably the most common audio format next to MP3. The WAV format was developed by Microsoft and IBM and first released in 1991. WAV files usually hold uncompressed, high-quality audio, which makes them large, so converting to MP3 may be required:

$ ffmpeg -i input.wav -ab 320k output.mp3

Here -ab sets the audio bitrate. The higher the value, the better the audio quality but the larger the file. This argument is optional.

Converting one audio file to another format is a simple but powerful tool. There are even people on http://fiverr.com (a quick gig freelancing website) who have had 100s of jobs solely on the basis of converting one audio file to another. You too now have that ability.

Want to sign up for Fiverr? Feel free to use my referral code and "Earn Up To $100"* in credit: http://www.fiverr.com/s2/6229935ae0 * according to Fiverr referral terms and services

Audio Duration Editing

Non-linear audio editing with FFmpeg is possible and convenient for quick duration changes. Traditionally, a program with a graphical user interface (GUI), like Audacity, would be used to trim parts of the track. In this section you'll learn how to use a terminal to remove a few seconds from the beginning or a few minutes from the end of an audio track. Really, any kind of cutting, chopping or trimming can be accomplished with a little bit of practice.

Merging audio with FFmpeg is one of the most searched questions on the internet and couldn't be any easier. The only catch is that a text file listing the MP3 tracks must be made before merging can begin. Below is an example list inside the file (file.txt):

file 'track1.mp3'
file 'track2.mp3'
file 'track3.mp3'

Next, using the following FFmpeg one-liner, all three MP3 files are merged into one long track:

$ ffmpeg -f concat -i file.txt -c copy output.mp3

concat, or concatenate, is one of the most common ways of combining media files in FFmpeg. Note that the MP3 files in the list must have the same bitrate and sample rate for concat to work; additional conversion might be required.

In this example, the input is not a media file but a text file (file.txt) that lists media files. Thus -f is used to "force" the input format to concat, so FFmpeg reads the list instead of treating the text file as media.

concat

Indicates the argument that combines multiple media files

-f

Indicates a forced input or output
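Writing file.txt by hand gets tedious for long playlists. The bash sketch below (make_concat_list is a hypothetical helper name) prints one file line per argument. It also escapes any single quote in a file name as '\'' , which is how FFmpeg's quoting rules expect a literal quote to be written inside a single-quoted string:

```shell
#!/usr/bin/env bash
# make_concat_list FILE [FILE ...]
# Hypothetical helper: prints an FFmpeg concat list, one "file '...'"
# line per argument, in the order given.
make_concat_list() {
  local q="'\\''"   # '\'' closes the quote, adds a literal ', then reopens the quote
  local f escaped
  for f in "$@"; do
    escaped=${f//"'"/"$q"}
    printf "file '%s'\n" "$escaped"
  done
}
```

For example, make_concat_list track*.mp3 > file.txt followed by $ ffmpeg -f concat -i file.txt -c copy output.mp3 merges every matching track. The shell expands track*.mp3 alphabetically, so track10.mp3 comes before track2.mp3 unless the numbers are zero-padded.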

The next 3 questions deal with trimming the length of an audio track. Trimming seems like it should be easy but requires several arguments that can be confusing at first. Once you are familiar with the syntax, trimming isn't difficult. To understand the next 3 questions, let's look at the common FFmpeg arguments used to trim audio.

-ss [time]

Indicates seeking (the start time of the audio) and must be set before the input audio

-t [time]

Indicates the input audio duration. Must be set manually

-async [samples per second]

"Stretches/squeezes" the audio stream to match the timestamps. -async 1 is a special case that corrects only the start of the audio stream

[time]

The time format, in order of hours, minutes and seconds (HH:MM:SS)

To introduce the concept of trimming with FFmpeg, this example removes audio from the start of a track.

Here the input file has a 1 minute duration with a 10 second introduction of silence. To remove that silence, it's important to identify these two numbers because trimming requires tricky subtraction. Let's look at the example below:

$ ffmpeg -ss 00:00:10 -i input.mp3 -t 00:00:50 -async 1 output.mp3

As outlined in "A Note About Trimming", -ss is used to seek to a point in the audio and -t is the duration of the playback. Seeking 10 seconds into a 1 minute track leaves 50 seconds, so -t is set to 00:00:50 and the result is the track with the first 10 seconds removed.

Tip: The -ss argument must be set before the input file; otherwise the seeking is applied to the output regardless of the input.

A minor drawback of trimming is setting the audio duration value manually. Luckily, FFmpeg ships with a tool called ffprobe, which reports the exact duration:

$ ffprobe -i input.mp3 -show_entries format=duration -v quiet -of csv="p=0" -sexagesimal

Challenge: See question 7, "How to Use FFmpeg in Various Languages?", for ideas on how to use this output as a variable and dynamically set track duration.

Trimming a couple of seconds off the end of a track is easy but again requires a different way of thinking about time in FFmpeg. As with trimming from the start of an audio track, trimming from the end uses the -t (duration) argument, but without any seeking.

In this example, the input file is 1 minute long, and 10 seconds must be removed from the end:

$ ffmpeg -t 00:00:50 -i input.mp3 -async 1 output.mp3

By setting a duration shorter than the actual audio duration, FFmpeg automatically trims the input. This is why setting the duration manually, instead of letting FFmpeg handle it automatically, is useful.

Tip: The -t argument must be set before the input; otherwise FFmpeg thinks you're forcing a duration on the output regardless of the input.

The last two questions covered applying a trim at the start of an audio track and a trim to the end, but what does the command look like combined?

In this example, the input file is 1 minute long, and 10 seconds must be removed from both the start and the end of the audio. This results in a new 40 second audio clip:

$ ffmpeg -t 00:00:50 -i input.mp3 -ss 00:00:10 -async 1 output.mp3

In question 24, "How to Trim 'x' Seconds From the Start of an Audio Track?", the seeking argument was used to trim the start of an audio track.

In question 25, "How to Trim 'x' Seconds From the End of an Audio Track?", using a duration shorter than the input audio trimmed the end of the audio.

Together, the two can manipulate the length of audio quickly and easily.
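The subtraction behind these trims can also be scripted. This bash sketch uses hypothetical helper names (seconds_to_hms, trim_args) and assumes whole seconds. It formats times as HH:MM:SS and prints the -ss and -t values that remove START seconds from the beginning and END seconds from the end of a track:

```shell
#!/usr/bin/env bash
# seconds_to_hms SECONDS -> HH:MM:SS (whole seconds only)
seconds_to_hms() {
  local total=$1
  printf '%02d:%02d:%02d\n' $((total / 3600)) $((total % 3600 / 60)) $((total % 60))
}

# trim_args DURATION START END
# Hypothetical helper: prints "-ss START -t KEEP", where KEEP = DURATION - START - END.
trim_args() {
  local duration=$1 start=$2 end=$3
  local keep=$((duration - start - end))
  if [ "$keep" -le 0 ]; then
    echo "trim_args: nothing left to keep" >&2
    return 1
  fi
  echo "-ss $(seconds_to_hms "$start") -t $(seconds_to_hms "$keep")"
}
```

Combined with ffprobe, the duration no longer has to be typed by hand. ffprobe prints a decimal such as 60.024000, so ${duration%.*} drops the fraction, and $(trim_args ...) is left unquoted on purpose so it splits into four arguments placed before -i:

$ duration=$(ffprobe -v quiet -show_entries format=duration -of csv=p=0 input.mp3)

$ ffmpeg $(trim_args "${duration%.*}" 10 10) -i input.mp3 output.mp3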

A lot of FFmpeg programmers get into FFmpeg because they want to convert or trim a file. You can now do both, but don't stop here: learning various audio and video filters takes your programming skills to the next level. This is where FFmpeg becomes creative and artistic, and where its power really shines.

Top Audio Filters

This section covers the most common audio filters that are useful for everyday editing. Since this is an audio section, the -af option is used when a single audio source is involved. When two or more audio sources are used, -filter_complex is required. For more information, see question 14, "How to Use Filters (-vf/-af VS -filter_complex)?".

Here the topics of adjusting volume, applying crossfades, normalizing clips, adding echo and so much more are covered. Each question builds off the last to increase difficulty. Let's master the art of audio manipulation!

As you learn more about FFmpeg, you'll find filters that solve problems in a matter of seconds instead of the minutes they take in editing software. One example of this is adjusting the volume of an input. In the following one-liner, the volume is increased by 10dB (decibels):

$ ffmpeg -i input.mp3 -af "volume=volume=10dB" output.mp3

The volume value can also be a negative value to decrease the volume:

$ ffmpeg -i input.mp3 -af "volume=volume=-10dB" output.mp3

Tip: The volume filter also works on the audio of a video input.

volume

Indicates the volume filter name

volume

Indicates the volume value (input_volume * value = output_volume)

The volume filter has several more parameters to precisely control the sound. For more information, visit: http://ffmpeg.org/ffmpeg-filters.html#volume

Making a music mix? Easily crossfade each track with FFmpeg within seconds. Crossfading creates a smooth fade in and out between each audio file. In this example a crossfade of 1 second is applied between both inputs:

$ ffmpeg -i input1.mp3 -i input2.mp3 -filter_complex "acrossfade=duration=1:curve1=exp:curve2=exp" output.mp3

acrossfade

Indicates the filter name for crossfading

duration, d

Indicates the duration of the crossfade effect

curve1

Indicates the crossfader curve for the first input

curve2

Indicates the crossfader curve for the second input

Although both crossfade curves in this example are exp, or *exponential*, FFmpeg supports multiple curves. Below are 6 recommended curves:

log

Logarithmic

par

Parabola

ipar

Inverted parabola

losi

Logistic sigmoid

cub

Cubic

nofade

No fade applied

For more information on crossfades and curves, visit: https://ffmpeg.org/ffmpeg-filters.html#afade
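To crossfade more than two tracks, each acrossfade can feed the next one through a temporary stream, just like the distinct linear chains in question 15. The bash sketch below (crossfade_graph is a hypothetical helper) prints such a graph for N inputs, using the same duration, curve1 and curve2 parameters as the example above:

```shell
#!/usr/bin/env bash
# crossfade_graph N SECONDS
# Hypothetical helper: prints a -filter_complex graph that crossfades inputs
# 0..N-1 in order. Each intermediate result gets a temporary label [xI];
# the final stream is labeled [out].
crossfade_graph() {
  local n=$1 d=$2 i label prev="[0:a]" graph=""
  if [ "$n" -lt 2 ]; then
    echo "crossfade_graph: need at least 2 inputs" >&2
    return 1
  fi
  for ((i = 1; i < n; i++)); do
    if [ "$i" -eq $((n - 1)) ]; then
      label="[out]"
    else
      label="[x$i]"
    fi
    graph+="${prev}[$i:a]acrossfade=duration=$d:curve1=exp:curve2=exp${label};"
    prev=$label
  done
  echo "${graph%;}"             # drop the trailing ';'
}
```

For example, three tracks with 1 second crossfades:

$ ffmpeg -i track1.mp3 -i track2.mp3 -i track3.mp3 -filter_complex "$(crossfade_graph 3 1)" -map "[out]" mix.mp3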

Ever get an audio file that just isn't loud enough, but when the volume is increased it clips? The solution to this problem is to run the audio through a normalization algorithm. The loudnorm filter measures perceived loudness and brings it to a target level while keeping the true peak (the actual maximum of the waveform) below a set ceiling.

Radio and TV broadcasters follow guidelines for permitted loudness levels, which sets a standard for consistent volume throughout a track and between programs. This normalization standard is called EBU R128, and it is what the loudnorm filter is built on. The filter aims to raise the volume without audibly changing the sound or its quality.

If this is starting to feel complex, don't worry; here are the recommended settings for normalizing audio with loudnorm:

$ ffmpeg -i input.mp3 -af "loudnorm=I=-16:LRA=11:TP=-1.5" output.mp3

Tip: The above example has been found all over the internet, without a clear identity of who invented these exact variables. Play around with the values to get a sound you prefer.

loudnorm

Indicates the name of the normalization filter

I, i

Indicates the integrated loudness (-70 to -5.0 with default -24.0)

LRA, lra

Indicates the loudness range (1.0 to 20.0 with default 7.0)

TP, tp

Indicates the max true peak (-9.0 to 0.0 with default -2.0)

For more information on loudnorm, visit: https://ffmpeg.org/ffmpeg-filters.html#loudnorm

Adding an echo (or reflected sound) to an audio track is a great way to add ambiance or to smooth out choppy playback. Whether you want it is a matter of taste, but it's so simple to use that it's a nice filter to have in your back pocket.

Here the gain, delay and decay settings of aecho make for a great airy echo effect that fades over time:

$ ffmpeg -i input.mp3 -af "aecho=in_gain=0.5:out_gain=0.5:delays=500:decays=0.2" output.mp3

In this filter the delay and decay arguments are plural because multiple delays and decays can be stacked using the | separator for extra echo control, as seen below:

$ ffmpeg -i input.mp3 -af "aecho=in_gain=0.5:out_gain=0.5:delays=500|200:decays=0.2|1.0" output.mp3

Tip: The number of delays and decays must match, so if there are 2 delay values, there must be 2 decay values.

aecho

Indicates the name of the echo filter

in_gain

Indicates the input gain of the reflected signal (default 0.6)

out_gain

Indicates the output gain of the reflected signal (default 0.3)

delays

Indicates the list of time intervals (in milliseconds) between the original signal and reflections (0.0 to 90000.0 with default 1000)

decays

Indicates the list of loudness of each reflected signal (0.0 to 1.0 with default 0.5)

Tip: If this filter is applied to a video input, a pixel format may need to be set with -pix_fmt yuv420p. YUV is a color encoding system for images and video that some players require.
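Because a mismatched list is easy to miss in a long command, the tip about equal numbers of delays and decays can be checked in a script. In this bash sketch, aecho_filter is a hypothetical helper that counts the | separators in both lists and only prints the filter when they match:

```shell
#!/usr/bin/env bash
# aecho_filter IN_GAIN OUT_GAIN DELAYS DECAYS
# Hypothetical helper: DELAYS and DECAYS are '|'-separated lists,
# e.g. "500|200" and "0.2|1.0". Refuses lists of different lengths.
aecho_filter() {
  local in_gain=$1 out_gain=$2 delays=$3 decays=$4 nd nk
  nd=$(printf '%s' "$delays" | tr -cd '|' | wc -c)   # number of '|' separators
  nk=$(printf '%s' "$decays" | tr -cd '|' | wc -c)
  if (( nd != nk )); then
    echo "aecho_filter: $((nd + 1)) delays but $((nk + 1)) decays" >&2
    return 1
  fi
  echo "aecho=in_gain=$in_gain:out_gain=$out_gain:delays=$delays:decays=$decays"
}
```

For example, $ ffmpeg -i input.mp3 -af "$(aecho_filter 0.5 0.5 '500|200' '0.2|1.0')" output.mp3 rebuilds the stacked echo from above, while a mismatched call prints an error instead of a filter.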

Changing the tempo of an audio file is easy with atempo. This filter only accepts one value, a number between 0.5 and 100. In this example, the audio tempo is increased by 50%:

$ ffmpeg -i input.mp3 -af "atempo=1.50" output.mp3

So how does 1.50 equal 50%? The value is a speed multiplier: any value above 1.0 increases the tempo and any value below 1.0 decreases it, so 1.50 plays at 150% of the original speed.

Here the tempo is reduced by 50% instead:

$ ffmpeg -i input.mp3 -af "atempo=0.50" output.mp3

Reducing the tempo can make the playback choppy and robotic. This is because changing the tempo is a time-stretch: the audio is slower or faster, but the pitch does not change.

Tip: In question 32, "How to Change the Pitch / Sample Rate of an Audio Track?", a preferred method of decreasing tempo with a smoother playback can be explored.
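If a tempo factor falls outside what a single atempo accepts, several atempo filters can be chained, since their factors multiply. This bash and awk sketch assumes the 0.5 to 100 range stated above (some older builds cap atempo at 2.0, in which case the 100 below would become 2); atempo_chain is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# atempo_chain FACTOR
# Hypothetical helper: prints a comma-separated atempo chain whose factors
# multiply to FACTOR, keeping every stage within 0.5..100.
atempo_chain() {
  awk -v f="$1" 'BEGIN {
    if (f <= 0) { print "atempo_chain: factor must be positive" > "/dev/stderr"; exit 1 }
    chain = ""
    while (f < 0.5) { chain = chain "atempo=0.5,"; f = f / 0.5 }   # slow down in halves
    while (f > 100) { chain = chain "atempo=100,"; f = f / 100 }   # speed up in 100x steps
    printf "%satempo=%g\n", chain, f
  }'
}
```

For example, $ ffmpeg -i input.mp3 -af "$(atempo_chain 0.25)" output.mp3 plays at a quarter of the original speed using atempo=0.5,atempo=0.5.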

Changing the pitch of an audio track means the tempo stays the same but the audio pitch increases/decreases. There isn't a native way to change the pitch without also changing the playback speed. Luckily, chaining the atempo and asetrate filters together can achieve this effect.

In this example, the pitch of the audio decreases by 50% by changing the sample rate with asetrate, which by itself results in a longer playback duration:

$ ffmpeg -i input.mp3 -af "asetrate=44100*0.5" output.mp3

Tip: Changing the sample rate to change the pitch might cause problems with some players or websites. For example, Bandcamp requires audio with a sample rate of 44.1kHz.

To keep the pitch change while restoring the preferred sample rate, the aresample filter is needed, as seen below:

$ ffmpeg -i input.mp3 -af "asetrate=44100*0.5,aresample=44100" output.mp3

As stated earlier in this question, for a true pitch change the tempo shouldn't change. With atempo, the pitch can change while the tempo stays nearly the same. In the example below, an atempo between 1.5 and 1.7 should result in a proper pitch change:

$ ffmpeg -i input.mp3 -af "asetrate=44100*0.5,atempo=1.5,aresample=44100" output.mp3
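If you'd rather compute the compensating tempo than tune it by ear: asetrate multiplies both pitch and speed by the same factor, so an atempo of 1/factor (2.0 for the 0.5 example above) restores the original tempo exactly. This sketch assumes you know the input's sample rate (44100 here, as in the examples), and pitch_filter is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# pitch_filter SAMPLE_RATE FACTOR
# Hypothetical helper: prints an -af chain that shifts pitch by FACTOR while
# keeping the tempo (atempo = 1/FACTOR) and the original sample rate.
# FACTOR is limited to 0.01..2 so that 1/FACTOR stays within atempo's 0.5..100.
pitch_filter() {
  awk -v sr="$1" -v f="$2" 'BEGIN {
    if (f < 0.01 || f > 2) { print "pitch_filter: factor must be in [0.01, 2]" > "/dev/stderr"; exit 1 }
    printf "asetrate=%d,atempo=%g,aresample=%d\n", int(sr * f + 0.5), 1 / f, sr
  }'
}
```

For example, $ ffmpeg -i input.mp3 -af "$(pitch_filter 44100 0.5)" output.mp3 lowers the pitch by an octave at the original tempo. If unsure of the input's sample rate, $ ffprobe -v error -select_streams a:0 -show_entries stream=sample_rate -of csv=p=0 input.mp3 reports it.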

There are no correct answers here for what works best, that's up to you. Play around with each version, find a sound that works with your ears and what you are trying to accomplish.

We Are コンピューター生成 by メトロイヤー

an album made entirely with FFmpeg

For example, I've used pitching down, echoes and randomized splicing to create sample-based Vaporwave music. The entire album was generated with FFmpeg using vintage Japanese commercials as input. Personally, I am very pleased with the sound.

Listen to it here: https://mthu.bandcamp.com/album/we-are

FFmpeg also has the ability to generate sounds or tones natively. With the right kind of scripting, you could make chiptune-like music straight from the terminal. In this example, generating a single 2000Hz tone for 10 seconds is enough for now:

$ ffmpeg -f lavfi -i "sine=frequency=2000:duration=10" output.mp3

A new argument, -f lavfi, is introduced here because this time there is no input media. Libavfilter, or lavfi as seen above, is a virtual input device that lets source filters, like sine, generate data for the output.

sine

Indicates the sine filter name. Sine generates signals made from sine waves

frequency, f

Indicates the tone or frequency (with default 440Hz)

duration, d

Indicates the duration of the generated frequency output
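As a starting point for the "right kind of scripting" mentioned above, musical notes can be turned into frequencies with the equal-temperament formula f = 440 * 2^((n - 69) / 12), where n is a MIDI note number and note 69 is A4 at 440Hz. The sine_note helper below is hypothetical and only prints the sine source string:

```shell
#!/usr/bin/env bash
# sine_note MIDI_NOTE SECONDS
# Hypothetical helper: prints a lavfi sine source for the given MIDI note,
# using equal temperament with A4 (MIDI 69) = 440 Hz.
sine_note() {
  awk -v n="$1" -v d="$2" 'BEGIN {
    printf "sine=frequency=%.2f:duration=%s\n", 440 * 2 ^ ((n - 69) / 12), d
  }'
}
```

For example, $ ffmpeg -f lavfi -i "$(sine_note 60 1)" middle_c.mp3 generates one second of middle C (about 261.63Hz). Looping over several note numbers and merging the results as in question 23 produces a simple melody.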

Tip: This requires an FFmpeg build configured with --enable-libflite. Full tutorial: http://johnriselvato.com/how-to-install-flite-flitevox-for-ffmpeg/

Generating text to speech locally on a computer is a great feature to have. From YouTube videos to memes on Twitter, once you learn this filter, you'll be creating content at new speeds.

There are two ways to set the text and generate speech with flite: from a file or inline in the command.

An example of text from a text file (speech.txt):

$ ffmpeg -f lavfi -i "flite=textfile=speech.txt" output.mp3

An example of text inside the command:

$ ffmpeg -f lavfi -i flite=text='Hello World!' output.mp3

For more information about flite, visit: https://ffmpeg.org/ffmpeg-filters.html#flite

A low-pass filter is an audio filter that cuts off frequencies that are higher than the desired cutoff frequency. This filter is popular in music production as it can be used to soften audio or remove undesired noise.

With FFmpeg the filter lowpass is used by setting the desired cutoff frequency in Hz. In this example, any frequency above 3200Hz is cut off:

$ ffmpeg -i input.mp3 -af "lowpass=f=3200" output.mp3

The low-pass filter is great for isolating the bass of the audio. For the opposite effect, a high-pass filter is used, which can be found in question 36, "How to Add a High-Pass Filter to an Audio Track?".

A high-pass filter is an audio filter that cuts off frequencies that are lower than the desired cutoff frequency. This is often used to cut out bass from an audio source while leaving the treble side of the audio.

The filter in FFmpeg is called highpass and is used by setting the desired cutoff frequency in Hz. For example, here bass lower than 300Hz is removed:

$ ffmpeg -i input.mp3 -af "highpass=f=300" output.mp3

The high-pass filter removes the lower frequencies; for the opposite effect, a low-pass filter is used. For more information on the low-pass filter, read question 35, "How to Add a Low-Pass Filter to an Audio Track?".
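Both filters can also be combined in one linear chain to keep only a band of frequencies, for example a narrow, roughly telephone-like range. In this sketch band_filter is a hypothetical helper, the 300 to 3200Hz band is only an example, and whole numbers of Hz are assumed:

```shell
#!/usr/bin/env bash
# band_filter LOW_HZ HIGH_HZ
# Hypothetical helper: prints a linear chain that removes everything below
# LOW_HZ (highpass) and then everything above HIGH_HZ (lowpass).
band_filter() {
  local low=$1 high=$2
  if [ "$low" -ge "$high" ]; then
    echo "band_filter: LOW_HZ must be below HIGH_HZ" >&2
    return 1
  fi
  echo "highpass=f=$low,lowpass=f=$high"
}
```

For example, $ ffmpeg -i input.mp3 -af "$(band_filter 300 3200)" output.mp3 keeps only the frequencies between 300Hz and 3200Hz.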

This covers the most common questions about audio filters. The next few questions are specific to audio inside video formats. Here removing audio, mixing new audio and even replacing audio in a video is covered.

Although this next command isn't exactly a filter, it's still useful to know how to remove, or mute, the audio in an MP4.

Tip: If extracting audio from a video is required, review question 19, "How to Extract Audio From a Video?".

Below is the quickest way to remove audio from any form of video using the -an option:

$ ffmpeg -i input.mp4 -an -vcodec copy output.mp4

Tip: -an indicates no audio output.

Adding an additional audio source on top of the audio already in a video can be accomplished with the amix filter. This might be useful for adding background music to a commentary video. The command is as follows:

$ ffmpeg -i input.mp4 -i input.mp3 -filter_complex "[0:a][1:a]amix=inputs=2[a]" -map 0:v -map "[a]" output.mp4

A common issue with amix is that the volume of one input overpowers the other. Turning the louder input down can resolve this. In the example below, the volume of the first input's audio [0:a] is reduced to 40%:

$ ffmpeg -i input.mp4 -i input.mp3 -filter_complex "[0:a]volume=0.40[a0];[a0][1:a]amix=inputs=2:duration=shortest[a]" -map 0:v -map "[a]" -shortest output.mp4

The -shortest option ends the output when its shortest stream ends. For example, if the MP4 is longer than the MP3, the output is cut to the MP3's length.

Tip: See question 17, "What is -map and How is it Used?", for more details.

Tip: If replacing the audio on a video is required, reference question 39, "How to Replace the Audio on a Video?", for more information.

There are times when the audio on a video needs to be replaced. No filters are needed to replace the audio, but understanding -map becomes important:

$ ffmpeg -i input.mp4 -i input.mp3 -map 0:v -map 1:a -shortest output.mp4

Here the video from the first input and the audio from the second input are mapped to the output.

Tip: See question 17, "What is -map and How is it Used?", for more details.

Challenge: Using the same concepts in the above example, if two inputs were videos, how would you replace the first input video with the second input video but keep the first input's audio?

These examples only cover the surface of what audio filters FFmpeg has available. There are over 90 additional audio filters to accomplish even more tasks with new filters being added yearly.

For more information, visit: https://ffmpeg.org/ffmpeg-filters.html#Audio-Filters

Basic Video Conversions

In the previous section, basic audio conversions showed how simple yet powerful FFmpeg can be for audio. In this section the most common video conversions will get you familiar with using FFmpeg with video. In the following questions, you'll learn how to convert the most common video formats into MP4 and a few methods of file compression.

MOV is a video container format developed by Apple but compatible with most software and players. MOV and MP4 are closely related and commonly hold the same codecs, such as H.264, which makes the conversion from MOV to MP4 very simple, as seen below:

$ ffmpeg -i input.mov output.mp4

Tip: MOV files are usually larger in size but of higher video quality in comparison to MP4. However, the MOV format does run the risk of compatibility issues because it is maintained for Apple products.

Tip: Instagram and Twitter support MOV video uploads.

The Matroska Multimedia Container or MKV is a free open container format that differs from formats like MOV or MP4. MKV files can store an unlimited amount of video, audio, pictures or subtitle tracks in one file. This stacking of media has benefits for videos that require audio or subtitles with multiple languages.

Because of the nature of MKV, converting to MP4 requires a few arguments, as seen in the example below:

$ ffmpeg -i input.mkv -c:a copy -c:v libx264 output.mp4

In this example, the command must specify that the audio codec is copied but the video must be encoded with libx264.

If a specific audio track is required for conversion, the command requires mapping the specific audio to the output. For example, if the MKV has two audio tracks and the second track is required for the output, the command is as follows:

$ ffmpeg -i input.mkv -map 0:v -map 0:a:1 output.mp4
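To find which audio track holds which language before choosing 0:a:N, ffprobe can list the audio streams with their language tags. The bash sketch below assumes ffprobe prints one line per audio stream in the form index,codec_name,language (check the output of your build), and audio_track_for_lang is a hypothetical helper that turns a language code into the matching -map specifier:

```shell
#!/usr/bin/env bash
# audio_track_for_lang LANG
# Hypothetical helper: reads "index,codec,language" lines (one per audio
# stream of input 0) on stdin and prints the -map specifier 0:a:N of the
# first stream tagged LANG. N counts audio streams only, starting at 0.
audio_track_for_lang() {
  local want=$1 n=0 index codec lang
  while IFS=, read -r index codec lang; do
    if [ "$lang" = "$want" ]; then
      echo "0:a:$n"
      return 0
    fi
    n=$((n + 1))
  done
  return 1            # no audio stream with that language tag
}
```

For example, to keep the Japanese audio track:

$ track=$(ffprobe -v error -select_streams a -show_entries stream=index,codec_name:stream_tags=language -of csv=p=0 input.mkv | audio_track_for_lang jpn)

$ ffmpeg -i input.mkv -map 0:v -map "$track" output.mp4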

Tip: Instagram and Twitter do not support MKV video uploads.

The AVI format is a multimedia container format developed and maintained by Microsoft. AVI has support for multiple video codecs including MPEG-4. This makes the conversion to MP4 simple:

$ ffmpeg -i input.avi output.mp4

If the input.avi codec is not MPEG-4, setting the libx264 codec might be required:

$ ffmpeg -i input.avi -c:v libx264 output.mp4

Tip: Instagram and Twitter do not support AVI video uploads.

FLV or Flash Video is a multimedia container format maintained by Adobe Systems. It was once the staple of how most media was delivered on the internet in the early 2000s.

Although replaced in the modern streaming world, FLV files do exist and converting to MP4 is easy when encoding with libx264:

$ ffmpeg -i input.flv -vcodec libx264 output.mp4

Tip: Instagram and Twitter do not support FLV video uploads.

WebM is an open source multimedia container format for HTML5 video (the successor of FLV) and is currently maintained by Google. WebM files play natively on Android devices but not on iOS (or in Safari), so converting to MP4 is beneficial. WebM video is typically encoded with VP8 or VP9 rather than H.264, but the conversion is still simple because FFmpeg re-encodes the video to H.264 by default for MP4 output when libx264 is available:

$ ffmpeg -i input.webm output.mp4

The macOS previewer may not be able to play the new MP4. One solution is to set the frame rate during the conversion, as seen below:

$ ffmpeg -i input.webm -r 24 output.mp4

-r

Indicates the frame rate (Hz value) or FPS

Tip: Instagram and Twitter do not support WebM video uploads.

By now you have a basic understanding of how simple it is to convert the most popular video container formats to MP4. In most cases, encoding the video to H.264 is all that is needed.

To finish the "Basic Video Conversion" section, let's convert an MP4 into a GIF and learn how to compress MP4 files to reduce file size.

In the previous video format conversions, the output formats supported audio without any additional encoding. The Graphics Interchange Format, or GIF, is a bitmap image format without an audio component, so the audio is left out of the output.

GIFs have been around since the late 80s and are still used to this day on web forums, Discord, text messages and everywhere in between. Because GIFs are just animated image frames, they are easy to send all over the internet without needing a video player. As with most conversions, the FFmpeg command is simple:

$ ffmpeg -i input.mp4 output.gif
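The plain conversion above maps every frame onto a generic 256-color palette, which often looks grainy or banded. A common refinement builds a custom palette from the video itself with the palettegen and paletteuse filters in one pass. This is a sketch assuming a POSIX shell; gif_filter and to_gif are hypothetical names, and the 10 FPS and 480 pixel width defaults are illustrative assumptions chosen to keep the GIF small.

```shell
#!/bin/sh
# Hypothetical helper: build a one-pass palette filtergraph for GIF output.
# split duplicates the scaled frames: one copy feeds palettegen (which builds
# a palette from the video), the other is mapped onto that palette by paletteuse.
gif_filter() {
  fps=$1
  width=$2
  printf 'fps=%s,scale=%s:-1:flags=lanczos,split[s0][s1];[s0]palettegen[p];[s1][p]paletteuse\n' "$fps" "$width"
}

# Hypothetical wrapper: $1 = input, $2 = output GIF,
# $3 = frame rate (default 10), $4 = width in pixels (default 480).
to_gif() {
  ffmpeg -i "$1" -vf "$(gif_filter "${3:-10}" "${4:-480}")" "$2"
}
```

Usage: $ to_gif input.mp4 output.gif 12 320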

Tip: Twitter does support the GIF format while Instagram natively does not.

Although not a conversion to another format, compression is one of the most asked questions about MP4 files with FFmpeg. People are constantly looking for ways to reduce file size while keeping quality generally the same.

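This is easily done with -crf, or Constant Rate Factor. Instead of targeting a fixed bitrate, -crf targets a constant visual quality and lets the bitrate vary, which usually lowers the average bitrate while retaining quality. A value around 23 (the libx264 default) is a good starting point; libx265 defaults to 28, so -crf 23 with libx265 below favors quality over size. Feel free to experiment: the lower the number, the higher the bitrate and quality.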

$ ffmpeg -i input.mp4 -vcodec libx265 -crf 23 output.mp4

Another option is presets. Presets trade encoding speed for compression efficiency: the slower the preset, the better the compression. Compression was discussed in question 16, "How to Use -filter_complex Without Losing Video Quality?", and the same suggestions apply.

Tip: The macOS previewer may require a pixel format to be set: -pix_fmt yuv420p.
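Putting -crf, -preset and the pixel format tip together, a small wrapper might look like the sketch below. It assumes a POSIX shell; default_crf and compress are hypothetical names, and using each encoder's default CRF (23 for libx264, 28 for libx265) as the starting point is a choice made for illustration.

```shell
#!/bin/sh
# Default CRF of each encoder (lower = higher quality and larger file).
default_crf() {
  case "$1" in
    libx264) echo 23 ;;
    libx265) echo 28 ;;
    *) echo "unknown encoder: $1" >&2; return 1 ;;
  esac
}

# Hypothetical wrapper: $1 = input, $2 = output, $3 = encoder,
# $4 = preset (default "medium"). Re-encodes the video and copies the audio.
compress() {
  input=$1
  output=$2
  encoder=$3
  preset=${4:-medium}
  crf=$(default_crf "$encoder") || return 1
  ffmpeg -i "$input" -c:v "$encoder" -crf "$crf" -preset "$preset" \
    -pix_fmt yuv420p -c:a copy "$output"
}
```

Usage: $ compress input.mp4 output.mp4 libx264 slow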

Having a good understanding or even a reference book (like this) to quickly convert one video format to another is super useful and probably a skill you'll use for the rest of your life. You can now download any video and convert it to any format for any player or any device. How wonderful is that?!

In the next section, you'll take everything you've learned so far, from audio to basic video, and apply it to video timeline editing.

Video Timeline Editing

FFmpeg can be used for non-linear video editing. Traditionally, a graphical user interface (GUI) would be used to trim parts of a video, but in the following section you'll learn how to use a terminal instead. FFmpeg can remove a few seconds from the beginning of a video or a few minutes from the end. Really, any kind of cutting, chopping, slicing, etc., can be accomplished with FFmpeg, and quickly.

Trimming a few seconds, minutes or hours from a video is simple but requires an understanding of the seeking and duration options.

In this example, the input file is 5 minutes long with a 15 second introduction that needs to be removed. It's important to identify these two numbers because trimming requires tricky subtraction. Let's look at the following example:

$ ffmpeg -ss 00:00:15 -i input.mp4 -t 00:04:45 -async 1 output.mp4

As outlined in "A Note About Trimming", -ss is used to seek to a part in the video and -t is the duration of the playback. Seeking 15 seconds into the 5 minute video and keeping 4 minutes and 45 seconds of playback (5:00 - 0:15 = 4:45) removes the first 15 seconds.
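The subtraction can be handed to the shell instead of done by hand. This sketch assumes a POSIX shell and whole-second values; to_timestamp and remaining_after_seek are hypothetical helper names. It formats the remaining duration as HH:MM:SS, a time format -t accepts.

```shell
#!/bin/sh
# Format a whole number of seconds as HH:MM:SS.
to_timestamp() {
  s=$1
  printf '%02d:%02d:%02d\n' $((s / 3600)) $((s % 3600 / 60)) $((s % 60))
}

# Duration left after seeking past the first `seek` seconds of a file that is
# `total` seconds long: this is the value to pass to -t.
remaining_after_seek() {
  total=$1
  seek=$2
  to_timestamp $((total - seek))
}
```

Usage for the 5 minute example: $ ffmpeg -ss 00:00:15 -i input.mp4 -t "$(remaining_after_seek 300 15)" -async 1 output.mp4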

A major setback with trimming is manually setting the video duration value. Luckily, FFmpeg ships with a tool called ffprobe, which can be used to output an exact duration:

$ ffprobe -i input.mp4 -show_entries format=duration -v quiet -of csv="p=0" -sexagesimal

Challenge: See question 7, "How to Use FFmpeg in Various Languages?", for ideas on how to use this output as a variable and dynamically set video duration.
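As one possible answer in shell, the ffprobe output can be captured in a variable. This sketch assumes a POSIX shell with ffprobe on the PATH; whole_seconds and duration_seconds are hypothetical names. It drops -sexagesimal so ffprobe prints plain seconds (for example 300.023000), which are easier to do arithmetic on, and keeps only the whole seconds.

```shell
#!/bin/sh
# Keep only the whole seconds of an ffprobe duration such as "300.023000".
whole_seconds() {
  printf '%s\n' "${1%%.*}"
}

# Print a file's duration in whole seconds.
duration_seconds() {
  whole_seconds "$(ffprobe -v quiet -show_entries format=duration -of csv="p=0" "$1")"
}
```

FFmpeg also accepts plain seconds for -ss and -t, so the result can be used directly: $ total=$(duration_seconds input.mp4) followed by $ ffmpeg -ss 15 -i input.mp4 -t "$((total - 15))" -async 1 output.mp4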

Trimming a couple of seconds, minutes or hours off the end of a video is easy but again requires a different understanding of time in FFmpeg. Trimming from the end of an MP4 uses the argument -t or duration with no seeking.

Tip: Set the duration before the input so that it limits how much of the input is read; placed after the input, FFmpeg applies the duration to the final output instead.

In this example, the input file is 5 minutes long with the requirement of 1 minute removed from the end:

$ ffmpeg -t 00:04:00 -i input.mp4 -async 1 output.mp4

By setting a shorter duration than the actual video length, FFmpeg trims the video (5 minutes - 1 minute = 4 minutes). This is where reading the exact duration with ffprobe pays off: the -t value has to be calculated from the total length, so that length must be known precisely.

The last two questions covered trimming the start of a video and trimming the end of a video, but what does the command look like combined?

In this example, the input file is 5 minutes long, with 15 seconds to be removed from the start and 1 minute to be removed from the end, resulting in a new 3 minute and 45 second video clip:

$ ffmpeg -t 00:04:00 -i input.mp4 -ss 00:00:15 -async 1 output.mp4

Together, with the use of -t and -ss, the manipulation of the video length is quick and easy.
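Both values in the combined command come from the total length, so they can be computed together. This sketch assumes a POSIX shell and whole-second values; to_timestamp, trim_both_values and trim_both are hypothetical names. It mirrors the command above: -t before the input (total minus the end cut) and -ss after it (the start cut).

```shell
#!/bin/sh
# Format a whole number of seconds as HH:MM:SS.
to_timestamp() {
  s=$1
  printf '%02d:%02d:%02d\n' $((s / 3600)) $((s % 3600 / 60)) $((s % 60))
}

# Print the input -t value and the output -ss value that remove `cut_start`
# seconds from the start and `cut_end` seconds from the end of a file that is
# `total` seconds long.
trim_both_values() {
  total=$1
  cut_start=$2
  cut_end=$3
  printf '%s %s\n' "$(to_timestamp $((total - cut_end)))" "$(to_timestamp "$cut_start")"
}

# Hypothetical wrapper: $1 = input file, $2 = total seconds,
# $3 = seconds to cut from the start, $4 = seconds to cut from the end.
trim_both() {
  input=$1
  set -- $(trim_both_values "$2" "$3" "$4")
  ffmpeg -t "$1" -i "$input" -ss "$2" -async 1 output.mp4
}
```

Usage for the example above: $ trim_both input.mp4 300 15 60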

Splicing a video (or dividing a video into multiple segments) is a common way to break large files into smaller parts. This is commonly found in streaming but can also be used to break up a video into smaller clips.

Here is a basic example where the input is a 20 second clip with the desired result of multiple 5 second segments:

$ ffmpeg -i input.mp4 -c copy -f segment -segment_time 00:00:05 -reset_timestamps 1 output_%02d.mp4

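Naturally, each clip is expected to be 5 seconds long. Unfortunately, -segment_time is not always accurate and takes the time value as a recommendation: with -c copy the segmenter can only cut on a keyframe, so each new segment starts at the first keyframe after the requested time.

The FFmpeg documentation suggests using -force_key_frames to place keyframes exactly where the cuts should happen. Forcing keyframes requires re-encoding the video, so the video stream cannot be copied in that case.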

segment

Indicates the segment muxer name

-segment_time

Indicates the segment duration (default value 2 seconds)

-reset_timestamps

Indicates that each segment should begin with a near-zero timestamp, which is useful when each segment must be played back on its own (optional)

Challenge: How would you do this with an audio file instead?
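Following the -force_key_frames suggestion, one way to get closer to exact 5 second cuts is to re-encode the video with a keyframe forced every 5 seconds. This is a sketch assuming a POSIX shell; keyframe_expr and segment_exact are hypothetical names. The expr:gte(t,n_forced*N) expression is the form the FFmpeg documentation gives for forcing a keyframe every N seconds (n_forced counts the keyframes forced so far).

```shell
#!/bin/sh
# Build the -force_key_frames expression requesting a keyframe every N seconds.
keyframe_expr() {
  printf 'expr:gte(t,n_forced*%s)\n' "$1"
}

# Hypothetical wrapper: $1 = input, $2 = segment length in seconds.
# The video is re-encoded (new keyframes cannot be added with -c copy);
# the audio is still copied.
segment_exact() {
  input=$1
  seconds=$2
  ffmpeg -i "$input" -c:v libx264 -force_key_frames "$(keyframe_expr "$seconds")" \
    -c:a copy -f segment -segment_time "$seconds" -reset_timestamps 1 output_%02d.mp4
}
```

Usage: $ segment_exact input.mp4 5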

Stitching videos generated by the segment muxer is accomplished using the concat demuxer (-f concat). See Question 50, "How to Splice a Video into Segments?", for more details on segmenting video output.

Imagine you've generated a bunch of segments for a stream and now you need to combine them back together as they arrive at the client. First, a list of videos to stitch together must be created. The file (file.txt) might look like the following:

file 'output_00.mp4'

file 'output_01.mp4'

file 'output_02.mp4'

file 'output_03.mp4'

file 'output_04.mp4'

Then run the following command to create a single file again:

$ ffmpeg -f concat -i file.txt -c copy -fflags +genpts output.mp4
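Typing file.txt by hand gets tedious with many segments. This sketch assumes a POSIX shell and file names that contain no single quotes; make_concat_list is a hypothetical name. It writes one file '...' line per argument, and because the segment names are zero-padded (output_00.mp4, output_01.mp4, ...), a shell glob already lists them in playback order.

```shell
#!/bin/sh
# Write one "file '<name>'" line per argument, in the order given.
# Assumes no file name contains a single quote.
make_concat_list() {
  for f in "$@"; do
    printf "file '%s'\n" "$f"
  done
}
```

Usage: $ make_concat_list output_*.mp4 > file.txt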

Streaming with concat is not covered in this book but more information can be found by visiting: https://ffmpeg.org/ffmpeg-formats.html#concat-1

-fflags

Indicates the format flags to set

+genpts

Indicates the need to generate missing presentation timestamps (PTS) when needed, a common requirement when a video is segmented by duration instead of timestamp

Challenge: How would you do this with an audio file instead?

Looping a section of a video can be useful for presentations or advanced video editing. In this example the input.mp4 is a 5 minute long video with the requirement that after the 1 minute mark, the video loops 10 times.

$ ffmpeg -i input.mp4 -filter_complex "loop=loop=10:size=1500:start=1800" -pix_fmt yuv420p output.mp4

In the requirements for this example it was stated that the video loops after the 1 minute mark, yet the start number is 1800. This is because start requires a frame value, not a time. To calculate the frame value, one must know the framerate of the input. In this example, input.mp4 has a framerate of 30 frames per second (FPS): 30 FPS * 1 minute (60 seconds) = 1800 frames.

In addition to the start parameter, a size parameter must be set as well. size is the number of frames that are repeated. So the 1500 frames (50 seconds at 30 FPS) beginning at frame 1800 are looped 10 times once the video reaches the 1 minute mark.
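Because start and size are frame counts, each time value has to be multiplied by the framerate. This sketch assumes a POSIX shell with awk; frames_for and loop_video are hypothetical names. frames_for rounds to the nearest whole frame so fractional rates such as 29.97 also work.

```shell
#!/bin/sh
# Convert a time in seconds into a frame count at a given frame rate,
# rounded to the nearest whole frame.
frames_for() {
  awk -v s="$1" -v f="$2" 'BEGIN { printf "%d\n", s * f + 0.5 }'
}

# Hypothetical wrapper: $1 = input, $2 = fps, $3 = loop start (seconds),
# $4 = looped length (seconds), $5 = number of loops. Video only: the loop
# filter does not repeat the audio.
loop_video() {
  input=$1
  fps=$2
  start=$(frames_for "$3" "$fps")
  size=$(frames_for "$4" "$fps")
  ffmpeg -i "$input" -filter_complex "loop=loop=$5:size=${size}:start=${start}" \
    -pix_fmt yuv420p output.mp4
}
```

Usage matching the example above: $ loop_video input.mp4 30 60 50 10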

loop

Indicates the name of the loop filter

loop

Indicates the number of loops (default is 0 with -1 being infinite)

size

Indicates the number of frames (counted from start) that are repeated

start

Indicates first or starting frame of the loop (default is 0)

The loop filter works great with video but does not apply to audio output. Thus another method is required. Using concat multiple times on the same file could be used instead:

$ ffmpeg -i input.mp4 -i input.mp4 -i input.mp4 -filter_complex "concat=n=3:v=1:a=1 [v] [a]" -map "[v]" -map "[a]" output.mp4

Then with the use of seeking, -ss, the section to be repeated can be specified.

Challenge: Try looping a specific section of a video using concat, -t and -ss.
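One possible approach to the challenge opens the same file several times, applying -ss and -t to each input so that only the chosen section is read, then joins the copies with the concat filter. This sketch assumes a POSIX shell and an input with one video and one audio stream; concat_filter and loop_section are hypothetical names.

```shell
#!/bin/sh
# Build a concat filtergraph for N inputs with one video and one audio stream each.
concat_filter() {
  n=$1
  graph=""
  i=0
  while [ "$i" -lt "$n" ]; do
    graph="${graph}[${i}:v][${i}:a]"
    i=$((i + 1))
  done
  printf '%sconcat=n=%s:v=1:a=1[v][a]\n' "$graph" "$n"
}

# Hypothetical helper: $1 = input, $2 = section start, $3 = section length,
# $4 = how many times to play it. -ss and -t placed before each -i apply
# to that input only.
loop_section() {
  input=$1
  start=$2
  duration=$3
  count=$4
  set --
  i=0
  while [ "$i" -lt "$count" ]; do
    set -- "$@" -ss "$start" -t "$duration" -i "$input"
    i=$((i + 1))
  done
  ffmpeg "$@" -filter_complex "$(concat_filter "$count")" \
    -map "[v]" -map "[a]" output.mp4
}
```

Usage, playing the 10 seconds after the 1 minute mark three times: $ loop_section input.mp4 00:01:00 10 3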

Below is a way to identify the framerate of an input (the 29.97 fps value in the Stream #0:0 line):

$ ffmpeg -i input.mp4
ffmpeg version 4.2.2 Copyright (c) 2000-2019 the FFmpeg developers
  built with Apple clang version 11.0.0
  Configuration: <removed for clarity>
Input #0, mov,mp4,m4a,3gp,3g2,mj2, from 'input.mp4':
  Metadata:
    major_brand     : isom
    minor_version   : 512
    compatible_brands: isomiso2avc1mp41
    encoder         : Lavf58.29.100
  Duration: 00:00:20.05, start: 0.000000, bitrate: 1802 kb/s
    Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p, 720x480 [SAR 40:33 DAR 20:11], 1667 kb/s, 29.97 fps, 29.97 tbr, 30k tbn, 59.94 tbc (default)
    Metadata:
      handler_name    : Core Media Video
      timecode        : 01:00:00:00
    Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 48000 Hz, stereo, fltp, 128 kb/s (default)
    Metadata:
      handler_name    : Core Media Audio
    Stream #0:2(eng): Data: none (tmcd / 0x64636D74), 0 kb/s
    Metadata:
      handler_name    : Core Media Video
      timecode        : 01:00:00:00
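Reading the framerate from this output by eye works, but ffprobe can also report it directly. This sketch assumes a POSIX shell with awk and ffprobe on the PATH; fps_from_rational and probe_fps are hypothetical names. ffprobe reports r_frame_rate as a fraction such as 30000/1001, which fps_from_rational turns into a decimal (29.97).

```shell
#!/bin/sh
# Convert a rational frame rate such as "30000/1001" into a decimal with
# two places. A bare number such as "25" is treated as "25/1".
fps_from_rational() {
  printf '%s\n' "$1" | awk -F/ '{ if ($2 == "") $2 = 1; printf "%.2f\n", $1 / $2 }'
}

# Ask ffprobe for the frame rate of the first video stream.
probe_fps() {
  fps_from_rational "$(ffprobe -v error -select_streams v:0 \
    -show_entries stream=r_frame_rate -of csv=p=0 "$1")"
}
```

Usage: $ probe_fps input.mp4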

Tip: See question 17, "What is -map and How is it Used?", for more details.

Joining multiple MP4 files works the same way as stitching segments back into the original file. Refer to question 51, "How to Stitch Segments to One Video?", for more details.

Using concat with a txt file has one catch: all the MP4s must have the same codec, framerate, resolution, etc. An example text file is shown below (file.txt):

file 'input0.mp4'

file 'input1.mp4'

file 'input2.mp4'

Then run the following command to load the txt file to create a single file from the list:

$ ffmpeg -f concat -i file.txt -c copy -fflags +genpts output.mp4

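But how can you join multiple MP4 files that do not share the same codec or framerate? A different method of concat, the concat filter, is required, as seen in the example below. Note that the concat filter still expects every video input to have the same resolution, so inputs with different resolutions must be scaled to a common size first: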

$ ffmpeg -i input0.mp4 -i input1.mp4 -i input2.mp4 -filter_complex "[0:v] [0:a] [1:v] [1:a] [2:v] [2:a] concat=n=3:v=1:a=1 [v] [a]" -map "[v]" -map "[a]" output.mp4
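The concat filter requires every video input to have the same resolution, so inputs of different sizes need scaling first. One hedged approach scales each input to fit a common frame, pads it to that exact size and resets the sample aspect ratio before concatenating. This sketch assumes a POSIX shell and inputs that each have one video and one audio stream; scaled_concat_filter is a hypothetical name, and 1280x720 in the usage line is an arbitrary target.

```shell
#!/bin/sh
# Build a filtergraph that fits every video input inside WxH (keeping its
# aspect ratio), pads it to exactly WxH, sets a square sample aspect ratio,
# and then concatenates all inputs.
scaled_concat_filter() {
  n=$1
  w=$2
  h=$3
  graph=""
  pads=""
  i=0
  while [ "$i" -lt "$n" ]; do
    graph="${graph}[${i}:v]scale=${w}:${h}:force_original_aspect_ratio=decrease,pad=${w}:${h}:(ow-iw)/2:(oh-ih)/2,setsar=1[v${i}];"
    pads="${pads}[v${i}][${i}:a]"
    i=$((i + 1))
  done
  printf '%s%sconcat=n=%s:v=1:a=1[v][a]\n' "$graph" "$pads" "$n"
}
```

Usage: $ ffmpeg -i input0.mp4 -i input1.mp4 -i input2.mp4 -filter_complex "$(scaled_concat_filter 3 1280 720)" -map "[v]" -map "[a]" output.mp4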

concat

Indicates the concat filter name

n