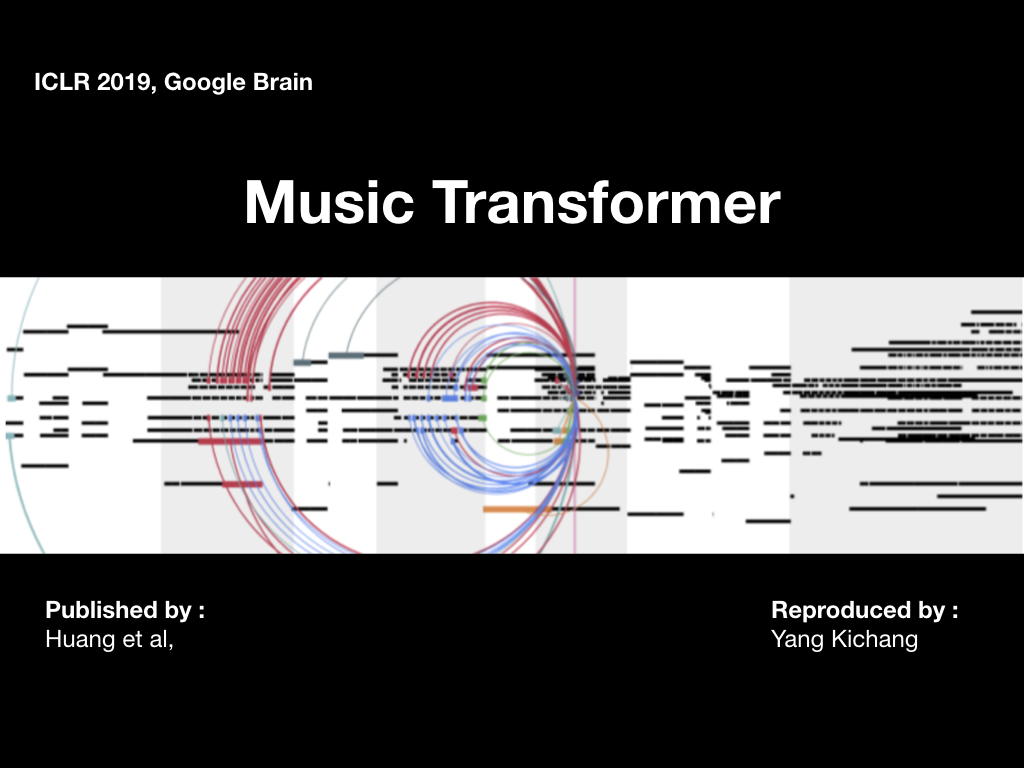

- 2019 ICLR, Cheng-Zhi Anna Huang, Google Brain

- Re-producer : Yang-Kichang

- paper link

- paper review

- This repository is fully compatible with PyTorch.

- Domain: Dramatically reduces the memory footprint, allowing it to scale to musical sequences on the order of minutes.

- Algorithm: Reduces the space complexity of relative attention in the Transformer from O(N^2D) to O(ND).

- This repository uses the single-track method (the 2nd method in the paper).

- If you want an implementation of method 1, see here.

- I referred to the preprocessing code from the PerformanceRNN re-built repository.

- The preprocessing implementation repository is here.
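
The memory reduction from O(N^2D) to O(ND) mentioned above comes from the paper's "skewing" procedure: instead of materializing an (N, N, D) tensor of relative embeddings, the product of the queries with the (N, D) relative embedding matrix is computed once and then re-indexed. The following is a minimal sketch of that re-indexing step only, assuming the input columns are ordered from relative distance -(N-1) to 0; the function name `skew` is illustrative and this is not necessarily how this repository implements it.

```python
import torch
import torch.nn.functional as F


def skew(qe: torch.Tensor) -> torch.Tensor:
    """Re-index Q @ Er^T from (query position, relative distance)
    to (query position, key position).

    qe has shape (..., N, N); column r is assumed to hold the logit for
    relative distance r - (N - 1). Entries above the diagonal of the
    result are meaningless and must be hidden by the causal mask.
    """
    n = qe.size(-1)
    # 1. Pad one dummy column on the left: (..., N, N + 1).
    padded = F.pad(qe, (1, 0))
    # 2. Reinterpret the same values as (..., N + 1, N).
    reshaped = padded.reshape(*qe.shape[:-2], n + 1, n)
    # 3. Drop the first row to get back to (..., N, N).
    return reshaped[..., 1:, :]
```

For every causal pair `j <= i`, `skew(qe)[..., i, j]` equals `qe[..., i, N - 1 + j - i]`, so the result can be added directly to the usual `Q @ K^T` logits before masking and softmax.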

$ git clone https://github.com/jason9693/MusicTransformer-pytorch.git

$ cd MusicTransformer-pytorch

$ git clone https://github.com/jason9693/midi-neural-processor.git

$ mv midi-neural-processor midi_processor

$ sh dataset/script/{ecomp_piano_downloader, midi_world_downloader, ...}.sh

- These shell scripts come from the PerformanceRNN re-built repository implemented by djosix.

$ python preprocess.py {midi_load_dir} {dataset_save_dir}

$ python train.py -c {config yml file 1} {config yml file 2} ... -m {model_dir}

- learning rate : 0.0001

- number of heads : 4

- number of layers : 6

- sequence length : 2048

- embedding dim : 256 (dh = 256 / 4 = 64)

- batch size : 2

- Baseline Transformer ( Green, Gray Color ) vs Music Transformer ( Blue, Red Color )
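
For reference, the hyperparameters listed above can be gathered into one place, which also makes the per-head dimension arithmetic (256 / 4 = 64) explicit. This is only an illustrative sketch: the `HParams` class and its field names are hypothetical and do not reflect the key names used in this repository's config yml files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HParams:
    """Hypothetical container for the values listed above."""

    learning_rate: float = 1e-4
    num_heads: int = 4
    num_layers: int = 6
    max_seq_len: int = 2048
    embedding_dim: int = 256
    batch_size: int = 2

    @property
    def head_dim(self) -> int:
        # Each attention head works on an equal slice of the embedding.
        if self.embedding_dim % self.num_heads != 0:
            raise ValueError("embedding_dim must be divisible by num_heads")
        return self.embedding_dim // self.num_heads


hp = HParams()
print(hp.head_dim)  # 64
```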

$ python generate.py -c {config yml file 1} {config yml file 2} -m {model_dir}