1D-ResNet Ensemble for Freezing of Gait Prediction Trained on Data Collected From a Wearable 3D Lower Back Sensor

This repository is home to the source code for the results reported in

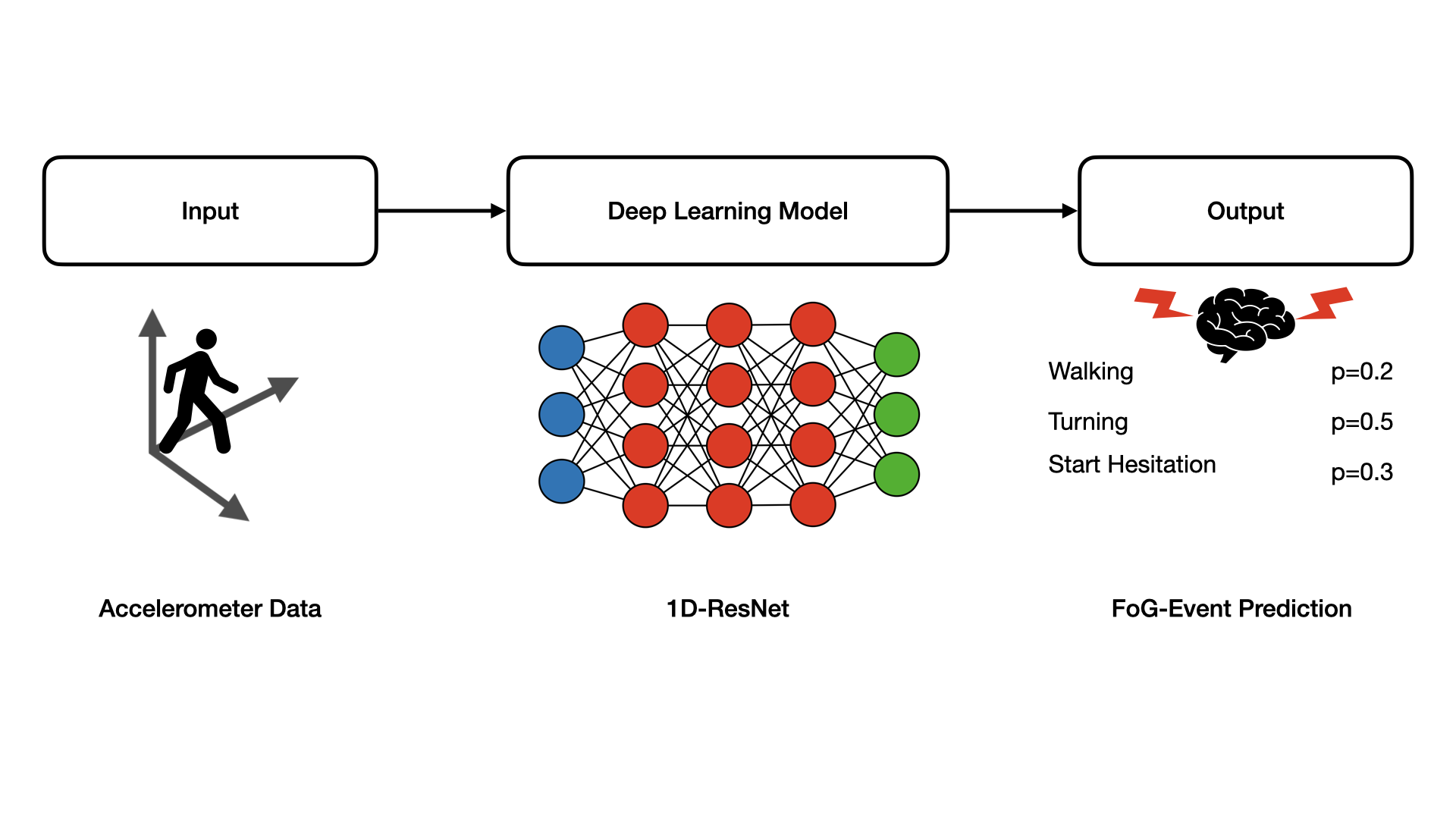

The paper presents a deep-learning-based approach to detecting Freezing of Gait (FOG) in accelerometer data.

A standard computer with enough RAM is required for inference and evaluation.

For training, an NVIDIA GPU with more than 10 GB of VRAM is recommended. The algorithm was developed on an NVIDIA RTX 3060 with 12 GB of VRAM, where model training took about 5 hours. Because the training code uses PyTorch's automatic mixed precision feature, it cannot be run on a CPU or a Mac. Inference and evaluation work on these systems without modification.

# install pytorch

$ conda install pytorch torchvision torchaudio pytorch-cuda=11.7 -c pytorch -c nvidia

# clone repository

$ git clone https://github.com/janbrederecke/fog

# install requirements

$ cd fog

$ pip install -r requirements.txt

Results will be saved as results.csv in ./.

Raw model outputs are returned rather than probabilities, because the calculation of the competition metrics did not rely on probabilities. Applying a sigmoid function maps them to values between 0 and 1, which are easier to read.
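A minimal sketch of that sigmoid conversion, using only the Python standard library. It assumes results.csv holds one raw score per event class in columns named StartHesitation, Turn, and Walking. These column names and the output file name are assumptions, so check the header of your own results.csv before using it.

```python
import csv
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function: raw score -> value between 0 and 1."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


# Assumed column names; adjust to the actual header of results.csv.
SCORE_COLUMNS = ("StartHesitation", "Turn", "Walking")


def convert_scores(in_path: str, out_path: str, columns=SCORE_COLUMNS) -> None:
    """Copy a CSV file, replacing the raw scores in `columns` with sigmoid outputs."""
    with open(in_path, newline="") as src, open(out_path, "w", newline="") as dst:
        reader = csv.DictReader(src)
        writer = csv.DictWriter(dst, fieldnames=reader.fieldnames)
        writer.writeheader()
        for row in reader:
            for col in columns:
                if col in row:  # columns that are absent are left untouched
                    row[col] = sigmoid(float(row[col]))
            writer.writerow(row)
```

Calling `convert_scores("results.csv", "results_prob.csv")` would write a second file and leave the original results untouched.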

Download the weights and put them in ./weights/FoG/.

Execute the following code:

$ python main.py inference kaggle_models

Train your own models using the included train argument described below.

Execute the following code:

$ python main.py inference

Inference is currently set up to run on the example data provided in ./data/test/.

For deFOG-style data, save your recordings as .csv files that follow the format of the deFOG data (see below) and place them in ./data/test/defog.

Every recorded session is saved as an individual .csv file.

Time,AccV,AccML,AccAP,StartHesitation,Turn,Walking,Valid,Task

0,-1.01730346044636,0.105947677733569,-0.0671944702082652,0,0,0,false,false

1,-1.01997198880408,0.105368755184179,-0.0689203630006303,0,0,0,false,false

2,-1.01794234301126,0.103586433290663,-0.0694099738857769,0,0,0,false,false

- Time: An integer timestep. Series from the deFOG dataset are recorded at 100 Hz.

- AccV, AccML, and AccAP: Acceleration from a lower-back sensor on three axes: V - vertical, ML - mediolateral, AP - anteroposterior. Data is in units of g for deFOG.

- StartHesitation, Turn, Walking: Indicator variables for the occurrence of each of the event types.

- Valid: During video annotation, it was sometimes hard for the annotator to decide whether there was an akinetic (i.e., essentially no movement) FoG or the subject had stopped voluntarily. Only event annotations where the series is marked true should be considered unambiguous.

- Task: Series were only annotated where this value is true. Portions marked false should be considered unannotated.
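The field descriptions above can be illustrated with a short parsing sketch that keeps only rows that are both annotated (Task) and unambiguous (Valid). The function names are hypothetical and not taken from the repository. The first three sample rows are the ones shown above, and the fourth row is made up purely so that the filter has something to keep.

```python
import csv
import io

DEFOG_HZ = 100  # deFOG sampling rate, as stated in the Time description
EVENTS = ("StartHesitation", "Turn", "Walking")

# Three rows from the example above plus one invented row (Time 3) for illustration.
SAMPLE = """Time,AccV,AccML,AccAP,StartHesitation,Turn,Walking,Valid,Task
0,-1.01730346044636,0.105947677733569,-0.0671944702082652,0,0,0,false,false
1,-1.01997198880408,0.105368755184179,-0.0689203630006303,0,0,0,false,false
2,-1.01794234301126,0.103586433290663,-0.0694099738857769,0,0,0,false,false
3,-1.0,0.1,-0.07,0,1,0,true,true
"""


def parse_bool(text: str) -> bool:
    """Interpret the 'true'/'false' strings used in the Valid and Task columns."""
    return text.strip().lower() == "true"


def usable_rows(handle):
    """Yield parsed rows where both Valid and Task are true."""
    for row in csv.DictReader(handle):
        if parse_bool(row["Valid"]) and parse_bool(row["Task"]):
            yield {
                "seconds": int(row["Time"]) / DEFOG_HZ,  # timestep -> seconds
                "acc_g": (float(row["AccV"]), float(row["AccML"]), float(row["AccAP"])),
                "events": {name: int(row[name]) for name in EVENTS},
            }


rows = list(usable_rows(io.StringIO(SAMPLE)))
print(len(rows), rows[0]["seconds"], rows[0]["events"])
```

To read a real session, pass an open file handle instead of the `io.StringIO` wrapper.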

For tdcsfog-style data, save your recordings as .csv files that follow the format of the tdcs FOG data (see below) and place them in ./data/test/tdcsfog.

Every recorded session is saved as an individual .csv file.

Time,AccV,AccML,AccAP,StartHesitation,Turn,Walking

0,-9.49559221912324,-0.906945044395018,-0.336842987439936,0,0,0

1,-9.48801268356261,-0.899194900216428,-0.337949687012932,0,0,0

2,-9.492908192609,-0.904170188891895,-0.343040653636143,0,0,0

- Time: An integer timestep. Series from the tdcs FOG dataset are recorded at 128 Hz.

- AccV, AccML, and AccAP: Acceleration from a lower-back sensor on three axes: V - vertical, ML - mediolateral, AP - anteroposterior. Data is in units of m/s^2 for tdcsfog.

- StartHesitation, Turn, Walking: Indicator variables for the occurrence of each of the event types.
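The two datasets differ in both units (g versus m/s^2) and sampling rate (100 Hz versus 128 Hz). The sketch below shows how values from one could be put on the same scale as the other. It is an illustration only: whether and how the repository harmonizes the two datasets is not stated here, and the helper names are hypothetical.

```python
STANDARD_GRAVITY = 9.80665  # m/s^2 per g (conventional standard value)
DEFOG_HZ = 100              # deFOG sampling rate
TDCSFOG_HZ = 128            # tdcsfog sampling rate


def ms2_to_g(value_ms2: float) -> float:
    """Convert an acceleration from m/s^2 (tdcsfog) to g (deFOG)."""
    return value_ms2 / STANDARD_GRAVITY


def timestep_to_seconds(step: int, rate_hz: int) -> float:
    """Convert an integer timestep to seconds for a given sampling rate."""
    return step / rate_hz


# AccV of the first tdcsfog example row, expressed in g.
acc_v_g = ms2_to_g(-9.49559221912324)
print(round(acc_v_g, 3))

# One second of recording corresponds to a different number of timesteps.
print(timestep_to_seconds(100, DEFOG_HZ), timestep_to_seconds(128, TDCSFOG_HZ))
```

After conversion, the vertical axis of the tdcsfog example is close to -1 g, which matches the magnitude seen in the deFOG example rows.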

More specific information on the formatting requirements is provided through the competition pages on Kaggle.

Download the competition data from Kaggle, preferably using the Kaggle API.

Place the defog and tdcsfog folders in ./data/train/.

Place the metadata files for defog and tdcsfog in ./data/.
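Before starting a multi-hour training run, it can help to confirm that the data is where the steps above expect it. The following is a small sketch of such a check. The folder names come from the steps above, while the metadata file names (defog_metadata.csv and tdcsfog_metadata.csv) are an assumption based on the Kaggle download and may need adjusting. The function is not part of the repository.

```python
from pathlib import Path

# Folder names from the steps above.
REQUIRED_DIRS = ("train/defog", "train/tdcsfog")
# Assumed metadata file names; adjust if your download differs.
ASSUMED_METADATA = ("defog_metadata.csv", "tdcsfog_metadata.csv")


def missing_paths(data_root: str = "./data") -> list:
    """Return the expected folders and files that are missing under data_root."""
    root = Path(data_root)
    missing = [str(root / d) for d in REQUIRED_DIRS if not (root / d).is_dir()]
    missing += [str(root / f) for f in ASSUMED_METADATA if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    problems = missing_paths()
    if problems:
        print("Missing before training:")
        for path in problems:
            print("  " + path)
    else:
        print("Data layout looks complete.")
```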

Run training.

$ python main.py train

Model weights will be saved at ./weights/FoG/.

Results of the evaluation will be printed to the console and saved in ./weights/FoG/.

$ python main.py evaluation kaggle_models

$ python main.py evaluation