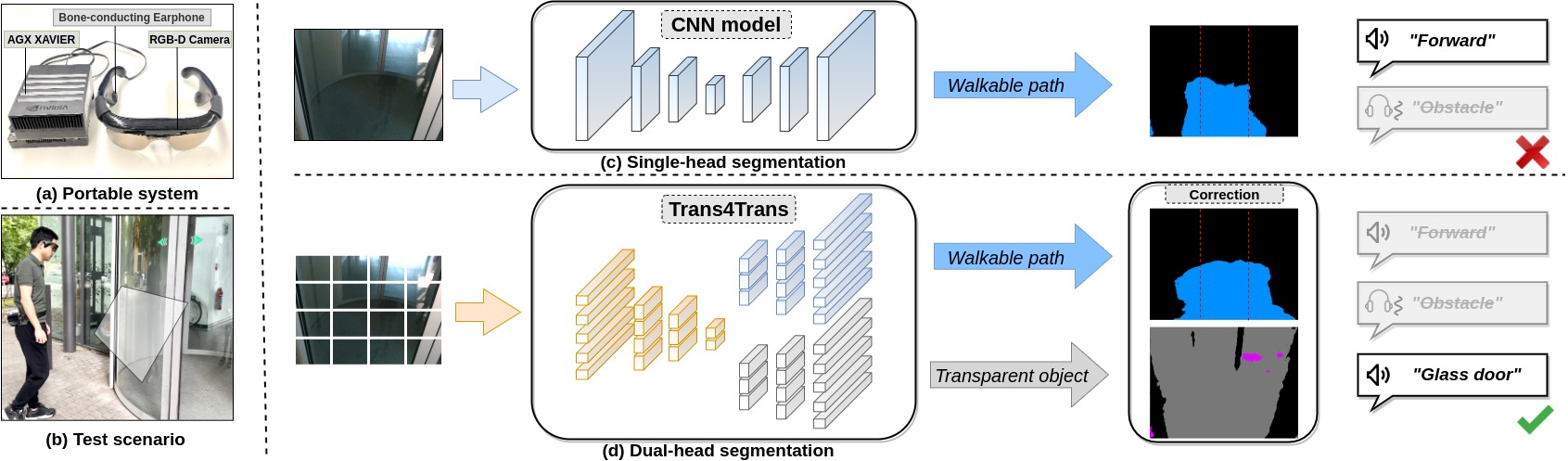

We build a portable system based on the proposed Trans4Trans model, aiming to help people with visual impairments interact correctly with general and transparent objects in daily living.

Please refer to our conference paper.

Trans4Trans: Efficient Transformer for Transparent Object Segmentation to Help Visually Impaired People Navigate in the Real World, ICCVW 2021, [paper].

For more details and the driving scene segmentation on benchmarks including Cityscapes, ACDC, and DADAseg, please refer to the journal version.

Trans4Trans: Efficient Transformer for Transparent Object and Semantic Scene Segmentation in Real-World Navigation Assistance, T-ITS 2021, [paper].

Create environment:

conda create -n trans4trans python=3.7

conda activate trans4trans

conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=11.1 -c pytorch -c conda-forge

conda install pyyaml pillow requests tqdm ipython scipy opencv-python thop tabulate

And install:

python setup.py develop --user

Create a datasets directory and prepare the ACDC, Cityscapes, DensePASS, Stanford2D3D, and Trans10K datasets following the structure below:

./datasets

├── acdc

│ ├── gt

│ └── rgb_anon

├── cityscapes

│ ├── gtFine

│ └── leftImg8bit

├── DensePASS

│ ├── gtFine

│ └── leftImg8bit

├── stanford2d3d

│ ├── area_1

│ ├── area_2

│ ├── area_3

│ ├── area_4

│ ├── area_5a

│ ├── area_5b

│ └── area_6

└── transparent/Trans10K_cls12

    ├── test

    ├── train

    └── validation
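
Before training, it can help to verify that the layout above is in place. The following is a minimal sketch and not part of the repository: the helper name `missing_dataset_dirs` and the `EXPECTED_DIRS` list are assumptions for illustration, with the directory names copied from the tree above.

```python
from pathlib import Path

# Sub-directories copied from the tree above, relative to the datasets root.
EXPECTED_DIRS = [
    "acdc/gt",
    "acdc/rgb_anon",
    "cityscapes/gtFine",
    "cityscapes/leftImg8bit",
    "DensePASS/gtFine",
    "DensePASS/leftImg8bit",
    "stanford2d3d/area_1",
    "stanford2d3d/area_2",
    "stanford2d3d/area_3",
    "stanford2d3d/area_4",
    "stanford2d3d/area_5a",
    "stanford2d3d/area_5b",
    "stanford2d3d/area_6",
    "transparent/Trans10K_cls12/test",
    "transparent/Trans10K_cls12/train",
    "transparent/Trans10K_cls12/validation",
]


def missing_dataset_dirs(root="./datasets"):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dataset_dirs()
    if missing:
        print("Missing dataset directories:")
        for d in missing:
            print("  " + d)
    else:
        print("All expected dataset directories found.")
```

If you only use a subset of the datasets, trim `EXPECTED_DIRS` accordingly.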

Create a pretrained directory and place the pretrained models in it as follows:

.

├── pvt_medium.pth

├── pvt_small.pth

├── pvt_tiny.pth

└── v2

    ├── pvt_medium.pth

    ├── pvt_small.pth

    └── pvt_tiny.pth

Before training, please modify the config file to match your own paths.

We train our models on four 1080Ti GPUs, for example:

python -m torch.distributed.launch --nproc_per_node=4 tools/train.py --config-file configs/trans10kv2/pvt_tiny_FPT.yaml

We recommend using the mmsegmentation framework to train models at higher resolutions (e.g., 768x768).
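
The `--nproc_per_node` value in the training command above sets how many processes are started on the machine, normally one per GPU, so it should match the number of GPUs you have. As a rough sketch, assuming a hypothetical helper named `build_launch_command` (not part of the repository), the same command can be assembled for a different GPU count:

```python
import sys


def build_launch_command(num_gpus, script="tools/train.py",
                         config="configs/trans10kv2/pvt_tiny_FPT.yaml"):
    """Assemble the distributed launch command as an argument list."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return [
        sys.executable, "-m", "torch.distributed.launch",
        "--nproc_per_node={}".format(num_gpus),
        script,
        "--config-file", config,
    ]


if __name__ == "__main__":
    # Print the command for a two-GPU machine; the list can also be
    # passed to subprocess.run(...) to start the job from Python.
    print(" ".join(build_launch_command(2)))
```

The script and config defaults are the ones used in the command above; pass other paths to launch a different script or configuration.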

Before testing the model, please change the TEST_MODEL_PATH in the config file.

python -m torch.distributed.launch --nproc_per_node=4 tools/eval.py --config-file configs/trans10kv2/pvt_tiny_FPT.yaml

The weights can be downloaded from BaiduDrive.

We use an Intel RealSense R200 camera in our assistive system, which requires librealsense.

Please install the librealsense legacy version.

Then install the dependencies for the demo:

pip install pyrealsense==2.2

pip install pyttsx3

pip install pydub

Download the pretrained weights from GoogleDrive and save them as workdirs/cocostuff/model_cocostuff.pth and workdirs/trans10kv2/model_trans.pth.

(Optional) Please check demo.py to customize the configuration, for example, the speech volume and frequency.
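
As an illustration of the kind of speech settings involved, pyttsx3 (one of the demo dependencies) exposes a `volume` property (0.0 to 1.0) and a `rate` property (words per minute) through `setProperty`. The sketch below is not taken from demo.py: the helper names `configure_speech` and `speak` and the default values are assumptions, and the settings in demo.py may be named or applied differently.

```python
def configure_speech(engine, volume=0.8, rate=150):
    """Apply volume and speaking rate to a pyttsx3-style engine.

    volume is clamped to the valid range [0.0, 1.0];
    rate is given in words per minute.
    """
    volume = max(0.0, min(1.0, float(volume)))
    rate = int(rate)
    engine.setProperty("volume", volume)
    engine.setProperty("rate", rate)
    return volume, rate


def speak(text, volume=0.8, rate=150):
    """Speak one sentence; needs pyttsx3 and a working audio backend."""
    import pyttsx3  # imported lazily so configure_speech has no hard dependency

    engine = pyttsx3.init()
    configure_speech(engine, volume, rate)
    engine.say(text)
    engine.runAndWait()
```

For example, `speak("Glass door ahead", volume=0.6, rate=130)` would read the sentence more quietly and slowly than the defaults used here.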

After installing the dependencies and downloading the weights, run bash demo.sh.

This repository is under the Apache-2.0 license. For commercial use, please contact the authors.

If you are interested in this work, please cite the following works:

@inproceedings{zhang2021trans4trans,

title={Trans4Trans: Efficient transformer for transparent object segmentation to help visually impaired people navigate in the real world},

author={Zhang, Jiaming and Yang, Kailun and Constantinescu, Angela and Peng, Kunyu and M{\"u}ller, Karin and Stiefelhagen, Rainer},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},

pages={1760--1770},

year={2021}

}

@article{zhang2022trans4trans,

title={Trans4Trans: Efficient transformer for transparent object and semantic scene segmentation in real-world navigation assistance},

author={Zhang, Jiaming and Yang, Kailun and Constantinescu, Angela and Peng, Kunyu and M{\"u}ller, Karin and Stiefelhagen, Rainer},

journal={IEEE Transactions on Intelligent Transportation Systems},

year={2022},

publisher={IEEE}

}