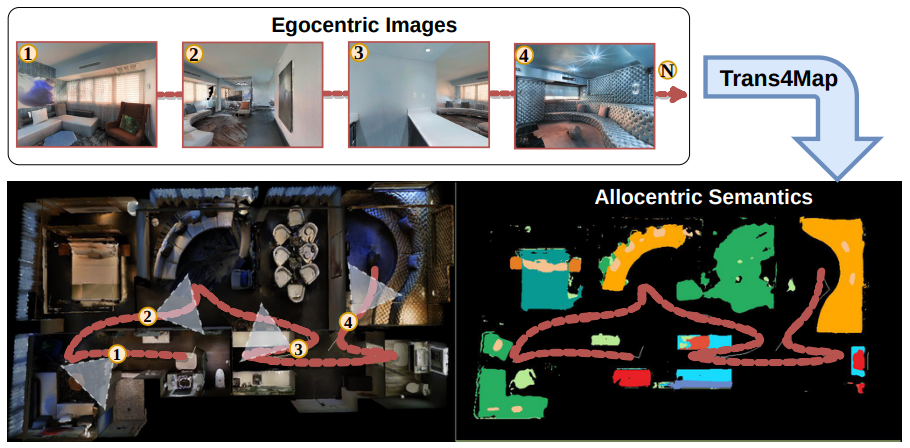

Trans4Map: Revisiting Holistic Bird's-Eye-View Mapping from Egocentric Images to Allocentric Semantics with Vision Transformers

Chang Chen, Jiaming Zhang, Kailun Yang, Kunyu Peng, Rainer Stiefelhagen

In this work, we propose Trans4Map, an end-to-end, one-stage, Transformer-based framework for mapping. Our egocentric-to-allocentric mapping process consists of three steps: (1) an efficient transformer extracts contextual features from a batch of egocentric images; (2) the proposed Bidirectional Allocentric Memory (BAM) module projects the egocentric features into the allocentric memory; (3) the map decoder parses the accumulated memory and predicts the top-down semantic segmentation map.
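The three steps above hinge on projecting egocentric (camera-view) features into an allocentric (world-aligned, top-down) grid. As a rough illustration of that generic geometry only, here is a minimal NumPy sketch assuming a pinhole camera, metric depth, a known camera-to-world pose, and a ground plane spanned by the world x and z axes. It is not the paper's BAM module: the function name `project_to_topdown`, the grid parameters, and the mean-pooling aggregation are hypothetical choices made for this example.

```python
import numpy as np


def project_to_topdown(features, depth, fx, fy, cx, cy, cam_to_world,
                       grid_size=(64, 64), cell_size=0.1, origin=(0.0, 0.0)):
    """Mean-pool per-pixel features into a top-down grid (hypothetical helper).

    features:       (H, W, C) egocentric feature map
    depth:          (H, W) metric depth; 0 marks invalid pixels
    fx, fy, cx, cy: pinhole intrinsics (assumed camera model)
    cam_to_world:   (4, 4) camera-to-world pose
    Returns a (grid_h, grid_w, C) memory and (grid_h, grid_w) hit counts.
    """
    H, W, C = features.shape
    v, u = np.mgrid[0:H, 0:W]              # pixel row (v) and column (u) indices
    z = depth
    x = (u - cx) * z / fx                  # back-project: camera x points right
    y = (v - cy) * z / fy                  # camera y points down
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=-1).reshape(-1, 4)
    pts_world = pts_cam @ cam_to_world.T   # homogeneous world coordinates

    # Assumption: world x and z span the ground plane; the vertical axis is dropped.
    col = np.floor((pts_world[:, 0] - origin[0]) / cell_size).astype(int)
    row = np.floor((pts_world[:, 2] - origin[1]) / cell_size).astype(int)

    gh, gw = grid_size
    valid = (depth.reshape(-1) > 0) & (row >= 0) & (row < gh) & (col >= 0) & (col < gw)
    flat_idx = row[valid] * gw + col[valid]
    feats = features.reshape(-1, C)[valid]

    memory = np.zeros((gh * gw, C), dtype=np.float64)
    counts = np.zeros(gh * gw, dtype=np.int64)
    np.add.at(memory, flat_idx, feats)     # unbuffered sum: repeated cells accumulate
    np.add.at(counts, flat_idx, 1)
    hit = counts > 0
    memory[hit] /= counts[hit][:, None]    # sum -> mean for every observed cell
    return memory.reshape(gh, gw, C), counts.reshape(gh, gw)
```

Mean pooling is used here only because it gives the same result regardless of pixel order; the actual BAM module may aggregate features quite differently.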

More details can be found in our arXiv paper.

conda create -n Trans4Map python=3.7

conda activate Trans4Map

cd /path/to/Trans4Map

pip install -r requirements.txt

To render RGB-D observations from the Matterport3D dataset, you need to install Habitat-sim and Habitat-lab. To stay consistent with our working environment, please install Habitat-sim == 0.1.5 and Habitat-lab == 0.1.5.
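Because the code was run against specific Habitat releases, a quick check of the installed versions can save debugging time. The sketch below assumes that Habitat-sim is importable as `habitat_sim`, Habitat-lab as `habitat`, and that both expose a `__version__` attribute; the helper `find_version_mismatches` is hypothetical and not part of this repository.

```python
import importlib

# Assumption: module names and the presence of __version__ in both packages.
REQUIRED_VERSIONS = {"habitat_sim": "0.1.5", "habitat": "0.1.5"}


def find_version_mismatches(required, importer=importlib.import_module):
    """Return {module_name: (found_version, required_version)} for each mismatch.

    found_version is None when the module cannot be imported or has no __version__.
    """
    mismatches = {}
    for module_name, wanted in required.items():
        try:
            found = getattr(importer(module_name), "__version__", None)
        except ImportError:
            found = None
        if found != wanted:
            mismatches[module_name] = (found, wanted)
    return mismatches


if __name__ == "__main__":
    problems = find_version_mismatches(REQUIRED_VERSIONS)
    if not problems:
        print("Habitat versions match the tested environment.")
    for name, (found, wanted) in problems.items():
        print(f"{name}: found {found}, expected {wanted}")
```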

You can prepare the training and test datasets in the same way as SMNet.

- `data/paths.json` contains the given trajectories, which were manually recorded by SMNet.
- The semantic top-down ground truth is also available: GT. Please place it under `data/semmap`.
- Our project works with the Matterport3D and Replica datasets. Please download them and place them under `data/mp3d` or `data/replica`.
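Before training, a small script can confirm that the files listed above are where the code expects them. This is a hypothetical helper (`check_data_layout` is not part of the repository) that assumes it is run from the repository root and only checks that the paths exist; it does not validate file contents.

```python
from pathlib import Path


def check_data_layout(root="data"):
    """Return a list of human-readable problems; an empty list means the layout looks complete."""
    root = Path(root)
    problems = []
    if not (root / "paths.json").is_file():
        problems.append(f"missing {root / 'paths.json'} (SMNet trajectories)")
    if not (root / "semmap").is_dir():
        problems.append(f"missing {root / 'semmap'}/ (top-down semantic ground truth)")
    # Either dataset directory is enough: data/mp3d or data/replica.
    if not ((root / "mp3d").is_dir() or (root / "replica").is_dir()):
        problems.append(f"missing {root / 'mp3d'}/ or {root / 'replica'}/ (scene data)")
    return problems


if __name__ == "__main__":
    issues = check_data_layout("data")
    print("data/ layout looks complete." if not issues else "\n".join(issues))
```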

To train Trans4Map with different backbones, run:

python train.py

To generate the test results, run:

python build_test_date_feature.py

python test.py

To compute the mIoU and mBF1 (mean boundary F1) scores, run:

python eval/eval.py

python eval/eval_bfscore.py
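For reference, mIoU is usually computed from a confusion matrix as the per-class intersection over union, TP / (TP + FP + FN), averaged over classes. The sketch below shows that generic computation in NumPy; it is not `eval/eval.py`, whose handling of void cells, class lists, and per-scene averaging may differ, and the `ignore_index` parameter is a hypothetical addition. The boundary-based mBF1 metric is not covered here.

```python
import numpy as np


def confusion_matrix(gt, pred, num_classes, ignore_index=None):
    """Accumulate a (num_classes, num_classes) matrix: rows = ground truth, cols = prediction."""
    gt = np.asarray(gt, dtype=np.int64).ravel()
    pred = np.asarray(pred, dtype=np.int64).ravel()
    # Keep only cells whose labels are valid class ids.
    keep = (gt >= 0) & (gt < num_classes) & (pred >= 0) & (pred < num_classes)
    if ignore_index is not None:
        keep &= gt != ignore_index
    flat = gt[keep] * num_classes + pred[keep]
    return np.bincount(flat, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def mean_iou(conf):
    """Return (mIoU, per-class IoU); classes absent from both gt and pred get NaN."""
    conf = np.asarray(conf, dtype=np.float64)
    tp = np.diag(conf)
    union = conf.sum(axis=0) + conf.sum(axis=1) - tp  # TP + FP + FN
    iou = np.full(conf.shape[0], np.nan)
    present = union > 0
    iou[present] = tp[present] / union[present]
    return float(np.nanmean(iou)), iou


if __name__ == "__main__":
    gt = np.array([[0, 0], [1, 1]])
    pred = np.array([[0, 1], [1, 1]])
    miou, per_class = mean_iou(confusion_matrix(gt, pred, num_classes=2))
    print(f"mIoU = {100 * miou:.2f}%")  # prints: mIoU = 58.33%
```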

| Method | Backbone | mIoU (%) | Weights |
|---|---|---|---|
| ConvNeXt | ConvNeXt-T | 35.91 | |
| ConvNeXt | ConvNeXt-S | 36.49 | |
| FAN | FAN-T | 31.07 | |
| FAN | FAN-S | 34.62 | |
| Swin | Swin-T | 34.19 | |
| Swin | Swin-S | 36.80 | |
| Trans4Map | MiT-B2 | 40.02 | B2 |
| Trans4Map | MiT-B4 | 40.88 | B4 |

This repository is released under the Apache-2.0 license. For commercial use, please contact the authors.

If you are interested in this work, please cite the following paper:

@inproceedings{chen2023trans4map,
  title={Trans4Map: Revisiting Holistic Bird's-Eye-View Mapping from Egocentric Images to Allocentric Semantics with Vision Transformers},
  author={Chen, Chang and Zhang, Jiaming and Yang, Kailun and Peng, Kunyu and Stiefelhagen, Rainer},
  booktitle={2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
  year={2023}
}