- 👋 Hi, I’m @jahangircsebuet

- 👀 I’m interested in full-stack development

- 🌱 I’m currently learning LeetCode problem solving and the Java Collections Framework

- 💞️ I’m looking to collaborate on ...

- 📫 How to reach me: email jahangir.08.cse@gmail.com

Upcoming contents:

- What I have learned from the course Programming Distributed Application

- What I have learned from the course Cloud Computing

- What I have learned from the course Artificial Intelligence

- What I have learned from Blockchain

- What I have learned from Zero Knowledge Proof

- What I have learned from Text Classification

- What I have learned from Generative Adversarial Networks

- What I have learned from Cryptography

- What I have learned from DL Energy Aspects Course

Research Topics:

• Trustworthy Machine Learning (TML)

o Privacy-Preserving Machine Learning

o Adversarial Machine Learning

o Distributed and Secure Systems

• Trustworthy Blockchains

o Use of blockchain infrastructure to enhance cyber-security

o Cryptographic protocols to enhance the trust and privacy on blockchains

o Post-quantum secure blockchains

• Secure Internet of Things and Systems (IoTs) and Next-Generation Wireless Networks

o Light-weight cryptography for IoT

o Cryptographic protocols for vehicular and UAV networks

o Post-quantum secure IoTs

• Privacy-Enhancing Technologies

o Secure Multi-party Computation

o Distributed cloud security

o Breach-Resilient Infrastructures (Protection of Genetic/Medical Data)

Hyperledger Fabric Tutorial (Recommended): https://www.udemy.com/course/learn-hyperledger-fabric-chaincode-development-using-java/

###################################################### Evolution of ML/DL #############################################

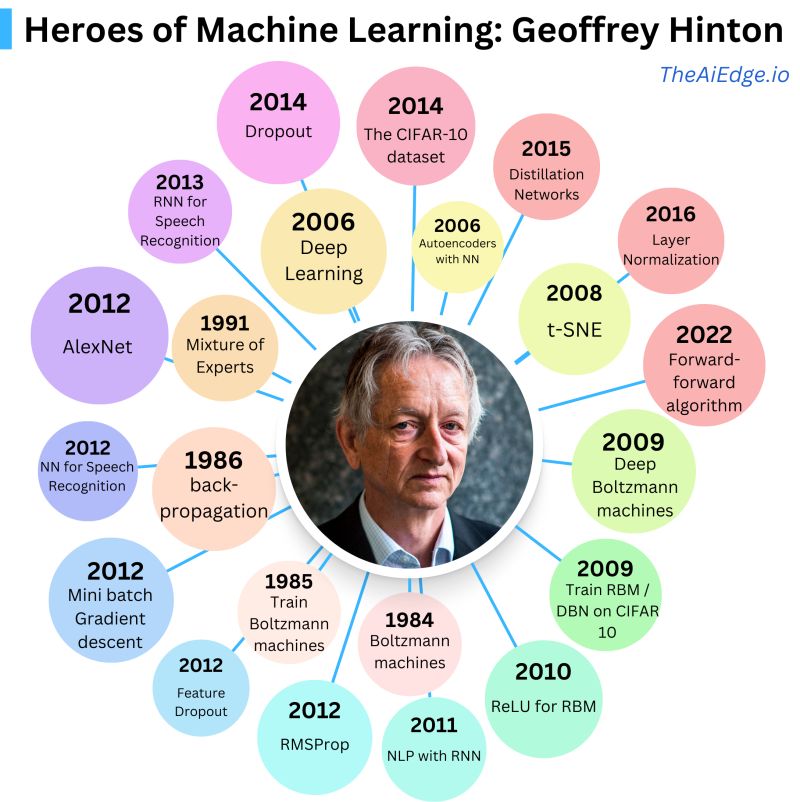

When it comes to Deep Learning, I think nobody symbolizes the field better than Geoffrey Hinton, the father of Deep Learning. He even coined the term! Here are his biggest contributions to the field:

- 1983 - He invents Boltzmann machines

- 1985 - He proposes a new learning algorithm for Boltzmann machines

- 1986 - He is credited as one of the inventors of the Back-propagation algorithm

- 1991 - He invents the Mixture of Experts

- 2006 - He proposes an algorithm to train Deep Belief Nets. This is the article that led to the term "Deep Learning"

- 2006 - He shows how to build Autoencoders with Neural Networks

- 2008 - He invents t-SNE, a new technique for dimensionality reduction

- 2009 - He presents an algorithm to train Deep Boltzmann machines

- 2009 - Trains Restricted Boltzmann Machines and Deep Belief Networks with the CIFAR-10 dataset

- 2010 - Shows the improved performance of Restricted Boltzmann Machines with ReLU

- 2011 - Shows how to build a generative text model with Recurrent Neural Networks

- 2012 - Invents RMSprop in a course lecture (!!!)

- 2012 - Proposes the Feature Dropout technique to improve networks

- 2012 - Suggests mini-batch gradient descent in a course lecture

- 2012 - Deep Learning for speech recognition

- 2012 - Revolutionizes computer vision with AlexNet (the most cited paper of his whole career)

- 2014 - He proposes the Dropout technique to reduce overfitting

- 2009 - The CIFAR-10 dataset is made available

- 2015 - He introduces knowledge distillation to reduce the size of models

- 2016 - He invents the Layer Normalization technique (used in virtually every Transformer architecture)

- 2017 - He proposes CapsNets, or Capsule Networks, which aim to overcome some limitations of CNNs, particularly in understanding hierarchical relationships between objects and their parts within an image

- 2022 - He presents a new alternative to the Back-propagation algorithm: the Forward-forward algorithm

Now, the guy has 327 publications, so I couldn't capture everything here, but I believe this encapsulates his most impactful works. Considering the trend, it seems a lot more is going to come from him in the coming years!

-- 👉 Let me help you become better at Machine Learning: https://TheAiEdge.io

###################################################### Exercise #############################################

Health cheat codes I know at 33, that I wish I knew at 22:

1) Do cardio for overall health and longevity, do strength training to build muscle and strength, manage your diet to burn fat, and do yoga/stretching for mobility.

2) 7-8 hours of sleep is non-negotiable. The percentage of people who can have perfect physiology with less than 7 hours of sleep is almost zero.

3) 5-10 minutes of sunlight exposure within the first couple of hours of waking up is crucial. It boosts energy and brain power and helps you fall asleep at the right time at night.

4) If you had less than 7 hours of sleep or are feeling low in energy, take a short nap.

5) Work out at least 3 times per week. Get brutally strong in basic compound movements like overhead presses, pull-ups, dips, rows, squats, and deadlifts. Try to progress in these exercises each week.

6) Perfect your form on these exercises. Maintain a proper mind-muscle connection when working out. Ask your trainer at the gym for help, or watch good tutorials on YouTube. Perfecting your form will keep you injury-free while giving you the best gains.

7) Pick any form of cardio that you enjoy. Do it as often as you can.

8) For the vast majority of people, the best way to do cardio is to walk outside. Walk at least 8k steps per day.

9) Prioritize having plenty of vegetables and protein in each meal. If you want to build muscle and strength, try to hit at least 1.8 grams of protein per kilogram of body weight every day.

10) Carbohydrates are not bad. In fact, they are key to optimal exercise performance. What is really bad is processed food. Minimize processed foods as much as you can.

11) Intermittent fasting is a great tool for staying in a calorie deficit easily and losing fat. But it is not at all necessary. Many people function well with a good breakfast.

12) In a chaotic world, stillness of mind is key. The time-tested way to foster a calm mind is praying, meditating, or spending time in nature.

13) Have coffee at least 1-2 hours after waking up. Drink water before having coffee.

14) Have a pull-up bar in your home. You can build really impressive fitness at home with just some pull-ups, push-ups, and squats/lunges.

15) Put sunscreen on every day. Moisturize well in winter.

16) True wealth is a house full of love. Give time to your family.

17) Gratitude is like vitamin D for the soul. Even a tiny amount can nourish it. When you remember old friends, drop them a message saying you miss them. Tell your partner that you love them.

18) Drink a lot of water. 2 liters is the bare minimum; aim for 3 liters or more if you are active.

19) Do some form of stretching/yoga every day, even if it is only for 1-2 minutes. This will keep you mobile.

20) If all of this sounds like too much, at least do the following: prioritize vegetables and protein in each meal, get 7+ hours of sleep, lift 3x a week, walk 8k+ steps, and drink plenty of water.

Conferences needed for Amazon: AAAI, ACL, ASPLOS, AISTATS, CAV, CIKM, COLING, ECCV, EMNLP, EuroSys, FAST, FMCAD, ICASSP, ICCV, ICLR, ICML, ICRA, IJCAI, INFORMS, Interspeech, KDD, MLSys, NeurIPS, NSDI, PLDI, POPL, RecSys, SLT, SIGIR, SOSP, The Web Conf, WSDM

A few recent papers:

- Utilizing BERT for Information Retrieval: Survey, Applications, Resources, and Challenges (ACM Computing Surveys, Impact Factor: 16.6): https://lnkd.in/eKAQxAF8

- A comprehensive evaluation of large language models on benchmark biomedical text processing tasks (Computers in Biology and Medicine, Impact Factor: 7.7): https://lnkd.in/efph4XHp

- BenLLMEval: A Comprehensive Evaluation into the Potentials and Pitfalls of Large Language Models on Bengali NLP (LREC-COLING 2024, one of the top 5 conferences in NLP): https://lnkd.in/ePdp3aHN

Datasets: https://imerit.net/blog/17-best-text-classification-datasets-for-machine-learning-all-pbm/

[The 20 Newsgroups Dataset]: This popular dataset is perfect for anyone looking to experiment with text classification. It contains roughly 20,000 newsgroup documents partitioned nearly evenly across 20 separate newsgroups.

[AG’s News Topic Classification Dataset]: This impressive collection of 1M+ articles was gathered by an academic news search engine across 2,000 separate news sources. The topic classification benchmark built from it has 4 classes, each with 30,000 training samples and 1,900 testing samples (120,000 and 7,600 in total).

[Reuters Text Categorization Dataset]: Containing 21,000+ Reuters documents gathered from Reuters’ newswire in 1987, this text classification dataset has a training set of 13,625 documents. It also comes with a testing set of 6,188 documents.
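As a rough sanity check on the split sizes quoted for Reuters above, here is a minimal Python sketch. The names `Split`, `SPLITS`, `train_fraction`, and `load_20newsgroups_train` are hypothetical helpers introduced only for illustration. The loader assumes scikit-learn is installed and that its `fetch_20newsgroups` function can download the data on first use; it is defined here but not called.

```python
from typing import NamedTuple


class Split(NamedTuple):
    """Train/test document counts for one dataset."""
    train: int
    test: int


# Counts copied from the Reuters description above.
SPLITS = {"reuters": Split(train=13_625, test=6_188)}


def train_fraction(split: Split) -> float:
    """Share of documents that fall in the training set."""
    return split.train / (split.train + split.test)


def load_20newsgroups_train():
    """Fetch the 20 Newsgroups training subset.

    Assumption: scikit-learn is installed; the first call downloads the data.
    """
    from sklearn.datasets import fetch_20newsgroups  # imported lazily on purpose
    return fetch_20newsgroups(subset="train")


reuters = SPLITS["reuters"]
print(f"Reuters: {reuters.train + reuters.test} docs, "
      f"{train_fraction(reuters):.1%} used for training")
```

Calling `load_20newsgroups_train()` should return an object whose `.data` holds the raw posts and `.target` the newsgroup labels, which is enough to start a simple text classification experiment.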