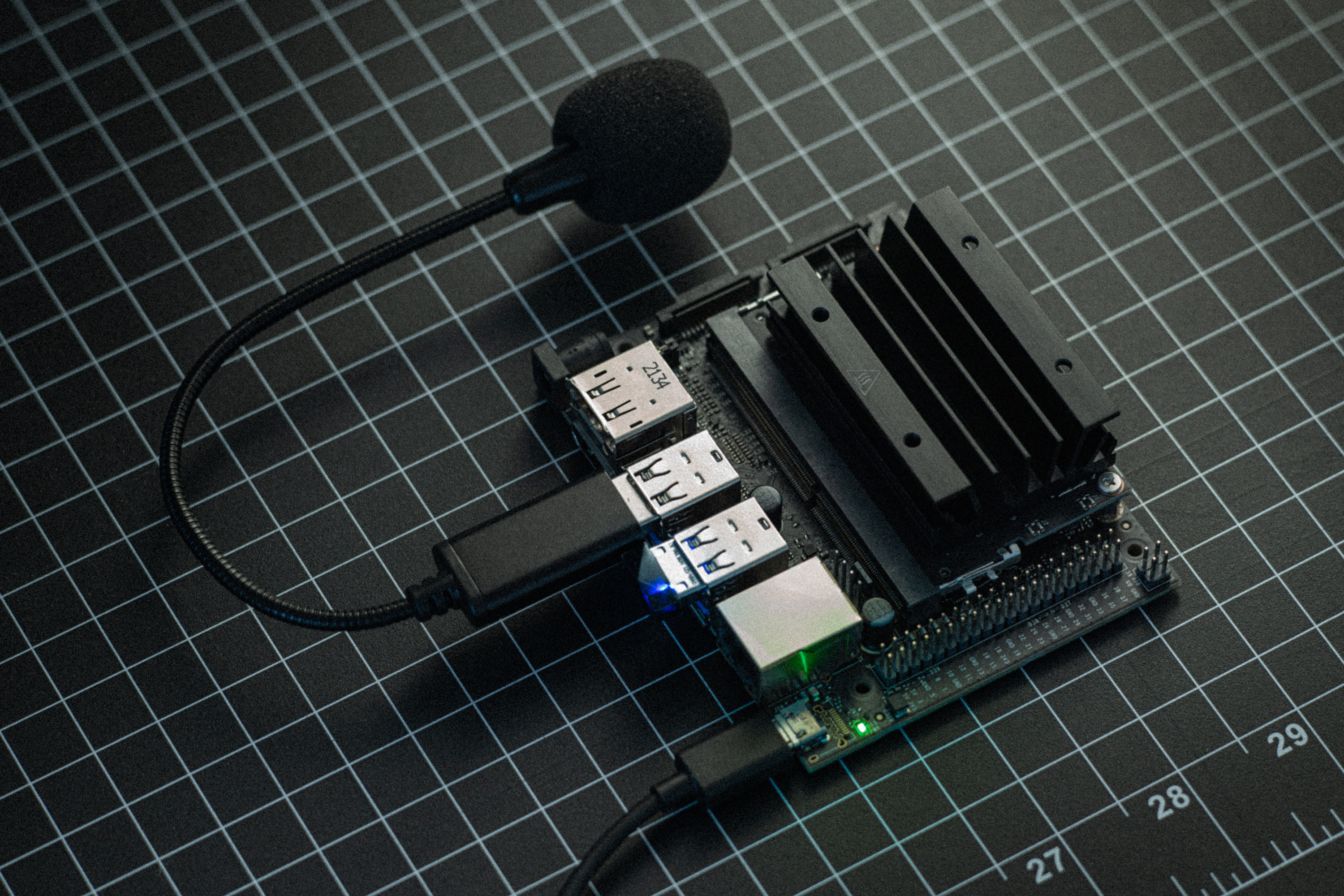

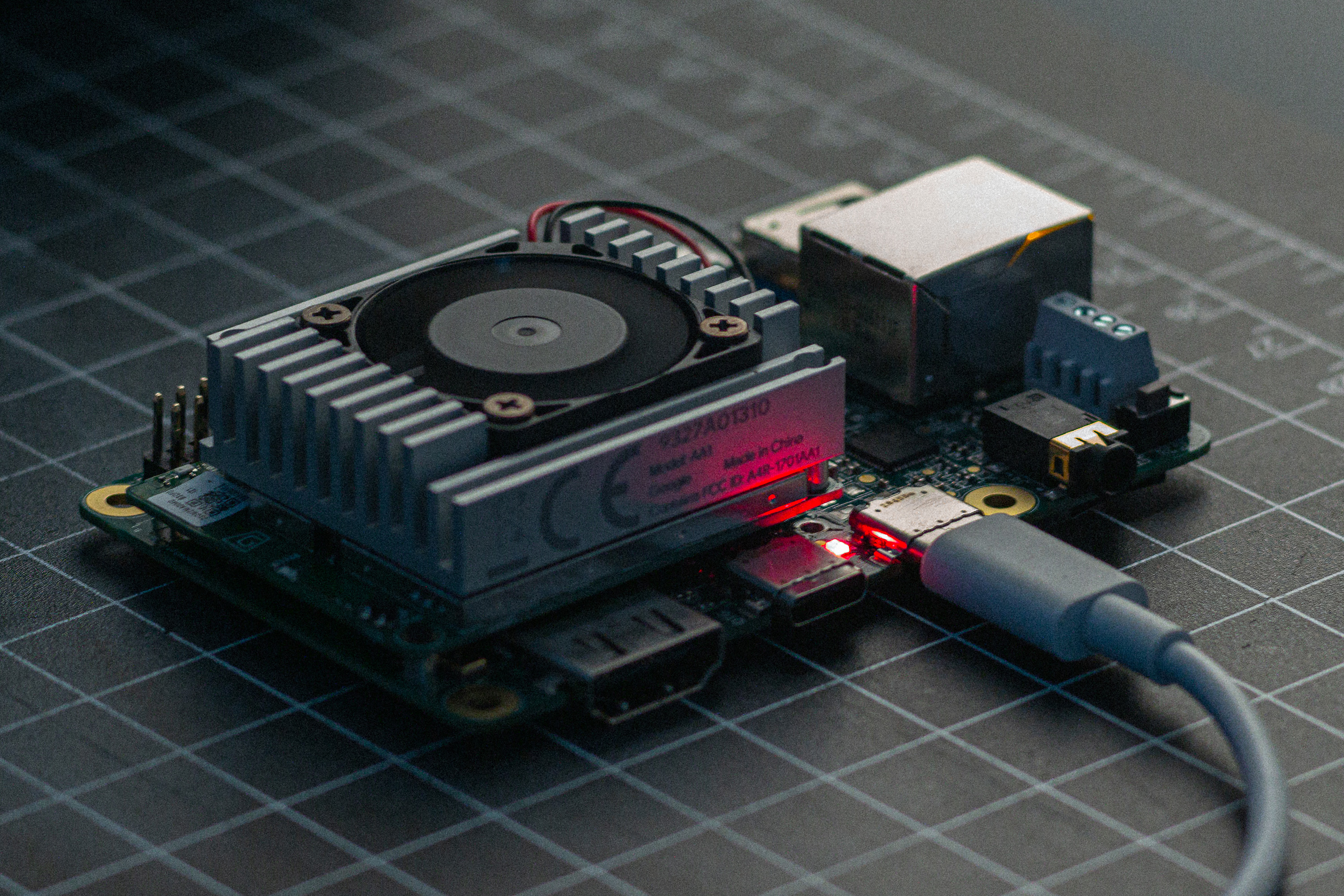

Porting OpenAI Whisper speech recognition to edge devices with hardware ML accelerators, enabling always-on live voice transcription. Current work includes Jetson Nano and Coral Edge TPU.

| Part | Price (2023) |
|---|---|
| NVIDIA Jetson Nano Developer Kit (4GB) | $149.00 |
| ChanGeek CGS-M1 USB Microphone | $16.99 |
| Noctua NF-A4x10 5V Fan (or similar, recommended) | $13.95 |
| D-Link DWA-181 Wi-Fi Adapter (or similar, optional) | $21.94 |

The `base.en` version of Whisper seems to work best for the Jetson Nano:

- `base` is the largest model size that fits into the 4GB of memory without modification.
- Inference performance with `base` is ~10x real-time in isolation and ~1x real-time while recording concurrently.
- Using the English-only `.en` version further improves the word error rate (WER <5% on LibriSpeech test-clean).
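To make the speed figures above concrete, here is a small arithmetic sketch. It assumes that "Nx real-time" means audio duration divided by processing time, and it uses a 10-second chunk (the `--chunk_seconds` default listed further down). The helper names `processing_seconds` and `slack_seconds` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: turn a "Nx real-time" speed factor into seconds per audio chunk.
# Assumption: speed factor = audio duration / processing time.

# processing_seconds AUDIO_SECONDS SPEED_FACTOR
# Prints how long transcribing the audio takes at the given speed factor.
processing_seconds() {
  awk -v audio="$1" -v speed="$2" 'BEGIN { printf "%.1f\n", audio / speed }'
}

# slack_seconds AUDIO_SECONDS SPEED_FACTOR
# Prints the time left over before the next chunk of the same length has been
# recorded (zero means transcription only just keeps pace, negative means it falls behind).
slack_seconds() {
  awk -v audio="$1" -v speed="$2" 'BEGIN { printf "%.1f\n", audio - audio / speed }'
}

chunk=10  # default --chunk_seconds
echo "isolated (~10x):  $(processing_seconds "$chunk" 10) s busy, $(slack_seconds "$chunk" 10) s slack"
echo "concurrent (~1x): $(processing_seconds "$chunk" 1) s busy, $(slack_seconds "$chunk" 1) s slack"
```

With these numbers the script reports 1.0 s busy and 9.0 s slack in isolation, but 10.0 s busy and 0.0 s slack while recording concurrently: at ~1x real-time there is no headroom left per chunk.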

Dilemma:

- Whisper and some of its dependencies require Python 3.8.

- The latest supported version of JetPack for Jetson Nano is 4.6.3, which is on Python 3.6.

- No easy way to update Python to 3.8 without losing CUDA support for PyTorch.
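A quick way to see the dilemma on a given machine is to compare its Python version against the 3.8 minimum. This is an illustrative sketch, not part of the repository: `version_ge` and `python_version` are hypothetical helper names, and the comparison relies on GNU `sort -V` (version sort).

```bash
#!/usr/bin/env bash
# Sketch: check whether a Python version meets Whisper's 3.8 minimum.
# Relies on GNU coreutils `sort -V`, which orders version strings numerically.

# version_ge A B: exit status 0 if version A >= version B.
version_ge() {
  # The smaller version sorts first; A >= B exactly when B comes out on top.
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# python_version: prints e.g. "3.6.9" from `python3 --version` ("Python 3.6.9").
python_version() {
  python3 --version 2>&1 | awk '{print $2}'
}

required=3.8
for v in 3.6 3.8.10; do
  if version_ge "$v" "$required"; then
    echo "Python $v: OK for Whisper"
  else
    echo "Python $v: older than $required"
  fi
done
```

On the Jetson Nano, `version_ge "$(python_version)" 3.8` would be expected to fail with the stock Python 3.6 from JetPack 4.6.3, which is the constraint the container-based workaround sidesteps.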

Workaround:

First, follow the developer kit setup instructions, connect the Wi-Fi adapter and the microphone to USB, and ideally install a fan. (Also plugging in an Ethernet cable helps to make the downloads faster.) Then, get a shell on the Jetson Nano:

```bash
ssh user@jetson-nano.local
```

We will use NVIDIA Docker containers to run inference. Get the source code and build the custom container:

```bash
git clone https://github.com/maxbbraun/whisper-edge.git
bash whisper-edge/build.sh
```

Launch inference:

```bash
bash whisper-edge/run.sh
```

You should see console output similar to this:

```
I0317 00:42:23.979984 547488051216 stream.py:75] Loading model "base.en"...
100%|#######################################| 139M/139M [00:30<00:00, 4.71MiB/s]
I0317 00:43:14.232425 547488051216 stream.py:79] Warming model up...
I0317 00:43:55.164070 547488051216 stream.py:86] Starting stream...
I0317 00:44:19.775566 547488051216 stream.py:51]
I0317 00:44:22.046195 547488051216 stream.py:51] Open AI's mission is to ensure that artificial general intelligence
I0317 00:44:31.353919 547488051216 stream.py:51] benefits all of humanity.
I0317 00:44:49.219501 547488051216 stream.py:51]
```

The `stream.py` script running in the container accepts flags for different configurations:

```bash
bash whisper-edge/run.sh --help
```

```
USAGE: stream.py [flags]
flags:

stream.py:
  --channel_index: The index of the channel to use for transcription.
    (default: '0')
    (an integer)
  --chunk_seconds: The length in seconds of each recorded chunk of audio.
    (default: '10')
    (an integer)
  --input_device: The input device used to record audio.
    (default: 'plughw:2,0')
  --language: The language to use or empty to auto-detect.
    (default: 'en')
  --latency: The latency of the recording stream.
    (default: 'low')
  --model_name: The version of the OpenAI Whisper model to use.
    (default: 'base.en')
  --num_channels: The number of channels of the recorded audio.
    (default: '1')
    (an integer)
  --sample_rate: The sample rate of the recorded audio.
    (default: '16000')
    (an integer)

Try --helpfull to get a list of all flags.
```

To see if the microphone is working properly, use `alsa-utils`:

```bash
sudo apt-get -y install alsa-utils

# Is the USB device connected?
lsusb

# Is the correct recording device selected?
arecord -l

# Is the gain set properly?
alsamixer

# Does a test recording work?
arecord --format=S16_LE --duration=5 --rate=16000 --channels=1 --device=plughw:2,0 test.wav
```

See the corresponding issue about what supporting the Google Coral Edge TPU may look like.
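Coming back to the microphone checks: the default `--input_device` and the test recording both use `plughw:2,0`, that is, ALSA card 2, device 0. If `arecord -l` lists the USB microphone under different numbers, the sketch below derives the matching string. It assumes the usual `card N: ..., device M: ...` line format printed by `arecord -l`; the `plughw_for` helper and the sample output are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: turn `arecord -l` output into a "plughw:CARD,DEVICE" string.
# Assumption: the microphone is the first capture line matching PATTERN (a regex).

# plughw_for PATTERN: reads `arecord -l` output on stdin, prints e.g. "plughw:2,0".
plughw_for() {
  local pattern="$1"
  grep -E "^card [0-9]+:.*${pattern}" \
    | head -n1 \
    | sed -E 's/^card ([0-9]+):.*, device ([0-9]+):.*/plughw:\1,\2/'
}

# Hypothetical sample output; on the device, pipe in `arecord -l` instead.
sample='**** List of CAPTURE Hardware Devices ****
card 2: Device [USB PnP Sound Device], device 0: USB Audio [USB Audio]
  Subdevices: 1/1
  Subdevice #0: subdevice #0'

printf '%s\n' "$sample" | plughw_for 'USB'
```

Assuming `run.sh` forwards flags to `stream.py` the same way it does for `--help`, the result could be passed along with `bash whisper-edge/run.sh --input_device="$(arecord -l | plughw_for USB)"` once the function is defined in the current shell.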