This is a mini project inspired by the Natural Language Processing subject at Unimelb. It follows a funnel approach: sentences relevant to the input claim are retrieved, then filtered, and finally classified by whether they support or refute your claim.

You may access the demo here.

The code repo for the React front end may be accessed here if you're interested.

The system verifies your claim in 3 simple steps:

- Your input claim is used to return relevant documents from a search engine holding Wikipedia pages.

- The returned documents are further narrowed down using Entity Linking.

- Each filtered sentence is classified as either supporting or refuting the claim.

The components behind these 3 steps are the Search Engine, Entity Linking and Natural Language Inference, respectively.
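The funnel can be sketched as three functions chained together. This is only an illustration, not the project's code: `verify_claim` and the `search`, `link` and `infer` callables are hypothetical names, and the lambdas in the usage example are toy stand-ins for the real components.

```python
def verify_claim(claim, search, link, infer, top_k=5):
    """Run a claim through the three-stage funnel.

    search(claim, top_k) -> candidate sentences (step 1, Search Engine)
    link(claim, sentences) -> narrowed sentences (step 2, Entity Linking)
    infer(claim, sentence) -> classifier score (step 3, NLI)
    All three callables are hypothetical interfaces for this sketch.
    """
    candidates = search(claim, top_k)
    filtered = link(claim, candidates)
    return [(sentence, infer(claim, sentence)) for sentence in filtered]


# Toy stand-ins so the sketch runs end to end.
results = verify_claim(
    "Paris is in Germany.",
    search=lambda claim, k: ["Paris is in France.", "Cats are mammals."][:k],
    link=lambda claim, sents: [s for s in sents if "Paris" in s],
    infer=lambda claim, sent: 0.1,  # placeholder score, not a real prediction
)
```

Each stage only sees what the previous one let through, which is why the expensive inference step runs on a small number of sentences.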

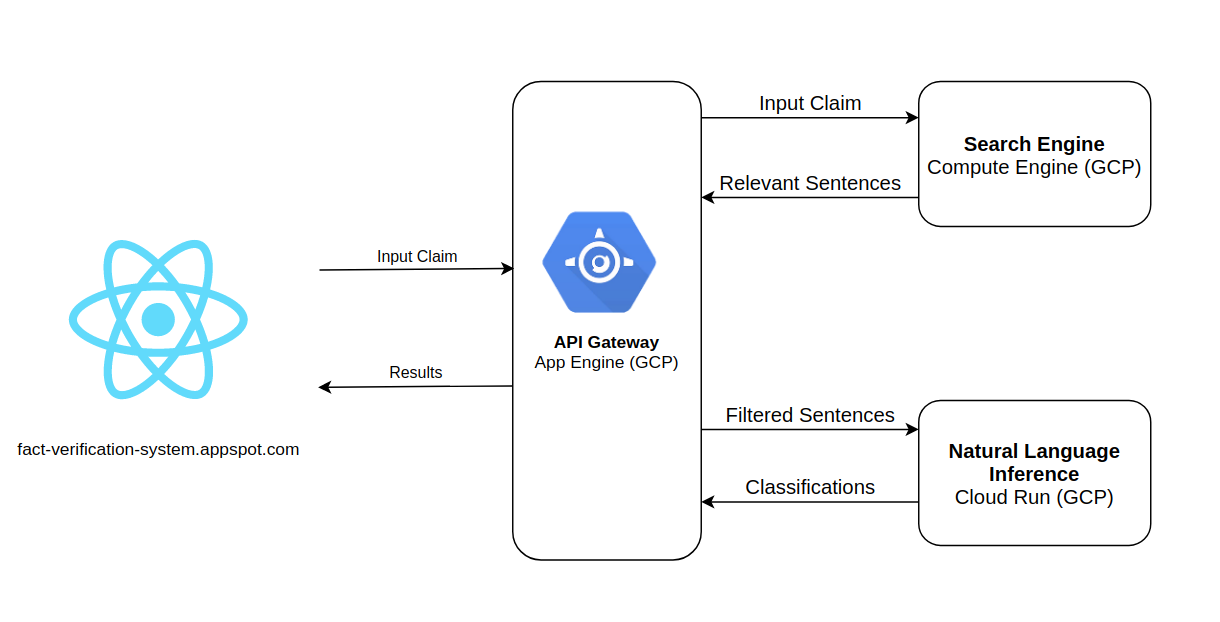

Architecture:

Key Technologies Used:

PyTorch, React, Flask and GCP (App Engine, Compute Engine, Cloud Run)

The search engine uses the BM25 ranking algorithm to retrieve the relevant documents that feed into step 2.

The Okapi BM25 algorithm scores each document in the inverted index against the query, building on the classic idea of TF-IDF. The implementation used in this project is sourced here.
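To make the scoring concrete, here is a minimal pure-Python sketch of Okapi BM25. It is not the implementation this project uses; the function name `bm25_scores`, the parameter values `k1=1.5` and `b=0.75`, the smoothed IDF variant and the tiny corpus are all assumptions chosen for illustration.

```python
import math
from collections import Counter


def bm25_scores(query_tokens, corpus_tokens, k1=1.5, b=0.75):
    """Score every tokenised document against the query with Okapi BM25."""
    n_docs = len(corpus_tokens)
    avg_len = sum(len(doc) for doc in corpus_tokens) / n_docs
    # Document frequency: number of documents that contain each term.
    doc_freq = Counter(term for doc in corpus_tokens for term in set(doc))

    scores = []
    for doc in corpus_tokens:
        term_freq = Counter(doc)
        score = 0.0
        for term in query_tokens:
            tf = term_freq.get(term, 0)
            if tf == 0:
                continue
            df = doc_freq[term]
            # Smoothed IDF (one common variant); rarer terms weigh more.
            idf = math.log((n_docs - df + 0.5) / (df + 0.5) + 1.0)
            # Term frequency saturates via k1; b normalises by document length.
            length_norm = 1 - b + b * len(doc) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores


corpus = [
    "the eiffel tower is in paris".split(),
    "paris is the capital of france".split(),
    "bert is a language model".split(),
]
scores = bm25_scores("eiffel tower paris".split(), corpus)
# Document indices ordered from most to least relevant.
ranked = sorted(range(len(corpus)), key=lambda i: scores[i], reverse=True)
```

The first document matches all three query terms and ranks first, while the third shares no terms with the query and scores zero.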

A random sample of Wikipedia (~250,000 pages) is stored in an in-memory database. The subset is used only to reduce the cost of hosting it on GCP.

Entity linking is the idea of mapping words of interest in a sentence (e.g. a person's name or a location) to a target knowledge base, which in this case is the input claim. A returned sentence must share at least one word of interest with your input claim to progress to step 3. Words of interest are extracted using the NER tagger from spaCy.
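The overlap rule can be sketched as a simple set intersection. In the project the entities come from spaCy's NER tagger (for example via `doc.ents`); to keep this sketch self-contained, `naive_entities` is a hypothetical stand-in that just collects capitalised words, and `filter_by_entity_overlap` and `STOPWORDS` are likewise illustrative names.

```python
import re

# Capitalised function words that should not count as entities (assumed list).
STOPWORDS = {"the", "a", "an", "it", "he", "she"}


def naive_entities(text):
    """Hypothetical stand-in for an NER tagger: capitalised words, lower-cased."""
    words = re.findall(r"\b[A-Z][a-z]+\b", text)
    return {word.lower() for word in words} - STOPWORDS


def filter_by_entity_overlap(claim, sentences, extract=naive_entities):
    """Keep sentences that share at least one entity with the claim."""
    claim_entities = extract(claim)
    return [s for s in sentences if claim_entities & extract(s)]


claim = "The Eiffel Tower is located in Berlin."
candidates = [
    "The Eiffel Tower is a wrought-iron lattice tower in Paris.",
    "Berlin is the capital of Germany.",
    "The tower was completed in 1889.",
]
kept = filter_by_entity_overlap(claim, candidates)
```

Here the first two candidates survive because they share "Eiffel"/"Tower" and "Berlin" with the claim, while the third contains no matching entity and is dropped. Swapping in a real tagger only requires passing a different `extract` function.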

A binary classifier is trained on ~25 million sentences from Wikipedia. Sentences are first converted into BERT embeddings. BERT is a language model pretrained by Google on English Wikipedia and BooksCorpus using the Transformer architecture. This project's implementation extends the `BertModel` PyTorch module from Hugging Face's transformers library.

The extension is as follows:

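```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from transformers import BertModel


class BERTNli(BertModel):
    """Fine-Tuned BERT model for natural language inference."""

    def __init__(self, config):
        super().__init__(config)
        # Classification head on top of the 768-dim pooled output (BERT-base).
        self.fc1 = nn.Linear(768, 512)
        self.fc2 = nn.Linear(512, 256)
        self.fc3 = nn.Linear(256, 1)

    def forward(self, input_ids=None, token_type_ids=None):
        outputs = super().forward(input_ids=input_ids,
                                  token_type_ids=token_type_ids)
        # Index 1 is the pooled output; integer indexing works whether the
        # library returns a tuple or a ModelOutput (see huggingface's doc).
        pooled = outputs[1]
        x = F.relu(self.fc1(pooled))
        x = F.relu(self.fc2(x))
        # Sigmoid yields one probability per input for the binary decision.
        return torch.sigmoid(self.fc3(x))
```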

- rank-bm25

- spacy

- transformers (huggingface)