Author: Roberto Zegers R.

This ROS package implements a robot localization system using AprilTag markers. The tags used correspond to the family tag36h11, which has 587 different tags.

Packages on which this package depends:

First install AprilTag and its ROS wrapper by cloning the next repository into the /src directory of your catkin_workspace:

$ git clone https://github.com/RIVeR-Lab/apriltags_ros.git

Then clone this repository:

$ git clone https://github.com/rfzeg/apriltag_robot_pose.git

Compile the package with catkin_make and source your workspace.

Note:

The package requires to spawn a robot that publishes camera images into ROS. The standard for cameras on ROS is to publish to topics such as:

- /image_raw - an unmodified camera image

- /camera_info - information about the camera calibration

Start all neccesary components by executing the included bash script (xterm required):

./start_demo.sh

Or alternatively start the demo launching every node manually:

- Fire up Gazebo world that includes AprilTag markers, e.g.:

roslaunch plywood_mazes maze_3_6x6.launch - In a new terminal load a robot URDF file to the parameter server, e.g.:

$ roslaunch udacity_bot robot_description.launch - And spawn the robot model, e.g.:

$ roslaunch udacity_bot spawn_udacity_bot.launch - Launch the noisy_odom node:

$ rosrun apriltag_robot_pose noisy_odom.py - In a new window kick off the static transform broadcaster node:

$ roslaunch apriltag_robot_pose static_transforms.launch - Then launch the AprilTag detector node to detect AR markers in the camera image:

$ roslaunch apriltag_robot_pose apriltag_detector.launch - Next execute the robot pose estimator node:

$ rosrun apriltag_robot_pose robot_pose.py - In order to see the robot pose estimator node in action open RViz:

$ roslaunch apriltag_robot_pose rviz.launch - Launch the map_server node to see the map in RViz, e.g.:

$ roslaunch plywood_mazes map_server_maze_3.launch

If you want to manually control the robot, open a new terminal window and run:

$ rosrun rqt_robot_steering rqt_robot_steering

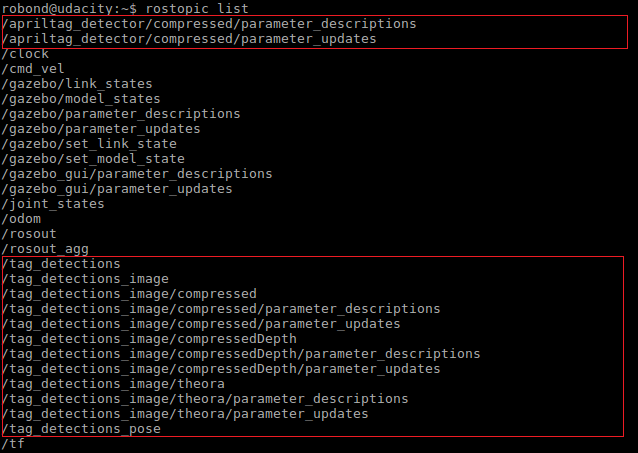

On a new terminal run this command to see the existing topics:

$ rostopic list

If everything is correct, a list of topics published by the apriltag detector node should appear:

Fig.1 The available topics shown by using the rostopic list command

Then check that AprilTags are being detected by placing the robot's camera in front of a tag and running:

$ rostopic echo /tag_detections

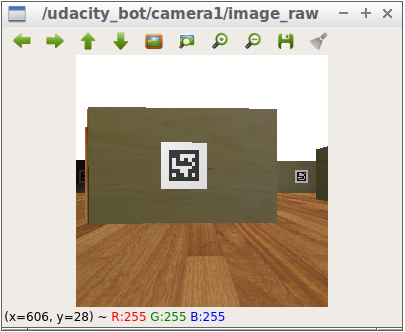

To view raw images, for instance on the topic /udacity_bot/camera1/image_raw, use:

$ rosrun image_view image_view image:=/udacity_bot/camera1/image_raw

To check that the parameters defined in the tag_sizes.yaml file were loaded into the param server type:

$ rosparam get /apriltag_detector/tag_descriptions

To look at the numeric values of a transform between the map frame and any specific AR marker tag:

$ rosrun tf tf_echo map tag_0

or visualize the complete tf tree using RQT:

$ rosrun rqt_tf_tree rqt_tf_tree

If you would like the apriltag_robot_pose node to display output at the DEBUG verbosity level use:

$ rosservice call /apriltag_robot_pose/set_logger_level "{logger: 'rosout', level: 'debug'}"

- Gazebo is crashing as it is starting up: Usually, it is enough to run it again (probably several times).

- ImportError No module named apriltags.msg: When using custom messages, make sure the packages containing them have been compiled.

- ERROR: cannot launch node of type [map_server/map_server]: map_server

You have to install map_server first:

$ sudo apt install ros-kinetic-map-server

This package has only been tested on Ubuntu 16.04 LTS with ROS Kinetic and Gazebo 7.15.

- Averaging quaternions is not straightforward. At the moment the robot's estimated orientation, when several AR markers are detected, is the orientation of the first detected marker. One could do a SLERP to average two quaternions from two (closest) detected markers for a better robot's orientation estimation. See: danielduberg/safe_flight#1

- http://wiki.ros.org/tf2/Tutorials/Writing%20a%20tf2%20broadcaster%20%28Python%29

- The general relationship between the map, odom and base_link frames is already described in Coordinate Frames for Mobile Platforms.

- Average of Quaternions

- https://github.com/lucien386/DJISummerCamp2019/blob/master/ROS_Ws/src/sc_localization/odom_update_node.cpp