This repository contains code and data for the short paper "Template-guided Clarifying Question Generation for Web Search Clarification" (CIKM'2021).

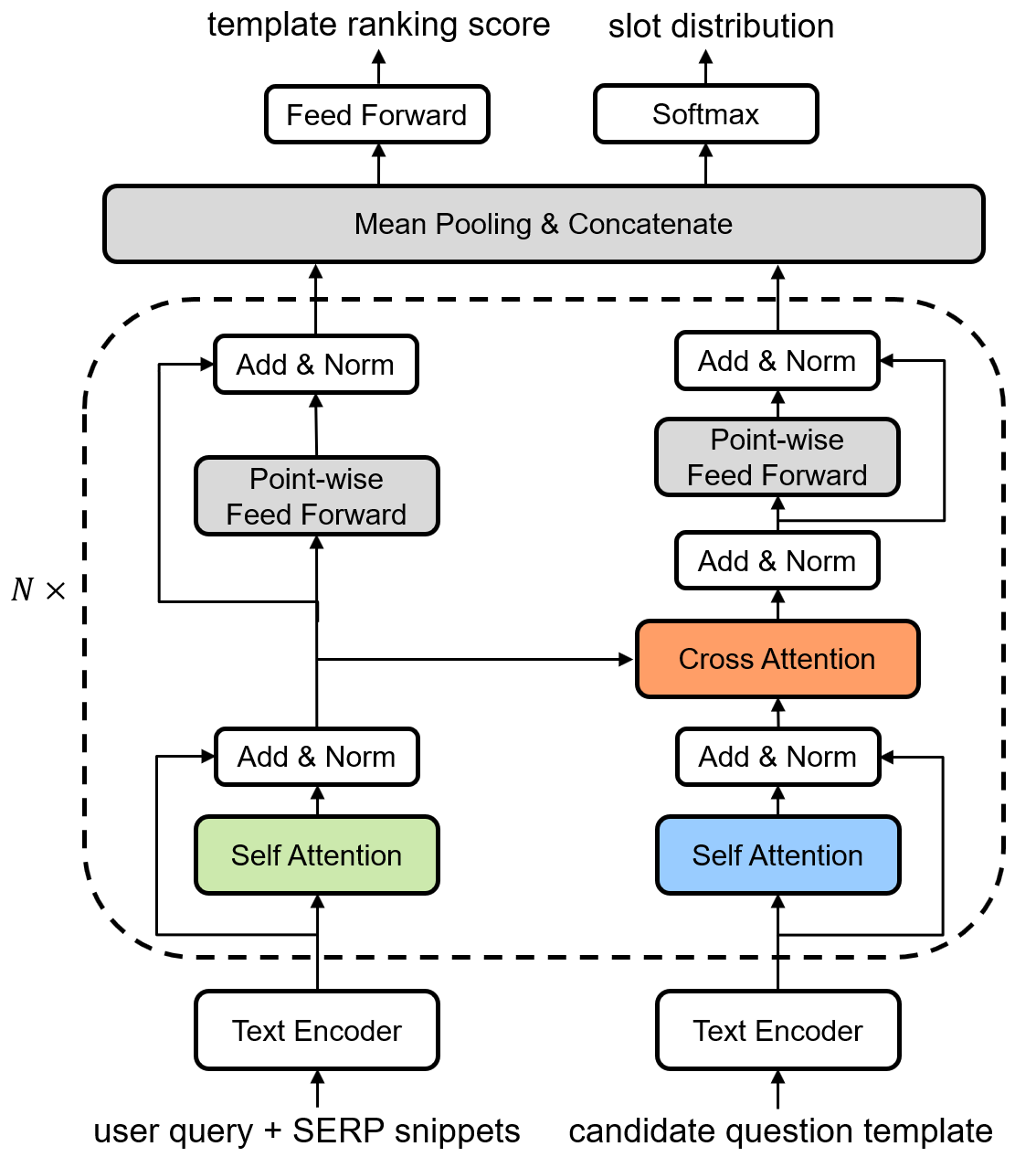

In this paper, we frame the task of asking clarifying questions for Web search clarification as the unified problem of question template selection and slot generation, with the objective of jointly learning to select the question template from a list of template candidates and fill in the question slot from a slot vocabulary. We investigate a simple yet effective Template-Guided Clarifying Question generation model (TG-ClariQ), the architecture of which is shown as below:
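
To make the task framing concrete, here is a minimal sketch of how a clarifying question can be assembled once a template has been selected and a slot has been generated. The templates, the `<SLOT>` placeholder, the scores, and the function name `build_question` are all hypothetical illustrations; they are not taken from the template list, slot vocabulary, or model code in this repository.

```python
# Illustrative only: these templates and the "<SLOT>" placeholder are
# hypothetical, not the template candidates used by TG-ClariQ.
TEMPLATES = [
    "What do you want to know about <SLOT>?",
    "Which <SLOT> do you mean?",
    "What would you like to know?",  # a template without a slot
]


def build_question(template_scores, slot, templates=TEMPLATES):
    """Select the highest-scoring template and fill in its slot, if any."""
    best = max(range(len(templates)), key=lambda i: template_scores[i])
    return templates[best].replace("<SLOT>", slot)


# Hypothetical scores, standing in for the output of a template selector.
print(build_question([0.7, 0.2, 0.1], "this medication"))
# prints: What do you want to know about this medication?
```

In the actual model the two decisions are learned jointly; the sketch only shows how their outputs combine into a question string.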

The implementation is based on Python 3. To install the dependencies, please run:

pip install -r requirements.txt

Note: The RankLib.jar used by the baseline models (RankNet and LambdaMART) requires a Java runtime environment.
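
As a quick sanity check before running the baselines, the following sketch tests whether a `java` executable is on the `PATH`. The helper name `java_available` is hypothetical, and the check does not verify which Java version RankLib needs.

```python
import shutil


def java_available():
    """Return True if a `java` executable can be found on the PATH."""
    return shutil.which("java") is not None


if java_available():
    print("Java runtime found.")
else:
    print("No `java` executable on PATH; RankLib.jar will not run.")
```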

The pre-trained BERT model can be downloaded from its Hugging Face model card: download config.json, pytorch_model.bin, and vocab.txt, and place these files in the folder pretrain/bert/base-uncased/. The pre-trained GloVe word vectors can be downloaded from here; unzip the archive to obtain glove.42B.300d.txt and place it in the folder pretrain/glove/.
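
The expected layout can be verified with a short script such as the sketch below. The file paths come from the instructions above; the helper name `missing_pretrained_files` is hypothetical, and the script assumes it is run from the repository root.

```python
from pathlib import Path

# Paths listed in the setup instructions, relative to the repository root.
EXPECTED_FILES = [
    "pretrain/bert/base-uncased/config.json",
    "pretrain/bert/base-uncased/pytorch_model.bin",
    "pretrain/bert/base-uncased/vocab.txt",
    "pretrain/glove/glove.42B.300d.txt",
]


def missing_pretrained_files(repo_root="."):
    """Return the expected files that are not present under repo_root."""
    root = Path(repo_root)
    return [p for p in EXPECTED_FILES if not (root / p).is_file()]


missing = missing_pretrained_files()
if missing:
    print("Missing files:")
    for path in missing:
        print("  " + path)
else:
    print("All pre-trained files are in place.")
```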

The preprocessed MIMICS dataset is provided in the data/ folder; MIMICS-train.zip must be unzipped before use. For more details about the raw MIMICS data collection, please refer to the Microsoft MIMICS repo.
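
If a command-line unzip tool is not available, the archive can be extracted with Python's standard `zipfile` module, as in this sketch. It assumes the script is run from the repository root and that the contents should be extracted directly into data/; the helper name `unzip_in_place` is hypothetical.

```python
import zipfile
from pathlib import Path


def unzip_in_place(zip_path):
    """Extract an archive into its own folder and return the member names."""
    zip_path = Path(zip_path)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(zip_path.parent)
        return archive.namelist()


archive_path = Path("data/MIMICS-train.zip")
if archive_path.is_file():
    for name in unzip_in_place(archive_path):
        print("extracted " + name)
else:
    print(f"{archive_path} not found; run this from the repository root.")
```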

To train the model with the LSTM-based encoder, first set the parameters in the script run_train_lstm.sh, then run:

sh run_train_lstm.sh

To train the model with the BERT-based encoder, first set the parameters in the script run_train_bert.sh, then run:

sh run_train_bert.sh

For testing, first set the parameters in the script run_test.sh, then run:

sh run_test.sh

For evaluation, first set the parameters in the script run_eval.sh. Note that --eval_metric must be one of ACC, MRR, BLEU, or Entity-F1, and --eval_file must point to the generated output file of the model being evaluated. Then run:

sh run_eval.sh
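
For readers unfamiliar with the ranking metrics, the sketch below computes ACC and MRR using their standard textbook definitions on a toy example. It is not the code behind run_eval.sh, which may differ in input format and edge-case handling; the function names and the toy data are hypothetical.

```python
def accuracy(ranked_lists, gold_labels):
    """Fraction of examples whose top-ranked candidate is the gold label."""
    hits = sum(
        1
        for ranked, gold in zip(ranked_lists, gold_labels)
        if ranked and ranked[0] == gold
    )
    return hits / len(gold_labels)


def mean_reciprocal_rank(ranked_lists, gold_labels):
    """Average of 1/rank of the gold label (0 if it is not in the list)."""
    total = 0.0
    for ranked, gold in zip(ranked_lists, gold_labels):
        if gold in ranked:
            total += 1.0 / (ranked.index(gold) + 1)
    return total / len(gold_labels)


# Toy data: the gold template "t1" is ranked 1st, 2nd, and 3rd.
ranked = [["t1", "t2", "t3"], ["t2", "t1", "t3"], ["t3", "t2", "t1"]]
gold = ["t1", "t1", "t1"]

print(f"ACC = {accuracy(ranked, gold):.3f}")              # ACC = 0.333
print(f"MRR = {mean_reciprocal_rank(ranked, gold):.3f}")  # MRR = 0.611
```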

Our implementation of RankNet and LambdaMART is based on RankLib, and the Seq2Seq baseline is based on OpenNMT. We thank Hugging Face Transformers for the pretrained models and high-quality code.

If you find our code helpful in your work, please cite our paper:

@inproceedings{wang2021template,
  title = {Template-guided Clarifying Question Generation for Web Search Clarification},
  author = {Wang, Jian and Li, Wenjie},
  booktitle = {Proceedings of the 30th ACM International Conference on Information and Knowledge Management (CIKM'21)},
  year = {2021},
  pages = {3468--3472},
}