This proxy records your chats so you can build datasets from your conversations with any chat LLM served through an OpenAI-compatible API.

- Maintains OpenAI API compatibility

- Supports both streaming and non-streaming responses

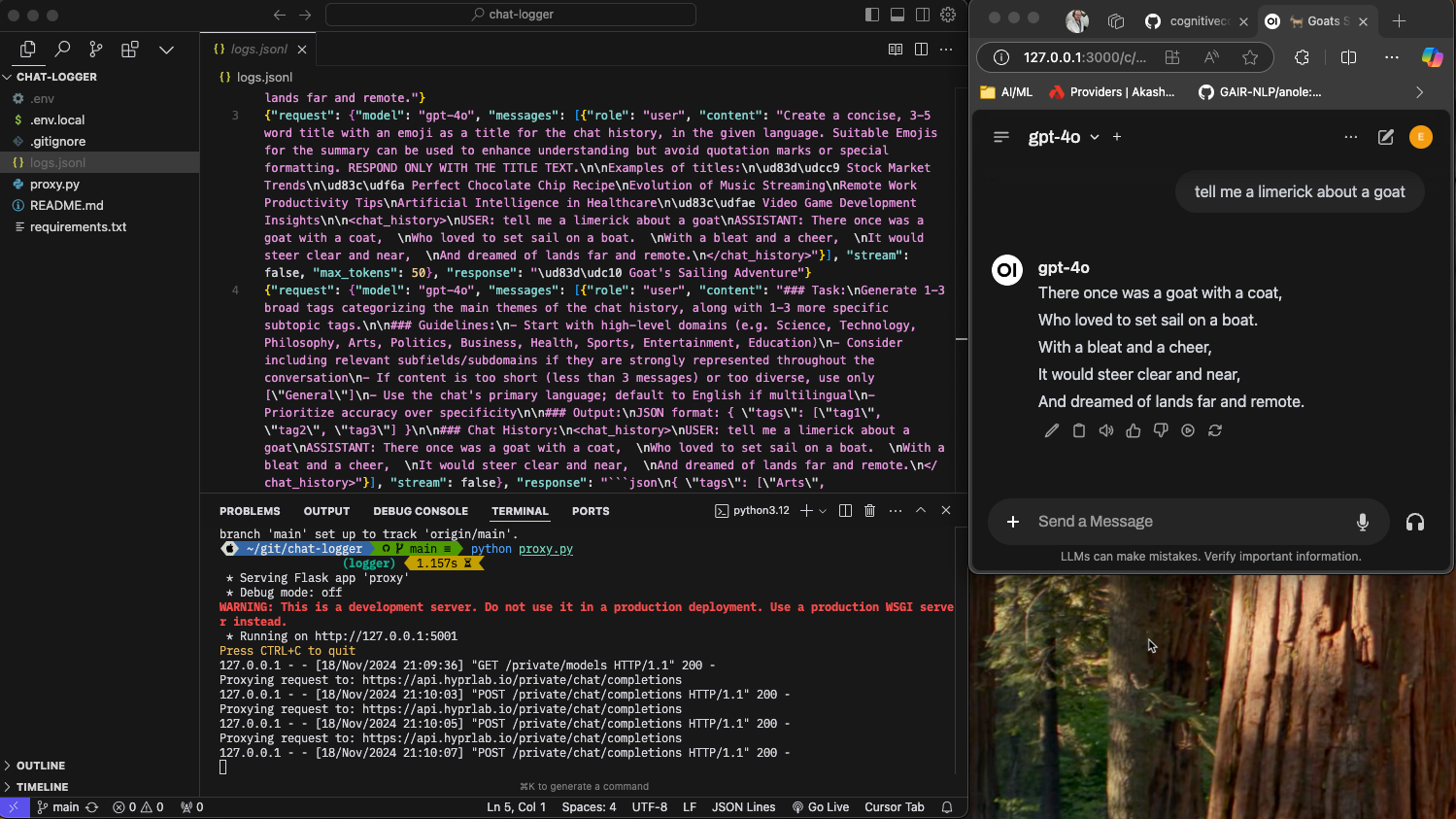

- Automatic request logging to JSONL format

- Configurable model selection

- Error handling with detailed responses

- Thread-safe request/response logging

- Clone the repository

- Install dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Create a `.env` file based on `.env.local`:

  ```
  OPENAI_API_KEY=your_api_key_here
  OPENAI_ENDPOINT=https://api.openai.com/v1
  OPENAI_MODEL=gpt-4
  ```

- Start the server:

  ```bash
  python proxy.py
  ```

  The server runs on port 5001 by default (configurable via the `PORT` environment variable).
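The settings in `.env` end up as environment variables. A minimal sketch of how they could be loaded and applied, assuming plain dictionary-style lookups; `ProxyConfig`, `load_config` and `apply_default_model` are hypothetical names, not taken from `proxy.py`. It also assumes `OPENAI_MODEL` is only filled in when a request omits `model` (the curl example below sends no `model` field), and that a missing key or endpoint is treated as an error.

```python
import os
from dataclasses import dataclass
from typing import Any, Dict, Mapping, Optional


@dataclass(frozen=True)
class ProxyConfig:
    """Hypothetical container for the proxy's documented settings."""
    api_key: str
    endpoint: str
    model: Optional[str]
    port: int = 5001  # documented default port


def load_config(env: Mapping[str, str] = os.environ) -> ProxyConfig:
    """Build a ProxyConfig from environment variables (sketch)."""
    api_key = env.get("OPENAI_API_KEY")
    endpoint = env.get("OPENAI_ENDPOINT")
    if not api_key or not endpoint:
        # Assumption: requests cannot be forwarded without these two values.
        raise ValueError("OPENAI_API_KEY and OPENAI_ENDPOINT must be set")
    return ProxyConfig(
        api_key=api_key,
        endpoint=endpoint.rstrip("/"),  # drop a trailing slash so paths can be appended
        model=env.get("OPENAI_MODEL"),
        port=int(env.get("PORT", "5001")),
    )


def apply_default_model(body: Dict[str, Any], config: ProxyConfig) -> Dict[str, Any]:
    """Return a copy of a chat request, adding the default model only if `model` is absent."""
    if "model" in body or config.model is None:
        return dict(body)
    return {**body, "model": config.model}


if __name__ == "__main__":
    cfg = load_config({
        "OPENAI_API_KEY": "your_api_key_here",
        "OPENAI_ENDPOINT": "https://api.openai.com/v1/",
        "OPENAI_MODEL": "gpt-4",
    })
    print(cfg.port)  # 5001 (PORT not set)
    print(apply_default_model({"messages": []}, cfg)["model"])  # gpt-4
```

Passing the mapping in explicitly (instead of reading `os.environ` inside the function) keeps the sketch easy to test without touching the real environment.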

Use the proxy exactly as you would use the OpenAI API, but point your requests to your local server:

```bash
curl http://localhost:5001/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer your-api-key" \
  -d '{
    "messages": [{"role": "user", "content": "Hello!"}],
    "stream": true
  }'
```

The proxy is configured through these environment variables:

- `OPENAI_API_KEY`: Your OpenAI API key

- `OPENAI_ENDPOINT`: The base URL for the OpenAI API

- `OPENAI_MODEL`: The default model to use for requests

- `PORT`: Server port (default: 5001)
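The curl example sets `"stream": true`. In the OpenAI streaming format, the response is a series of server-sent events: each `data:` line carries a JSON chunk whose `choices[0].delta` may hold a `content` fragment, and the stream ends with `data: [DONE]`. A minimal client-side sketch for reassembling the reply, using only the standard library; `collect_stream_text` is a hypothetical helper, and it assumes the proxy forwards these chunks unchanged.

```python
import json
from typing import Iterable, List


def collect_stream_text(lines: Iterable[str]) -> str:
    """Join the assistant text carried by OpenAI-style streaming chunks."""
    parts: List[str] = []
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # ignore blank separators and non-data SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            if choice.get("index", 0) != 0:
                continue  # keep only the first completion if n > 1
            content = (choice.get("delta") or {}).get("content")
            if content:
                parts.append(content)
    return "".join(parts)


if __name__ == "__main__":
    sample = [
        'data: {"choices": [{"index": 0, "delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"index": 0, "delta": {"content": "Hel"}}]}',
        'data: {"choices": [{"index": 0, "delta": {"content": "lo!"}}]}',
        "data: [DONE]",
    ]
    print(collect_stream_text(sample))  # Hello!
```

Against the running proxy, the lines could come from iterating a `urllib.request.urlopen(...)` response and decoding each one with `.decode("utf-8")`.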

All requests and responses are automatically logged to `logs.jsonl` in JSONL format (one JSON object per line). Logging is thread-safe and captures both request and response content.
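Thread-safe JSONL logging typically means serializing each record to a single line and holding a lock while appending it, so concurrent requests cannot interleave partial lines. A minimal sketch of that pattern, plus a reader for turning the log back into records when building a dataset; `JsonlLogger`, `read_jsonl` and the `request`/`response` field names are hypothetical and may not match what `proxy.py` actually writes.

```python
import json
import threading
from pathlib import Path
from typing import Any, Dict, List, Union


class JsonlLogger:
    """Append one JSON record per line; a lock keeps concurrent writes from interleaving."""

    def __init__(self, path: Union[str, Path] = "logs.jsonl") -> None:
        self.path = Path(path)
        self._lock = threading.Lock()

    def log(self, request: Dict[str, Any], response: Any) -> None:
        # Hypothetical record layout: {"request": ..., "response": ...}.
        line = json.dumps({"request": request, "response": response}, ensure_ascii=False)
        # Serialization happens outside the lock; only the append is exclusive.
        with self._lock:
            with self.path.open("a", encoding="utf-8") as f:
                f.write(line + "\n")


def read_jsonl(path: Union[str, Path]) -> List[Dict[str, Any]]:
    """Load every non-empty line of a JSONL file, e.g. to build a dataset."""
    with Path(path).open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        logger = JsonlLogger(Path(tmp) / "logs.jsonl")
        threads = [
            threading.Thread(
                target=logger.log,
                args=({"messages": [{"role": "user", "content": f"hi {i}"}]}, {"content": f"reply {i}"}),
            )
            for i in range(8)
        ]
        for t in threads:
            t.start()
        for t in threads:
            t.join()
        print(len(read_jsonl(logger.path)))  # 8
```

A `threading.Lock` only coordinates threads inside one process; running several server processes against the same file would need a different mechanism, such as one log file per process.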

The proxy's error handling:

- Preserves original OpenAI error messages when available

- Provides detailed error information for debugging

- Maintains proper HTTP status codes
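OpenAI reports failures as a non-2xx status with a JSON body of the form `{"error": {"message": ..., "type": ...}}`. A minimal sketch of the pass-through behavior listed above: an upstream body that already has that shape is returned unchanged with the upstream status, and anything else is wrapped in the same shape with the raw text kept for debugging. `build_error_response`, the `proxy_error` type string, the `upstream_status` field and the 502 fallback for a missing upstream response are all assumptions, not the proxy's confirmed behavior.

```python
import json
from typing import Any, Dict, Optional, Tuple


def build_error_response(status: Optional[int], body: Optional[str]) -> Tuple[int, Dict[str, Any]]:
    """Turn an upstream failure into the (HTTP status, JSON body) returned to the client."""
    if status is None:
        # Assumption: no upstream response at all (e.g. a connection error) maps to 502 Bad Gateway.
        return 502, {"error": {"message": body or "Upstream request failed", "type": "proxy_error"}}
    try:
        parsed = json.loads(body or "")
    except json.JSONDecodeError:
        parsed = None
    if isinstance(parsed, dict) and isinstance(parsed.get("error"), dict):
        # Already an OpenAI-style error object: pass it and the status through unchanged.
        return status, parsed
    # Otherwise wrap the raw text in the same shape, keeping the upstream status for debugging.
    return status, {"error": {"message": body or "", "type": "proxy_error", "upstream_status": status}}


if __name__ == "__main__":
    print(build_error_response(401, '{"error": {"message": "Incorrect API key provided", "type": "invalid_request_error"}}'))
    print(build_error_response(None, "Connection refused"))
```

Keeping the `{"error": {...}}` shape for every failure means OpenAI client libraries pointed at the proxy can surface the message the same way they would for a direct API call.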

License: MIT