Code release for our papers: ICRA'23 Keypoint-GraspNet: Keypoint-based 6-DoF Grasp Generation from the Monocular RGB-D input and IROS'23 KGNv2: Separating Scale and Pose Prediction for Keypoint-based 6-DoF Grasp Synthesis on RGB-D input.

Please follow INSTALL.md to set up the environment.

First download our released weights for KGNv1 and/or KGNv2 and put them under the folder ./exp. Then run the demo on real-world data via:

bash experiments/demo_kgnv{1|2}.sh

NOTE: the released KGNv1 weight is trained on single-object data, while the KGNv2 weight is trained on multi-object data.
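The `{1|2}` in the demo command stands for a choice between KGNv1 and KGNv2. As a minimal sketch (not part of the released code), the helper below expands that choice into a concrete command and checks that `./exp` contains at least one file before launching. The function names `demo_command`, `exp_has_files`, and `run_demo` are hypothetical, and the check does not verify that the files are the correct weights.

```python
import subprocess
from pathlib import Path
from typing import List


def demo_command(version: int) -> List[str]:
    """Expand `experiments/demo_kgnv{1|2}.sh` for one concrete version."""
    if version not in (1, 2):
        raise ValueError("version must be 1 (KGNv1) or 2 (KGNv2)")
    return ["bash", f"experiments/demo_kgnv{version}.sh"]


def exp_has_files(exp_dir: str = "./exp") -> bool:
    """Return True if exp_dir exists and holds at least one file (any depth)."""
    root = Path(exp_dir)
    return root.is_dir() and any(p.is_file() for p in root.rglob("*"))


def run_demo(version: int, exp_dir: str = "./exp") -> None:
    """Run the demo script from the repository root after a basic check."""
    if not exp_has_files(exp_dir):
        raise FileNotFoundError(
            f"No files found under {exp_dir}; download the released weights first."
        )
    subprocess.run(demo_command(version), check=True)
```

Calling `run_demo(2)` from the repository root would then be equivalent to `bash experiments/demo_kgnv2.sh`, with an early error if the weights folder is empty.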

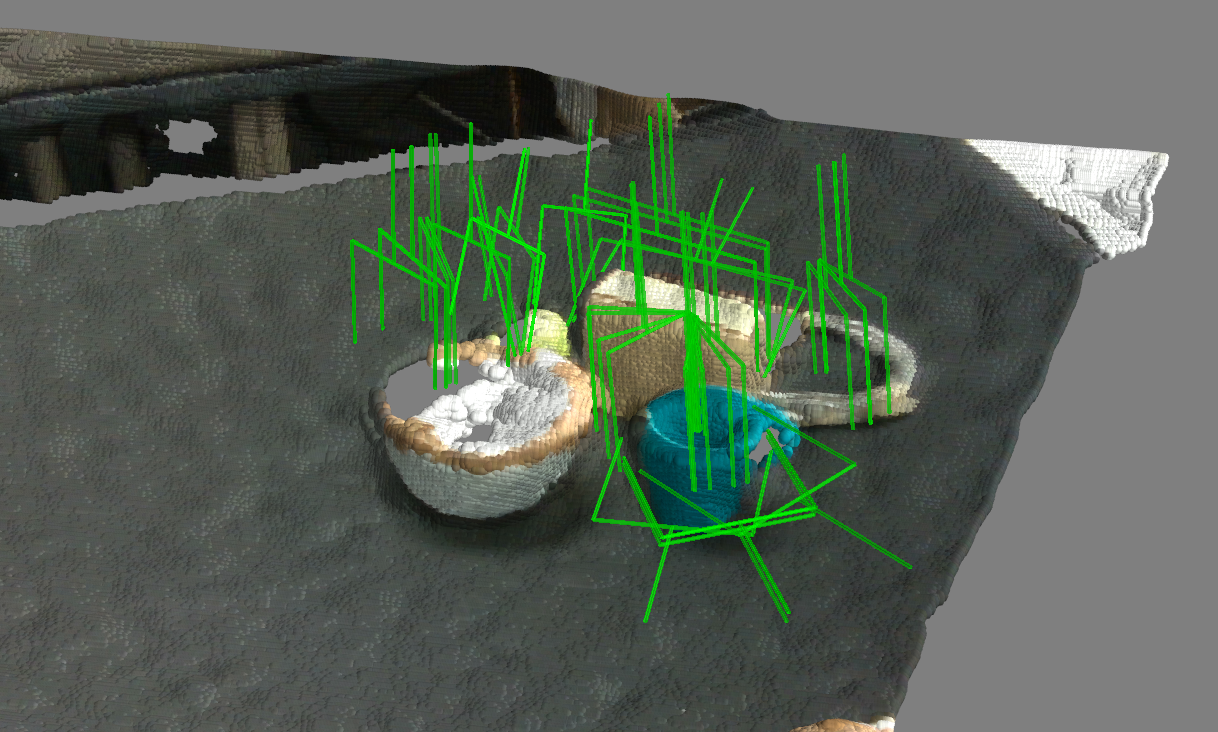

You should seem example results (from kgnv2):

The datasets used in the papers can be downloaded from the links: single-object and multi-object. Download, extract, and put them in the ./data/ folder.

Alternatively, you can generate the data yourself. For single-object data generation:

python main_data_generate.py --config_file lib/data_generation/ps_grasp_single.yaml

Multi-object data generation:

python main_data_generate.py --config_file lib/data_generation/ps_grasp_multi.yaml

First download the pretrained ctdet_coco_dla_2x model following the instruction. Put it under the ./pretrained_weights/ folder.

Then run the training code.

bash experiments/train_kgnv{1|2}.sh {single|multi}

single/multi: Train on single- or multi-object data.
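Training depends on two folders mentioned in this README: `./data/` for the dataset and `./pretrained_weights/` for the ctdet_coco_dla_2x model. The sketch below, which is an illustration rather than part of the repository, reports which of those folders are missing and builds the training command for a chosen version and dataset. The names `missing_dirs` and `train_command` are hypothetical, and the check only tests that the folders exist, not that their contents are complete.

```python
from pathlib import Path
from typing import List

# Folders this README asks you to populate before training.
REQUIRED_DIRS = ("./data", "./pretrained_weights")


def missing_dirs(root: str = ".") -> List[str]:
    """List the required folders that do not exist under the repository root."""
    return [d for d in REQUIRED_DIRS if not (Path(root) / d).is_dir()]


def train_command(version: int, data: str) -> List[str]:
    """Expand `experiments/train_kgnv{1|2}.sh {single|multi}`."""
    if version not in (1, 2):
        raise ValueError("version must be 1 (KGNv1) or 2 (KGNv2)")
    if data not in ("single", "multi"):
        raise ValueError("data must be 'single' or 'multi'")
    return ["bash", f"experiments/train_kgnv{version}.sh", data]
```

For example, `train_command(2, "multi")` corresponds to `bash experiments/train_kgnv2.sh multi`, and an empty list from `missing_dirs()` suggests both folders are in place.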

To evaluate a trained model:

bash experiments/test_kgnv{1|2}.sh {single|multi} {single|multi}

First single/multi: Evaluate the weight trained on single- or multi-object data.

Second single/multi: Evaluate on single- or multi-object data.
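The two arguments give four train/test combinations per model version. As a rough sketch (the names `eval_command` and `all_eval_commands` are hypothetical and not part of the repository), the helper below enumerates them. Each combination presumably needs a weight trained on the matching data; as noted earlier, the released KGNv1 weight covers single-object training and the released KGNv2 weight covers multi-object training.

```python
from itertools import product
from typing import List

DATA_KINDS = ("single", "multi")


def eval_command(version: int, trained_on: str, eval_on: str) -> List[str]:
    """Expand `experiments/test_kgnv{1|2}.sh {single|multi} {single|multi}`.

    trained_on: data the weight was trained on (first argument).
    eval_on: data to evaluate on (second argument).
    """
    if version not in (1, 2):
        raise ValueError("version must be 1 (KGNv1) or 2 (KGNv2)")
    if trained_on not in DATA_KINDS or eval_on not in DATA_KINDS:
        raise ValueError("both data arguments must be 'single' or 'multi'")
    return ["bash", f"experiments/test_kgnv{version}.sh", trained_on, eval_on]


def all_eval_commands(version: int) -> List[List[str]]:
    """All four (trained_on, eval_on) combinations for one model version."""
    return [eval_command(version, t, e) for t, e in product(DATA_KINDS, repeat=2)]
```

For instance, `eval_command(2, "multi", "single")` corresponds to evaluating the multi-object KGNv2 weight on single-object data.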

Some code is borrowed from CenterPose and Acronym.

Please consider citing our work if you find the code helpful:

@inproceedings{chen2022keypoint,
  title={Keypoint-GraspNet: Keypoint-based 6-DoF Grasp Generation from the Monocular RGB-D input},
  author={Chen, Yiye and Lin, Yunzhi and Xu, Ruinian and Vela, Patricio},
  booktitle={IEEE International Conference on Robotics and Automation (ICRA)},
  year={2023}
}

@article{chen2023kgnv2,
  title={KGNv2: Separating Scale and Pose Prediction for Keypoint-based 6-DoF Grasp Synthesis on RGB-D input},
  author={Chen, Yiye and Xu, Ruinian and Lin, Yunzhi and Chen, Hongyi and Vela, Patricio A},
  journal={IEEE International Conference on Intelligent Robots and Systems (IROS)},
  year={2023}
}