GKNet: grasp keypoint network for Grasp Candidates Detection

Ruinian Xu, Fu-Jen Chu and Patricio A. Vela

- Abstract

- Highlights

- Vision Benchmark Results

- Installation

- Dataset

- Usage

- Develop

- Physical Experiments

- Supplemental Material

Contemporary grasp detection approaches employ deep learning to achieve robustness to sensor and object model uncertainty. The two dominant approaches design either grasp-quality scoring or anchor-based grasp recognition networks. This paper presents a different approach to grasp detection by treating it as keypoint detection. The deep network detects each grasp candidate as a pair of keypoints, convertible to the grasp representation g = {x, y, w, θ}^T, rather than a triplet or quartet of corner points. Decreasing the detection difficulty by grouping keypoints into pairs boosts performance. The addition of a non-local module into the grasp keypoint detection architecture promotes dependencies between a keypoint and its corresponding grasp candidate keypoint. A final filtering strategy based on discrete and continuous orientation prediction removes false correspondences and further improves grasp detection performance. GKNet, the approach presented here, achieves the best balance of accuracy and speed on the Cornell and the abridged Jacquard datasets (96.9% and 98.39% at 41.67 and 23.26 fps). Follow-up experiments on a manipulator evaluate GKNet using 4 types of grasping experiments reflecting different nuisance sources: static grasping, dynamic grasping, grasping at varied camera angles, and bin picking. GKNet outperforms reference baselines in static and dynamic grasping experiments while showing robustness to grasp detection for varied camera viewpoints and bin picking experiments. The results confirm the hypothesis that grasp keypoints are an effective output representation for deep grasp networks that provide robustness to expected nuisance factors.
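The abstract notes that a keypoint pair is convertible to the grasp representation g = {x, y, w, θ}^T. A minimal sketch of one such conversion is given below. It assumes the two keypoints are the left and right end points of the grasp in image coordinates, takes the grasp center as their midpoint, the width as their distance, and the orientation as the angle of the line joining them. The names `Grasp` and `keypoints_to_grasp` are hypothetical and are not part of the GKNet code base.

```python
import math
from typing import NamedTuple, Tuple

Point = Tuple[float, float]


class Grasp(NamedTuple):
    """Grasp representation g = {x, y, w, theta}^T (hypothetical container)."""
    x: float      # grasp center, image x coordinate
    y: float      # grasp center, image y coordinate
    w: float      # grasp width (distance between the two keypoints)
    theta: float  # grasp orientation in radians


def keypoints_to_grasp(left: Point, right: Point) -> Grasp:
    """Convert a pair of grasp keypoints into (x, y, w, theta).

    Assumption: `left` and `right` are the two end points of the grasp,
    given as (x, y) pixel coordinates.
    """
    (xl, yl), (xr, yr) = left, right
    x = (xl + xr) / 2.0                    # center is the midpoint
    y = (yl + yr) / 2.0
    w = math.hypot(xr - xl, yr - yl)       # width is the Euclidean distance
    theta = math.atan2(yr - yl, xr - xl)   # angle of the left-to-right vector
    return Grasp(x, y, w, theta)


if __name__ == "__main__":
    print(keypoints_to_grasp((100.0, 120.0), (140.0, 150.0)))
```

Because only two points are needed, every keypoint pair yields exactly one grasp, whereas a triplet or quartet of corner points requires extra grouping and consistency checks.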

- Accurate: The proposed method achieves detection accuracies of 96.9% and 98.39% on the Cornell Dataset and AJD, respectively.

- Fast: The proposed method runs in real time at 41.67 FPS and 23.26 FPS on the Cornell Dataset and AJD, respectively.

Cornell Dataset (o.f.: orientation filter):

| Backbone | Acc (w/ o.f.) / % | Acc (w/o o.f.) / % | Speed / FPS |
|---|---|---|---|
| DLA | 96.9 | 96.8 | 41.67 |
| Hourglass-52 | 94.5 | 93.6 | 33.33 |
| Hourglass-104 | 95.5 | 95.3 | 21.27 |
| Resnet-18 | 96.0 | 95.7 | 66.67 |
| Resnet-50 | 96.5 | 96.4 | 52.63 |
| VGG-16 | 96.8 | 96.4 | 55.56 |
| AlexNet | 95.0 | 94.8 | 83.33 |

Abridged Jacquard Dataset (AJD) (o.f.: orientation filter):

| Backbone | Acc (w/ o.f.) / % | Acc (w/o o.f.) / % | Speed / FPS |
|---|---|---|---|
| DLA | 98.39 | 96.99 | 23.26 |
| Hourglass-52 | 97.21 | 93.81 | 15.87 |
| Hourglass-104 | 97.93 | 96.04 | 9.90 |
| Resnet-18 | 97.95 | 95.97 | 31.25 |
| Resnet-50 | 98.24 | 95.91 | 25.00 |
| VGG-16 | 98.36 | 96.13 | 21.28 |
| AlexNet | 97.37 | 94.53 | 34.48 |
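When budgeting for a robot control loop, per-frame latency is often easier to reason about than FPS. The short sketch below converts the reported speeds into milliseconds per frame. The FPS values are copied from the two benchmark tables; mapping the first table to Cornell and the second to AJD is inferred from the DLA figures quoted in the highlights, and the helper name `latency_ms` is hypothetical.

```python
# Reported speeds in FPS, copied from the benchmark tables.
CORNELL_FPS = {
    "DLA": 41.67, "Hourglass-52": 33.33, "Hourglass-104": 21.27,
    "Resnet-18": 66.67, "Resnet-50": 52.63, "VGG-16": 55.56, "AlexNet": 83.33,
}
AJD_FPS = {
    "DLA": 23.26, "Hourglass-52": 15.87, "Hourglass-104": 9.90,
    "Resnet-18": 31.25, "Resnet-50": 25.00, "VGG-16": 21.28, "AlexNet": 34.48,
}


def latency_ms(fps: float) -> float:
    """Return the average time per frame in milliseconds for a given FPS."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    for dataset, table in (("Cornell", CORNELL_FPS), ("AJD", AJD_FPS)):
        print(dataset)
        for backbone, fps in table.items():
            print(f"  {backbone:<14s} {fps:6.2f} FPS  {latency_ms(fps):6.1f} ms/frame")
```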

Please refer to INSTALL.md for installation instructions.

The two training datasets are provided here:

- Cornell: Download link. In case the download link expires in the future, you can also use the MATLAB scripts provided in GKNet_ROOT/scripts/data_aug to generate your own dataset based on the original Cornell dataset. You will need to modify the corresponding paths for loading the input images and writing the output files.

- Abridged Jacquard Dataset (AJD): Download link.

After downloading datasets, place each dataset in the corresponding folder under GKNet_ROOT/datasets/.

The Cornell dataset should be placed under GKNet_ROOT/datasets/Cornell/ and the AJD should be placed under GKNet_ROOT/datasets/Jacquard/.

Download the model ctdet_coco_dla_2x and put it under GKNet_ROOT/models/.
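Before starting a training run, it can help to confirm that the datasets and the pretrained model are where the scripts expect them. The following is a small sketch of such a check, not part of the repository. The directory names come from the instructions above and the model filename from the `--load_model` argument of the training commands; reading the repository root from a `GKNET_ROOT` environment variable and the helper name `missing_paths` are assumptions made for illustration.

```python
import os
from pathlib import Path
from typing import List


def missing_paths(root: Path) -> List[Path]:
    """Return the expected dataset and model paths that do not exist under root."""
    expected = [
        root / "datasets" / "Cornell",               # Cornell dataset folder
        root / "datasets" / "Jacquard",              # AJD folder
        root / "models" / "ctdet_coco_dla_2x.pth",   # pretrained model for --load_model
    ]
    return [path for path in expected if not path.exists()]


if __name__ == "__main__":
    # Assumption: GKNET_ROOT points at the repository root; defaults to the
    # current working directory when the variable is not set.
    repo_root = Path(os.environ.get("GKNET_ROOT", "."))
    missing = missing_paths(repo_root)
    if missing:
        print("Missing paths:")
        for path in missing:
            print(f"  {path}")
    else:
        print("All expected datasets and models were found.")
```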

For training the Cornell Dataset:

    python scripts/train.py dbmctdet_cornell \
      --exp_id dla34 \
      --batch_size 4 \
      --lr 1.25e-4 \
      --arch dla_34 \
      --dataset cornell \
      --load_model models/ctdet_coco_dla_2x.pth \
      --num_epochs 15 \
      --val_intervals 1 \
      --save_all \
      --lr_step 5,10

For training AJD:

    python scripts/train.py dbmctdet \
      --exp_id dla34 \
      --batch_size 4 \
      --lr 1.25e-4 \
      --arch dla_34 \
      --dataset jac_coco_36 \
      --load_model models/ctdet_coco_dla_2x.pth \
      --num_epochs 30 \
      --val_intervals 1 \
      --save_all

You can evaluate your own trained models or download pretrained models and put them under GKNet_ROOT/models/.

For evaluating the Cornell Dataset:

    python scripts/test.py dbmctdet_cornell \
      --exp_id dla34_test \
      --arch dla_34 \
      --dataset cornell \
      --fix_res \
      --flag_test \
      --load_model models/model_dla34_cornell.pth \
      --ae_threshold 1.0 \
      --ori_threshold 0.24 \
      --center_threshold 0.05

For evaluating AJD:

    python scripts/test.py dbmctdet \
      --exp_id dla34_test \
      --arch dla_34 \
      --dataset jac_coco_36 \
      --fix_res \
      --flag_test \
      --load_model models/model_dla34_ajd.pth \
      --ae_threshold 0.65 \
      --ori_threshold 0.1745 \
      --center_threshold 0.15

If you are interested in training GKNet on a new or customized dataset, please refer to DEVELOP.md. You can also open an issue here if you run into problems.

To run physical experiments with GKNet and ROS, please follow the instructions provided in Experiment.md.

This section collects results of experiments and discussions that are not documented in the manuscript because they lack sufficient scientific value.

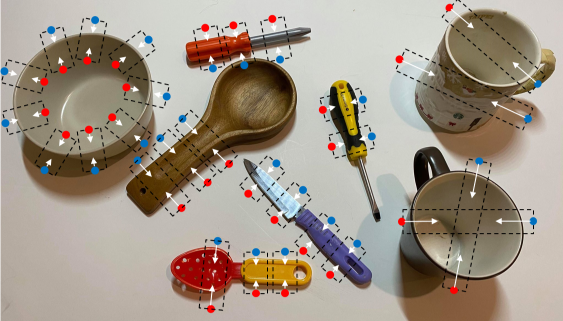

This readme file documents some examples with visualizations for the top-left, bottom-left and bottom-right (TlBlBr) grasp keypoint representation. These examples help clarify the effectiveness of a grasp keypoint representation that uses fewer keypoints.

The result is recorded in tune_hp.md.

The demo video of all physical experiments is uploaded to YouTube. Please watch it if you are interested.

Some of the source data was reported only in summarized form, without the raw data. The links below provide access to the source material:

- Trial results of bin picking experiment.

- 6-DoF summary results for clutter clearance or bin-picking tasks.

Because GGCNN did not provide results for training and testing on the Cornell Dataset, we implemented their work based on their public repository. The modified version is provided here.

GKNet is released under the MIT License (refer to the LICENSE file for details). Portions of the code are borrowed from CenterNet, dla (DLA network), and DCNv2 (deformable convolutions). Please refer to the original licenses of these projects (see Notice).

If you use GKNet in your work, please cite:

    @article{xu2021gknet,
      title={GKNet: grasp keypoint network for grasp candidates detection},
      author={Xu, Ruinian and Chu, Fu-Jen and Vela, Patricio A},
      journal={arXiv preprint arXiv:2106.08497},
      year={2021}
    }