An R package of datasets and wrapper functions for tidyverse-friendly introductory linear regression, used in "Statistical Inference via Data Science: A ModernDive into R and the Tidyverse", available at ModernDive.com.

Get the released version from CRAN:

install.packages("moderndive")

Or get the development version from GitHub:

# If you haven't installed remotes yet, do so:

# install.packages("remotes")

remotes::install_github("moderndive/moderndive")

Let’s fit a simple linear regression of teaching score (as evaluated by students) over instructor age for 463 courses taught by 94 instructors at UT Austin:

library(moderndive)

score_model <- lm(score ~ age, data = evals)

Among the many useful features of the moderndive package outlined in our essay “Why should you use the moderndive package for intro linear regression?”, we highlight three functions in particular. We also mention the geom_parallel_slopes() function as #4.

Get a tidy regression table with confidence intervals:

get_regression_table(score_model)

## # A tibble: 2 x 7

## term estimate std_error statistic p_value lower_ci upper_ci

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 intercept 4.46 0.127 35.2 0 4.21 4.71

## 2 age -0.006 0.003 -2.31 0.021 -0.011 -0.001
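To make the columns above less opaque, here is a minimal base-R sketch of where such values come from. It is not the package's implementation. It uses the built-in mtcars data so it runs without moderndive installed, and the name reg_table is purely illustrative.

```r
# Fit a simple linear regression on built-in data (an assumption for
# illustration; the README's example uses the evals dataset instead).
fit <- lm(mpg ~ wt, data = mtcars)

# Coefficient matrix: Estimate, Std. Error, t value, Pr(>|t|)
coefs <- summary(fit)$coefficients

# 95% confidence intervals for each coefficient
ci <- confint(fit, level = 0.95)

# Assemble a table with the same column names as the output above
reg_table <- data.frame(
  term      = rownames(coefs),
  estimate  = coefs[, "Estimate"],
  std_error = coefs[, "Std. Error"],
  statistic = coefs[, "t value"],
  p_value   = coefs[, "Pr(>|t|)"],
  lower_ci  = ci[, 1],
  upper_ci  = ci[, 2],
  row.names = NULL
)

print(reg_table)
```

Each row describes one model term, and the interval columns bracket the point estimate.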

Get information on each point/observation in your regression, including fitted/predicted values & residuals, organized in a single data frame with intuitive variable names:

get_regression_points(score_model)

## # A tibble: 463 x 5

## ID score age score_hat residual

## <int> <dbl> <int> <dbl> <dbl>

## 1 1 4.7 36 4.25 0.452

## 2 2 4.1 36 4.25 -0.148

## 3 3 3.9 36 4.25 -0.348

## 4 4 4.8 36 4.25 0.552

## 5 5 4.6 59 4.11 0.488

## 6 6 4.3 59 4.11 0.188

## 7 7 2.8 59 4.11 -1.31

## 8 8 4.1 51 4.16 -0.059

## 9 9 3.4 51 4.16 -0.759

## 10 10 4.5 40 4.22 0.276

## # … with 453 more rows
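The relationship between the columns above can be sketched in base R: the fitted value is the model's prediction for each row, and the residual is the observed outcome minus that prediction. This is an illustrative reconstruction on the built-in mtcars data, not the package's code, and reg_points is a hypothetical name.

```r
# Fit on built-in data (assumption: mtcars stands in for evals)
fit <- lm(mpg ~ wt, data = mtcars)

# One row per observation: outcome, predictor, fitted value, residual
reg_points <- data.frame(
  ID       = seq_len(nrow(mtcars)),
  mpg      = mtcars$mpg,
  wt       = mtcars$wt,
  mpg_hat  = unname(fitted(fit)),
  residual = unname(residuals(fit))
)

head(reg_points)
```

In every row, residual equals mpg minus mpg_hat, which mirrors score, score_hat, and residual in the output above.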

Get all the scalar summaries of a regression fit included in

summary(score_model) along with the mean-squared error and root

mean-squared error:

get_regression_summaries(score_model)

## # A tibble: 1 x 8

## r_squared adj_r_squared mse rmse sigma statistic p_value df

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.011 0.009 0.292 0.540 0.541 5.34 0.021 2
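The mse, rmse, and sigma columns are closely related, which the following base-R sketch tries to make explicit. It assumes that mse is the mean of the squared residuals (dividing by n), while sigma divides by the residual degrees of freedom; that reading is consistent with rmse being slightly smaller than sigma in the output above, but it is an assumption rather than a statement about the package internals. The mtcars data stands in for evals.

```r
# Fit on built-in data (assumption for illustration)
fit <- lm(mpg ~ wt, data = mtcars)
res <- residuals(fit)

# Mean squared error and its square root, dividing by n
mse  <- mean(res^2)
rmse <- sqrt(mse)

# Residual standard error, dividing by n minus the number of coefficients
sigma <- sqrt(sum(res^2) / df.residual(fit))

c(mse = mse, rmse = rmse, sigma = sigma)
```

Because sigma uses a smaller denominator, it is always a little larger than rmse for the same fit.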

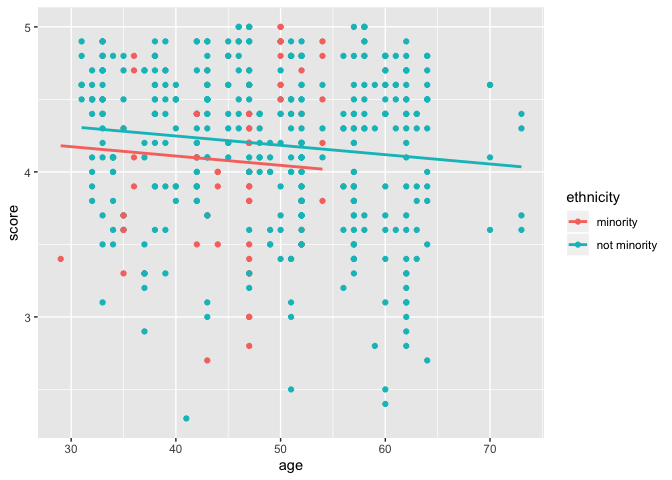

Plot parallel slopes regression models involving one categorical and one

numerical explanatory/predictor variable (something you cannot do using

ggplot2::geom_smooth()).

library(ggplot2)

ggplot(evals, aes(x = age, y = score, color = ethnicity)) +
  geom_point() +
  geom_parallel_slopes(se = FALSE)

Want to output cleanly formatted tables in an R Markdown document? Just add print = TRUE to any of the three get_regression_*() functions.

get_regression_table(score_model, print = TRUE)

| term | estimate | std_error | statistic | p_value | lower_ci | upper_ci |

|---|---|---|---|---|---|---|

| intercept | 4.462 | 0.127 | 35.195 | 0.000 | 4.213 | 4.711 |

| age | -0.006 | 0.003 | -2.311 | 0.021 | -0.011 | -0.001 |

Want to apply your fitted model to new data to make predictions? No problem! Include a newdata data frame argument to get_regression_points().

For example, the Kaggle.com practice competition House Prices: Advanced Regression Techniques requires you to fit/train a model to the provided train.csv training set to make predictions of house prices in the provided test.csv test set. The following code performs these steps and outputs the predictions in submission.csv:

library(tidyverse)

library(moderndive)

# Load in training and test set

train <- read_csv("https://github.com/moderndive/moderndive/raw/master/vignettes/train.csv")

test <- read_csv("https://github.com/moderndive/moderndive/raw/master/vignettes/test.csv")

# Fit model

house_model <- lm(SalePrice ~ YrSold, data = train)

# Make and submit predictions

submission <- get_regression_points(house_model, newdata = test, ID = "Id") %>%
  select(Id, SalePrice = SalePrice_hat)

write_csv(submission, "submission.csv")

The resulting submission.csv is formatted so that it can be submitted on Kaggle, resulting in a “root mean squared logarithmic error” leaderboard score of 0.42918.
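For readers unfamiliar with the metric, a root mean squared logarithmic error can be sketched as below. The helper rmsle() is hypothetical and uses log1p(), a common convention; the exact formula Kaggle applies for this competition may differ in detail, so treat this as an illustration of the idea rather than a reproduction of the leaderboard score.

```r
# Hypothetical helper: root mean squared error between log-transformed
# predictions and log-transformed actual values.
rmsle <- function(predicted, actual) {
  sqrt(mean((log1p(predicted) - log1p(actual))^2))
}

# Made-up prices, purely for illustration
predicted_prices <- c(200000, 150000, 310000)
actual_prices    <- c(180000, 150000, 330000)

rmsle(predicted_prices, actual_prices)
```

Working on the log scale means that errors are judged in relative terms, so a given dollar error matters more for a cheap house than for an expensive one.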

The three get_regression_*() functions are wrappers of functions from the broom package for converting statistical analysis objects into tidy tibbles, along with a few added tweaks:

- get_regression_table() is a wrapper for broom::tidy()
- get_regression_points() is a wrapper for broom::augment()
- get_regression_summaries() is a wrapper for broom::glance()

Why did we create these wrappers?

- The broom package function names tidy(), augment(), and glance() don’t mean anything to intro stats students, whereas the moderndive package function names get_regression_table(), get_regression_points(), and get_regression_summaries() are more intuitive.
- The default column/variable names in the outputs of the above 3 functions are a little daunting for intro stats students to interpret. We cut out some of them and renamed many of them with more intuitive names. For example, compare the outputs of the get_regression_points() wrapper function and the parent broom::augment() function.

get_regression_points(score_model)

## # A tibble: 463 x 5

## ID score age score_hat residual

## <int> <dbl> <int> <dbl> <dbl>

## 1 1 4.7 36 4.25 0.452

## 2 2 4.1 36 4.25 -0.148

## 3 3 3.9 36 4.25 -0.348

## 4 4 4.8 36 4.25 0.552

## 5 5 4.6 59 4.11 0.488

## 6 6 4.3 59 4.11 0.188

## 7 7 2.8 59 4.11 -1.31

## 8 8 4.1 51 4.16 -0.059

## 9 9 3.4 51 4.16 -0.759

## 10 10 4.5 40 4.22 0.276

## # … with 453 more rows

library(broom)

augment(score_model)

## # A tibble: 463 x 9

## score age .fitted .se.fit .resid .hat .sigma .cooksd .std.resid

## <dbl> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 4.7 36 4.25 0.0405 0.452 0.00560 0.542 0.00197 0.837

## 2 4.1 36 4.25 0.0405 -0.148 0.00560 0.542 0.000212 -0.274

## 3 3.9 36 4.25 0.0405 -0.348 0.00560 0.542 0.00117 -0.645

## 4 4.8 36 4.25 0.0405 0.552 0.00560 0.541 0.00294 1.02

## 5 4.6 59 4.11 0.0371 0.488 0.00471 0.541 0.00193 0.904

## 6 4.3 59 4.11 0.0371 0.188 0.00471 0.542 0.000288 0.349

## 7 2.8 59 4.11 0.0371 -1.31 0.00471 0.538 0.0139 -2.43

## 8 4.1 51 4.16 0.0261 -0.0591 0.00232 0.542 0.0000139 -0.109

## 9 3.4 51 4.16 0.0261 -0.759 0.00232 0.541 0.00229 -1.40

## 10 4.5 40 4.22 0.0331 0.276 0.00374 0.542 0.000488 0.510

## # … with 453 more rows

Please note that this project is released with a Contributor Code of Conduct. By participating in this project you agree to abide by its terms.