This repository contains the PyTorch implementation of the following two papers:

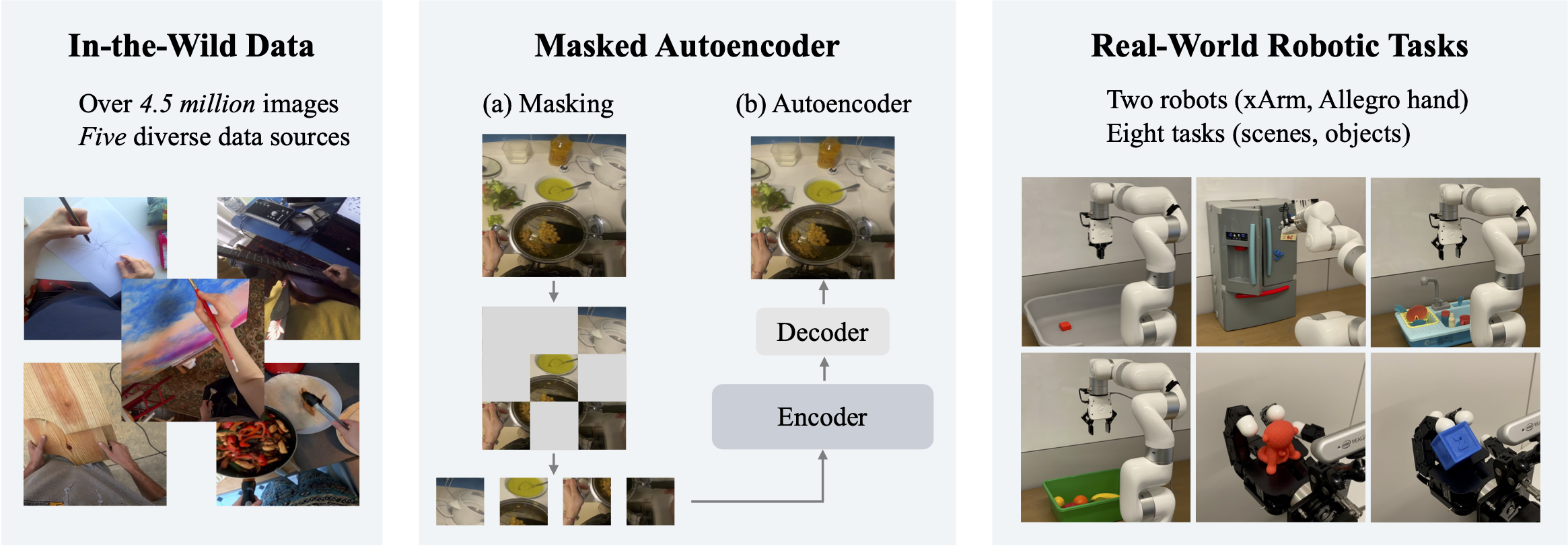

- Masked Visual Pre-training for Motor Control

- Real-World Robot Learning with Masked Visual Pre-training

It includes the pre-trained vision models and PPO/BC training code used in the papers.

We provide our pre-trained vision encoders. The models are in the same format as MAE and timm:

| backbone | params | images | objective | md5 | download |
|---|---|---|---|---|---|
| ViT-S | 22M | 700K | MAE | fe6e30 | model |
| ViT-B | 86M | 4.5M | MAE | 526093 | model |
| ViT-L | 307M | 4.5M | MAE | 5352b0 | model |
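The md5 column can be used to check that a downloaded checkpoint is intact. Below is a minimal sketch using only the Python standard library. It assumes that each md5 entry is the first six hex digits of the checkpoint file's MD5 hash (an interpretation of the table, not something stated above). The helper names `md5_of_file` and `matches_prefix` are hypothetical.

```python
import hashlib
from pathlib import Path


def md5_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    # Stream the file in 1 MiB chunks so large checkpoints need not fit in memory.
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_prefix(path: Path, expected_prefix: str) -> bool:
    # Assumption: the table lists a short prefix of the full hex digest.
    return md5_of_file(path).startswith(expected_prefix.lower())
```

For example, `matches_prefix(Path("path/to/checkpoint.pth"), "526093")` would check a downloaded ViT-B file, where the path is a placeholder for wherever the checkpoint was saved.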

You can use our pre-trained models directly in your code (e.g., to extract image features) or use them with our training code. We provide instructions for both use cases below.

Install PyTorch and the mvp package:

```shell
pip install git+https://github.com/ir413/mvp
```

Import pre-trained models:

```python
import mvp

model = mvp.load("vitb-mae-egosoup")
model.freeze()
```

Please see TASKS.md for task descriptions and GETTING_STARTED.md for installation and training instructions.
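For the feature-extraction use case, the sketch below shows in plain PyTorch what running a frozen encoder typically involves: disabling gradients on every parameter, switching to eval mode, and running inference under `torch.no_grad()`. It is an assumption that `model.freeze()` does exactly this. The `freeze` and `extract_features` helpers and the tiny stand-in encoder are hypothetical placeholders, not part of the mvp API.

```python
import torch
from torch import nn


def freeze(module: nn.Module) -> nn.Module:
    # Hypothetical helper: stop gradients for every parameter and switch to
    # eval mode so layers such as dropout behave deterministically.
    for param in module.parameters():
        param.requires_grad_(False)
    module.eval()
    return module


@torch.no_grad()
def extract_features(encoder: nn.Module, images: torch.Tensor) -> torch.Tensor:
    # Run the frozen encoder without building an autograd graph.
    return encoder(images)


# Stand-in encoder for illustration only; in practice this would be the
# model returned by mvp.load(...).
encoder = nn.Sequential(nn.Flatten(), nn.Linear(3 * 8 * 8, 16))
freeze(encoder)

images = torch.randn(2, 3, 8, 8)  # batch of 2 RGB images, 8x8 pixels
features = extract_features(encoder, images)
print(features.shape)  # torch.Size([2, 16])
```

With a real pre-trained ViT, the inputs would need to be resized to the resolution that model expects rather than the toy 8x8 used here.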

If you find the code or pre-trained models useful in your research, please consider citing an appropriate subset of the following papers:

```bibtex
@article{Xiao2022,
  title = {Masked Visual Pre-training for Motor Control},
  author = {Tete Xiao and Ilija Radosavovic and Trevor Darrell and Jitendra Malik},
  journal = {arXiv:2203.06173},
  year = {2022}
}

@article{Radosavovic2022,
  title = {Real-World Robot Learning with Masked Visual Pre-training},
  author = {Ilija Radosavovic and Tete Xiao and Stephen James and Pieter Abbeel and Jitendra Malik and Trevor Darrell},
  year = {2022},
  journal = {CoRL}
}
```

We thank the NVIDIA IsaacGym and PhysX teams for making the simulator and preview code examples available.