
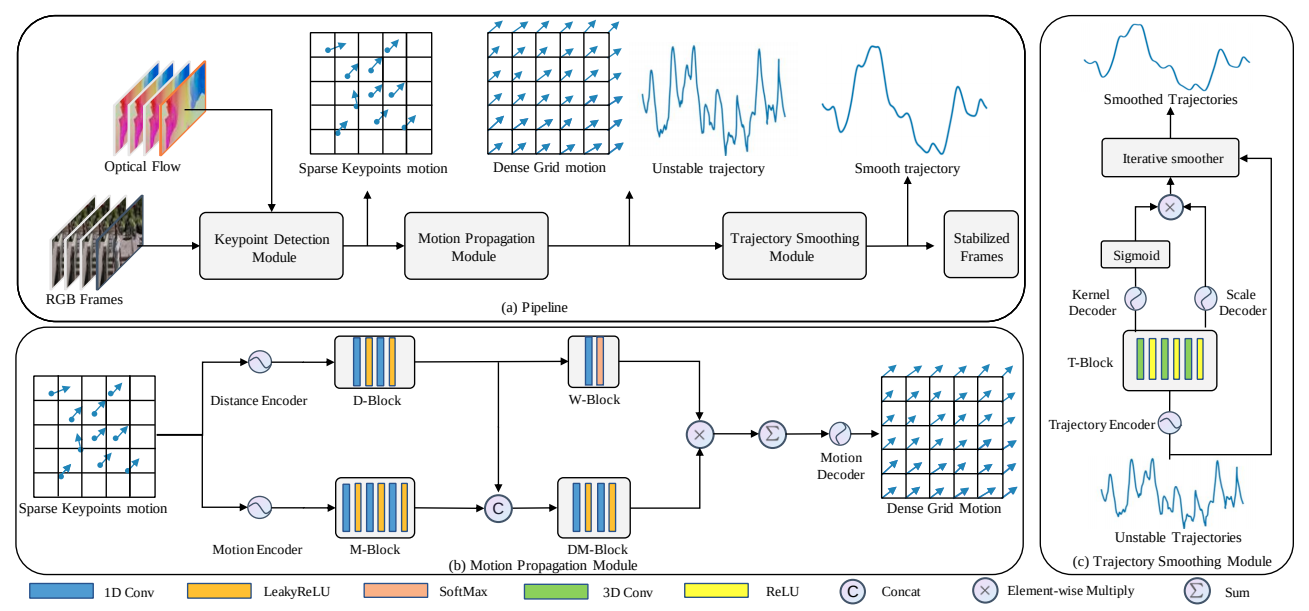

This repository contains the code, models, and test results for the paper DUT: Learning Video Stabilization by Simply Watching Unstable Videos. The model consists of a keypoint detection module for robust keypoint detection, a motion propagation module for grid-based trajectory estimation, and a trajectory smoothing module for dynamic trajectory smoothing. DUT is fully unsupervised and requires only unstable videos for training.
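As a rough illustration of how grid-based trajectories feed the smoothing step, the sketch below assumes a hypothetical data layout: the per-frame motion of an H×W grid of vertices stored as a NumPy array of shape (T, H, W, 2). Trajectories are the cumulative sum of that motion over time, and each vertex is then warped by the difference between its smoothed and original positions. The fixed box filter is only a placeholder for DUT's learned trajectory smoothing module, and all function names are hypothetical.

```python
import numpy as np


def grid_trajectories(grid_motion: np.ndarray) -> np.ndarray:
    """Accumulate per-frame vertex motion (T, H, W, 2) into trajectories.

    grid_motion[t] is assumed to hold each vertex's (dx, dy) displacement
    from frame t-1 to frame t (hypothetical layout).
    """
    return np.cumsum(grid_motion, axis=0)


def box_smooth(traj: np.ndarray, radius: int) -> np.ndarray:
    """Placeholder smoother: centered moving average along time, edge-padded."""
    pad = [(radius, radius)] + [(0, 0)] * (traj.ndim - 1)
    padded = np.pad(traj, pad, mode="edge")
    k = 2 * radius + 1
    # Sliding-window mean via a cumulative sum with a leading zero row.
    csum = np.cumsum(padded, axis=0)
    csum = np.concatenate([np.zeros_like(csum[:1]), csum], axis=0)
    return (csum[k:] - csum[:-k]) / k


def correction_offsets(grid_motion: np.ndarray, radius: int = 15) -> np.ndarray:
    """Per-vertex offsets that move each original trajectory onto its smoothed path."""
    traj = grid_trajectories(grid_motion)
    return box_smooth(traj, radius) - traj
```

Edge padding keeps the output length equal to T; within the first and last `radius` frames this placeholder filter biases the path toward the endpoints, which a real smoother has to handle more carefully.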

We have released the inference code and a pretrained model; see the inference code section for details. Inference code for DIFRINT and StabNet is also included in this repo.

We test DUT on the NUS dataset and provide an overview of the results below (odd rows show the unstable inputs and even rows show the stabilized videos).

For the motion propagation module, we propose a multi-homography estimation strategy, instead of directly estimating a single homography with RANSAC, to handle scenes that contain multiple planes. The outliers detected by RANSAC and the multiple planes identified by the multi-homography strategy are visualized below.
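The idea of fitting several homographies instead of one can be illustrated with sequential RANSAC: fit a homography with RANSAC, assign its inliers to one plane, remove them, and repeat on the remaining matches. This is a generic, swapped-in technique and not necessarily the exact strategy used in DUT. The sketch uses plain NumPy (an unnormalized DLT without Hartley normalization, for brevity), and the function names, thresholds, and iteration counts are illustrative assumptions. Points left with label -1 play the role of outliers.

```python
import numpy as np


def fit_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Direct linear transform (DLT) from >= 4 point pairs of shape (N, 2)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The homography is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def project(h: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply homography h to (N, 2) points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


def ransac_homography(src, dst, thresh, iters, rng):
    """Single-homography RANSAC; returns (H or None, boolean inlier mask)."""
    best = np.zeros(len(src), dtype=bool)
    with np.errstate(divide="ignore", invalid="ignore"):  # degenerate samples yield nan
        for _ in range(iters):
            idx = rng.choice(len(src), 4, replace=False)
            h = fit_homography(src[idx], dst[idx])
            err = np.linalg.norm(project(h, src) - dst, axis=1)
            inliers = err < thresh
            if inliers.sum() > best.sum():
                best = inliers
    if best.sum() < 4:
        return None, best
    return fit_homography(src[best], dst[best]), best  # refit on all inliers


def multi_homography(src, dst, thresh=2.0, iters=500, min_inliers=8, max_planes=4, seed=0):
    """Sequential RANSAC: returns a list of homographies and a per-point plane label."""
    rng = np.random.default_rng(seed)
    labels = np.full(len(src), -1)  # -1 = not explained by any plane (outlier)
    remaining = np.arange(len(src))
    homographies = []
    while len(remaining) >= min_inliers and len(homographies) < max_planes:
        h, inl = ransac_homography(src[remaining], dst[remaining], thresh, iters, rng)
        if h is None or inl.sum() < min_inliers:
            break
        labels[remaining[inl]] = len(homographies)
        homographies.append(h)
        remaining = remaining[~inl]
    return homographies, labels
```

A single RANSAC homography would keep only the dominant plane and label every match on the second plane as an outlier; the sequential loop recovers that plane instead.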

The trajectory smoothing module predicts dynamic kernels from the trajectories themselves and uses them for iterative smoothing. The smoothing process is visualized below.
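To make the idea of dynamic kernels concrete: in DUT the per-frame kernels are predicted by a network, but a minimal sketch can substitute a hand-crafted, bilateral-style kernel (a temporal Gaussian multiplied by a similarity term on trajectory positions), so that each frame's kernel still depends on the trajectory itself and is re-estimated at every iteration. The kernel form, parameter names, and defaults below are illustrative assumptions, not DUT's learned kernels.

```python
import numpy as np


def dynamic_kernels(traj, radius=5, sigma_t=3.0, sigma_p=10.0):
    """Per-frame normalized weights over the window [t - radius, t + radius].

    traj has shape (T, D). Hand-crafted stand-in for learned kernels: a
    temporal Gaussian times a position-similarity term, so the weights
    depend on the trajectory itself.
    """
    T = len(traj)
    offsets = np.arange(-radius, radius + 1)
    # Clamp window indices at the sequence ends (edge replication).
    idx = np.clip(np.arange(T)[:, None] + offsets[None, :], 0, T - 1)  # (T, K)
    dist2 = np.sum((traj[idx] - traj[:, None, :]) ** 2, axis=-1)  # (T, K)
    logits = -(offsets[None, :] ** 2) / (2 * sigma_t**2) - dist2 / (2 * sigma_p**2)
    w = np.exp(logits)  # center weight is exp(0) = 1, so each row sum is >= 1
    return w / w.sum(axis=1, keepdims=True), idx


def smooth_trajectory(traj, iterations=10, **kernel_args):
    """Iteratively re-predict kernels from the current trajectory and apply them."""
    out = np.asarray(traj, dtype=float).copy()
    for _ in range(iterations):
        w, idx = dynamic_kernels(out, **kernel_args)
        out = np.einsum("tk,tkd->td", w, out[idx])  # weighted average per frame
    return out
```

Because the position term shrinks the weights of neighbors that lie far away in position, frames with large motion receive narrower kernels and are smoothed less than frames with small jitter.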

Requirements:

- Python 3.6.5+

- PyTorch (version 1.4.0)

- Torchvision (version 0.5.0)

Installation:

- Clone this repository

    git clone https://github.com/Annbless/DUTCode.git

- Go into the repository

    cd DUTCode

- Create a conda environment and activate it

    conda create -n DUTCode python=3.6.5

    conda activate DUTCode

- Install the dependencies

    conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=10.1 -c pytorch

    pip install -r requirements.txt

Our code has been tested with Python 3.6.5, PyTorch 1.4.0, Torchvision 0.5.0, and CUDA 10.1 on Ubuntu 18.04.
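After installing, a quick sanity check can compare the installed versions against the tested configuration listed above. The helper below is a hypothetical convenience script, not part of the repository; it only reports differences (the requirements allow Python 3.6.5 or newer) and whether PyTorch can see a CUDA device.

```python
import platform

# Versions this README lists as tested; other versions may also work.
TESTED = {"python": "3.6.5", "torch": "1.4.0", "torchvision": "0.5.0"}


def base_version(v: str) -> str:
    """Strip local build tags such as '+cu101' from a version string."""
    return v.split("+")[0]


def version_mismatches(found: dict, expected: dict = TESTED) -> dict:
    """Return {package: (found, expected)} for packages that differ or are missing."""
    out = {}
    for name, want in expected.items():
        have = found.get(name)
        if have is None or base_version(have) != want:
            out[name] = (have, want)
    return out


def installed_versions() -> dict:
    """Collect the Python, torch, and torchvision versions that are importable."""
    found = {"python": platform.python_version()}
    try:
        import torch
        found["torch"] = torch.__version__
        found["cuda_available"] = str(torch.cuda.is_available())
    except ImportError:
        pass
    try:
        import torchvision
        found["torchvision"] = torchvision.__version__
    except ImportError:
        pass
    return found


if __name__ == "__main__":
    found = installed_versions()
    print(found)
    for name, (have, want) in version_mismatches(found).items():
        print(f"{name}: found {have}, tested with {want}")
```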

The procedure for testing our pretrained models on sample videos is as follows:

- Download the pretrained models and unzip them into the ckpt folder.

- Put frames clipped from unstable videos in the images folder (one example is already provided in the folder).

- Run the stabilization script:

    chmod +x scripts/*

    ./scripts/deploy_samples.sh

The results of DUT, DIFRINT, and StabNet can be found in the results folder.
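For the second step (putting clipped frames in the images folder), frames can be extracted from a video with OpenCV. The helper below is a hypothetical sketch: the zero-padded file naming and the output layout are assumptions, so compare them with the example already provided in the images folder and with the repository's data loader before relying on them.

```python
import os
from typing import Optional


def frame_filename(index: int, ext: str = ".png", digits: int = 5) -> str:
    """Zero-padded name, so lexicographic order equals temporal order."""
    return f"{index:0{digits}d}{ext}"


def extract_frames(video_path: str, out_dir: str, max_frames: Optional[int] = None) -> int:
    """Decode video_path and write its frames to out_dir; returns the frame count."""
    import cv2  # imported lazily so frame_filename works without OpenCV installed

    os.makedirs(out_dir, exist_ok=True)
    cap = cv2.VideoCapture(video_path)
    if not cap.isOpened():
        raise IOError(f"cannot open video: {video_path}")
    count = 0
    try:
        while max_frames is None or count < max_frames:
            ok, frame = cap.read()
            if not ok:  # end of stream or decode failure
                break
            cv2.imwrite(os.path.join(out_dir, frame_filename(count)), frame)
            count += 1
    finally:
        cap.release()
    return count
```

For example, `extract_frames("unstable.mp4", "images/unstable")` (hypothetical paths) writes `00000.png`, `00001.png`, and so on. Zero padding matters because a plain lexicographic sort would place `10.png` before `2.png`.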

- release inference code for the DUT model;

- include inference code for the DIFRINT model, thanks to jinsc37;

- include a PyTorch version of the StabNet model, thanks to cxjyxxme;

- include traditional stabilizers in Python;

- release metrics calculation code;

- release training code for the DUT model.

This project is for research purposes only; please contact us for a commercial-use licence. For any other questions, please contact yuxu7116@uni.sydney.edu.au.