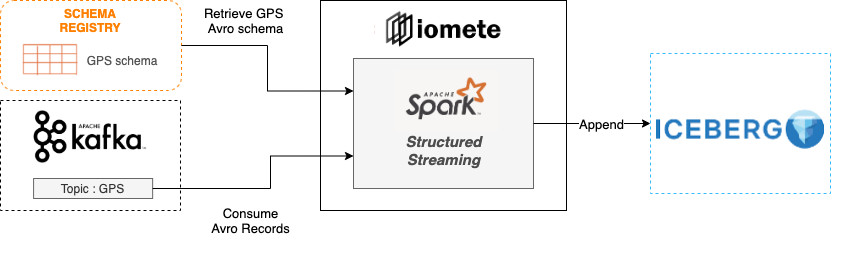

This is a collection of data movement capabilities. This streaming job copies data from Kafka to Iceberg.

Currently, two deserialization formats are supported:

- JSON

- AVRO

For JSON, a user-defined reference JSON schema can be set in the Spark configuration, and the job deserializes the binary data according to it. Otherwise, it takes the schema of the first row and assumes that the remaining rows are compatible.
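The fallback behaviour (take the schema from the first row, assume the rest match) can be illustrated with a minimal standard-library sketch. This is not the job's own code, which runs on Spark; the helper names `infer_schema` and `is_compatible` and the sample field names are hypothetical.

```python
import json


def infer_schema(first_row: bytes) -> dict:
    """Map each top-level field of the first record to its Python type name."""
    record = json.loads(first_row)
    return {key: type(value).__name__ for key, value in record.items()}


def is_compatible(row: bytes, schema: dict) -> bool:
    """Check that a later row has the same fields and types as the first row."""
    record = json.loads(row)
    return {key: type(value).__name__ for key, value in record.items()} == schema


# Hypothetical Kafka message values (binary JSON payloads).
rows = [
    b'{"user": "alice", "cpu_seconds": 12}',
    b'{"user": "bob", "cpu_seconds": 7}',
    b'{"user": "carol", "cpu_seconds": "n/a"}',
]

schema = infer_schema(rows[0])
compatibility = [is_compatible(row, schema) for row in rows]
print(schema)         # {'user': 'str', 'cpu_seconds': 'int'}
print(compatibility)  # [True, True, False]
```

The third row shows why a user-defined schema is the safer choice when the topic may contain records of differing shapes: a schema taken from the first row only holds as long as every later row matches it.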

For Avro, the job converts binary data according to the schema defined by the user, or retrieves the schema from the schema registry.
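When the schema comes from a registry, the message itself usually carries a reference to it. The sketch below assumes the registry is Confluent-compatible, which this document does not state: in that wire format each message starts with a zero magic byte followed by a 4-byte big-endian schema ID, and the Avro payload follows. The function name `split_confluent_header` is hypothetical.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0  # first byte of a Confluent-framed message


def split_confluent_header(message: bytes) -> Tuple[int, bytes]:
    """Return (schema_id, avro_payload) for a Confluent-framed message."""
    if len(message) < 5:
        raise ValueError("message is shorter than the 5-byte header")
    # ">bI": big-endian, 1-byte magic, 4-byte unsigned schema ID.
    magic, schema_id = struct.unpack(">bI", message[:5])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte: {magic}")
    return schema_id, message[5:]


# Build a sample framed message: schema ID 42 plus two payload bytes.
framed = struct.pack(">bI", MAGIC_BYTE, 42) + b"\x02\x0a"
schema_id, payload = split_confluent_header(framed)
print(schema_id, payload)  # 42 b'\x02\n'
```

The schema ID would then be used to look up the writer schema at `schema_registry_url` before decoding the payload.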

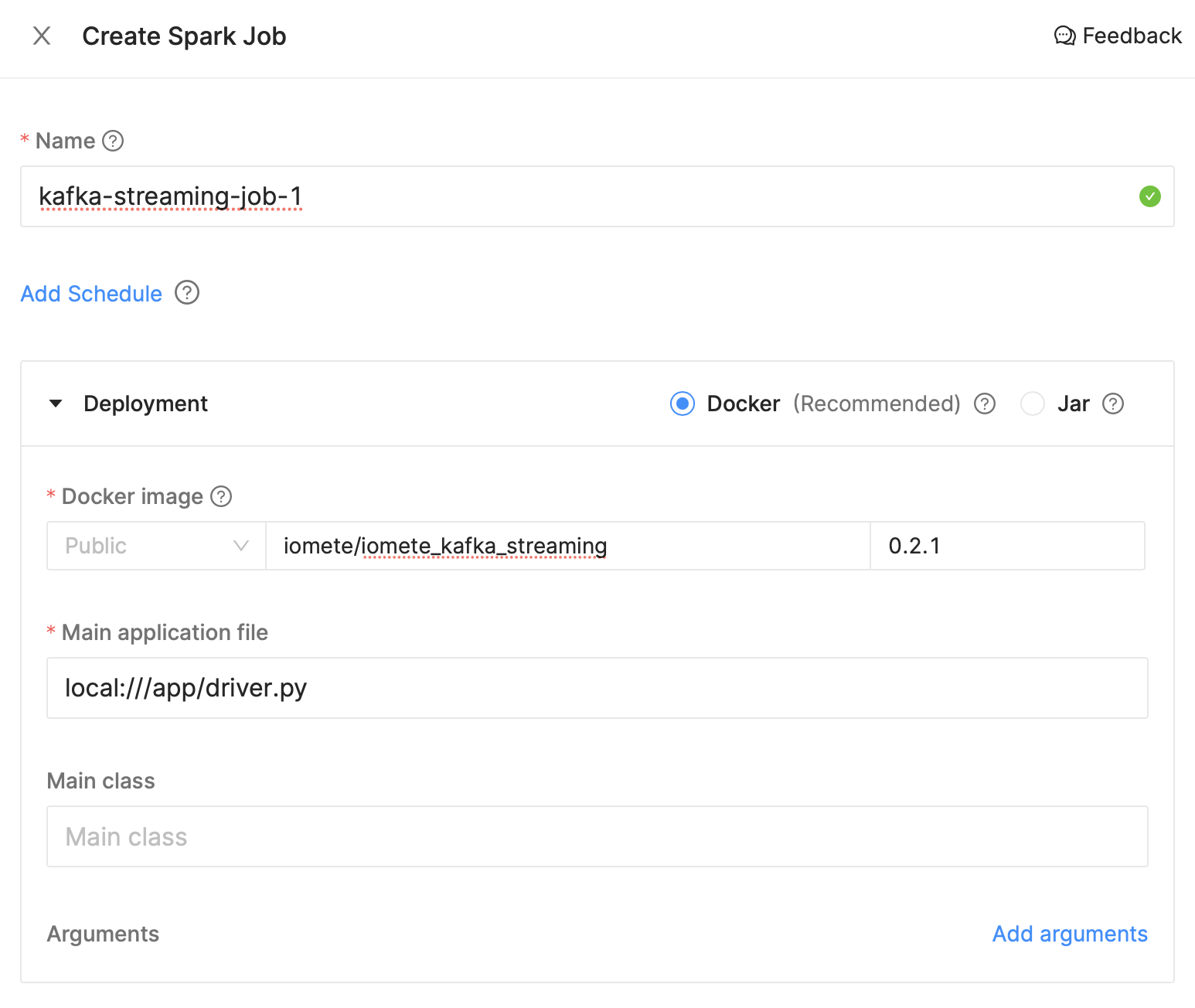

- Go to `Spark Jobs`.
- Click on `Create New`.

Specify the following parameters (these are examples; you can change them based on your preference):

- Name:

kafka-streaming-job - Docker Image:

iomete/iomete_kafka_streaming_job:0.2.1 - Main application file:

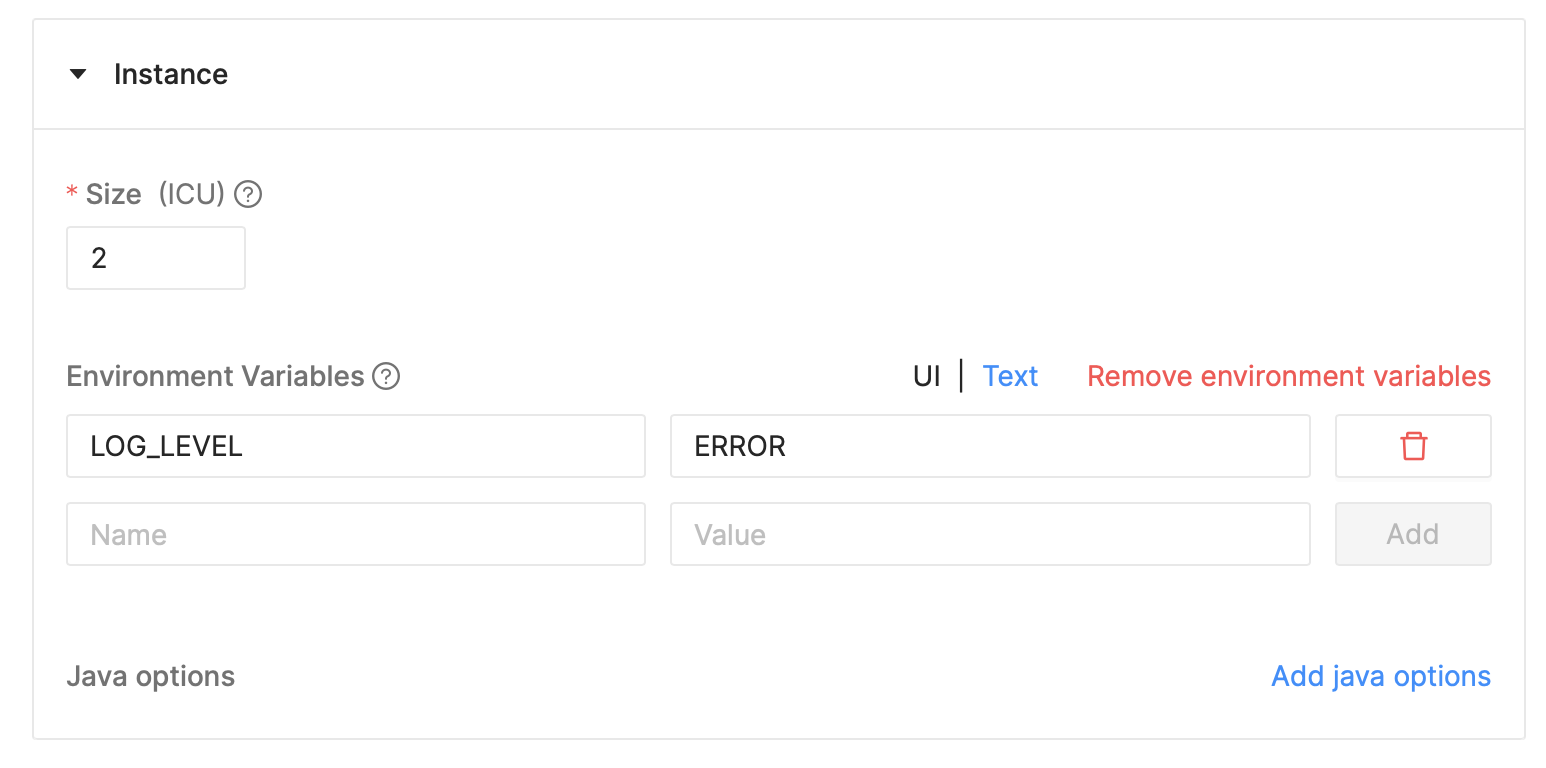

local:///app/driver.py - Environment Variables:

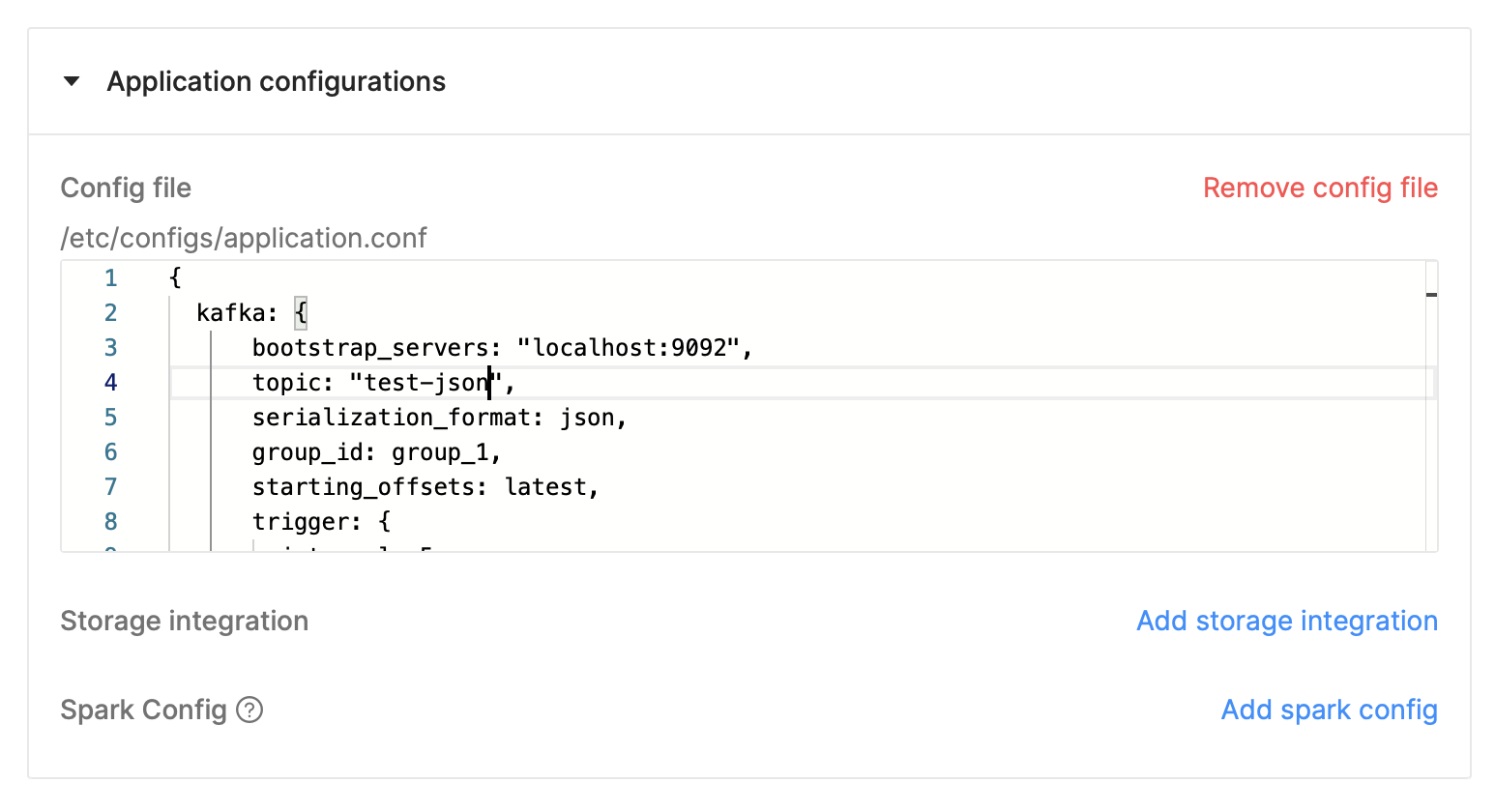

LOG_LEVEL:INFOorERROR - Config file:

```
{
    kafka: {
        bootstrap_servers: "localhost:9092",
        topic: "usage.spark.0",
        serialization_format: json,
        group_id: group_1,
        starting_offsets: latest,
        trigger: {
            interval: 5
            unit: seconds # minutes
        },
        schema_registry_url: "http://127.0.0.1:8081"
    },
    database: {
        schema: default,
        table: spark_usage_20
    }
}
```

| Property | Description |
|---|---|
| `kafka` | Required properties to connect and configure. |
| `database` | Destination database properties. |
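To make the `kafka` properties more concrete, here is a minimal sketch of how they could map onto Spark Structured Streaming settings. The option names (`kafka.bootstrap.servers`, `subscribe`, `startingOffsets`, `kafka.group.id`) and the `processingTime` trigger string are standard Spark; whether the job maps its config in exactly this way is an assumption, and the helper names are hypothetical.

```python
def kafka_reader_options(kafka_cfg: dict) -> dict:
    """Translate the `kafka` config section into Spark Kafka source options."""
    return {
        "kafka.bootstrap.servers": kafka_cfg["bootstrap_servers"],
        "subscribe": kafka_cfg["topic"],
        "startingOffsets": kafka_cfg["starting_offsets"],
        "kafka.group.id": kafka_cfg["group_id"],
    }


def processing_time(trigger_cfg: dict) -> str:
    """Build a trigger string such as '5 seconds' from interval and unit."""
    return f'{trigger_cfg["interval"]} {trigger_cfg["unit"]}'


# The example config from this document, written as a Python dict.
kafka_cfg = {
    "bootstrap_servers": "localhost:9092",
    "topic": "usage.spark.0",
    "group_id": "group_1",
    "starting_offsets": "latest",
    "trigger": {"interval": 5, "unit": "seconds"},
}

options = kafka_reader_options(kafka_cfg)
trigger = processing_time(kafka_cfg["trigger"])
print(options["subscribe"], trigger)  # usage.spark.0 5 seconds

# With a SparkSession available, these values would typically be used as:
#   df = spark.readStream.format("kafka").options(**options).load()
#   df.writeStream.trigger(processingTime=trigger)
```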

Create Spark Job - Instance

You can use Environment Variables to store sensitive data such as passwords and secrets. You can then reference these variables in your config file using the `${ENV_NAME}` syntax.
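The effect of the `${ENV_NAME}` syntax can be sketched with Python's `string.Template`, which happens to use the same placeholder form. This only illustrates the substitution; the job's own config loader may resolve variables differently. The function `render_config` and the variable name `SCHEMA_REGISTRY_URL` are hypothetical.

```python
import os
from string import Template


def render_config(text: str, env=None) -> str:
    """Replace ${NAME} placeholders with values from the environment.

    Raises KeyError if a referenced variable is not set.
    """
    env = os.environ if env is None else env
    return Template(text).substitute(env)


config_text = "schema_registry_url: ${SCHEMA_REGISTRY_URL}"

# An explicit mapping is passed here so the example does not depend on the
# real process environment.
rendered = render_config(config_text, {"SCHEMA_REGISTRY_URL": "http://127.0.0.1:8081"})
print(rendered)  # schema_registry_url: http://127.0.0.1:8081
```

Failing with an error on a missing variable, rather than silently leaving the placeholder in place, makes a misconfigured job stop at startup instead of at the first connection attempt.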

Create Spark Job - Application Environment

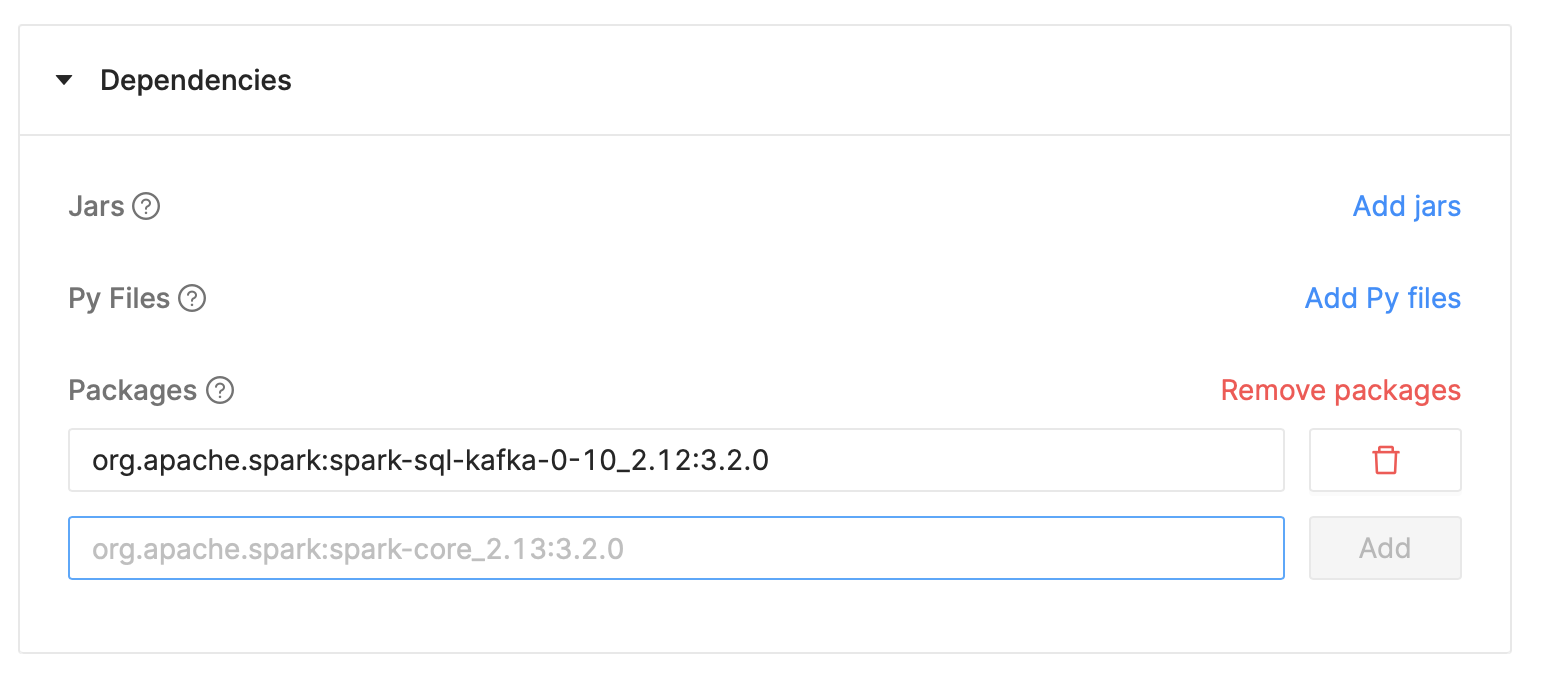

Create Spark Job - Application dependencies

Prepare the dev environment

```shell
virtualenv .env #or python3 -m venv .env
source .env/bin/activate

pip install -e ."[dev]"
```

Run test

```shell
pytest
```