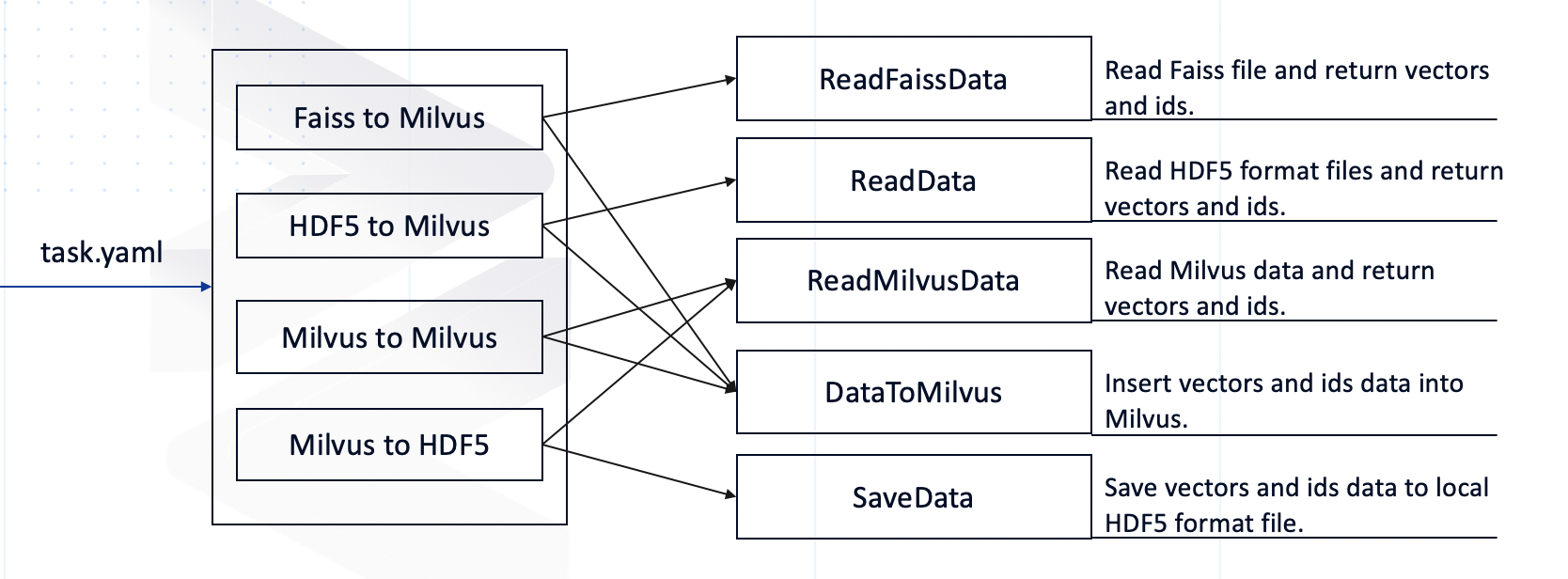

MilvusDM (Milvus Data Migration) is a data migration tool for Milvus. It can import Faiss and HDF5 data files into Milvus, migrate data between Milvus instances, and back up Milvus data in batches to local files. Using MilvusDM helps developers work more efficiently and reduces operation and maintenance costs.

- Operating system requirements

| Operating system | Supported versions |
|---|---|
| CentOS | 7.5 or higher |
| Ubuntu LTS | 18.04 or higher |

- Software requirements

| Software | Version |
|---|---|
| Milvus | 0.10.x, 1.x, or 2.x |
| Python3 | 3.7 or higher |
| pip3 | Matching your Python 3 version |

- Configure environment variables

Add the following two lines to the `~/.bashrc` file:

```shell
export MILVUSDM_PATH='/home/$user/milvusdm'
export LOGS_NUM=0
```

- `MILVUSDM_PATH`: The working path of MilvusDM (replace `$user` with your user name). Logs and data generated by MilvusDM are stored in this path. The default value is `/home/$user/milvusdm`.
- `LOGS_NUM`: MilvusDM generates one log file per day. This parameter defines the number of log files to keep. The default value is 0, which means all log files are kept.
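The two variables and their documented defaults can be illustrated with a short Python sketch. This is not MilvusDM's own code: `read_milvusdm_env` and `logs_to_delete` are hypothetical helpers, and the retention logic assumes that log file names sort chronologically (for example, date-stamped names), which this README does not specify.

```python
import os
from typing import List, Tuple


def read_milvusdm_env(user: str) -> Tuple[str, int]:
    """Return (MILVUSDM_PATH, LOGS_NUM), falling back to the documented defaults."""
    path = os.environ.get("MILVUSDM_PATH", f"/home/{user}/milvusdm")
    logs_num = int(os.environ.get("LOGS_NUM", "0"))
    return path, logs_num


def logs_to_delete(log_files: List[str], logs_num: int) -> List[str]:
    """Return the log files to remove so that only the newest `logs_num` remain.

    Assumption (hypothetical): file names sort chronologically, e.g. date-stamped.
    LOGS_NUM = 0 keeps every log file, so nothing is selected for deletion.
    """
    if logs_num <= 0:
        return []
    # After sorting, the oldest files come first; keep the last `logs_num`.
    return sorted(log_files)[:-logs_num]
```

With `LOGS_NUM=2` and three daily log files, only the oldest one would be selected for removal.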

Apply the configured environment variables to the current shell:

```shell
$ source ~/.bashrc
```

- Install milvusdm by pip

```shell
$ pip3 install pymilvusdm==2.0
```

pymilvusdm 2.0 migrates data from Milvus 0.10.x or 1.x to Milvus 2.x.

F2M (Faiss to Milvus): Import one Faiss index file into a specified collection or partition in Milvus.

In the current version, only FLAT and IVF_FLAT indexes on floating-point vectors are supported.

- Download the example yaml

```shell
$ wget https://raw.githubusercontent.com/milvus-io/milvus-tools/main/yamls/F2M.yaml
```

- Configure the yaml

```yaml
F2M:
  milvus_version: 1.x
  data_path: '/home/data/faiss1.index'
  dest_host: '127.0.0.1'
  dest_port: 19530
  mode: 'append'
  dest_collection_name: 'test'
  dest_partition_name: ''
  collection_parameter:
    dimension: 256
    index_file_size: 1024
    metric_type: 'L2'
```

- Optional parameter: `dest_partition_name`.
- The `mode` parameter can be `append`, `skip`, or `overwrite`. It takes effect only when the specified collection already exists in Milvus:
  - `append`: Append data to the existing collection.
  - `skip`: Skip the existing collection and do not perform any operation.
  - `overwrite`: Delete the old collection, create a new collection with the same name, and then import the data.
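A minimal sketch of the three `mode` behaviors, using a plain Python dict as a hypothetical stand-in for the collections stored in Milvus. The `apply_mode` helper is illustrative only and is not part of MilvusDM.

```python
from typing import Dict, List

VALID_MODES = ("append", "skip", "overwrite")


def apply_mode(collections: Dict[str, List[int]], name: str,
               rows: List[int], mode: str) -> Dict[str, List[int]]:
    """Import `rows` into collection `name`, honoring the migration `mode`.

    `collections` is a hypothetical in-memory stand-in for Milvus:
    collection name -> stored rows.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}, got {mode!r}")
    if name not in collections:
        # mode only matters for an existing collection: create it and import.
        collections[name] = list(rows)
    elif mode == "append":
        collections[name].extend(rows)  # add to the existing data
    elif mode == "overwrite":
        collections[name] = list(rows)  # drop, recreate with the same name, import
    # mode == "skip": leave the existing collection untouched.
    return collections
```

Note that `skip` on a collection that does not exist yet still creates it, because the mode only applies to existing collections.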

- Parameter description

| Parameter | Description | Example |
|---|---|---|
| F2M | Task: Import data from a Faiss index file into Milvus. | |
| milvus_version | Version of Milvus. | 0.10.5 |
| data_path | Path to the Faiss index file. | '/home/user/data/faiss.index' |
| dest_host | Milvus server address. | '127.0.0.1' |
| dest_port | Milvus server port. | 19530 |
| mode | Mode of migration. | 'append' |
| dest_collection_name | Name of the collection to import data to. | 'test' |
| dest_partition_name | Name of the partition to import data to (optional). | 'partition' |
| collection_parameter | Collection-specific information such as vector dimension, index file size, and similarity metric. | dimension: 256, index_file_size: 1024, metric_type: 'L2' |
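PyYAML's `yaml.safe_load` turns F2M.yaml into nested dictionaries, so a config can be sanity-checked before running `milvusdm`. The sketch below works on such a dict directly. The helper `missing_f2m_keys` is hypothetical, and treating every key except `dest_partition_name` as required is an assumption drawn from the F2M parameter table.

```python
from typing import Any, Dict, List

# Keys from the F2M parameter table; dest_partition_name is left out because it is optional.
REQUIRED_KEYS = ["milvus_version", "data_path", "dest_host", "dest_port",
                 "mode", "dest_collection_name", "collection_parameter"]
COLLECTION_KEYS = ["dimension", "index_file_size", "metric_type"]


def missing_f2m_keys(config: Dict[str, Any]) -> List[str]:
    """List the settings an F2M config lacks (an empty list means it looks complete)."""
    task = config.get("F2M")
    if not isinstance(task, dict):
        return ["F2M"]
    # Treat absent keys and empty values (None or '') as missing.
    missing = [key for key in REQUIRED_KEYS if task.get(key) in (None, "")]
    params = task.get("collection_parameter") or {}
    missing += [f"collection_parameter.{key}" for key in COLLECTION_KEYS
                if key not in params]
    return missing
```

In practice the input would be something like `yaml.safe_load(open("F2M.yaml"))`; the check itself only needs a dict.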

- Usage

```shell
$ milvusdm --yaml F2M.yaml
```

H2M (HDF5 to Milvus): Import one or more HDF5 files into a specified collection or partition in Milvus.

We provide HDF5 examples of float vectors (dim 100) and binary vectors (dim 512) with their corresponding IDs.

- Download the yaml

```shell
$ wget https://raw.githubusercontent.com/milvus-io/milvus-tools/main/yamls/H2M.yaml
```

- Configure the yaml

```yaml
H2M:
  milvus_version: 1.x
  data_path:
    - /Users/zilliz/float_1.h5
    - /Users/zilliz/float_2.h5
  data_dir:
  dest_host: '127.0.0.1'
  dest_port: 19530
  mode: 'overwrite' # 'skip/append/overwrite'
  dest_collection_name: 'test_float'
  dest_partition_name: 'partition_1'
  collection_parameter:
    dimension: 128
    index_file_size: 1024
    metric_type: 'L2'
```

or

```yaml
H2M:
  milvus_version: 1.x
  data_path:
  data_dir: '/Users/zilliz/HDF5_data'
  dest_host: '127.0.0.1'
  dest_port: 19530
  mode: 'append' # 'skip/append/overwrite'
  dest_collection_name: 'test_binary'
  dest_partition_name:
  collection_parameter:
    dimension: 512
    index_file_size: 1024
    metric_type: 'HAMMING'
```

- Optional parameter: `dest_partition_name`.
- Configure only one of `data_path` and `data_dir`, and leave the other one empty (None).
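The rule that exactly one of `data_path` and `data_dir` is set can be sketched as follows. `resolve_h5_files` is a hypothetical helper, and it assumes that the HDF5 files in `data_dir` use the `.h5` extension, as in the examples; MilvusDM's own file discovery may differ.

```python
from pathlib import Path
from typing import List, Optional, Sequence


def resolve_h5_files(data_path: Optional[Sequence[str]],
                     data_dir: Optional[str]) -> List[str]:
    """Return the HDF5 files an H2M task would read.

    Exactly one of data_path / data_dir may be set; the other stays empty (None).
    Assumption (hypothetical): files in data_dir use the .h5 extension.
    """
    if bool(data_path) == bool(data_dir):
        raise ValueError("configure exactly one of data_path or data_dir")
    if data_path:
        return list(data_path)
    # Sort the directory listing so the import order is deterministic.
    return sorted(str(p) for p in Path(data_dir).glob("*.h5"))
```

An empty `data_dir:` line in the YAML loads as `None`, which the check treats as "not configured".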

- Parameter description

| Parameter | Description | Example |
|---|---|---|
| H2M | Task: Import HDF5 data into Milvus. | |
| milvus_version | Version of Milvus. | 0.10.5 |
| data_path | Paths to the HDF5 files. | /Users/zilliz/float_1.h5, /Users/zilliz/float_2.h5 |
| data_dir | Directory containing the HDF5 files. | /Users/zilliz/Desktop/HDF5_data |
| dest_host | Milvus server address. | '127.0.0.1' |
| dest_port | Milvus server port. | 19530 |
| mode | Mode of migration. | 'append' |
| dest_collection_name | Name of the collection to import data to. | 'test_float' |
| dest_partition_name | Name of the partition to import data to (optional). | 'partition_1' |
| collection_parameter | Collection-specific information such as vector dimension, index file size, and similarity metric. | dimension: 512, index_file_size: 1024, metric_type: 'HAMMING' |

- Usage

```shell
$ milvusdm --yaml H2M.yaml
```

M2M (Milvus to Milvus): Copy a collection, or one or more partitions of a collection, from source_milvus into the corresponding collection or partition in dest_milvus.

MilvusDM does not support migrating data from Milvus 2.0 standalone to Milvus 2.0 cluster.

- Download the yaml

```shell
$ wget https://raw.githubusercontent.com/milvus-io/milvus-tools/main/yamls/M2M.yaml
```

- Configure the yaml

```yaml
M2M:
  milvus_version: 1.x
  source_milvus_path: '/home/user/milvus'
  mysql_parameter:
    host: '127.0.0.1'
    user: 'root'
    port: 3306
    password: '123456'
    database: 'milvus'
  source_collection: # specify the 'partition_1' and 'partition_2' partitions of the 'test' collection.
    test:
      - 'partition_1'
      - 'partition_2'
  dest_host: '127.0.0.1'
  dest_port: 19530
  mode: 'skip' # 'skip/append/overwrite'
```

Or

```yaml
M2M:
  milvus_version: 1.x
  source_milvus_path: '/home/user/milvus'
  mysql_parameter:
  source_collection: # specify the collection named 'test'
    test:
  dest_host: '127.0.0.1'
  dest_port: 19530
  mode: 'skip' # 'skip/append/overwrite'
```

- Required parameters: `source_milvus_path`, `source_collection`, `dest_host`, `dest_port`, and `mode`.
- If you use MySQL to manage source_milvus metadata, configure the `mysql_parameter` parameter. Leave it empty if you use SQLite.
- The `source_collection` parameter must specify a collection name. The partition names under it are optional, and you can list multiple partitions.
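A sketch of how a parsed `source_collection` value maps to what gets copied, assuming a single collection per task as described above. In YAML, a bare `test:` with no partitions loads as `None`, which the hypothetical `collection_targets` helper reads as "the whole collection". This is an illustration, not MilvusDM's code.

```python
from typing import Dict, List, Optional, Tuple


def collection_targets(
        source_collection: Dict[str, Optional[List[str]]]
) -> List[Tuple[str, Optional[str]]]:
    """Flatten a parsed source_collection into (collection, partition) pairs.

    A partition of None stands for the whole collection
    (a bare `test:` in YAML loads as None).
    """
    # Assumption: exactly one collection per task, as this README describes.
    if not source_collection or len(source_collection) != 1:
        raise ValueError("source_collection must name exactly one collection")
    collection, partitions = next(iter(source_collection.items()))
    if not partitions:
        return [(collection, None)]
    return [(collection, partition) for partition in partitions]
```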

- Parameter description

| Parameter | Description | Example |
|---|---|---|
| M2M | Task: Copy data from Milvus to Milvus of the same version. | |
| milvus_version | Version of the destination Milvus. | 0.10.5 |
| source_milvus_path | Working directory of the source Milvus. | '/home/user/milvus' |
| mysql_parameter | MySQL settings for the source Milvus: host, user, port, password, and database. | host: '127.0.0.1', user: 'root', port: 3306, password: '123456', database: 'milvus' |
| source_collection | Names of the collection and its partitions in the source Milvus. | test: ['partition_1', 'partition_2'] |
| dest_host | Target Milvus server address. | '127.0.0.1' |
| dest_port | Target Milvus server port. | 19530 |
| mode | Mode of migration. | 'skip' |

- Usage

This copies the collection data from source_milvus to dest_milvus.

```shell
$ milvusdm --yaml M2M.yaml
```

M2H (Milvus to HDF5): Export a Milvus collection, or one or more partitions of a collection, to local HDF5 files.

- Download the yaml

```shell
$ wget https://raw.githubusercontent.com/milvus-io/milvus-tools/main/yamls/M2H.yaml
```

- Configure the yaml

```yaml
M2H:
  milvus_version: 1.x
  source_milvus_path: '/home/user/milvus'
  mysql_parameter:
    host: '127.0.0.1'
    user: 'root'
    port: 3306
    password: '123456'
    database: 'milvus'
  source_collection: # specify the 'partition_1' and 'partition_2' partitions of the 'test' collection.
    test:
      - 'partition_1'
      - 'partition_2'
  data_dir: '/home/user/data'
```

Or

```yaml
M2H:
  milvus_version: 1.x
  source_milvus_path: '/home/user/milvus'
  mysql_parameter:
  source_collection: # specify the collection named 'test'
    test:
  data_dir: '/home/user/data'
```

- The `source_milvus_path`, `source_collection`, and `data_dir` parameters are required.
- If you use MySQL to manage source_milvus metadata, configure the `mysql_parameter` parameter. Leave it empty if you use SQLite.
- The `source_collection` parameter must specify a collection name. The partition names under it are optional, and you can list multiple partitions.
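The choice between MySQL and SQLite metadata can be expressed as a small check on `mysql_parameter`. This is an illustrative sketch, not MilvusDM's implementation: `metadata_backend` is a hypothetical name, and the required keys are the five MySQL settings this README lists (host, user, port, password, database).

```python
from typing import Any, Dict, Optional

# The MySQL settings listed for mysql_parameter in this README.
MYSQL_KEYS = ("host", "user", "port", "password", "database")


def metadata_backend(mysql_parameter: Optional[Dict[str, Any]]) -> str:
    """Return 'mysql' or 'sqlite' depending on whether mysql_parameter is filled in."""
    if not mysql_parameter:
        # An empty `mysql_parameter:` line loads as None: SQLite metadata.
        return "sqlite"
    missing = [key for key in MYSQL_KEYS if key not in mysql_parameter]
    if missing:
        raise ValueError(f"mysql_parameter is missing: {', '.join(missing)}")
    return "mysql"
```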

- Parameter description

| Parameter | Description | Example |
|---|---|---|
| M2H | Task: Export Milvus data to local HDF5 files. | |
| milvus_version | Version of the source Milvus. | 0.10.5 |
| source_milvus_path | Working directory of Milvus. | '/home/user/milvus' |
| mysql_parameter | MySQL settings for Milvus: host, user, port, password, and database. | host: '127.0.0.1', user: 'root', port: 3306, password: '123456', database: 'milvus' |
| source_collection | Names of the collection and its partitions in Milvus. | test: ['partition_1', 'partition_2'] |
| data_dir | Directory to save HDF5 files to. | '/home/user/data' |

- Usage

This generates the corresponding HDF5 files and an H2M configuration file in the `data_dir` directory.

```shell
$ milvusdm --yaml M2H.yaml
```

If you would like to contribute code to this project, here is an overview of the code structure:

- pymilvusdm
  - core
    - milvus_client.py: Performs client operations in Milvus.
    - read_data.py: Reads the HDF5 files on your local drive. (Add your code here to support reading data files in other formats.)
    - read_faiss_data.py: Reads Faiss data files.
    - read_milvus_data.py: Reads Milvus data files.
    - read_milvus_meta.py: Reads Milvus metadata.
    - data_to_milvus.py: Creates collections or partitions as specified in .yaml files and imports vectors and the corresponding IDs into Milvus.
    - save_data.py: Saves data as HDF5 files.
    - write_logs.py: Writes `debug`/`info`/`error` logs during runtime.
  - faiss_to_milvus.py: Imports Faiss data into Milvus.
  - hdf5_to_milvus.py: Imports HDF5 files into Milvus.
  - milvus_to_milvus.py: Migrates data from a source Milvus to a target Milvus.
  - milvus_to_hdf5.py: Saves Milvus data as HDF5 files.
  - main.py: Executes tasks as specified by the received .yaml file.
  - setting.py: Stores configurations for MilvusDM operation.
- setup.py: Creates and uploads pymilvusdm file packages to PyPI (Python Package Index).
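Each example YAML file has a single top-level key (F2M, H2M, M2M, or M2H) that names the task. Task dispatch of the kind main.py performs could look roughly like the sketch below. `run_task` and the lambda handlers are hypothetical placeholders for the modules listed above, not the project's actual code.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Any]


def run_task(config: Dict[str, Any], handlers: Dict[str, Handler]) -> Any:
    """Dispatch a parsed YAML config to the handler named by its top-level key."""
    tasks = [key for key in config if key in handlers]
    if len(tasks) != 1:
        raise ValueError(f"expected exactly one of {sorted(handlers)}, got {tasks}")
    task = tasks[0]
    # Pass only the task's own settings (the block under F2M:, H2M:, ...).
    return handlers[task](config[task])


# Hypothetical placeholders standing in for faiss_to_milvus.py, hdf5_to_milvus.py, etc.
HANDLERS: Dict[str, Handler] = {
    "F2M": lambda cfg: f"faiss -> milvus: {cfg['data_path']}",
    "H2M": lambda cfg: "hdf5 -> milvus",
    "M2M": lambda cfg: "milvus -> milvus",
    "M2H": lambda cfg: f"milvus -> hdf5: {cfg['data_dir']}",
}
```

Keeping the handlers in a dict means a new task type only needs one new entry, which matches how each task lives in its own module.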

Planned features:

- Faiss to Milvus
  - Support vector data of binary type.
- Milvus to Milvus
  - Specify multiple collections or multiple partitions based on a blocklist and an allowlist.
  - When `source_collection='*'`, export all Milvus data.
  - Support merging multiple collections or partitions in source_milvus into one collection in dest_milvus.
- Milvus Dump
- Milvus Restore