In this demo we will show how to run JDG with x-site replication on several OpenShift clusters.

- A running OpenShift cluster (can be `oc cluster up`; the setup principles are exactly the same)
- `oc` client installed
- `make` installed
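As a quick sanity check of the prerequisites, a small shell helper can report which tools are missing from `PATH`. This is only a sketch; the function name `missing_tools` is made up for this example and is not part of the demo.

```shell
# Hypothetical helper (not part of the demo): print the name of every
# listed tool that cannot be found on PATH. Prints nothing if all exist.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}
```

Calling `missing_tools oc make` should print nothing when both tools are installed. It does not verify that the OpenShift cluster itself is reachable.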

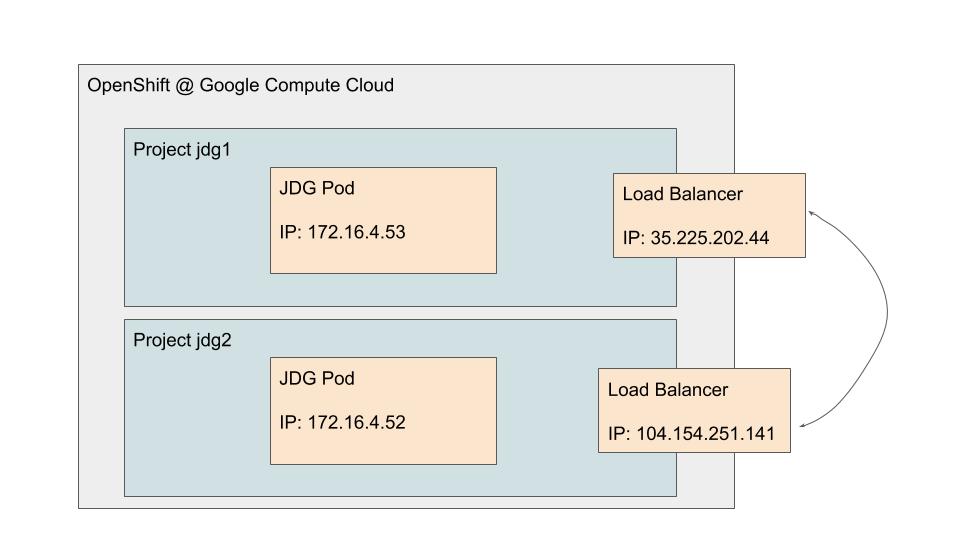

It is perfectly fine to perform this demo on oc cluster up using two separate projects. Here we will be using projects jdg1 and jdg2. The setup looks like the following:

Each Pod binds to its local IP address (eth0). This IP is obtained from the Downward API, but it can also be found using `hostname -i` or by setting the JGroups bind address to `bind_addr="match-interface:eth0"` (see Bela’s take on JGroups @ Docker).

We create a Load Balancer with an externally reachable IP address in front of each group of Pods. Creating such a Load Balancer usually takes a while.

Finally, we use those externally reachable addresses to form the so-called Global Cluster for x-site replication.

- `oc new-project jdg1` - creates project `jdg1`
- `make install-templates test` - starts a single node of JDG
- `oc new-project jdg2` - creates project `jdg2`
- `make install-templates test` - starts a single node of JDG

At this point we have two separate (singleton) clusters in two separate projects.
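The project setup above can be sketched as a small script. The helper names (`run`, `setup_project`, `setup_all`) and the `DRY_RUN` switch are assumptions introduced for illustration. The sketch also assumes it is run from the directory containing the demo's Makefile.

```shell
# Hypothetical wrapper: with DRY_RUN=1 only print the command,
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create one project and start a single JDG node in it.
# `oc new-project` also switches to the new project, so the
# make target is applied there.
setup_project() {
  run oc new-project "$1" && run make install-templates test
}

# Set up both projects used in this demo, stopping at the first failure.
setup_all() {
  for project in jdg1 jdg2; do
    setup_project "$project" || return 1
  done
}
```

Running `DRY_RUN=1 setup_all` first prints the four commands without touching the cluster, which is a cheap way to review them before running `setup_all` for real.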

- `oc get svc/jdg-app-x-site -o wide -n jdg2` - grab the external IP address of the Load Balancer in `jdg2` and write it down
- `oc get svc/jdg-app-x-site -o wide -n jdg1` - grab the external IP address of the Load Balancer in `jdg1` and write it down
- `oc edit cm/jdg-app-configuration -n jdg1` - edit the configuration of `jdg1`, then do the same with `-n jdg2` for `jdg2`:
  - Replace all `external_addr` properties with the real Load Balancer IP.
  - Modify `initial_hosts` in `TCPPING` to `LB1[55200],LB2[55200]`.
  - Configure sites in `relay`.
- `oc delete pod jdg-app-0 -n jdg2 && oc delete pod jdg-app-0 -n jdg1` - restart the pods
- Check the logs and make sure the x-site cluster formed correctly. This may take a moment.
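Instead of copying the addresses by hand, the Load Balancer IP can be read with a JSONPath query, and the `initial_hosts` value can be assembled from the results. The following is a minimal sketch; the function names `lb_ip` and `initial_hosts` are invented for this example. It assumes the Service reports an IP under `.status.loadBalancer.ingress[0].ip`, while some providers report a hostname there instead.

```shell
# Read the external IP of the x-site Load Balancer Service in a project.
# Prints nothing while the Load Balancer is still being provisioned.
lb_ip() {
  oc get svc/jdg-app-x-site -n "$1" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Build the TCPPING initial_hosts value from a list of Load Balancer IPs.
# Port 55200 is the jgroups-tcp-relay port used in this demo.
initial_hosts() {
  hosts=""
  for ip in "$@"; do
    hosts="${hosts:+$hosts,}${ip}[55200]"
  done
  echo "$hosts"
}
```

For example, `initial_hosts "$(lb_ip jdg1)" "$(lb_ip jdg2)"` produces a value of the form `LB1[55200],LB2[55200]` once both Load Balancers have an address.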

// ConfigMap jdg-app-configuration

//in Infinispan Subsystem

<distributed-cache name="default">

<!-- Depending on what you want to do, you need to put different things here ;) -->

<backups>

<backup site="gcp2" strategy="ASYNC" />

</backups>

</distributed-cache>

...

<subsystem xmlns="urn:infinispan:server:jgroups:8.0">

<channels default="cluster">

<channel name="cluster"/>

<!-- We need additional JGroups Channel which binds into relay stack -->

<channel name="global" stack="relay-global"/>

</channels>

<stacks default="${jboss.default.jgroups.stack:kubernetes}">

<stack name="relay-global">

<transport type="TCP" socket-binding="jgroups-tcp-relay">

<!-- Since we are behind the Load Balancer, we need to put an

external IP here. JGroups needs to be aware of the IP address translation -->

<property name="external_addr">${jboss.bind.address:127.0.0.1}</property>

</transport>

<protocol type="TCPPING">

<!-- Here we put all global cluster members (their LB IPs) -->

<property name="initial_hosts">${jboss.bind.address:127.0.0.1}[55200]</property>

</protocol>

<protocol type="MERGE3"/>

<protocol type="FD_SOCK" socket-binding="jgroups-tcp-fd">

<!-- Again, the FD protocol needs to be aware of the address translation -->

<property name="external_addr">${jboss.bind.address:127.0.0.1}</property>

</protocol>

<protocol type="FD_ALL"/>

<protocol type="VERIFY_SUSPECT"/>

<protocol type="pbcast.NAKACK2">

<property name="use_mcast_xmit">false</property>

</protocol>

<protocol type="UNICAST3"/>

<protocol type="pbcast.STABLE"/>

<protocol type="pbcast.GMS"/>

<protocol type="MFC"/>

</stack>

<!-- The stack below is responsible for local cluster discovery (within a single DC) -->

<stack name="kubernetes">

<transport type="TCP" socket-binding="jgroups-tcp"/>

<!-- We use DNS_PING to avoid additional permissions -->

<protocol type="openshift.DNS_PING" socket-binding="jgroups-mping"/>

<protocol type="MERGE3"/>

<protocol type="FD_SOCK" socket-binding="jgroups-tcp-fd"/>

<protocol type="FD_ALL"/>

<protocol type="VERIFY_SUSPECT"/>

<protocol type="pbcast.NAKACK2">

<property name="use_mcast_xmit">false</property>

</protocol>

<protocol type="UNICAST3"/>

<protocol type="pbcast.STABLE"/>

<protocol type="pbcast.GMS"/>

<protocol type="MFC"/>

<protocol type="FRAG3"/>

<!-- The site below is the name of "my" site. This needs to be replaced by gcp2 for jdg2 -->

<relay site="gcp1">

<!-- And again, this needs to be set to gcp1 for jdg2 -->

<remote-site name="gcp2" stack="relay-global" cluster="global"/>

<property name="relay_multicasts">false</property>

</relay>

</stack>

</stacks>

</subsystem>

...

<socket-binding-group name="standard-sockets" default-interface="public" port-offset="${jboss.socket.binding.port-offset:0}">

<socket-binding name="jgroups-tcp" port="7600"/>

<!-- This port needs to match the port exposed by the LB Service -->

<socket-binding name="jgroups-tcp-relay" port="55200" />

</socket-binding-group>
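Replacing the `external_addr` placeholders in the configuration above can also be scripted. The filter below is a rough sketch and not part of the demo. The function name `set_external_addr` is hypothetical, and it assumes each `external_addr` property sits on a single line, as it does in the listing.

```shell
# Hypothetical filter: read configuration text on stdin and replace the
# value of every single-line external_addr property with the given IP.
# Lines that do not mention external_addr pass through unchanged.
set_external_addr() {
  sed "/name=\"external_addr\"/ s|>[^<]*<|>$1<|"
}
```

One possible use, assuming the Load Balancer IP of the project is stored in a variable named `LB1`, is `oc get cm/jdg-app-configuration -n jdg1 -o yaml | set_external_addr "$LB1" | oc replace -n jdg1 -f -`. The `initial_hosts` value and the site names in `relay` still need to be edited separately.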