This is the official implementation of Flipped-VQA (EMNLP 2023) (arxiv).

Dohwan Ko1*, Ji Soo Lee1*, Wooyoung Kang2, Byungseok Roh2, Hyunwoo J. Kim1.

1Department of Computer Science and Engineering, Korea University 2Kakao Brain

To install requirements, run:

git clone https://github.com/mlvlab/Flipped-VQA.git

cd Flipped-VQA

mkdir pretrained

mkdir data

conda create -n ovqa python=3.8

conda activate flipped-vqa

sh setup.sh

You can download our preprocessed datasets (NExT-QA, STAR, DramaQA, VLEP and TVQA) at here. Put them in ./data. Also, you can download original LLaMA at here, and put the checkpoint in ./pretrained.

./pretrained

└─ llama

|─ 7B

| |─ consolidated.00.pth

| └─ params.json

|─ 13B

| :

|─ 33B

| :

└─ tokenizer.model

./data

|─ nextqa

| |─ train.csv

| |─ val.csv

| └─ clipvitl14.pth

|─ star

| :

|─ dramaqa

| :

|─ vlep

| :

└─ tvqa

:

torchrun --rdzv_endpoint 127.0.0.1:1234 --nproc_per_node 4 train.py --model 7B \

--max_seq_len 128 --batch_size 8 --epochs 5 --warmup_epochs 2 --bias 3.5 --tau 100. --max_feats 10 --dataset nextqa \

--blr 9e-2 --weight_decay 0.14 --output_dir ./checkpoint/nextqa --accum_iter 2 --vaq --qav

torchrun --rdzv_endpoint 127.0.0.1:1234 --nproc_per_node 4 train.py --model 7B \

--max_seq_len 128 --batch_size 8 --epochs 5 --warmup_epochs 2 --bias 3 --tau 100. --max_feats 10 --dataset star \

--blr 9e-2 --weight_decay 0.16 --output_dir ./checkpoint/star --accum_iter 1 --vaq --qav

torchrun --rdzv_endpoint 127.0.0.1:1234 --nproc_per_node 4 train.py --model 7B \

--max_seq_len 384 --batch_size 2 --epochs 5 --warmup_epochs 2 --bias 3 --tau 100. --max_feats 10 --dataset dramaqa \

--blr 9e-2 --weight_decay 0.10 --output_dir ./checkpoint/dramaqa --accum_iter 8 --vaq --qav

torchrun --rdzv_endpoint 127.0.0.1:1234 --nproc_per_node 4 train.py --model 7B \

--max_seq_len 256 --batch_size 4 --epochs 5 --warmup_epochs 2 --bias 3 --tau 100. --max_feats 10 --dataset vlep \

--blr 6e-2 --weight_decay 0.20 --output_dir ./checkpoint/vlep --accum_iter 8 --sub --qav

torchrun --rdzv_endpoint 127.0.0.1:1234 --nproc_per_node 8 train.py --model 7B \

--max_seq_len 650 --batch_size 1 --epochs 5 --warmup_epochs 2 --bias 3 --tau 100. --max_feats 10 --dataset tvqa \

--blr 7e-2 --weight_decay 0.02 --output_dir ./checkpoint/tvqa --dataset tvqa --accum_iter 4 --sub --vaq --qav

This repo is built upon LLaMA-Adapter.

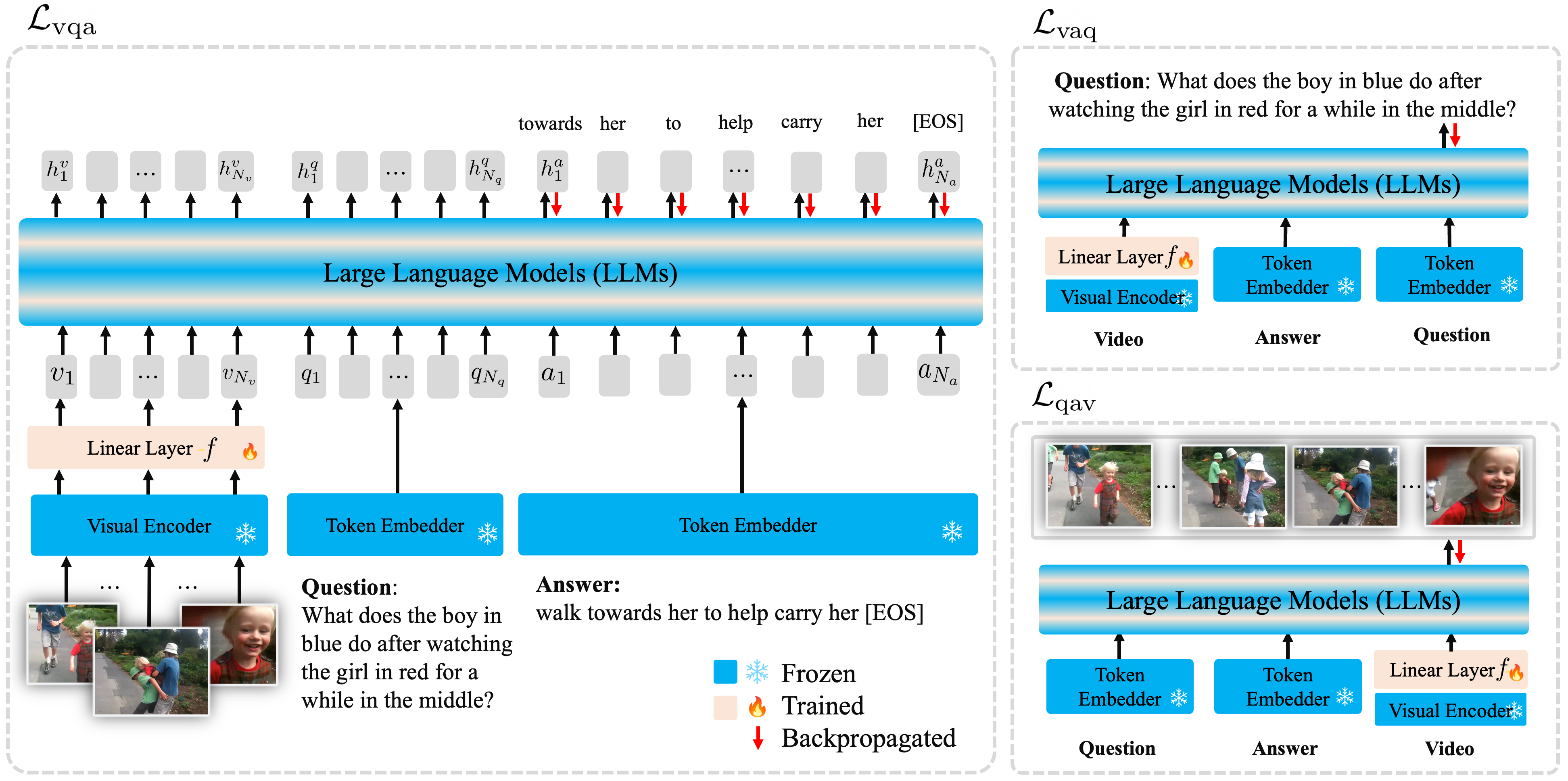

@inproceedings{ko2023large,

title={Large Language Models are Temporal and Causal Reasoners for Video Question Answering},

author={Ko, Dohwan and Lee, Ji Soo and Kang, Wooyoung and Roh, Byungseok and Kim, Hyunwoo J},

booktitle={EMNLP},

year={2023}

}