This tutorial demonstrates the use of Nvidia GPUs for machine learning on Industrial Edge. In particular, it shows how to turn an official TensorFlow container with a Jupyter notebook server into a GPU-accelerated app. For introductory material and guidance on creating your own Industrial Edge apps, please see the documentation for app developers.

- Nvidia GPU compatible with Nvidia's OSS drivers

- Industrial Edge App Publisher (IEAP) v1.13.5

- Industrial Edge Device Kit (IEDK) v1.16.0-4

- Industrial Edge Device with GPU support enabled

Let us use a stock TensorFlow container that automatically starts the Jupyter notebook server.

The image is tensorflow/tensorflow:2.14.0-gpu-jupyter.

It contains all necessary Nvidia support libraries.

Hence, we have as a minimal Docker Compose file:

    version: '2.4'
    services:
      jupyter-notebook-with-gpu:
        image: tensorflow/tensorflow:2.14.0-gpu-jupyter
        mem_limit: 8192mb

However, this will not yet grant GPU access to the app as Docker containers cannot access GPUs out-of-the-box.

Important

Industrial Edge requires a mem_limit to be specified.

If the memory consumption exceeds the limit during runtime, the app is stopped.

As TensorFlow is very memory-hungry, we use a rather large amount of memory.
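Because exceeding the limit stops the app, it can be useful to check from inside a notebook how close the container is to its limit. The following is a minimal sketch, assuming the container exposes the standard Linux cgroup memory files (cgroup v2 or v1 paths). The helper names `read_bytes_value` and `memory_limit_and_usage` are made up for this illustration and are not part of Industrial Edge or TensorFlow.

```python
from pathlib import Path

# Standard Linux cgroup locations: (limit file, usage file) for v2, then v1.
# Assumption: one of these pairs is visible inside the container.
CGROUP_FILES = [
    ("/sys/fs/cgroup/memory.max", "/sys/fs/cgroup/memory.current"),
    ("/sys/fs/cgroup/memory/memory.limit_in_bytes",
     "/sys/fs/cgroup/memory/memory.usage_in_bytes"),
]


def read_bytes_value(path):
    """Return the integer stored in a cgroup file, or None if unavailable."""
    try:
        text = Path(path).read_text().strip()
    except OSError:
        return None
    # cgroup v2 writes the word "max" when no limit is set.
    return int(text) if text.isdigit() else None


def memory_limit_and_usage():
    """Return (limit, usage) in bytes, or None if no limit can be read."""
    for limit_file, usage_file in CGROUP_FILES:
        limit = read_bytes_value(limit_file)
        usage = read_bytes_value(usage_file)
        if limit is not None and usage is not None:
            return limit, usage
    return None


if __name__ == "__main__":
    result = memory_limit_and_usage()
    if result is None:
        print("No cgroup memory limit found")
    else:
        limit, usage = result
        print(f"Using {usage / 2**20:.0f} MiB of {limit / 2**20:.0f} MiB")
```

Running this in a notebook cell before and after loading a model gives a rough idea of how much headroom is left.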

To enable GPU acceleration, we need to provide GPU access to the jupyter-notebook-with-gpu service by means of Industrial Edge's Resource Manager.

This is accomplished with an x-resources:limits entry under the service.

Specifying nvidia.com/gpu: 1 will claim one Nvidia GPU for exclusive use by this container.

Generally, one can claim an arbitrary number of resources.

Note

Device names or numbers are not hardcoded; only the number of instances of a specific resource class needs to be given. Which instances (devices) are actually mapped into the container is decided by the Resource Manager.

Do not forget the runtime: iedge entry so that the extension field x-resources is handled correctly.

If runtime: iedge is missing, the resource claim is ignored, and no GPU is allocated.
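Since a missing `runtime: iedge` fails silently, a small pre-flight check can catch it before the app is published. The sketch below works on an already parsed Compose file represented as a plain Python dictionary; the function name `check_resource_claims` is hypothetical, and the rules it checks are only the two stated in this tutorial (a `mem_limit` is required, and `x-resources` needs `runtime: iedge`).

```python
def check_resource_claims(compose: dict) -> list:
    """Return a list of human-readable problems found in the services."""
    problems = []
    for name, service in compose.get("services", {}).items():
        limits = (service.get("x-resources") or {}).get("limits") or {}
        # Without runtime: iedge the x-resources claim is ignored.
        if limits and service.get("runtime") != "iedge":
            problems.append(f"{name}: x-resources is set but runtime is not 'iedge'")
        # Industrial Edge requires a memory limit for every service.
        if "mem_limit" not in service:
            problems.append(f"{name}: mem_limit is missing")
    return problems


# Example input mirroring the Compose file of this tutorial.
compose = {
    "version": "2.4",
    "services": {
        "jupyter-notebook-with-gpu": {
            "image": "tensorflow/tensorflow:2.14.0-gpu-jupyter",
            "runtime": "iedge",
            "x-resources": {"limits": {"nvidia.com/gpu": 1}},
            "mem_limit": "8192mb",
        }
    },
}

if __name__ == "__main__":
    for problem in check_resource_claims(compose) or ["no problems found"]:
        print(problem)
```

To run it against a real file, the dictionary could be loaded with any YAML parser; that step is left out here to keep the sketch free of third-party dependencies.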

With both entries in place, the Docker Compose file becomes:

    version: '2.4'
    services:
      jupyter-notebook-with-gpu:
        image: tensorflow/tensorflow:2.14.0-gpu-jupyter
        runtime: iedge
        x-resources:
          limits:
            nvidia.com/gpu: 1
        mem_limit: 8192mb

To turn the TensorFlow-Jupyter image into an Industrial Edge app, a few specific tweaks are needed:

- By default, Jupyter starts up with a random token which must be specified in the URL. This needs to be turned off by overriding the initial `command` (we use the command line arguments `--NotebookApp.token="" --NotebookApp.password=""`).
- We need an nginx config so that upon icon click, the Jupyter web page (under default port 8888) is opened: `[{"name":"jupyter-notebook-with-gpu","protocol":"HTTP","port":"8888","headers":"","rewriteTarget":"/jupyter-notebook-with-gpu"}]`. This makes the app available under the URL prefix `/jupyter-notebook-with-gpu`, i.e., the Jupyter app is accessed under `http://<ied-url>/jupyter-notebook-with-gpu`. At the same time, we need to tell Jupyter to use this URL as root, so that the Jupyter notebook works correctly (`--NotebookApp.base_url=/jupyter-notebook-with-gpu`).
- Moreover, we make the standard volumes `publish` and `cfg-data` available so that Jupyter notebooks can access them. These are needed to get files into and out of the container and to have persistent storage.
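The nginx `rewriteTarget` and the Jupyter `base_url` must name the same prefix, otherwise the notebook pages will not load correctly. One way to keep them in sync is to derive both from a single service name. The sketch below does this with the standard library only; `nginx_label` and `jupyter_args` are hypothetical helper names, and the generated values simply reproduce the ones used in this tutorial.

```python
import json


def nginx_label(service_name, port=8888):
    """Build the JSON value for the com_mwp_conf_nginx label."""
    entry = {
        "name": service_name,
        "protocol": "HTTP",
        "port": str(port),
        "headers": "",
        "rewriteTarget": f"/{service_name}",
    }
    # Compact separators give the same single-line form used in the label.
    return json.dumps([entry], separators=(",", ":"))


def jupyter_args(service_name):
    """Build the Jupyter arguments that match the nginx prefix."""
    return [
        "jupyter", "notebook",
        "--notebook-dir=/tf", "--ip", "0.0.0.0",
        "--no-browser", "--allow-root",
        f"--NotebookApp.base_url=/{service_name}",
        "--NotebookApp.token=",      # empty token: no token in the URL
        "--NotebookApp.password=",   # empty password
    ]


if __name__ == "__main__":
    name = "jupyter-notebook-with-gpu"
    print(nginx_label(name))
    print(" ".join(jupyter_args(name)))
```

Changing the service name in one place then updates both the label and the command consistently.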

Finally, we obtain the following Docker Compose file (see docker-compose.example.yml):

    version: '2.4'
    services:
      jupyter-notebook-with-gpu:
        image: tensorflow/tensorflow:2.14.0-gpu-jupyter
        command: '/bin/bash -c "jupyter notebook --notebook-dir=/tf --ip 0.0.0.0 --no-browser --allow-root --NotebookApp.allow_origin=* --NotebookApp.base_url=/jupyter-notebook-with-gpu --NotebookApp.token=\"\" --NotebookApp.password=\"\" > /tf/publish/jupyter-console.log 2>&1"'
        runtime: iedge
        x-resources:
          limits:
            nvidia.com/gpu: 1
        labels:
          com_mwp_conf_nginx: '[{"name":"jupyter-notebook-with-gpu","protocol":"HTTP","port":"8888","headers":"","rewriteTarget":"/jupyter-notebook-with-gpu"}]'
        mem_limit: 8192mb
        volumes:
          - ./publish/:/tf/publish/
          - ./cfg-data/:/tf/cfg-data/

One option to turn a Docker Compose file into an app is to use the Industrial Edge App Publisher (IEAP):

- Open IEAP.

- Ensure a workspace folder with several GB of free disk space is selected.

-

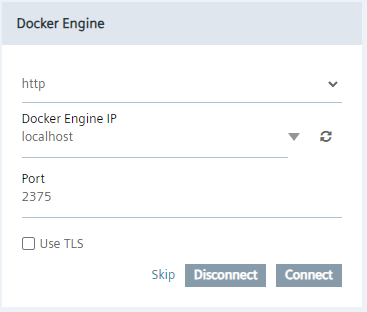

Make sure a Docker engine is connected. Most users will have a local Docker engine running.

Note

Within Windows Docker Desktop, one needs to check "Expose daemon on tcp://localhost:2375 without TLS".

-

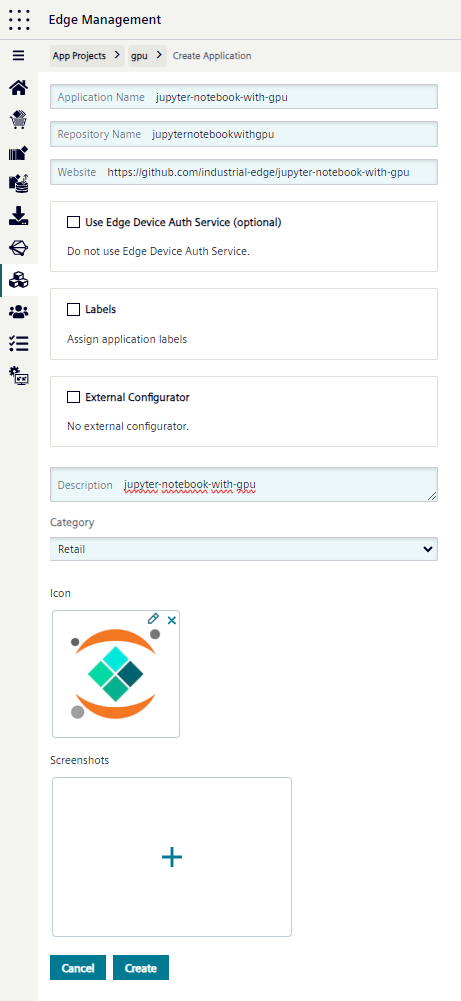

Connect to the IEM via "Go Online" in IEAP. For this, the IEM URL as well as credentials are needed. Upon success, the App Projects on the IEM are listed at the bottom of the page.

- Create a new application with the "+ Create Application" button. This will redirect you to the IEM web page where the new app parameters can be specified.

  Note

  It is necessary to first select the App Project within which the new application will be created. If there is no App Project yet, one needs to be created.

- Create an application version. This adds the actual code of the application.

  - Within IEAP, go to the list of app versions by clicking on the app icon (one might need to click the reload button to see the newly created app).

  - Click "+ Add New Version".

  - Select the Docker Compose version.

  - Click "Import YAML" and select the docker-compose.yaml file.

  - Edit the Docker Compose file (by clicking the pen symbol), select "Storage" and delete the duplicate entries `/publish` and `/cfg-data` (keep the `/tf/publish` and `/tf/cfg-data` mounts).

  - Click "Review". This will lead you to the review page showing the final Docker Compose file for the application.

  - Click on "Validate & Create".

  - Choose a proper app version number and click "Create". This pulls the Docker image in the background and assembles the Industrial Edge application version on the local computer. This may take some time and requires several GB of free disk space in the workspace folder.

    Note

    Sometimes, it is helpful to issue `docker pull tensorflow/tensorflow:2.14.0-gpu-jupyter` before this operation to make sure the Docker image is available locally.

- Upload the application to the IEM. This may take some time depending on the network connectivity of both the local machine and the IEM.
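Two of the preconditions in the steps above, a reachable Docker engine and a locally available image, can be checked from a script before starting IEAP. The following is a rough sketch under stated assumptions: it assumes the daemon is exposed on `tcp://localhost:2375` without TLS (as in the Docker Desktop note) and uses the Docker Engine API `/_ping` endpoint as well as the `docker pull` CLI command. The function names are made up for this illustration.

```python
import http.client
import shutil
import subprocess
import urllib.request

IMAGE = "tensorflow/tensorflow:2.14.0-gpu-jupyter"


def docker_daemon_reachable(host="localhost", port=2375, timeout=2.0):
    """Return True if the Docker Engine API answers on /_ping."""
    url = f"http://{host}:{port}/_ping"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, HTTP error status, or a non-HTTP reply.
        return False


def pull_command(image=IMAGE):
    """Return the CLI command that pre-pulls the image."""
    return ["docker", "pull", image]


def pre_pull(image=IMAGE):
    """Pull the image if the docker CLI is installed; report success."""
    if shutil.which("docker") is None:
        return False
    return subprocess.run(pull_command(image), check=False).returncode == 0


if __name__ == "__main__":
    print("Docker daemon reachable:", docker_daemon_reachable())
    print("Image pulled:", pre_pull())
```

Pulling the image this way can take a while, as it is several GB in size.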

From the IEM, install the app onto an IED that is equipped with a GPU and has the Industrial Edge Resource Manager available.

Note

Before installation, make sure that the GPU is not occupied by another app. The installation will fail if the app cannot be started, and the app will not start if it cannot claim a GPU.

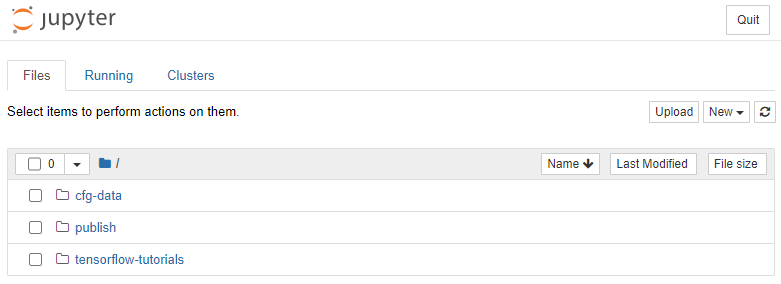

Upon successful installation, click on the app. The app should be running and the Jupyter file browser should open in a new browser window.

Note

/tensorflow-tutorials contains demo notebooks.

These can be opened and executed.

Only notebooks saved in the /publish directory will be persistent.

All other files will be deleted when the app is restarted.

Save important work under /publish.

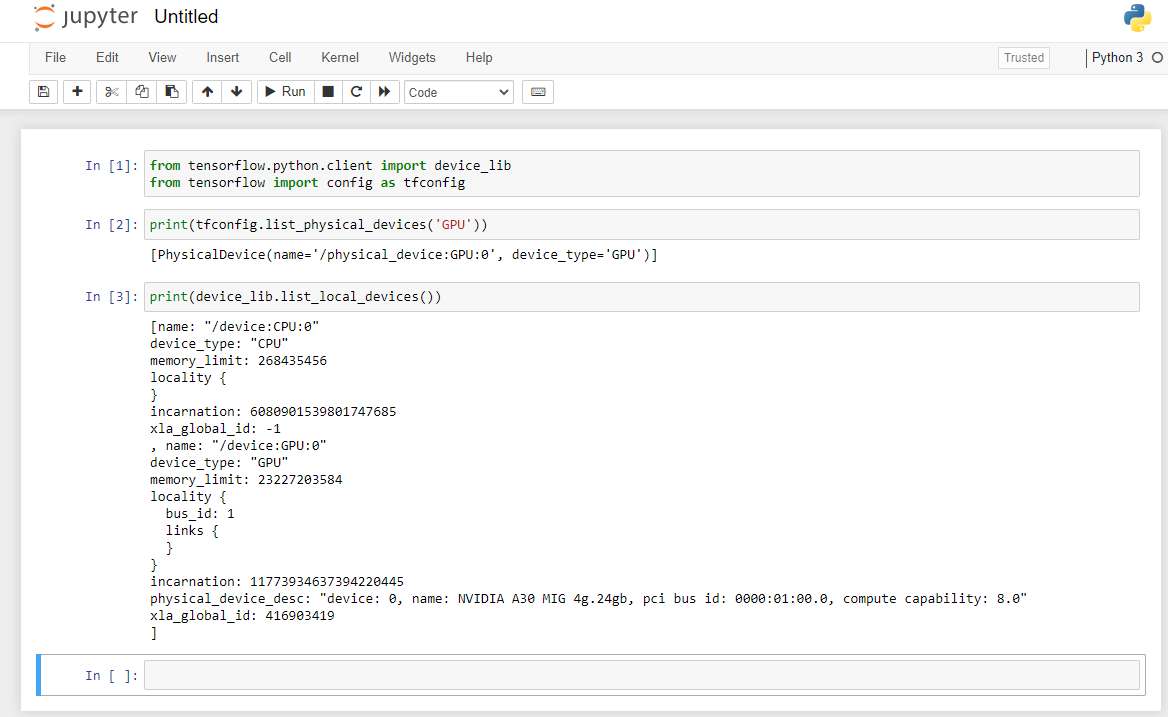

GPU presence can be tested in a freshly opened Jupyter notebook (New -> "Python 3") with print(tf.config.list_physical_devices('GPU')) after import tensorflow as tf, or with print(device_lib.list_local_devices()) after from tensorflow.python.client import device_lib.
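As a self-contained variant of this check, the following sketch can be pasted into a notebook cell. It uses only `tf.config.list_physical_devices`; the wrapper function `visible_gpus` is a name chosen for this example, and the import guard is there only so that the snippet also runs (and reports no GPUs) in an environment without TensorFlow.

```python
def visible_gpus():
    """Return the names of the GPUs TensorFlow can see (empty list if none)."""
    try:
        import tensorflow as tf
    except ImportError:
        # TensorFlow is not installed in this environment.
        return []
    return [device.name for device in tf.config.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus:
        print("GPUs visible to TensorFlow:", ", ".join(gpus))
    else:
        print("No GPU visible; check the x-resources claim and runtime: iedge")
```

An empty list inside the running app usually points back to the resource claim in the Docker Compose file rather than to TensorFlow itself.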

Thank you for your interest in contributing. Please report bugs, unclear documentation, and other problems regarding this repository in the Issues section. Additionally, feel free to propose any changes to this repository using Pull Requests.

If you haven't previously signed the Siemens Contributor License Agreement (CLA), the system will automatically prompt you to do so when you submit your Pull Request. This can be conveniently done through the CLA Assistant's online platform. Once the CLA is signed, your Pull Request will automatically be cleared and made ready for merging if all other test stages succeed.

Please read the Legal information.

IMPORTANT - PLEASE READ CAREFULLY:

This documentation describes how you can download and set up containers which consist of or contain third-party software. By following this documentation, you agree that using such third-party software is done at your own discretion and risk. No advice or information, whether oral or written, obtained by you from us or from this documentation shall create any warranty for the third-party software. Additionally, by following these descriptions or using the contents of this documentation, you agree that you are responsible for complying with all third-party licenses applicable to such third-party software. All product names, logos, and brands are property of their respective owners. All third-party company, product, and service names used in this documentation are for identification purposes only. Use of these names, logos, and brands does not imply endorsement.