Navigation RL Agent

In this Reinforcement Learning (RL) project, the Deep Q-Network (DQN) and Prioritized DQN architectures are explored to build an AI Agent that navigates a custom environment while avoiding obstacles. The RL task explored in this project is episodic.

Agent interaction with Environment in Project

Objective

The objective is to train a Reinforcement Learning Agent to autonomously traverse an enclosed environment, choosing actions that maximize its reward by collecting Yellow Bananas while minimizing the penalties incurred by gathering Blue Bananas. The bananas are spawned at random locations in the environment throughout the timesteps of an episode.

RL Environment

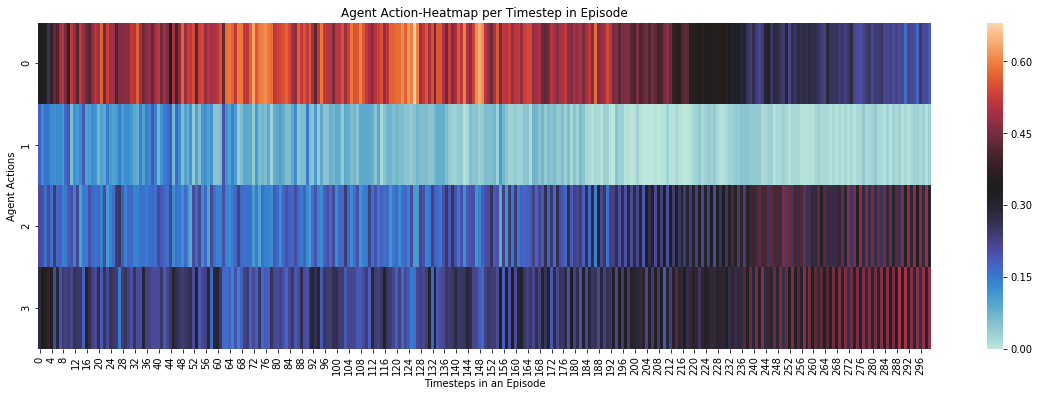

The simulated environment contains a single agent that navigates the enclosed world with the task of maximizing the number of Yellow bananas collected while avoiding Blue bananas. The task is episodic: each Episode lasts 300 timesteps. At each timestep, the Agent picks exactly one action from the Action-Space.
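The episode structure described above can be sketched as a simple interaction loop. This is only an illustration: `StubEnvironment` is a hypothetical stand-in for the real simulator (it returns placeholder observations and random rewards), and `run_episode` and `policy` are names introduced here, not taken from the project code.

```python
import random

MAX_TIMESTEPS = 300  # episode length, as stated in the text
STATE_SIZE = 37      # observation vector length, as stated in the text
ACTION_SIZE = 4      # number of discrete actions, as stated in the text


class StubEnvironment:
    """Hypothetical stand-in for the real simulator (assumption)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return [0.0] * STATE_SIZE  # placeholder observation

    def step(self, action):
        self.t += 1
        # Random reward: +1 (yellow), -1 (blue), or 0 (nothing collected).
        reward = self.rng.choice([0, 0, 0, 1, -1])
        done = self.t >= MAX_TIMESTEPS
        return [0.0] * STATE_SIZE, reward, done


def run_episode(env, policy):
    """Run one episode; return (total reward, number of timesteps)."""
    state = env.reset()
    total_reward, steps, done = 0, 0, False
    while not done:
        action = policy(state)  # exactly one action per timestep
        state, reward, done = env.step(action)
        total_reward += reward
        steps += 1
    return total_reward, steps


# A uniformly random policy over the four actions.
policy_rng = random.Random(1)
total, steps = run_episode(StubEnvironment(), lambda s: policy_rng.randrange(ACTION_SIZE))
print(f"steps={steps}, episode reward={total}")
```

A trained Agent would replace the random policy with one derived from its learned Q-values.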

Average frequency with which the Agent chooses each action (action labels: 0, 1, 2, 3) at each timestep in an Episode

State-Action Space

- The State-space of the Agent in the defined Environment is a vector of 37 float values (the Observation Space size). This Observation vector carries information about the Environment & Agent at every timestep in an Episode, such as the Agent's velocity and a ray-based perception of objects around the Agent's forward direction.

- The Action-space for the Agent has size 4, i.e. there are 4 possible actions the Agent can choose from to interact with the Environment. All 4 actions relate to navigation: Forwards, Backwards, Left, Right.
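To make the shapes concrete, here is a minimal sketch of epsilon-greedy action selection over a 37-dimensional state and 4 actions. The linear `weights` matrix is a hypothetical placeholder for the Q-network, the label-to-action order in `ACTION_NAMES` is an assumption, and the exploration strategy itself is assumed rather than confirmed by the text.

```python
import numpy as np

STATE_SIZE = 37   # observation vector length, as stated in the text
ACTION_SIZE = 4   # number of discrete actions, as stated in the text
# Assumed mapping from action labels 0..3 to action names.
ACTION_NAMES = ["forward", "backward", "left", "right"]


def select_action(q_values, epsilon, rng):
    """Epsilon-greedy: explore with probability epsilon, else act greedily."""
    if rng.random() < epsilon:
        return int(rng.integers(ACTION_SIZE))
    return int(np.argmax(q_values))


rng = np.random.default_rng(0)
state = rng.standard_normal(STATE_SIZE).astype(np.float32)

# Hypothetical linear Q-function standing in for the neural network.
weights = rng.standard_normal((STATE_SIZE, ACTION_SIZE)).astype(np.float32)
q_values = state @ weights  # one estimated value per action

action = select_action(q_values, epsilon=0.1, rng=rng)
print(action, ACTION_NAMES[action])
```

With `epsilon=0.0` the choice is purely greedy; larger values trade exploitation for exploration.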

Reward Scheme

The Agent is awarded +1 point for collecting a Yellow banana, while collecting a Blue banana incurs a penalty of -1 point. The collection mechanism is simple: the Agent gathers a banana by colliding with or touching it.

Project Observations & Results

In this task, the plain Deep-Q-Network (DQN) architecture seems to outperform a DQN with a Prioritization mechanism, reaching the target number of reward points in fewer iterations than the Prioritized DQN. This could be because of a bias introduced by the Prioritization mechanism implemented in the Agent's Experience Buffer.
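For context on where such a bias can come from, the sketch below shows proportional prioritization as it is commonly formulated in the prioritized experience replay literature: transitions are sampled with probability proportional to priority raised to `alpha`, and importance-sampling weights controlled by `beta` compensate for the non-uniform sampling. Whether this project's buffer uses this exact scheme is not stated in the text; the function names and the values of `alpha` and `beta` are illustrative assumptions.

```python
import numpy as np


def sampling_probabilities(priorities, alpha):
    """P(i) = p_i^alpha / sum_k p_k^alpha; alpha = 0 gives uniform sampling."""
    scaled = np.asarray(priorities, dtype=np.float64) ** alpha
    return scaled / scaled.sum()


def importance_weights(probs, beta):
    """w_i = (N * P(i))^(-beta), normalized so the largest weight is 1."""
    n = len(probs)
    weights = (n * probs) ** (-beta)
    return weights / weights.max()


# Illustrative priorities (e.g. absolute TD errors) for four transitions.
priorities = np.array([0.1, 0.5, 1.0, 2.0])
probs = sampling_probabilities(priorities, alpha=0.6)
weights = importance_weights(probs, beta=0.4)

print(probs)    # high-priority transitions are sampled more often
print(weights)  # and their updates are down-weighted in return
```

If the weights are omitted, or `beta` stays well below 1, the sampled updates no longer match the uniform-replay expectation, which is one possible source of the bias mentioned above.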

Results

Resulting Training Observation for the DQN RL Agent

The RL Agent improves steadily over the first 300 to 350 Episodes, after which the Reward saturates at about +13 points per episode and does not increase appreciably.
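One way to quantify a plateau like this is a moving average over per-episode scores. The sketch below is an assumption about how such a curve could be summarized, not the author's evaluation code; the window of 100 episodes, the helper names, and the synthetic scores in the usage lines are all illustrative.

```python
import numpy as np


def moving_average(scores, window=100):
    """Mean of each consecutive run of `window` scores (empty if too few)."""
    scores = np.asarray(scores, dtype=np.float64)
    if len(scores) < window:
        return np.array([])
    kernel = np.ones(window) / window
    return np.convolve(scores, kernel, mode="valid")


def first_episode_reaching(scores, target=13.0, window=100):
    """1-based episode at which the moving average first reaches `target`."""
    avg = moving_average(scores, window)
    hits = np.nonzero(avg >= target)[0]
    return int(hits[0]) + window if hits.size else None


# Synthetic scores for illustration only (not results from this project).
demo_scores = [0, 0, 0, 0, 0, 14, 14, 14, 14, 14]
print(moving_average(demo_scores, window=5))
print(first_episode_reaching(demo_scores, target=13.0, window=5))
```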