- Any browser, to view Zeppelin and the other service UIs

- Git (optional, to download the contents of this repository)

- Vagrant - download the installer for your platform and run the setup

- VirtualBox - to run the virtual Ubuntu machine with the Hadoop stack

The virtual machine will be running the following services:

- HDFS NameNode + DataNode

- YARN ResourceManager/NodeManager + JobHistoryServer + ProxyServer

- Hive Metastore and HiveServer2

- Spark history server

- Zeppelin Server

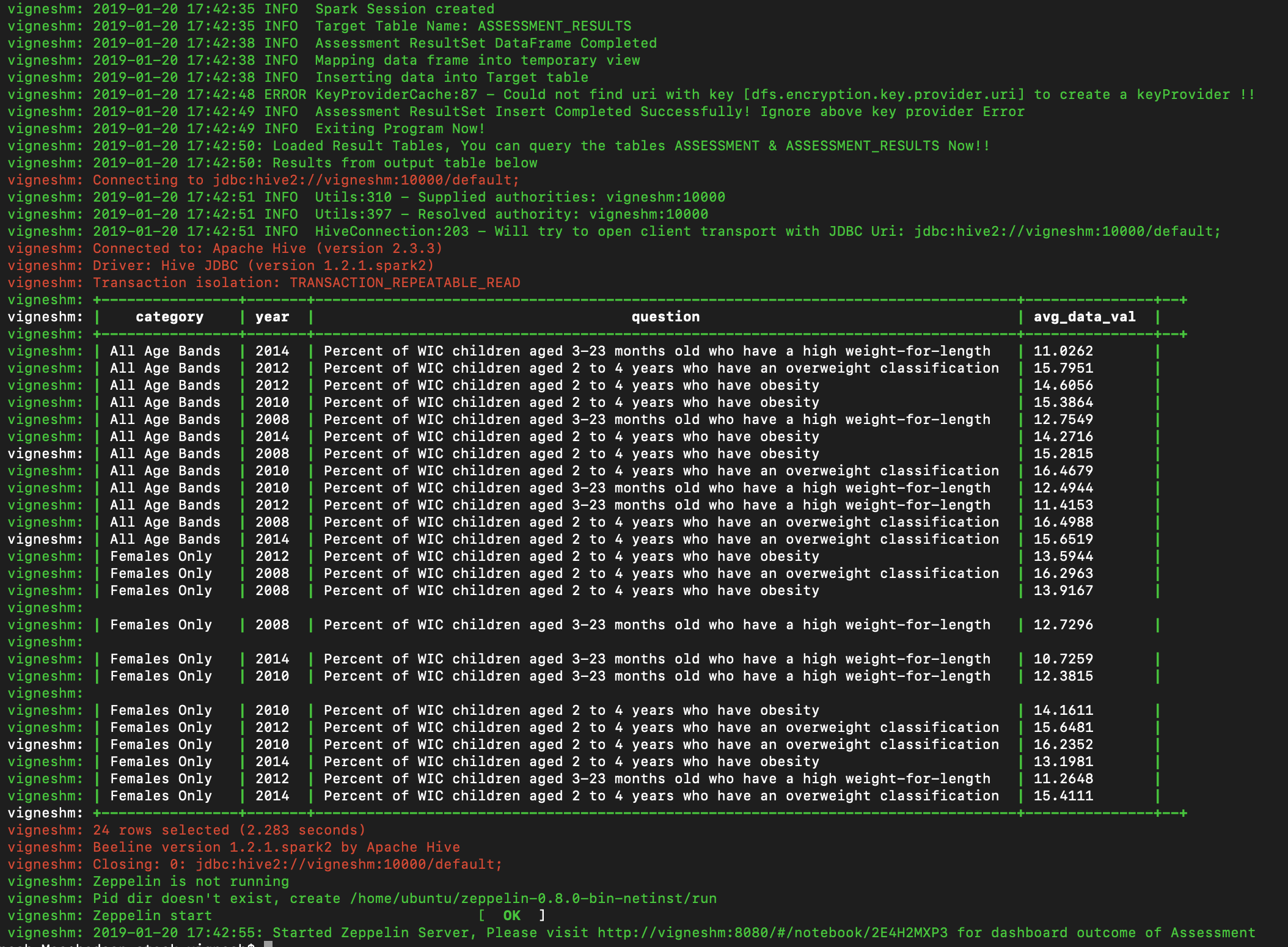

- At the end, the batch script downloads a CSV file and loads it into Hive. PySpark then populates the output table with the result dataset, which is visualized in Apache Zeppelin.

- Download and install VirtualBox & Vagrant with above given links.

- Clone this repo

git clone git@github.com:imWiki/hadoop_cust_ecosystem.git - In your terminal/cmd change your directory into the project directory (i.e.

cd hadoop_cust_ecosystem). - Run

vagrant up --provider=virtualboxto create the VM using virtualbox as a provider (NOTE This will take a while the first time as many dependencies are downloaded - subsequent deployments will be quicker as dependencies are cached in theresourcesdirectory). - Once above command is completed, by this time the data from given URL would've been loaded to Hive, You can see something like below at end of the execution i.e. Result Set Loaded into ASSESSMENT_RESULTS Target table,

- Execute

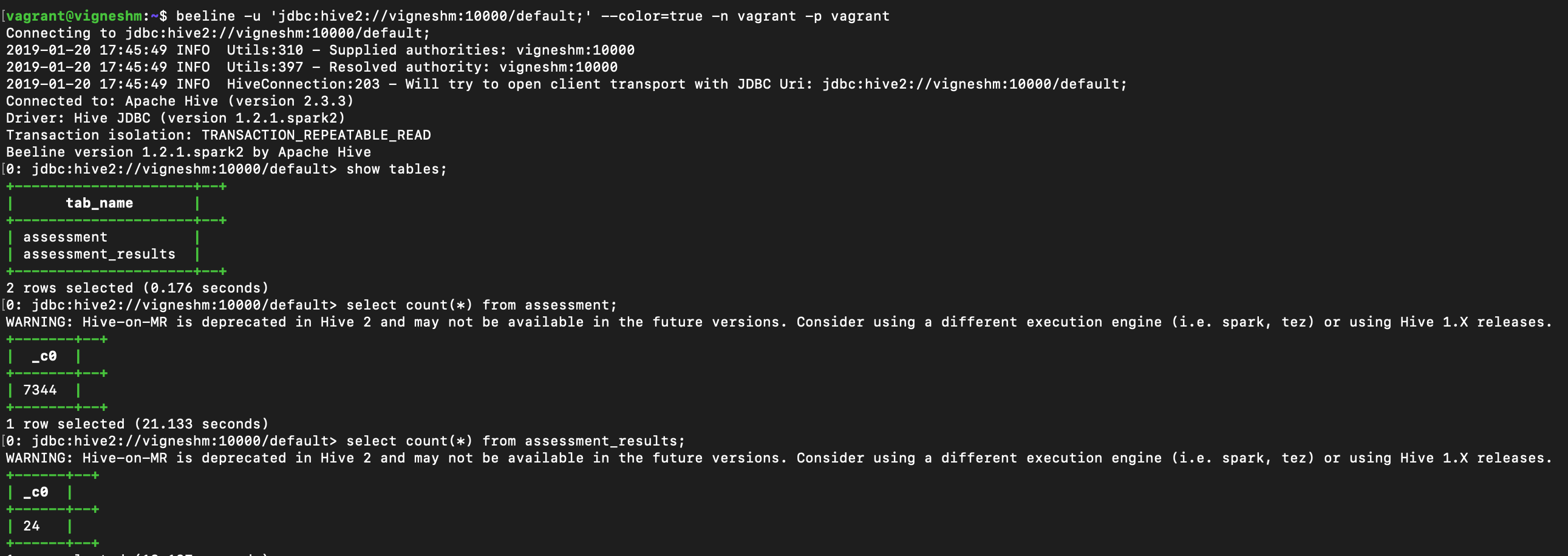

vagrant sshto login to the VM. - Execute

beeline -u 'jdbc:hive2://vigneshm:10000/default;' --color=true -n vagrant -p vagrantto login to the hive & see the tables created with requested data loaded.

- Main ETL Functionality is implemented in a shell script within the scripts directory

data_proc.sh& PySpark is written inasmt_results.py - Navigate to

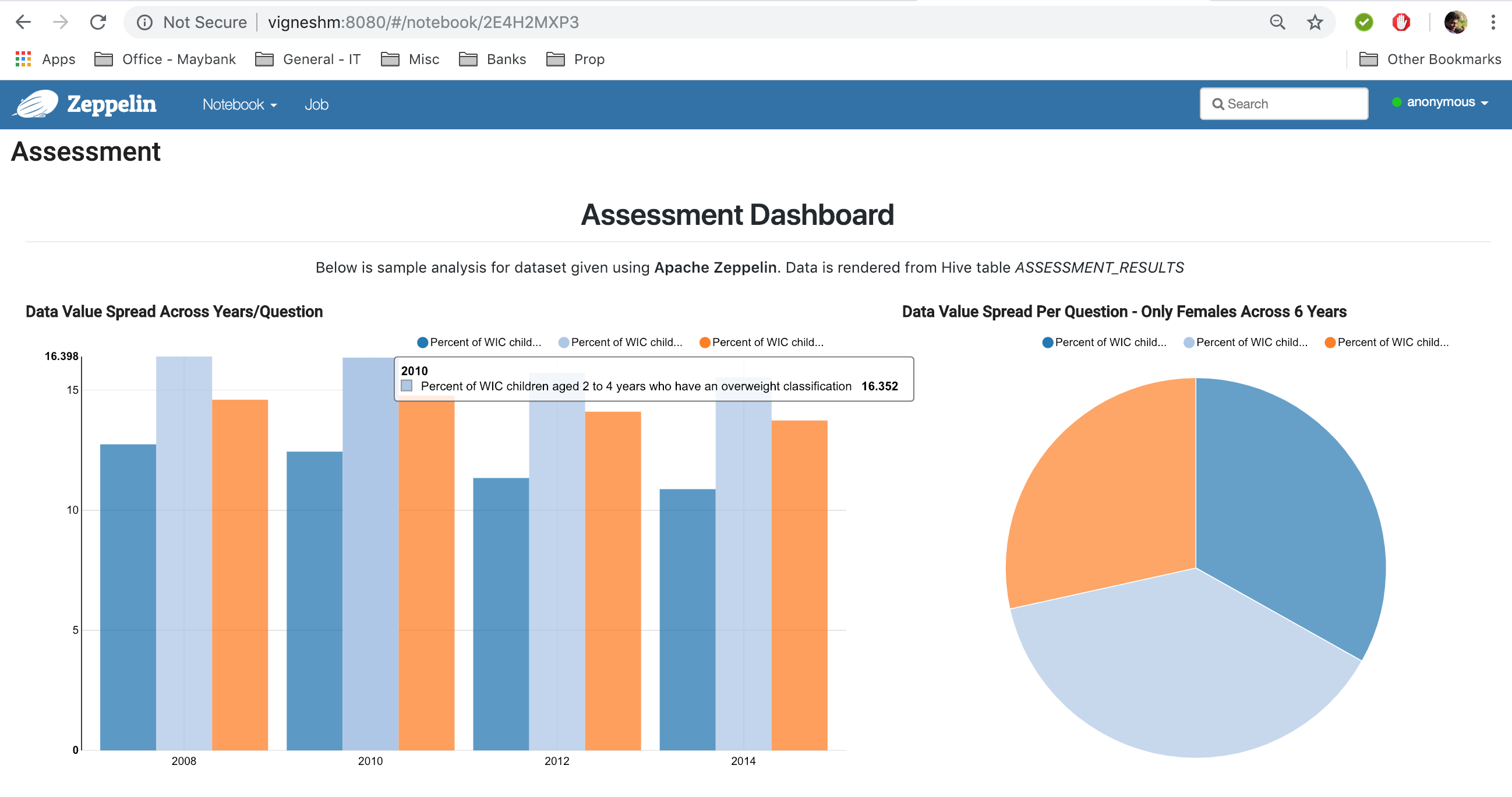

http://vigneshm:8080/#/notebook/2E4H2MXP3for simple visualization built on Zeppelin with given dataset. This is how it should look like,

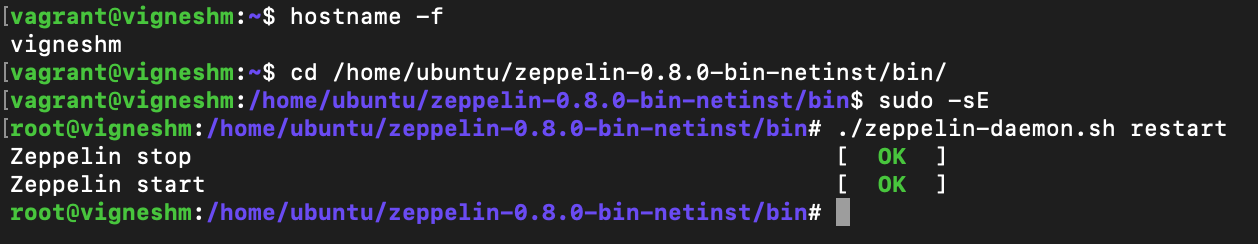

- If there are any issues running `%spark.sql` within Zeppelin dashboards, it is most likely a configuration glitch. Restart the service with the commands given below after logging in to the Vagrant virtual machine:

cd /home/ubuntu/zeppelin-0.8.0-bin-netinst/bin/

sudo -sE

./zeppelin-daemon.sh restart

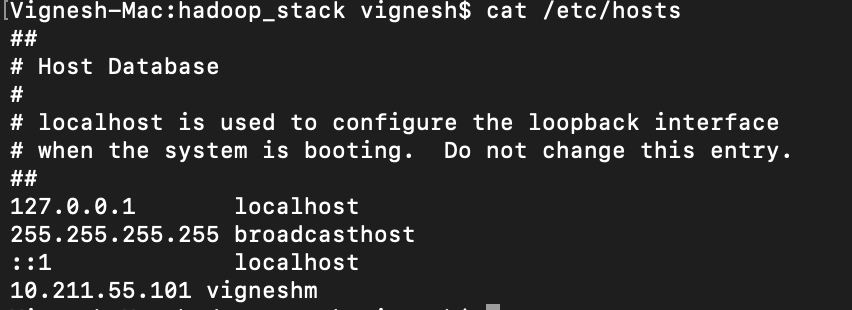

The IP address of the VirtualBox machine will be 10.211.55.101. Add an entry to the hosts file on your machine that maps this address to the hostname `vigneshm`, so that you can access the services by hostname instead of IP address in the browser.
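A minimal sketch of adding that entry on Linux or macOS follows. It assumes the hostname `vigneshm` (taken from the URLs in this README) and the usual `/etc/hosts` location; the function name `add_hosts_entry` is hypothetical and not part of this repository. On Windows the hosts file normally lives at `C:\Windows\System32\drivers\etc\hosts` and has to be edited as Administrator.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): append "IP<TAB>HOSTNAME" to a
# hosts file unless the hostname already appears somewhere in that file.
add_hosts_entry() {
  local ip="$1" host="$2" file="${3:-/etc/hosts}"
  # -w matches the hostname as a whole word; -q suppresses output
  if grep -qw -- "$host" "$file" 2>/dev/null; then
    echo "entry for $host already present in $file"
  else
    printf '%s\t%s\n' "$ip" "$host" >> "$file"
    echo "added $ip $host to $file"
  fi
}
```

The function can be tried against a scratch file first, for example `add_hosts_entry 10.211.55.101 vigneshm ./hosts.test`. Writing to the real `/etc/hosts` requires root privileges.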

Here are some URLs for the various service UIs:

- YARN resource manager: (http://vigneshm:8088)

- Job history: (http://vigneshm:19888/jobhistory/)

- HDFS: (http://vigneshm:50070/dfshealth.html)

- Spark history server: (http://vigneshm:18080)

- Spark context UI (if a Spark context is running): (http://vigneshm:4040)

Substitute the IP address of the container or VirtualBox VM for `vigneshm` if necessary.
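To check quickly whether the UIs listed above are reachable, something like the following sketch may help. The function names `service_urls` and `check_services` are hypothetical, the Zeppelin port 8080 is taken from the notebook URL earlier in this README, and the Spark context UI on port 4040 is left out because it only exists while a Spark context is running. It assumes `curl` is installed on the host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the service UI URLs for a given hostname or IP
# (defaults to the hostname used throughout this README).
service_urls() {
  local host="${1:-vigneshm}"
  printf 'http://%s:8088\n' "$host"                   # YARN resource manager
  printf 'http://%s:19888/jobhistory/\n' "$host"      # Job history
  printf 'http://%s:50070/dfshealth.html\n' "$host"   # HDFS
  printf 'http://%s:18080\n' "$host"                  # Spark history server
  printf 'http://%s:8080\n' "$host"                   # Zeppelin
}

# Hypothetical helper: request each URL and print the HTTP status next to it.
check_services() {
  local url code
  while read -r url; do
    # curl reports 000 as the status when the connection fails or times out
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    echo "$code $url"
  done < <(service_urls "$1")
}
```

Run `check_services` to use the hostname, or `check_services 10.211.55.101` to bypass the hosts file entry and use the IP address directly.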

Vagrant automatically mounts the folder containing the Vagrantfile from the host machine into the guest machine as `/vagrant`.

To stop the VM and preserve all setup/data within the VM:

vagrant halt

or

vagrant suspend

Issue a `vagrant up` command again to restart the VM from where you left off.

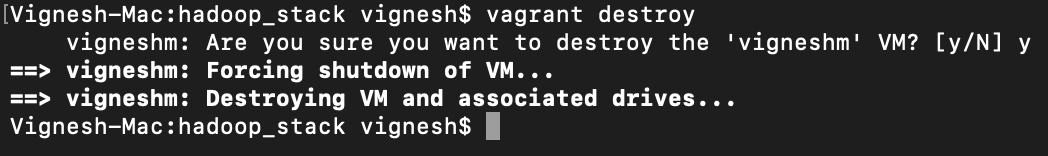

To completely wipe the VM so that the `vagrant up` command gives you a fresh machine:

vagrant destroy