ICDAR 2021 [ORAL PRESENTATION]

[ Paper ] | [ Website ]

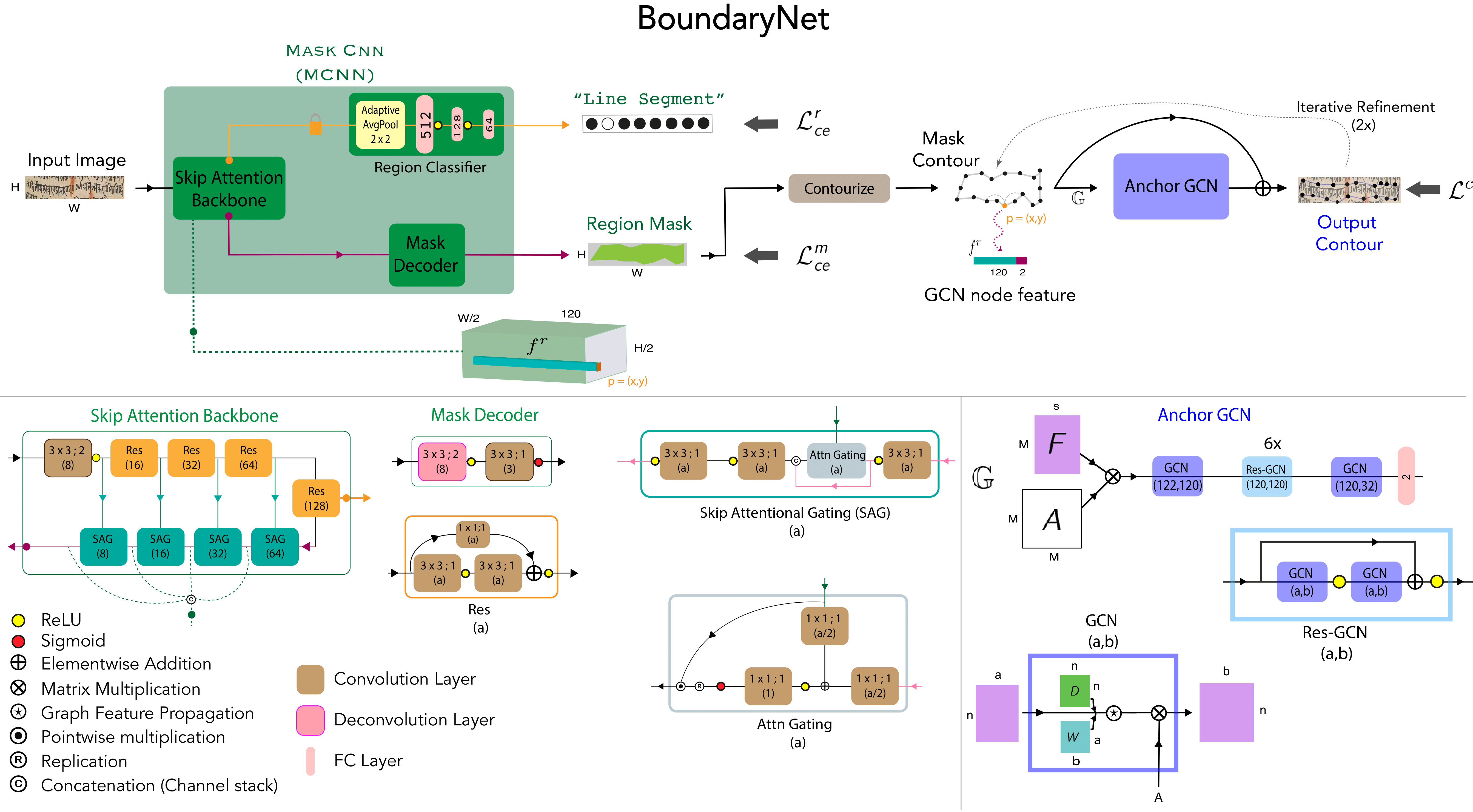

Figure: BoundaryNet architecture at different levels of abstraction: Mask-CNN, Anchor GCN.

We propose a novel resizing-free approach for high-precision semi-automatic layout annotation. The variable-sized, user-selected region of interest is first processed by an attention-guided skip network. The network optimization is guided by Fast Marching distance maps to obtain a good-quality initial boundary estimate and an associated feature representation. These outputs are then processed by a Residual Graph Convolution Network, optimized using a Hausdorff loss, to obtain the final region boundary.
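To make the Hausdorff criterion mentioned above concrete, here is a minimal sketch of the plain Hausdorff distance between two sets of 2-D boundary points. It only illustrates the metric; it is not the differentiable loss used to train the network, and the function names and toy coordinates are assumptions made for this example.

```python
import math


def directed_hausdorff(src, dst):
    """Largest distance from any point in src to its nearest point in dst."""
    return max(
        min(math.hypot(sx - dx, sy - dy) for dx, dy in dst)
        for sx, sy in src
    )


def hausdorff(a, b):
    """Symmetric Hausdorff distance between two 2-D point sets."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy example (made-up coordinates): the predicted boundary overshoots
# one corner of a unit square by 3 units.
target = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
predicted = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 4.0)]
print(hausdorff(predicted, target))  # prints 3.0
```

Because the distance is driven by the single worst-matched point, it penalizes local boundary deviations that an area-overlap score can hide.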


The BoundaryNet code is tested with

- Python (3.5.x)
- PyTorch (1.0.0)
- CUDA (10.2)

Please install dependencies by

pip install -r requirements.txt

cd CODE

- Download the Indiscapes dataset - [Dataset Link]
- Place the
  - Dataset Images under the `data` directory
  - Pretrained BNet Model weights in the `checkpoints` directory
  - JSON annotation data in the `datasets` directory
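Before training, it can help to confirm that the layout described above is in place. The following is a minimal sketch, assuming the three directories sit directly under the current working directory (for example, after `cd CODE`). The helper name `missing_dirs` is hypothetical and not part of the repository.

```python
import os

# Directory names taken from the setup steps above; their location
# relative to the working directory is an assumption.
EXPECTED_DIRS = ("data", "checkpoints", "datasets")


def missing_dirs(root, expected=EXPECTED_DIRS):
    """Return the expected sub-directories that do not exist under root."""
    return [name for name in expected
            if not os.path.isdir(os.path.join(root, name))]


if __name__ == "__main__":
    missing = missing_dirs(".")
    if missing:
        print("Missing directories: " + ", ".join(missing))
    else:
        print("All expected directories are present.")
```

The check only looks for the directories themselves; it does not verify that the images, weights, or annotation files inside them are complete.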

- MCNN:

bash Scripts/train_mcnn.sh

- Anchor GCN:

bash Scripts/train_agcn.sh

- End-to-end Fine Tuning:

bash Scripts/fine_tune.sh

- For all of the above scripts, corresponding experiment files are present in the `experiments` directory.
- Any required parameter changes can be performed in these files.

Refer to the Readme.md under the configs directory for modified baselines - CurveGCN, PolyRNN++ and DACN.

To perform inference and get quantitative results on the test set, run:

bash Scripts/test.sh

Check the qualitative results in the `visualizations/test_gcn_pred/` directory.

- Add Document-Image path and Bounding Box coordinates in the `experiments/custom_args.json` file.
- Execute -

python test_custom.py --exp experiments/custom_args.json

Check the corresponding instance-level boundary results in the `visualizations/test_custom_img/` directory.
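As an illustration of preparing the image path and bounding box for the custom-image step, the sketch below builds and prints such a record as JSON. The key names `img_path` and `bbox`, the `(x_min, y_min, x_max, y_max)` ordering, and the sample file name are all hypothetical placeholders. Consult the `experiments/custom_args.json` file shipped with the repository for the actual schema before editing it.

```python
import json


def build_custom_args(image_path, bbox):
    """Bundle an image path and a bounding box into a dict.

    The keys "img_path" and "bbox" and the (x_min, y_min, x_max, y_max)
    ordering are hypothetical; the real file may use a different schema.
    """
    x_min, y_min, x_max, y_max = bbox
    if x_min >= x_max or y_min >= y_max:
        raise ValueError("bounding box must satisfy x_min < x_max and y_min < y_max")
    return {"img_path": image_path, "bbox": [x_min, y_min, x_max, y_max]}


# Made-up sample values for illustration only.
args = build_custom_args("data/sample_page.jpg", (120, 80, 640, 410))
print(json.dumps(args, indent=2, sort_keys=True))
```

Validating the box before writing the file catches swapped corners early, which is an easy mistake when coordinates are copied by hand from an image viewer.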

- Add dataset images in the `data` folder and JSON annotations in `datasets/data_splits/`.
- Fine Tune MCNN
  - Modify parameters in the `experiments/encoder_experiment.json` file
  - Freeze the Skip Attention backbone

bash train_mcnn.sh

Check the corresponding instance-level boundary results in the `visualizations/test_encoder_pred/` directory.

- Train AGCN from scratch
  - From new MCNN model file in `checkpoints`
  - Modify the MCNN model checkpoint path in `models/combined_model.py`

bash train_agcn.sh

Check the corresponding instance-level boundary results in the `visualizations/test_gcn_pred/` directory.

If you use BoundaryNet, please use the following BibTeX entry.

@inproceedings{trivedi2021boundarynet,

title = {BoundaryNet: An Attentive Deep Network with Fast Marching Distance Maps for Semi-automatic Layout Annotation},

author = {Trivedi, Abhishek and Sarvadevabhatla, Ravi Kiran},

booktitle = {International Conference on Document Analysis Recognition, {ICDAR} 2021},

year = {2021},

}

For any queries, please contact Dr. Ravi Kiran Sarvadevabhatla.

This project is open-sourced under the MIT License.