MindSpore implementation of *Phrase-level Temporal Relationship Mining for Temporal Sentence Localization* (AAAI 2023).

@inproceedings{zheng2023phrase,
  title={Phrase-level temporal relationship mining for temporal sentence localization},
  author={Zheng, Minghang and Li, Sizhe and Chen, Qingchao and Peng, Yuxin and Liu, Yang},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={37},
  number={3},
  pages={3669--3677},
  year={2023}
}

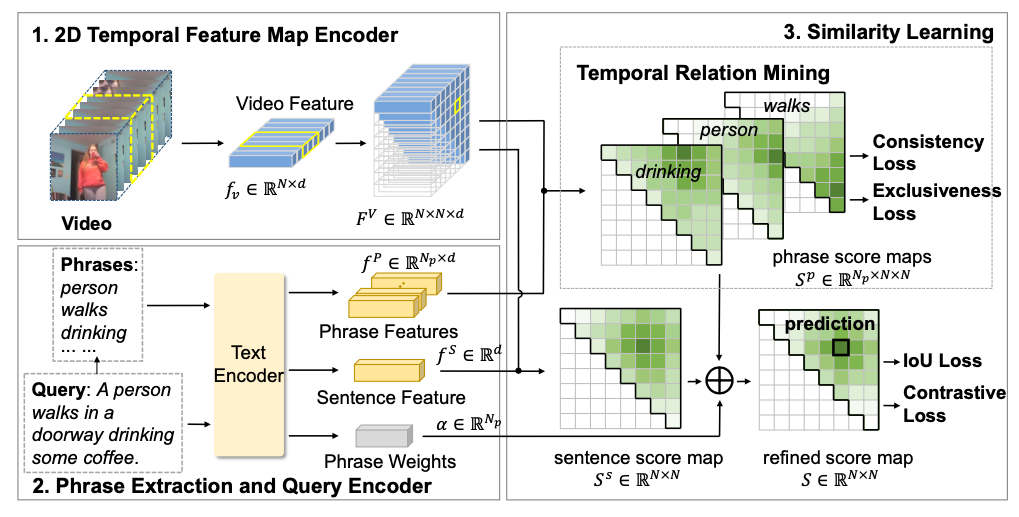

In this paper, we address the problem of video temporal sentence localization, which aims to localize a target moment in a video according to a given language query. We observe that existing models suffer a sharp performance drop when dealing with simple phrases contained in the sentence. This reveals a limitation: existing models capture only the annotation bias of the datasets and lack sufficient understanding of the semantic phrases in the query. To address this problem, we propose a phrase-level Temporal Relationship Mining (TRM) framework that exploits the temporal relationships relevant to each phrase and to the whole sentence to better understand each semantic entity in the sentence. Specifically, we use phrase-level predictions to refine the sentence-level prediction, and use Multiple Instance Learning to improve the quality of the phrase-level predictions. We also exploit consistency and exclusiveness constraints between phrase-level and sentence-level predictions to regularize the training process, thus alleviating the ambiguity of each phrase prediction. The proposed approach sheds light on how machines can understand detailed phrases in a sentence and their compositions in general, rather than learning annotation biases. Experiments on the ActivityNet Captions and Charades-STA datasets show the effectiveness of our method on both phrase and sentence temporal localization, and show that it enables better model interpretability and generalization when dealing with unseen compositions of seen concepts.
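The abstract names three ingredients: phrase-level predictions that refine the sentence-level prediction, Multiple Instance Learning (MIL) for phrase-level predictions, and consistency/exclusiveness constraints. The toy, pure-Python sketch below illustrates only the general shape of the first two over a flat list of candidate moments. It is not the paper's formulation: the convex-combination fusion rule, the `alpha` weight, the bag construction from the sentence ground truth, and the function names are all assumptions made for illustration.

```python
import math
from typing import List, Sequence


def refine_sentence_scores(sentence: Sequence[float],
                           phrases: Sequence[Sequence[float]],
                           alpha: float = 0.5) -> List[float]:
    """Blend each candidate's sentence score with its mean phrase score.

    Hypothetical fusion rule (a convex combination), chosen only for illustration.
    sentence[k] and phrases[p][k] are scores in [0, 1] for candidate moment k.
    """
    refined = []
    for k, s in enumerate(sentence):
        phrase_mean = sum(p[k] for p in phrases) / len(phrases)
        refined.append((1 - alpha) * s + alpha * phrase_mean)
    return refined


def mil_phrase_loss(phrase: Sequence[float],
                    inside_gt: Sequence[bool],
                    eps: float = 1e-6) -> float:
    """Toy MIL loss for one phrase that has no moment-level label.

    Positive bag: candidates inside the sentence's ground-truth moment
    (the phrase is assumed to occur there). Negative bag: all other candidates.
    Each bag is scored by max pooling. Assumes at least one candidate is inside.
    """
    pos = max(s for s, inside in zip(phrase, inside_gt) if inside)
    neg_scores = [s for s, inside in zip(phrase, inside_gt) if not inside]
    loss = -math.log(pos + eps)  # push the best inside candidate towards 1
    if neg_scores:
        loss += -math.log(1 - max(neg_scores) + eps)  # push the best outside one towards 0
    return loss
```

With sentence scores `[0.2, 0.6, 0.4]` and two phrases scoring `[0.1, 0.9, 0.3]` and `[0.3, 0.7, 0.1]`, `refine_sentence_scores` gives roughly `[0.2, 0.7, 0.3]`, so agreeing phrases sharpen the margin of the middle candidate. Max pooling is the classic MIL choice because only the bag, not the individual instance, carries a label; the negative-bag term loosely echoes the exclusiveness idea.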

Requirements:

- PyTorch

- MindSpore & MindFormers, installed as follows:

# mindspore
conda install mindspore=2.2.11 -c mindspore -c conda-forge

# mindformers
git clone -b dev https://gitee.com/mindspore/mindformers.git
cd mindformers
bash build.sh

- h5py

- yacs

- terminaltables

- tqdm

- transformers
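Before training, it can help to confirm that every package above is importable. The sketch below uses only the standard library; the helper names `check_modules` and `installed_version` are hypothetical, and it assumes the usual top-level import names (`torch` for PyTorch).

```python
import importlib.util
from importlib import metadata
from typing import Dict, Iterable, Optional

# Top-level import names for the requirements listed above (torch is PyTorch).
REQUIRED_MODULES = ("torch", "mindspore", "mindformers", "h5py", "yacs",
                    "terminaltables", "tqdm", "transformers")


def check_modules(names: Iterable[str] = REQUIRED_MODULES) -> Dict[str, bool]:
    """Map each top-level module name to whether Python can locate it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed distribution version, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    for name, ok in check_modules().items():
        print(f"{name:15s} {'ok' if ok else 'MISSING'}")
    # The conda command above pins MindSpore 2.2.11.
    print("mindspore version:", installed_version("mindspore"))
```

`find_spec` locates a module without importing it, so the check stays fast even for heavy packages such as `torch`.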

We use C3D features for the ActivityNet Captions dataset. Please download them from here and save them as `dataset/ActivityNet/sub_activitynet_v1-3.c3d.hdf5`. For the Charades-STA dataset, we use the VGG features provided by 2D-TAN, which can be downloaded from here; please save them as `dataset/Charades-STA/vgg_rgb_features.hdf5`.
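A quick way to catch a misplaced download is to check the two expected paths from the repository root. This is a hypothetical helper (`missing_feature_files` is not part of the repository); the paths are the ones given above.

```python
from pathlib import Path
from typing import List

# Expected feature locations relative to the repository root, as stated in this README.
FEATURE_FILES = {
    "ActivityNet Captions": "dataset/ActivityNet/sub_activitynet_v1-3.c3d.hdf5",
    "Charades-STA": "dataset/Charades-STA/vgg_rgb_features.hdf5",
}


def missing_feature_files(repo_root: str = ".") -> List[str]:
    """Return the expected feature paths that are not regular files under repo_root."""
    root = Path(repo_root)
    return [rel for rel in FEATURE_FILES.values() if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_feature_files()
    if missing:
        print("Missing feature files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All feature files found.")
```

Once the files are in place, `h5py.File(path, "r")` can be used to list the stored keys; no particular internal layout of the HDF5 files is assumed here.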

To train on the ActivityNet Captions dataset:

python train_net.py --config-file configs/activitynet.yaml OUTPUT_DIR outputs/activitynet

To train on the Charades-STA dataset:

python train_net.py --config-file configs/charades.yaml OUTPUT_DIR outputs/charade

You can change options such as the GPU ID and the configuration file in the shell scripts.
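When launching runs from Python (for example, from a sweep script), the two training commands above can be rebuilt as argument lists. The names `TRAIN_SETTINGS`, `train_command` and `run_training` are hypothetical, and the sketch assumes `python` on `PATH` resolves to the environment set up above.

```python
import shlex
import subprocess
from typing import List, Optional

# Config files and default output directories exactly as in the commands above.
TRAIN_SETTINGS = {
    "activitynet": ("configs/activitynet.yaml", "outputs/activitynet"),
    "charades": ("configs/charades.yaml", "outputs/charade"),
}


def train_command(dataset: str, output_dir: Optional[str] = None) -> List[str]:
    """Build the train_net.py argument list for one dataset.

    OUTPUT_DIR is passed as a trailing KEY VALUE pair, as in the README commands.
    """
    config, default_out = TRAIN_SETTINGS[dataset]
    return ["python", "train_net.py", "--config-file", config,
            "OUTPUT_DIR", output_dir or default_out]


def run_training(dataset: str, output_dir: Optional[str] = None) -> None:
    """Run training from the repository root; raises CalledProcessError on failure."""
    subprocess.run(train_command(dataset, output_dir), check=True)


if __name__ == "__main__":
    # Print the commands instead of running them.
    for name in TRAIN_SETTINGS:
        print(shlex.join(train_command(name)))
```

Passing a list to `subprocess.run` avoids shell quoting issues, and `shlex.join` turns the list back into a copy-pasteable command line.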

Run the following command for ActivityNet Captions evaluation:

python test_net.py --config configs/activitynet.yaml --ckpt trm_act_e8_all.ckpt

Run the following command for Charades-STA evaluation:

python test_net.py --config configs/charades.yaml --ckpt trm_charades_e9_all.ckpt
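This README does not list the metrics that `test_net.py` reports. Temporal sentence localization is commonly evaluated with Recall@n at a temporal IoU threshold m ("R@n, IoU=m"): a query counts as a hit if any of its top-n predicted moments overlaps the ground truth with IoU of at least m. The minimal sketch below shows that computation; the function names are hypothetical and segments are assumed to be `(start, end)` pairs in seconds.

```python
from typing import Sequence, Tuple

Segment = Tuple[float, float]  # (start, end), assumed to be in seconds


def temporal_iou(pred: Segment, gt: Segment) -> float:
    """Intersection over union of two 1-D segments."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    # The hull length equals the true union whenever the segments overlap;
    # when they do not, inter is 0 and the IoU is 0 regardless.
    union = max(pred[1], gt[1]) - min(pred[0], gt[0])
    return inter / union if union > 0 else 0.0


def recall_at_n(ranked_preds: Sequence[Sequence[Segment]],
                gts: Sequence[Segment],
                n: int = 1,
                iou_threshold: float = 0.5) -> float:
    """Fraction of queries whose top-n predictions include a hit (assumes gts is non-empty)."""
    hits = 0
    for preds, gt in zip(ranked_preds, gts):
        if any(temporal_iou(p, gt) >= iou_threshold for p in preds[:n]):
            hits += 1
    return hits / len(gts)
```

For example, with predictions `[[(0, 10), (20, 30)], [(0, 2), (8, 10)]]` and ground truths `[(1, 10), (8, 10)]`, R@1 at IoU 0.5 is 0.5 and R@2 is 1.0, because the second query's correct moment is ranked second.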

To convert checkpoint parameters with param_convert.py:

- Change the checkpoint path in `param_convert.py`.

- Run the command:

python param_convert.py --config-file configs/charades.yaml  # or configs/activitynet.yaml
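This README does not describe what `param_convert.py` does internally. If, as the listed PyTorch dependency suggests, it maps PyTorch parameters to MindSpore ones, a central step is renaming: MindSpore's BatchNorm and LayerNorm cells call their parameters `gamma`/`beta` and `moving_mean`/`moving_variance`, while dense and convolution layers keep `weight`/`bias`. The sketch below shows only that renaming step; the rule table, `convert_param_names`, and the caller-supplied set of normalization-layer prefixes are assumptions, not the script's actual logic.

```python
from typing import Dict, Iterable, Set

# Hypothetical renaming rules for normalization layers: PyTorch leaf name -> MindSpore leaf name.
NORM_RENAMES = {
    "weight": "gamma",
    "bias": "beta",
    "running_mean": "moving_mean",
    "running_var": "moving_variance",
}


def convert_param_names(torch_names: Iterable[str],
                        norm_prefixes: Set[str]) -> Dict[str, str]:
    """Map PyTorch state_dict keys to MindSpore-style parameter names.

    norm_prefixes lists module paths (e.g. "encoder.bn") that are normalization
    layers; only their parameters are renamed. BatchNorm's num_batches_tracked
    buffer has no MindSpore counterpart, so it is dropped.
    """
    mapping = {}
    for name in torch_names:
        prefix, _, leaf = name.rpartition(".")
        if leaf == "num_batches_tracked":
            continue
        if prefix in norm_prefixes and leaf in NORM_RENAMES:
            mapping[name] = f"{prefix}.{NORM_RENAMES[leaf]}"
        else:
            mapping[name] = name  # e.g. Linear/Dense weight and bias keep their names
    return mapping
```

With real tensors, the renamed pairs could then be written out with `mindspore.save_checkpoint`, which accepts a list of `{"name": ..., "data": Tensor}` dicts; whether `param_convert.py` works this way is not stated here.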