Dynamic Risk Assessment System

The fourth project for the ML DevOps Engineer Nanodegree by Udacity.

Description

This project is part of Unit 5: Machine Learning Model Scoring and Monitoring. The goal is to create, deploy, and monitor a risk assessment ML model that estimates the attrition risk of each of the company's clients. It also sets up processes to re-train, re-deploy, monitor, and report on the ML model.

Prerequisites

- Python 3

- A Linux environment may be needed; on Windows, it is available through WSL

Dependencies

This project's dependencies are listed in the requirements.txt file.

Installation

Use the pip package manager to install the dependencies from requirements.txt. It's recommended to install them in a separate virtual environment.

pip install -r requirements.txt

Project Structure

📦Dynamic-Risk-Assessment-System

┣

┣ 📂data

┃ ┣ 📂ingesteddata # Contains csv and metadata of the ingested data

┃ ┃ ┣ 📜finaldata.csv

┃ ┃ ┗ 📜ingestedfiles.txt

┃ ┣ 📂practicedata # Data used for practice mode initially

┃ ┃ ┣ 📜dataset1.csv

┃ ┃ ┗ 📜dataset2.csv

┃ ┣ 📂sourcedata # Data used for production mode

┃ ┃ ┣ 📜dataset3.csv

┃ ┃ ┗ 📜dataset4.csv

┃ ┗ 📂testdata # Test data

┃ ┃ ┗ 📜testdata.csv

┣ 📂model

┃ ┣ 📂models # Models pickle, score, and reports for production mode

┃ ┃ ┣ 📜apireturns.txt

┃ ┃ ┣ 📜confusionmatrix.png

┃ ┃ ┣ 📜latestscore.txt

┃ ┃ ┣ 📜summary_report.pdf

┃ ┃ ┗ 📜trainedmodel.pkl

┃ ┣ 📂practicemodels # Models pickle, score, and reports for practice mode

┃ ┃ ┣ 📜apireturns.txt

┃ ┃ ┣ 📜confusionmatrix.png

┃ ┃ ┣ 📜latestscore.txt

┃ ┃ ┣ 📜summary_report.pdf

┃ ┃ ┗ 📜trainedmodel.pkl

┃ ┗ 📂production_deployment # Deployed models and model metadata needed

┃ ┃ ┣ 📜ingestedfiles.txt

┃ ┃ ┣ 📜latestscore.txt

┃ ┃ ┗ 📜trainedmodel.pkl

┣ 📂src

┃ ┣ 📜apicalls.py # Runs app endpoints

┃ ┣ 📜app.py # Flask app

┃ ┣ 📜config.py # Config file for the project which depends on config.json

┃ ┣ 📜deployment.py # Model deployment script

┃ ┣ 📜diagnostics.py # Model diagnostics script

┃ ┣ 📜fullprocess.py # Process automation

┃ ┣ 📜ingestion.py # Data ingestion script

┃ ┣ 📜pretty_confusion_matrix.py # Plots confusion matrix

┃ ┣ 📜reporting.py # Generates confusion matrix and PDF report

┃ ┣ 📜scoring.py # Scores trained model

┃ ┣ 📜training.py # Model training

┃ ┗ 📜wsgi.py

┣ 📜config.json # Config json file

┣ 📜cronjob.txt # Holds cronjob created for automation

┣ 📜README.md

┗ 📜requirements.txt # Project's required dependencies

Steps Overview

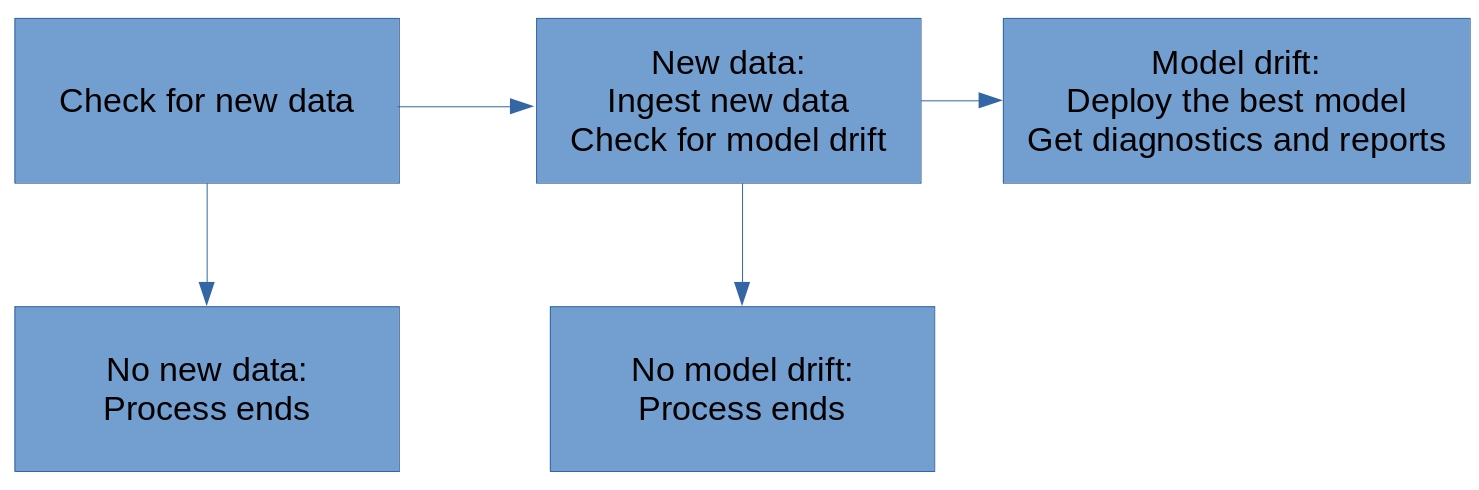

- Data ingestion: Automatically check for new data that can be used for model training. Compile all training data into a single training dataset and save it to a folder.

- Training, scoring, and deploying: Write scripts that train an ML model that predicts attrition risk and score the model. Save the model and the scoring metrics.

- Diagnostics: Determine and save summary statistics related to a dataset. Time the performance of some functions. Check for dependency changes and package updates.

- Reporting: Automatically generate plots and a PDF document that report on model metrics and diagnostics. Provide an API endpoint that can return model predictions and metrics.

- Process Automation: Create a script and cron job that automatically run all previous steps at regular intervals.
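The decision logic behind the automation step can be sketched roughly as follows. This is a minimal illustration, not the contents of fullprocess.py: the function names are hypothetical, ingestedfiles.txt is assumed to hold one file name per line, and "drift" is assumed to mean that a newly computed score is lower than the deployed one.

```python
from pathlib import Path


def find_new_files(source_dir, ingested_record):
    """Return CSV names in source_dir that are not listed in ingested_record.

    Assumption: the record file holds one ingested file name per line.
    """
    record = Path(ingested_record)
    already_ingested = set()
    if record.exists():
        lines = record.read_text().splitlines()
        already_ingested = {line.strip() for line in lines if line.strip()}
    candidates = Path(source_dir).glob("*.csv")
    return sorted(p.name for p in candidates if p.name not in already_ingested)


def has_model_drift(new_score, deployed_score):
    """Assumed rule: drift means the new score is lower than the deployed one."""
    return new_score < deployed_score


def decide_next_steps(new_files, new_score, deployed_score):
    """Return the scripts to run this cycle, in order (hypothetical policy)."""
    if not new_files:
        return []  # nothing new to ingest, so stop early
    steps = ["ingestion.py"]
    if has_model_drift(new_score, deployed_score):
        steps += ["training.py", "scoring.py", "deployment.py",
                  "diagnostics.py", "reporting.py"]
    return steps
```

With this policy, a cycle with no new files does nothing, and re-training only happens when new data arrives and the score has dropped.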

Usage

1- Edit config.json file to use practice data

"input_folder_path": "practicedata",

"output_folder_path": "ingesteddata",

"test_data_path": "testdata",

"output_model_path": "practicemodels",

"prod_deployment_path": "production_deployment"

2- Run data ingestion

cd src

python ingestion.py

Artifacts output:

data/ingesteddata/finaldata.csv

data/ingesteddata/ingestedfiles.txt
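As an illustration of what the ingestion step produces, the sketch below merges every CSV in an input folder into finaldata.csv and records the ingested file names in ingestedfiles.txt. It uses only the standard library and is not the project's ingestion.py. The function name, the exact-duplicate removal, and the one-name-per-line record format are assumptions.

```python
import csv
from pathlib import Path


def merge_csv_files(input_dir, output_dir):
    """Merge all CSVs in input_dir into output_dir/finaldata.csv.

    Returns the number of unique data rows written.
    """
    input_dir, output_dir = Path(input_dir), Path(output_dir)
    output_dir.mkdir(parents=True, exist_ok=True)

    header, rows, seen, ingested = None, [], set(), []
    for csv_path in sorted(input_dir.glob("*.csv")):
        with csv_path.open(newline="") as handle:
            reader = csv.reader(handle)
            file_header = next(reader, None)
            if file_header is None:
                continue  # skip empty files
            if header is None:
                header = file_header
            elif file_header != header:
                raise ValueError(f"{csv_path.name} has a different header")
            for row in reader:
                key = tuple(row)
                if key not in seen:  # drop exact duplicate rows
                    seen.add(key)
                    rows.append(row)
        ingested.append(csv_path.name)

    with (output_dir / "finaldata.csv").open("w", newline="") as handle:
        writer = csv.writer(handle)
        if header is not None:
            writer.writerow(header)
        writer.writerows(rows)

    # Assumed record format: one ingested file name per line.
    (output_dir / "ingestedfiles.txt").write_text("\n".join(ingested) + "\n")
    return len(rows)
```

For example, `merge_csv_files("data/practicedata", "data/ingesteddata")` would write both artifacts listed above, assuming the script is run from the repository root.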

3- Model training

python training.py

Artifacts output:

models/practicemodels/trainedmodel.pkl

4- Model scoring

python scoring.py

Artifacts output:

models/practicemodels/latestscore.txt
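The README does not state which metric is written to latestscore.txt. Assuming it is an F1 score for a binary attrition label, the computation could look like the sketch below. Both function names are hypothetical, and writing the score as a single line of plain text is also an assumption.

```python
from pathlib import Path


def f1_score(y_true, y_pred, positive=1):
    """Compute the F1 score for a binary classification task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # avoids division by zero when nothing is predicted correctly
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def save_score(score, path):
    """Write the score as a single line of text (assumed file format)."""
    Path(path).write_text(f"{score}\n")
```

A single numeric score in a text file is easy to compare against the deployed model's score later in the automated process.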

5- Model deployment

python deployment.py

Artifacts output:

models/production_deployment/ingestedfiles.txt

models/production_deployment/trainedmodel.pkl

models/production_deployment/latestscore.txt
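Since the three deployment artifacts share names with files produced by the earlier steps, deployment can be pictured as a copy operation. The sketch below is one way to do that with the standard library. The function name and its parameters are hypothetical, and the real deployment.py may work differently.

```python
import shutil
from pathlib import Path


def deploy_artifacts(ingested_dir, model_dir, deployment_dir):
    """Copy the ingestion record, model, and score into deployment_dir."""
    deployment_dir = Path(deployment_dir)
    deployment_dir.mkdir(parents=True, exist_ok=True)

    sources = [
        Path(ingested_dir) / "ingestedfiles.txt",
        Path(model_dir) / "trainedmodel.pkl",
        Path(model_dir) / "latestscore.txt",
    ]
    copied = []
    for source in sources:
        target = deployment_dir / source.name
        shutil.copy2(source, target)  # copy2 also preserves file timestamps
        copied.append(target)
    return copied
```

Copying rather than moving keeps the original training outputs in place, so the deployed model can be compared against a newly trained one.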

6- Run diagnostics

python diagnostics.py

7- Run reporting

python reporting.py

Artifacts output:

models/practicemodels/confusionmatrix.png

models/practicemodels/summary_report.pdf
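The numbers behind a confusion matrix plot are simple counts of (actual, predicted) label pairs. The sketch below shows that counting step only, without plotting. It assumes binary labels 0 and 1, and the function names are hypothetical rather than taken from reporting.py or pretty_confusion_matrix.py.

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Count how often each (actual, predicted) label pair occurs."""
    return Counter(zip(y_true, y_pred))


def as_matrix(counts, labels=(0, 1)):
    """Arrange the counts as rows of actual labels by columns of predicted labels."""
    return [[counts[(actual, predicted)] for predicted in labels]
            for actual in labels]
```

For instance, `as_matrix(confusion_counts([1, 0, 1, 1, 0], [1, 0, 0, 1, 1]))` gives `[[1, 1], [1, 2]]`: one true negative, one false positive, one false negative, and two true positives.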

8- Run Flask App

python app.py

9- Run API endpoints

python apicalls.py

Artifacts output:

models/practicemodels/apireturns.txt

10- Edit config.json file to use production data

"input_folder_path": "sourcedata",

"output_folder_path": "ingesteddata",

"test_data_path": "testdata",

"output_model_path": "models",

"prod_deployment_path": "production_deployment"

11- Full process automation

python fullprocess.py

12- Cron job

Start cron service

sudo service cron start

Edit crontab file

sudo crontab -e

- Select option 3 to edit the file using the vim text editor

- Press i to insert a cron job

- Write the cron job from cronjob.txt, which runs fullprocess.py every 10 minutes

- To save after editing, press the Esc key, then type :wq and press Enter

View crontab file

sudo crontab -l

License

Distributed under the MIT License. See LICENSE for more information.

Resources

- Flask

- ReportLab