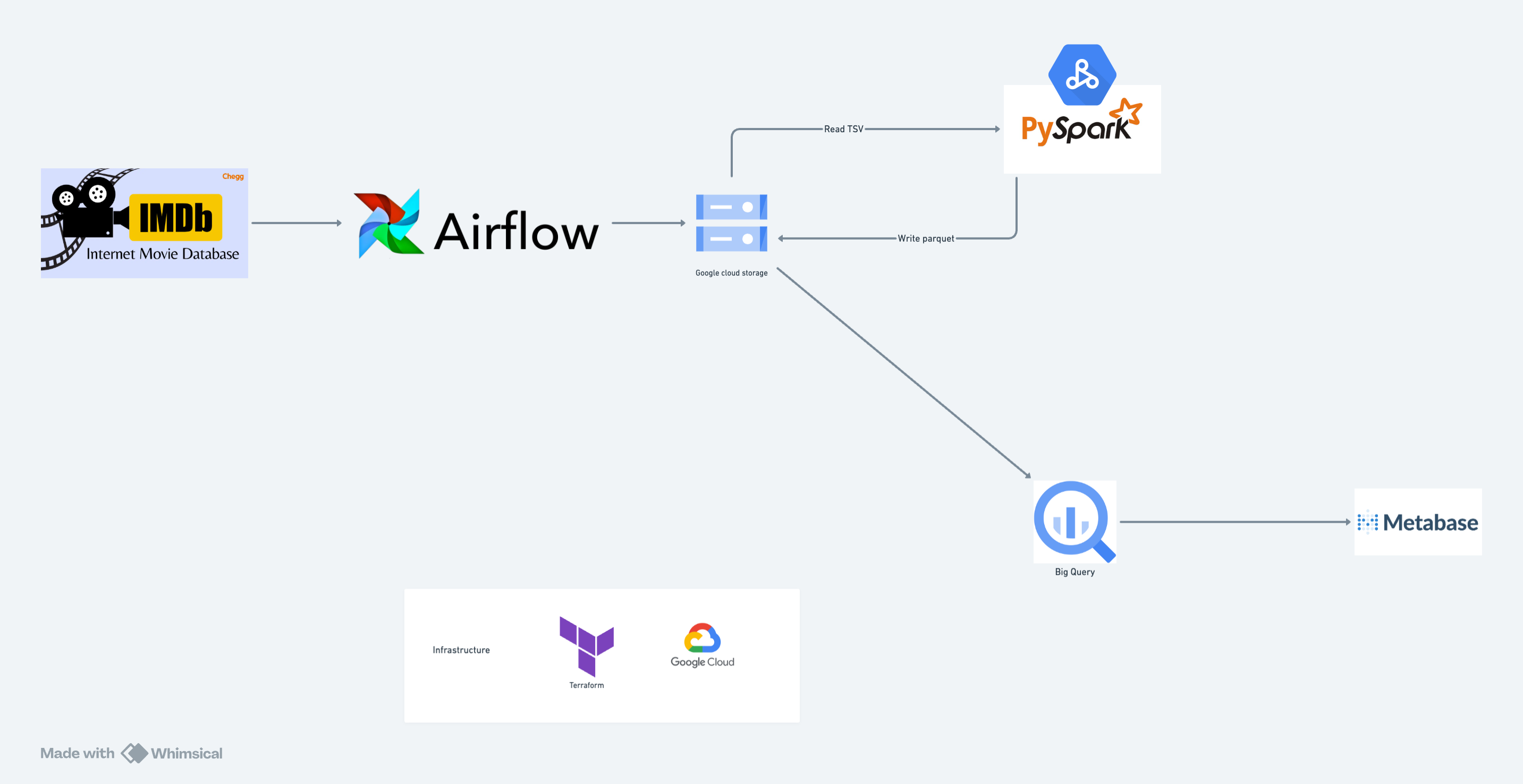

This marks the second project within the Data Engineering Zoomcamp curriculum. In this project, we'll demonstrate a pipeline designed to handle batch processing of data sourced from IMDb Non-Commercial Datasets.

IMDb, aka Internet Movie Database, serves as an extensive online repository of data concerning movies, TV shows, and video games. It encompasses a comprehensive array of details, including cast and crew information, plot summaries, ratings, and reviews, rendering it an indispensable resource for both movie enthusiasts and professionals in the industry.

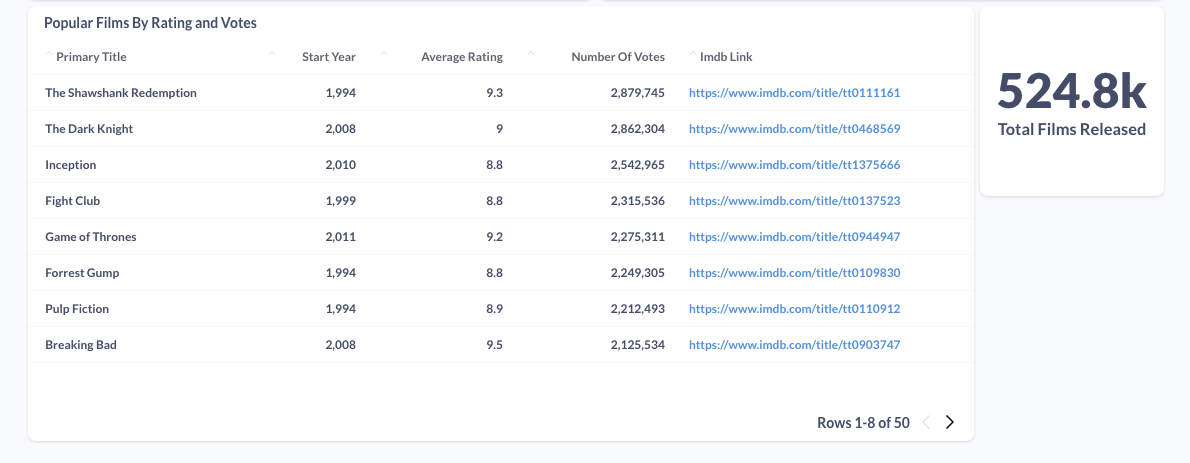

Our primary objective is to establish a robust data pipeline that retrieves raw data from the IMDB dataset, stores it, enhances its quality, and presents it in an intuitive dashboard. This pipeline enables us to address various inquiries, such as the distribution of films across genres, the duration of the longest movie in hours, the proportion of films within different categories, the total count of movies in the database, and the distribution of films by release years, among others.

As per the IMDB data specifications, the dataset undergoes daily updates. Consequently, our data pipeline is scheduled to execute daily at 1pm UTC time to ensure the integration of the latest data into our system.

- Dataset repo: IMDB

- Infrastructure as Code: Terraform

- Workflow Orchestration: Airflow

- Data Lake: Google Cloud Storage

- Data Warehouse: Google BigQuery

- Managed service for Apache Spark: Google Dataproc

- Transformation: PySpark

- Visualisation: Metabase

- Programming Language: Python and SQL

The cloud infrastructure has been established using Terraform, providing a scalable and reliable foundation for our applications and services. Meanwhile, Airflow, our workflow orchestration tool, is operational within a local Docker container.

- A Google Cloud Platform account.

- Install VSCode or Zed or any other IDE that works for you.

- Install Terraform

- Install Docker Desktop

- Install Google Cloud SDK

- Clone this repository onto your local machine.

-

Go to Google Cloud and create a new project.

-

Get the project ID and define the environment variables

GCP_PROJECT_IDin the .env file located in the root directory -

Create a Service account with the following roles:

BigQuery AdminStorage AdminViewerCompute AdminDataproc Administrator

-

Download the Service Account credentials and store it in

$HOME/.google/credentials/. -

You need to activate the following APIs here

- Cloud Storage API

- BigQuery API

-

Assign the

GOOGLE_APPLICATION_CREDENTIALSenvironment variable to the path of your JSON credentials file, such thatGOOGLE_APPLICATION_CREDENTIALSwill be $HOME/.google/credentials/<authkeys_filename>.json- add this line to the end of the

.bashrcfile

export GOOGLE_APPLICATION_CREDENTIALS=${HOME}/.google/google_credentials.json

- Activate the enviroment variable by runing

source .bashrc

- Using Zed or VSCode, open the cloned project

IMDB-pipeline-project. - To customize the default values of

variable "project"andvariable "region"to your preferred project ID and region, you have two options: either edit the variables.tf file in Terraform directly and modify the values, or set the environment variablesTF_VAR_projectandTF_VAR_region. - Open the terminal to the root project.

- Navigate to the root directory of the project in the terminal and then change the directory to the terraform folder using the command

cd terraform. - Set an alias

alias tf='terraform' - Initialise Terraform:

tf init - Plan the infrastructure:

tf plan - Apply the changes:

tf apply

- add this line to the end of the

- Please confirm that the following environment variables are configured in

.envin the root directory of the project.AIRFLOW_UID. The default value is 50000GCP_PROJECT_ID. This should be set from Create a Google Cloud Project sectionGCP_IMDB_BUCKET=imdb_datalake_<GCP project id>GCP_PROJECT_REGION. The location where the Data Proc is deployedGCP_IMDB_WH_DATASET=imdb_analyticsGCP_IMDB_DATAPROC_TEMP_BUCKET. Review the Google Cloud Storage (GCS) section in the console to find the temp bucket name for Data Proc. This is the value you need here.

- Run

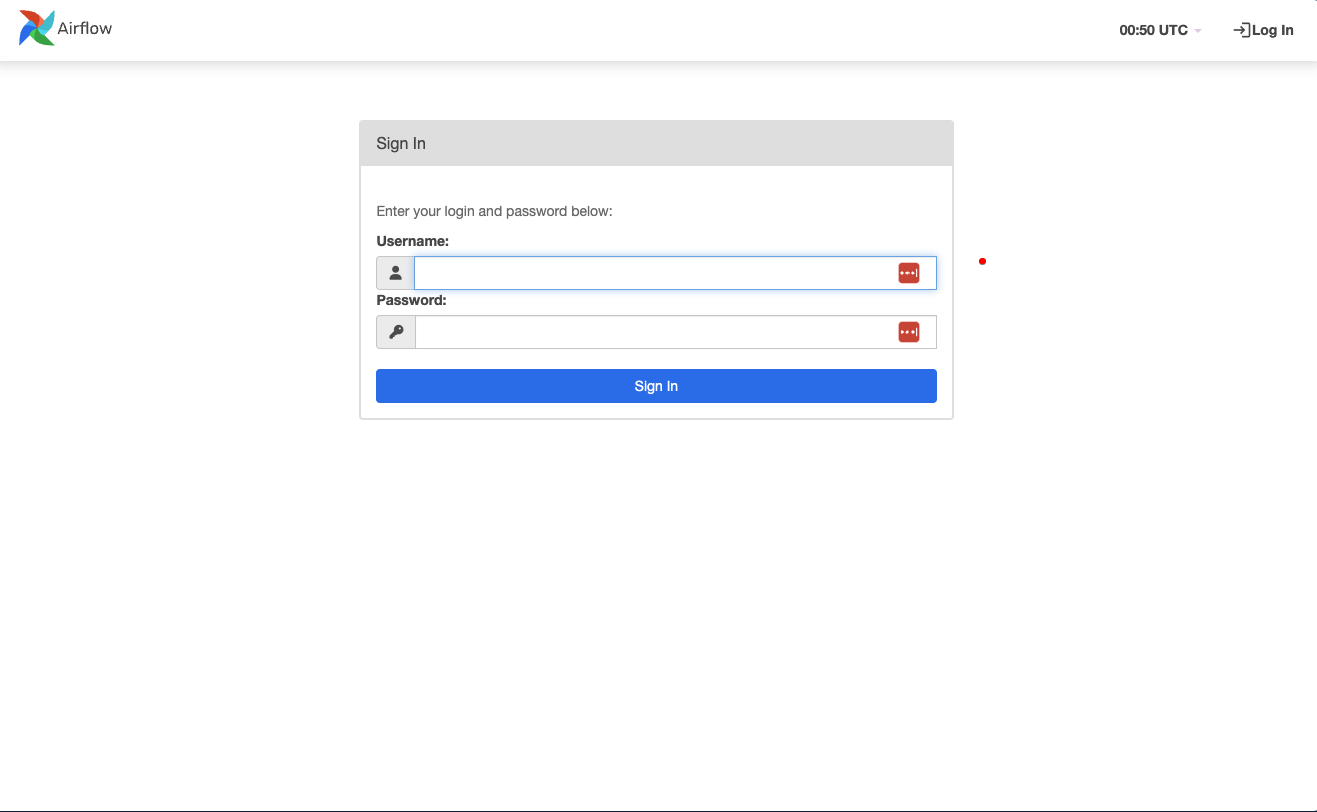

docker-compose up. - Access the Airflow dashboard by visiting

http://localhost:8080/in your web browser. The interface will resemble the following. Use the username and password airflow to log in.

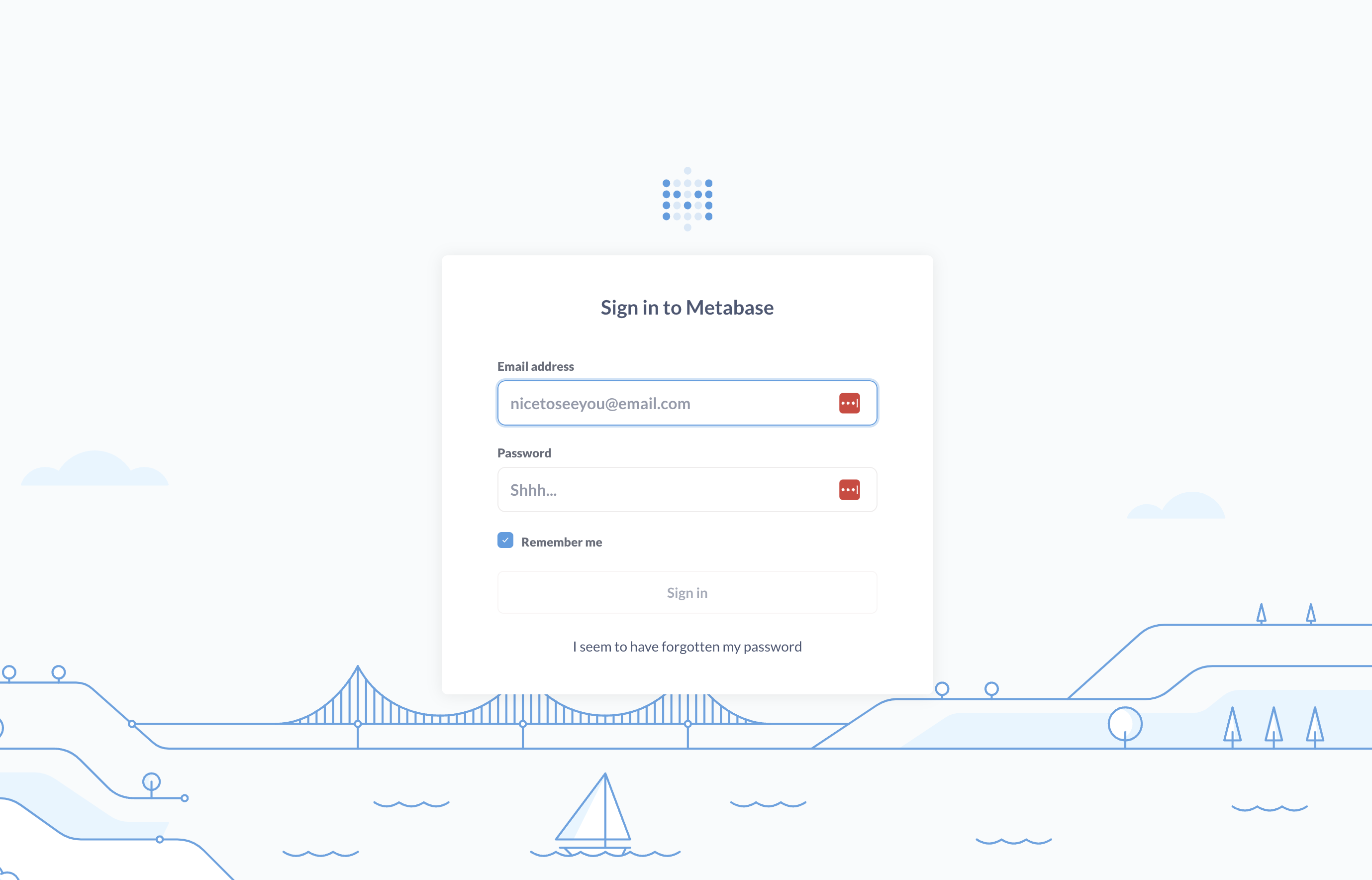

- Visit

http://localhost:1460in your web browser to access the Metabase dashboard. The interface will resemble the following. You will need to sign up to use the UI.

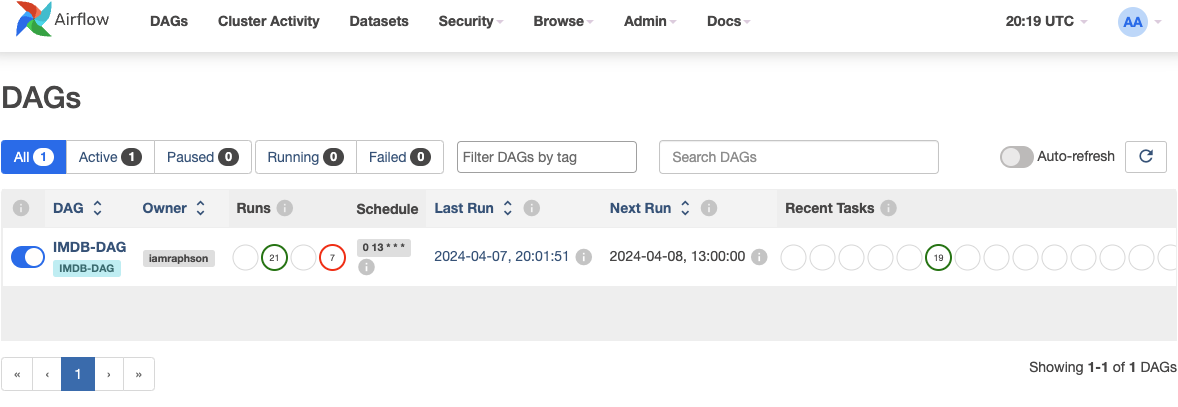

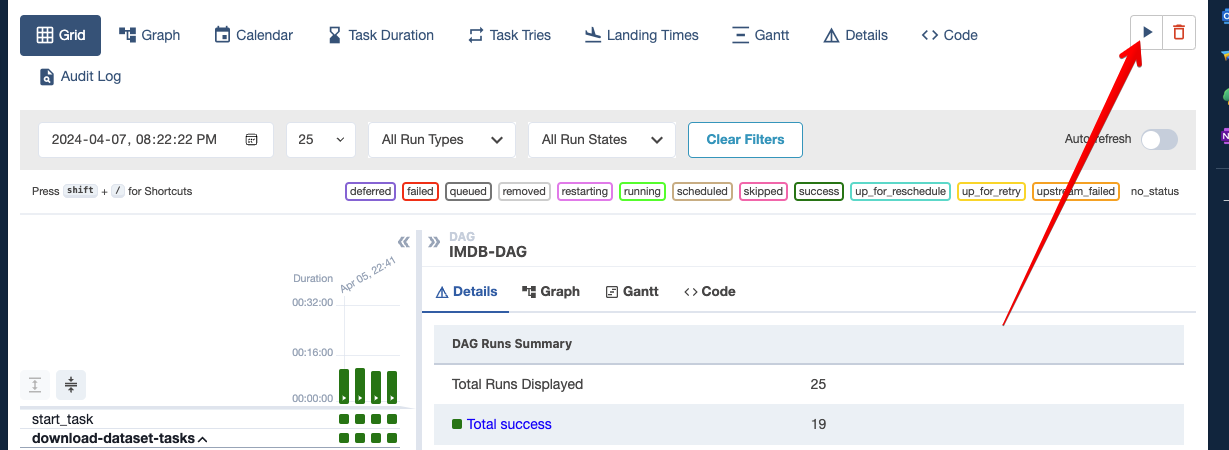

Once you've completed all the steps outlined in the previous section, you should now be able to view the Airflow dashboard in your web browser. Below will display as list of DAGs.

To run the DAG, Click on the play button.

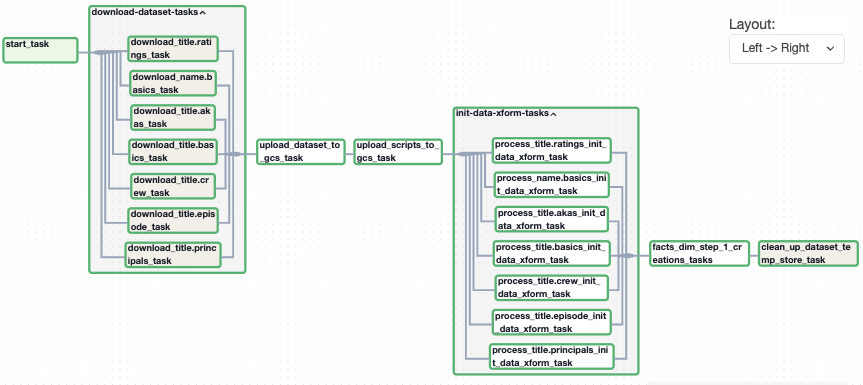

The IMDB DAG manages this process. All transformation scripts are located in the data_airflow/dags/scripts folder. Airflow copies these scripts to Google Cloud Storage (GCS) and submits transformation jobs to Data Proc.

Please watch the provided video tutorial to configure your Metabase database connection with BigQuery.You have the flexibility to customize your dashboard according to your preferences. Additionally, this PDF linked below contains the complete screenshot of the dashboard I created.

Twitter: @iamraphson

🦅