This project is part of the Data Science Nanodegree Program by Udacity in collaboration with Figure Eight. The initial dataset contains pre-labelled tweets and messages from real-life disasters. The aim of the project is to build a Natural Language Processing tool that categorizes messages.

The project is divided into the following sections:

- Data Processing: an ETL pipeline that extracts data from the source, cleans it, and saves it in a proper database structure

- Machine Learning Pipeline: trains a model able to classify text messages into categories

- Web App: shows the model's results in real time

The project requires the following dependencies:

- Python 3.5+ (I used Python 3.6.5)

- Machine Learning Libraries: NumPy, SciPy, Pandas, Scikit-Learn

- Natural Language Processing Libraries: NLTK

- SQLite Database Libraries: SQLAlchemy

- Web App and Data Visualization: Flask, Plotly


Run the following commands in the project's root directory to set up your database and model.

- To run the ETL pipeline that cleans the data and stores it in a database:

  `python data/process_data.py data/disaster_messages.csv data/disaster_categories.csv data/DisasterResponse.db`

- To run the ML pipeline that trains the classifier and saves it:

  `python models/train_classifier.py data/DisasterResponse.db models/classifier.pkl`
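The ETL step (extract the two CSVs, join them, split the categories into one column each, drop duplicates, save to SQLite) can be sketched roughly as below. This is a minimal, standard-library-only illustration and not the contents of `process_data.py`, which relies on Pandas and SQLAlchemy. It assumes both CSVs share an `id` column and that the `categories` column holds strings such as `related-1;request-0;offer-0`. The function names and the table name `messages` are hypothetical.

```python
import csv
import io
import sqlite3


def parse_categories(raw):
    """Split 'related-1;request-0' into {'related': 1, 'request': 0} (assumed format)."""
    labels = {}
    for item in raw.split(";"):
        name, _, value = item.rpartition("-")
        labels[name] = int(value)
    return labels


def extract(messages_file, categories_file):
    """Join the two CSV sources on their shared 'id' column (inner join)."""
    messages = {row["id"]: row for row in csv.DictReader(messages_file)}
    rows = []
    for row in csv.DictReader(categories_file):
        if row["id"] in messages:
            rows.append({**messages[row["id"]], **parse_categories(row["categories"])})
    return rows


def transform(rows):
    """Drop exact duplicate records while preserving their original order."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def load(rows, conn, table="messages"):
    """Write the cleaned rows to a SQLite table (table name is hypothetical)."""
    if not rows:
        return
    columns = list(rows[0])
    conn.execute(f'DROP TABLE IF EXISTS "{table}"')
    conn.execute(f'CREATE TABLE "{table}" ({", ".join(chr(34) + c + chr(34) for c in columns)})')
    placeholders = ", ".join("?" for _ in columns)
    conn.executemany(
        f'INSERT INTO "{table}" VALUES ({placeholders})',
        [tuple(row[c] for c in columns) for row in rows],
    )
    conn.commit()


# Tiny in-memory demo; the duplicated category row for id 1 is removed.
messages_csv = io.StringIO("id,message,genre\n1,We need water,direct\n2,Roads are blocked,news\n")
categories_csv = io.StringIO(
    "id,categories\n1,related-1;water-1\n1,related-1;water-1\n2,related-1;water-0\n"
)
conn = sqlite3.connect(":memory:")
load(transform(extract(messages_csv, categories_csv)), conn)
print(conn.execute("SELECT id, water FROM messages ORDER BY id").fetchall())
```

With real files, you would pass `open(path, newline="")` handles instead of `io.StringIO` and connect to `data/DisasterResponse.db` instead of `:memory:`.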


Run the following command in the app's directory to run your web app.

`python run.py`

Go to http://0.0.0.0:3001/

In the data and models folders you can find two Jupyter notebooks that will help you understand how the model works step by step:

- ETL Preparation Notebook: learn everything about the implemented ETL pipeline

- ML Pipeline Preparation Notebook: look at the Machine Learning Pipeline developed with NLTK and Scikit-Learn
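Before any classifier sees the text, each message has to be turned into tokens. The notebook does this with NLTK. The sketch below is a dependency-free illustration of the kind of normalization such a tokenizer typically performs: lowercasing, replacing URLs, stripping punctuation, and dropping stop words. The tiny stop-word list, the URL regex, and the `urlplaceholder` token are hypothetical stand-ins, not the notebook's actual choices. A function like this could be passed to scikit-learn's `CountVectorizer` through its `tokenizer` parameter.

```python
import re

# Hypothetical, deliberately tiny stop-word list; NLTK ships a much larger one.
STOP_WORDS = {"a", "an", "the", "and", "or", "is", "are", "we", "in", "of", "to", "for"}

# Simplified URL pattern and replacement token (both assumptions).
URL_RE = re.compile(r"https?://\S+")
URL_PLACEHOLDER = "urlplaceholder"


def tokenize(text):
    """Lowercase, replace URLs, strip punctuation, split, and drop stop words."""
    text = URL_RE.sub(URL_PLACEHOLDER, text.lower())
    text = re.sub(r"[^a-z0-9]", " ", text)  # punctuation and symbols become spaces
    return [token for token in text.split() if token not in STOP_WORDS]


print(tokenize("We need WATER and food in Port-au-Prince! See http://example.com/help"))
# ['need', 'water', 'food', 'port', 'au', 'prince', 'see', 'urlplaceholder']
```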

You can use the ML Pipeline Preparation Notebook to re-train the model or tune it through a dedicated Grid Search section. In that case, running Grid Search on a Linux machine is strongly recommended, especially if you plan to try a large number of parameter combinations: on a standard desktop or laptop (4 CPUs, 8 GB of RAM or more) it may take several hours to complete.
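The running time grows quickly because Grid Search trains one model for every combination of parameter values, once per cross-validation fold. The quick calculation below makes that concrete. The parameter names and values are hypothetical examples written in scikit-learn's `step__parameter` naming convention; they are not the notebook's actual grid, and 5 folds is an assumption.

```python
from itertools import product

# Hypothetical grid, for illustration only.
param_grid = {
    "vect__ngram_range": [(1, 1), (1, 2)],
    "tfidf__use_idf": [True, False],
    "clf__estimator__n_estimators": [50, 100, 200],
    "clf__estimator__min_samples_split": [2, 3, 4],
}
cv_folds = 5  # assumed number of cross-validation folds


def count_fits(grid, folds):
    """Return (parameter combinations, model fits); every combination is fit once per fold."""
    combinations = len(list(product(*grid.values())))
    return combinations, combinations * folds


candidates, fits = count_fits(param_grid, cv_folds)
print(candidates, fits)  # 36 180
```

Adding a single extra value to any one parameter multiplies the total again. With scikit-learn's `GridSearchCV`, the default `refit=True` adds one final fit of the best candidate, and `n_jobs=-1` spreads the fits across all available CPU cores.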

Acknowledgements:

- Udacity for providing such a complete Data Science Nanodegree Program

- Figure Eight for providing the messages dataset used to train my model

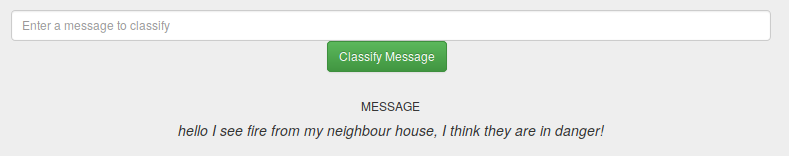

- An example of a message you can type to test the Machine Learning model's performance

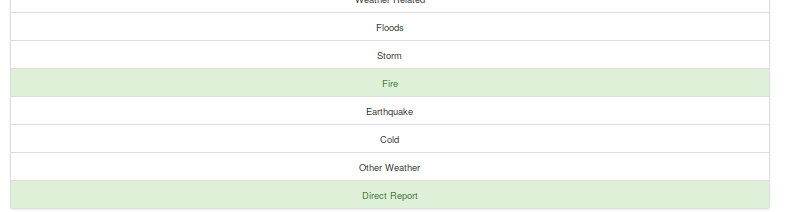

- After you click Classify Message, the categories the message belongs to are highlighted in green
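Conceptually, the highlighting only needs to pair each predicted label with its category name and mark the ones predicted as 1. The sketch below assumes the model returns one binary prediction per category, in the same order as the category columns. The function names and the category subset are hypothetical, and this is not the app's Flask code.

```python
def classify_results(category_names, prediction):
    """Pair each category name with its predicted 0/1 label (assumed output format)."""
    if len(category_names) != len(prediction):
        raise ValueError("expected exactly one prediction per category")
    return dict(zip(category_names, prediction))


def highlighted(results):
    """Return the categories that would be shown in green, i.e. those predicted as 1."""
    return [name for name, label in results.items() if label == 1]


categories = ["related", "request", "offer", "water", "food"]  # hypothetical subset
results = classify_results(categories, [1, 1, 0, 1, 0])
print(highlighted(results))  # ['related', 'request', 'water']
```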

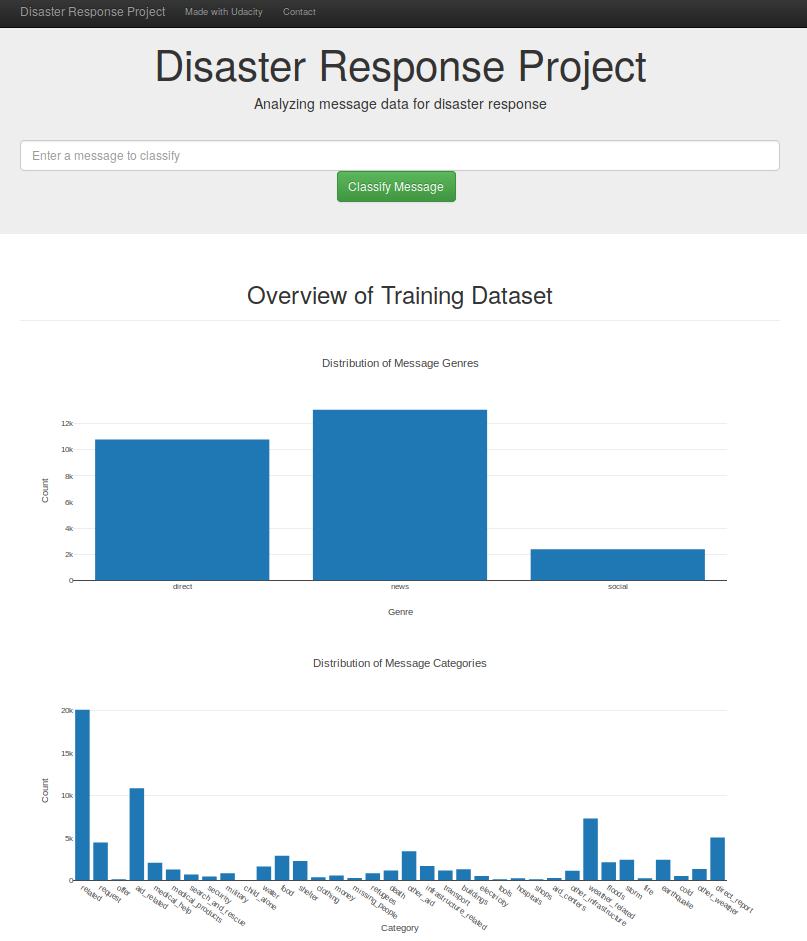

- The main page shows some graphs about the training dataset provided by Figure Eight