- SUMO Co-simulation Ph.I

- Understand how SUMO works and how it co-simulates with CARLA

- Collect related examples as a starting point

- ?

- SUMO Co-simulation Ph.II

- SUMO Co-simulation Ph.III

- SUMO Co-simulation Ph.IV

@INPROCEEDINGS{INVS2021,
  author={Z. {Zhang} and S. {Wang} and Y. {Hong} and L. {Zhou} and Q. {Hao}},
  title={Distributed dynamic map fusion via federated learning for intelligent networked vehicles},
  booktitle={2021 {IEEE} International Conference on Robotics and Automation, {ICRA} 2021, Xi’an, China, May 2021},
  year={2021}
}

- Ubuntu 18.04

- Python 3.7+

- CARLA >= 0.9.8, <=0.9.10

- CUDA>=10.0

- pytorch<=1.4.0

- llvm>=10.0

-

Clone this repository to your workspace

git clone https://github.com/lasso-sustech/CARLA_INVS.git --branch=main --depth=1 -

Enter the directory "CARLA_INVS" and install dependencies with

makemake dependency

It uses

aptandpip3with network access. You can try speed up downloading with fast mirror sites. -

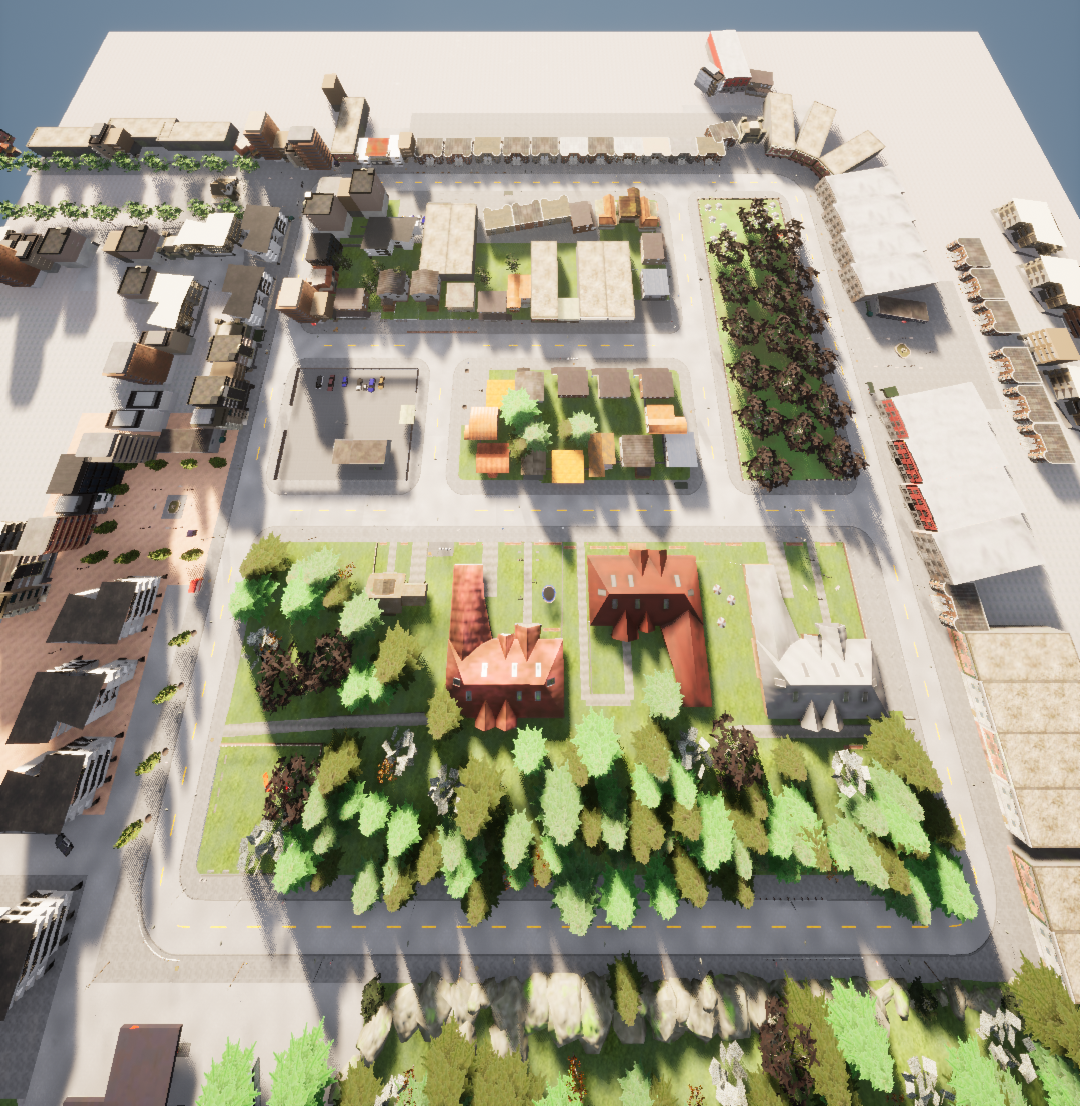

Download and extract the CARLA simulator somewhere (e.g.,

~/CARLA), and updateCARLA_PATHinparams.pywith absolute path to the CARLA folder location.
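A wrong `CARLA_PATH` usually shows up only later, when the simulator fails to start. The sketch below is a hypothetical helper, not part of this repository. It assumes only what this README states: the path should be absolute, and the CARLA folder contains the `CarlaUE4.sh` launcher.

```python
from pathlib import Path


def check_carla_path(carla_path: str) -> Path:
    """Return the CARLA folder as a Path, or raise ValueError if it looks wrong.

    Hypothetical helper: it only assumes that the folder holds CarlaUE4.sh.
    """
    path = Path(carla_path)
    # "~/CARLA" and "CARLA" are both rejected here: the README asks for an absolute path.
    if not path.is_absolute():
        raise ValueError(f"CARLA_PATH must be absolute, got: {carla_path}")
    if not (path / "CarlaUE4.sh").is_file():
        raise ValueError(f"CarlaUE4.sh not found under: {path}")
    return path
```

Calling `check_carla_path(CARLA_PATH)` once before launching any script would turn a silent misconfiguration into an immediate error.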

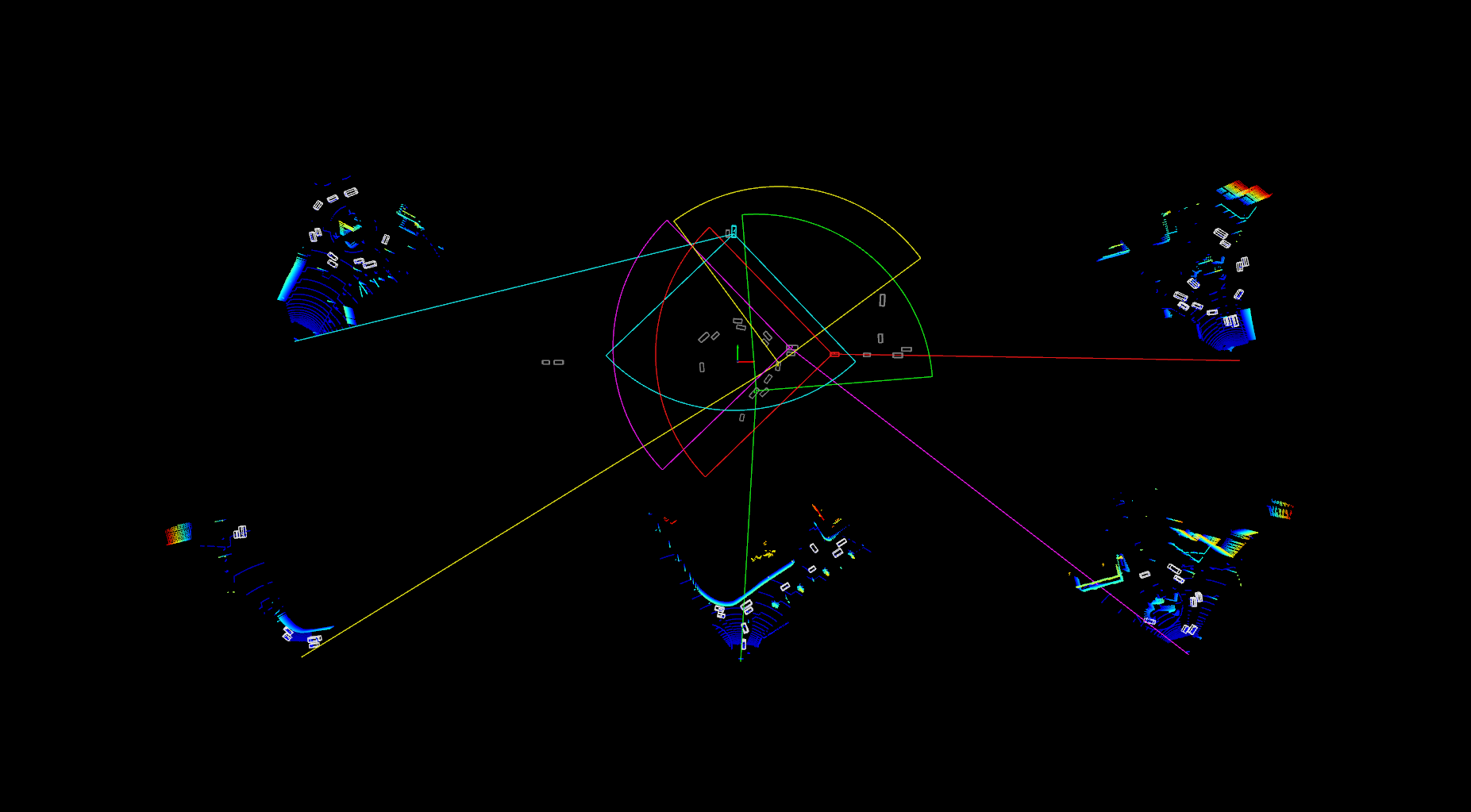

This repository is composed of three components: `gen_data` for dataset generation and visualization, `PCDet` for training and testing, and `fusion` for global map fusion and visualization.

The three components share the same configuration file `params.py`.

Features: 1) synchronized collection of raw LiDAR/camera data from multiple agents; 2) data transformation to KITTI format; 3) data visualization with the Open3D library.

Tuning: Tune the configurations as you like in the `params.py` file under the `gen_data` section.

-

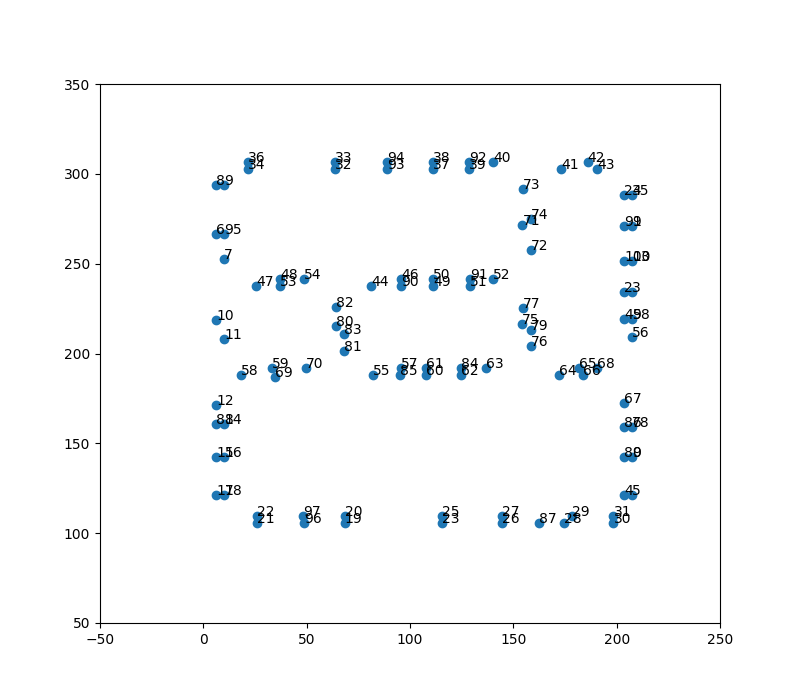

start up

CarlaUE4.shin yourCARLA_PATHfirstly and run the following script in shell to look for vehicles spawn points with point_id.python3 gen_data/Scenario.py spawn

- Run the following script to generate multi-agent raw data:

      python3 gen_data/Scenario.py record [x_1,...,x_N] [y_1,...,y_M]

  where `x_1,...,x_N` is the list of point_ids (separated by commas) for human-driven vehicles, and `y_1,...,y_M` is the list for autonomous vehicles with sensors installed.

  The recording process stops when `Ctrl+C` is pressed, and the generated raw data are put in `$ROOT_PATH/raw_data`.

- Run the following script to transform the raw data to KITTI format:

      python3 gen_data/Process.py raw_data/record2020_xxxx_xxxx

  The processed KITTI-format data are put in `$ROOT_PATH/dataset`.

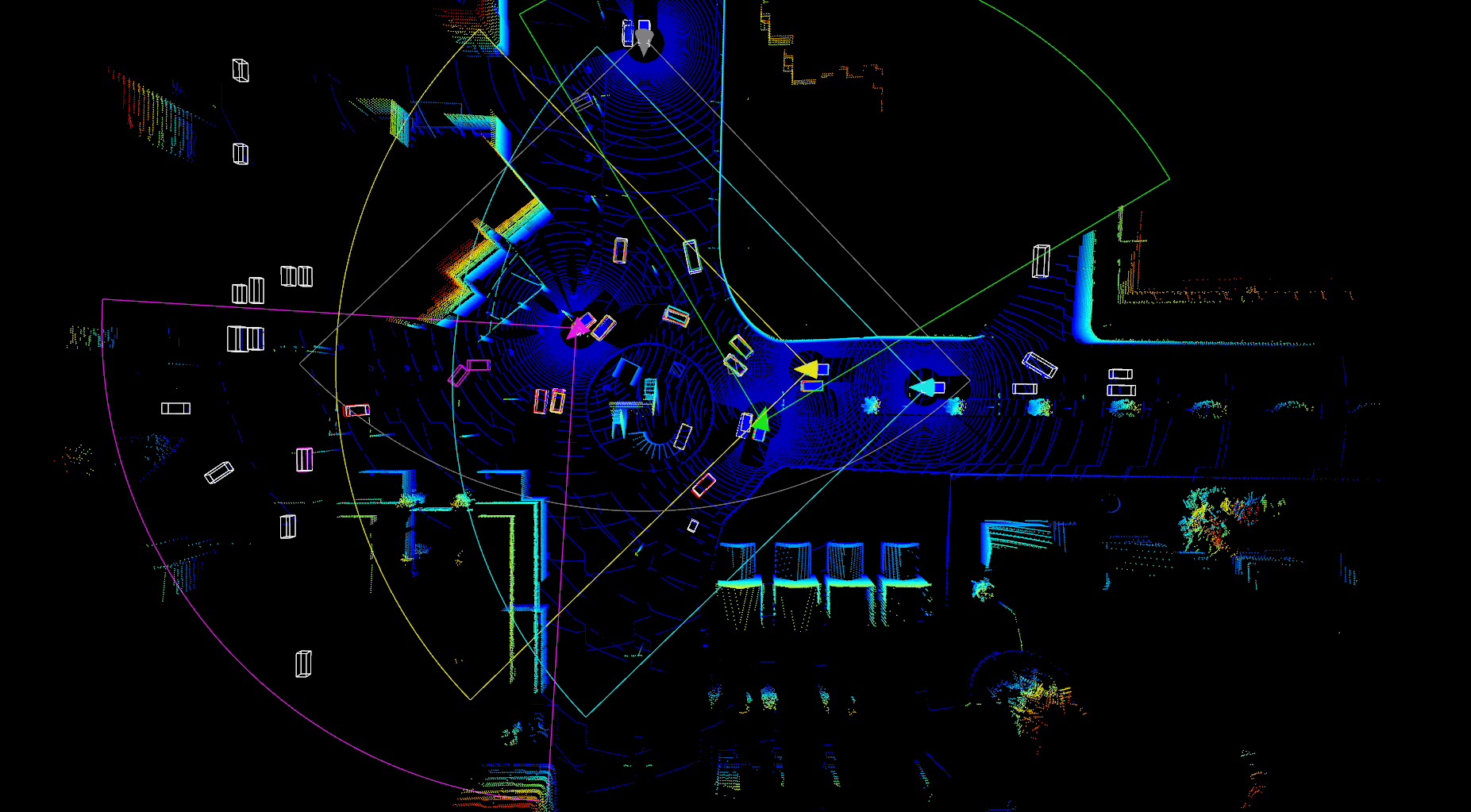

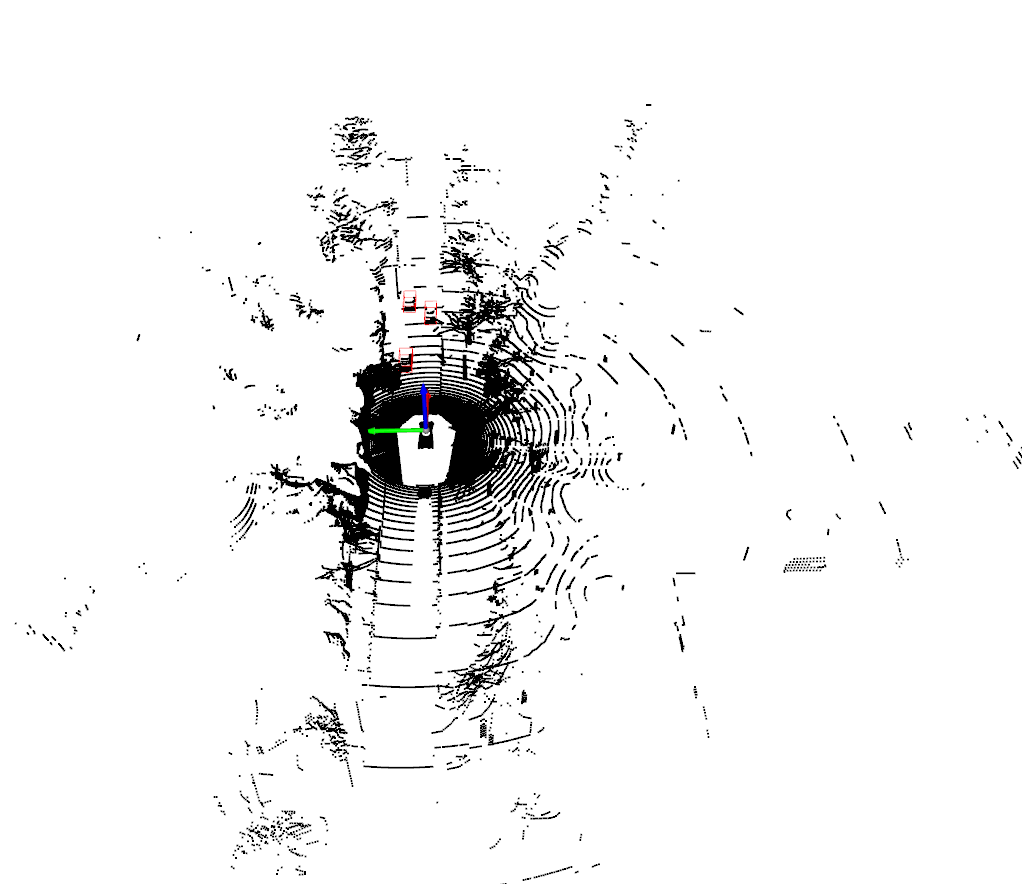

- (Optional) Run the following script to view a KITTI-format data sample with Open3D:

      # vehicle_id is the intelligent vehicle ID, and frame_id is the index within the dataset.
      python3 gen_data/Visualization.py dataset/record2020_xxxx_xxxx vehicle_id frame_id
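For a quick check without opening a viewer, a converted point cloud can also be read directly. The sketch below is a hypothetical helper, not part of this repository. It assumes the `.bin` files follow the standard KITTI layout (consecutive 32-bit floats, four per point: x, y, z, intensity) and that the host is little-endian, as on typical x86 machines. Whether `Process.py` writes exactly this layout should be confirmed against the code.

```python
from array import array
from pathlib import Path
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # x, y, z, intensity (assumed KITTI layout)


def load_velodyne_bin(path: str) -> List[Point]:
    """Read a KITTI-style point cloud: consecutive float32 values, four per point."""
    raw = Path(path).read_bytes()
    values = array("f")  # native-endian 32-bit floats
    values.frombytes(raw)
    if len(values) % 4 != 0:
        raise ValueError(f"{path}: expected a multiple of 4 floats, got {len(values)}")
    # Group the flat float sequence into (x, y, z, intensity) tuples.
    return [tuple(values[i:i + 4]) for i in range(0, len(values), 4)]
```

The length of the returned list is the number of points in the frame, which is a cheap way to spot empty or truncated files.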

- Prepare the sample dataset in `$ROOT_PATH/data` (link).

- Run:

      python3 PCDet/INVS_main.py

To be updated.

    cd fusion;
    python3 visualization/Visualization_local.py ../data/record2020_1027_1957 713 39336

    cd fusion;
    python3 visualization/Visualization_fusion_map.py ../data/record2020_1027_1957 38549

Should you have any questions, please create an issue or contact Shuai Wang.

    tmp
    +- record2020_xxxx_xxxx
       +- label  #tmp labels
       +- vehicle.xxx.xxx_xxx
          +- sensor.camera.rgb_xxx
             +- 0000.jpg
             +- 0001.jpg
          +- sensor.camera.rgb_xxx_label
             +- 0000.txt
             +- 0001.txt
          +- sensor.lidar.rgb_cast_xxx
             +- 0000.ply
             +- 0001.ply
       +- vehicle.xxx.xxx_xxx

`label` is the directory where the temporary labels are saved.
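Since the sensors are meant to record synchronously, every sensor folder of a recording should hold the same number of frames. The sketch below is a hypothetical helper, not part of this repository. It assumes the layout shown above: one `label` folder plus one folder per vehicle, each containing one folder per sensor.

```python
from pathlib import Path
from typing import Dict


def count_sensor_frames(record_dir: str) -> Dict[str, int]:
    """Map "<vehicle dir>/<sensor dir>" to the number of files recorded in it."""
    counts = {}
    for vehicle in sorted(Path(record_dir).iterdir()):
        # Skip the shared label directory and any stray files.
        if not vehicle.is_dir() or vehicle.name == "label":
            continue
        for sensor in sorted(vehicle.iterdir()):
            if sensor.is_dir():
                counts[f"{vehicle.name}/{sensor.name}"] = sum(
                    1 for f in sensor.iterdir() if f.is_file()
                )
    return counts
```

If `len(set(count_sensor_frames(record_dir).values()))` is greater than 1, some sensor dropped or gained frames during the recording.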

    dataset
    +- record2020_xxxx_xxxx
       +- global_label  #global labels
       +- vehicle.xxx.xxx_xxx
          +- calib00
             +- 0000.txt
             +- 0001.txt
          +- image00
             +- 0000.jpg
             +- 0001.jpg
          +- label00
             +- 0000.txt
             +- 0001.txt
          +- velodyne
             +- 0000.bin
             +- 0001.bin
       +- vehicle.xxx.xxx_xxx

- `label` is the directory where the ground-truth labels are saved.

- `calib` is the calibration matrix from the point cloud to the image.
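Given this layout, the files belonging to one frame share the same index across the `calib00`, `image00`, `label00`, and `velodyne` folders. The sketch below is a hypothetical helper, not part of this repository. It groups those paths per frame for one vehicle directory, taking the folder names and file extensions from the tree above as assumptions.

```python
from pathlib import Path
from typing import Dict, List


def list_frames(vehicle_dir: str) -> List[Dict[str, Path]]:
    """Group the files of each frame under one vehicle directory by frame index."""
    root = Path(vehicle_dir)
    frames = []
    # Use the point cloud files as the reference list of frame indices.
    for cloud in sorted((root / "velodyne").glob("*.bin")):
        frame_id = cloud.stem  # e.g. "0000"
        frames.append({
            "velodyne": cloud,
            "image": root / "image00" / f"{frame_id}.jpg",
            "label": root / "label00" / f"{frame_id}.txt",
            "calib": root / "calib00" / f"{frame_id}.txt",
        })
    return frames
```

The function only builds paths and does not check that the image, label, and calibration files exist, so a caller that needs complete frames should test each path with `Path.is_file()`.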