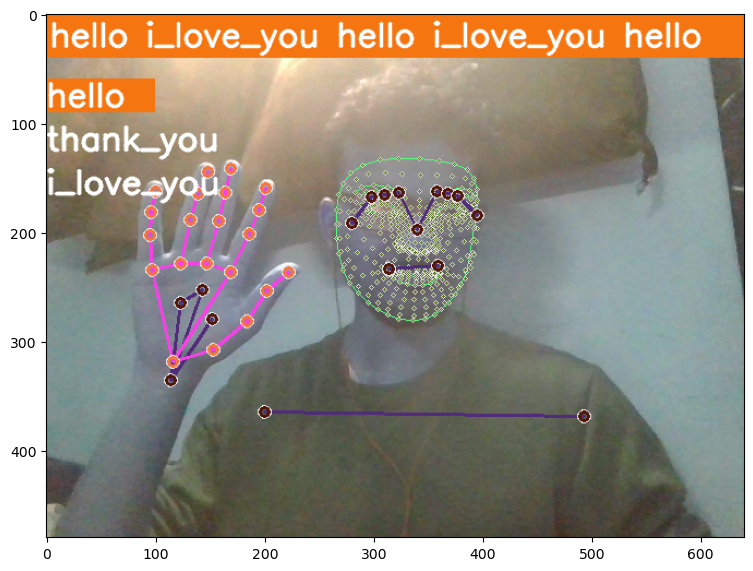

This project aims to estimate sign language gestures, using MediaPipe to track hand and head movements and TensorFlow to build and train an LSTM (Long Short-Term Memory) model. The system focuses on detecting and interpreting the signs for "hello", "thank you", and "I love you".

- Utilizes MediaPipe to track hand and head movements frame by frame.

- Implements a TensorFlow LSTM model to learn sign language gestures.

- Trains the LSTM model on labeled data for accurate recognition of "hello", "thank you", and "I love you" signs.
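As a rough illustration of how these pieces could fit together, the sketch below flattens one frame of tracked landmarks into a feature vector and defines a small stacked-LSTM classifier over a sequence of such vectors. It is not taken from this repository: the helper names (`flatten_landmarks`, `frame_features`, `build_model`), the 30-frame sequence length, and the layer sizes are all assumptions, and it assumes the head is tracked with MediaPipe's 468-point face mesh alongside the 21-point hand model.

```python
import numpy as np

# Assumed sizes, not taken from this repository: MediaPipe's hand model
# reports 21 landmarks per hand and its face mesh 468 landmarks, each with
# x, y, z coordinates.
HAND_LANDMARKS = 21
FACE_LANDMARKS = 468
FEATURES_PER_FRAME = (2 * HAND_LANDMARKS + FACE_LANDMARKS) * 3
SEQUENCE_LENGTH = 30  # hypothetical number of frames per gesture clip
SIGNS = ["hello", "thank you", "I love you"]


def flatten_landmarks(landmarks, count):
    """Return a flat (count * 3,) array of x, y, z values.

    `landmarks` is an iterable of points with .x, .y, .z attributes, or None
    when that body part was not detected in the frame (zeros are used then).
    """
    if landmarks is None:
        return np.zeros(count * 3, dtype=np.float32)
    return np.array([[p.x, p.y, p.z] for p in landmarks], dtype=np.float32).flatten()


def frame_features(left_hand, right_hand, face):
    """Concatenate both hands and the face into one per-frame feature vector."""
    return np.concatenate([
        flatten_landmarks(left_hand, HAND_LANDMARKS),
        flatten_landmarks(right_hand, HAND_LANDMARKS),
        flatten_landmarks(face, FACE_LANDMARKS),
    ])


def build_model():
    """Build a small stacked-LSTM classifier (layer sizes are illustrative)."""
    # Imported lazily so the helpers above work without TensorFlow installed.
    from tensorflow.keras import layers, models

    model = models.Sequential([
        layers.Input(shape=(SEQUENCE_LENGTH, FEATURES_PER_FRAME)),
        layers.LSTM(64, return_sequences=True),
        layers.LSTM(64),
        layers.Dense(32, activation="relu"),
        layers.Dense(len(SIGNS), activation="softmax"),
    ])
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["categorical_accuracy"])
    return model
```

Under these assumptions, training data would be an array of shape `(num_clips, SEQUENCE_LENGTH, FEATURES_PER_FRAME)` with one-hot labels over the three signs. Filling undetected parts with zeros keeps every frame the same length, which the LSTM input requires.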

- Python

- TensorFlow

- MediaPipe

- NumPy

- Matplotlib

Clone the repository:

    git clone https://github.com/your_username/sign_language_estimation.git

Follow the instructions to perform the signs for "hello", "thank you", and "I love you".

The system will attempt to recognize the signs based on the hand and head movements captured by the webcam.
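One way such live recognition could work is to keep a sliding window of the most recent frames and report a sign only when the classifier is confident enough. The sketch below shows that idea in plain Python. The `SignRecognizer` class, the 30-frame window, and the 0.8 threshold are hypothetical choices for illustration, not values from this project, and `predict_fn` stands in for whatever wraps the trained model.

```python
from collections import deque

SIGNS = ["hello", "thank you", "I love you"]
SEQUENCE_LENGTH = 30  # hypothetical frames per prediction window
THRESHOLD = 0.8       # hypothetical minimum confidence to report a sign


class SignRecognizer:
    """Buffers per-frame feature vectors and classifies the latest window."""

    def __init__(self, predict_fn):
        # predict_fn maps a list of SEQUENCE_LENGTH feature vectors to one
        # probability per entry in SIGNS (for example, a wrapper around a
        # trained model's predict call).
        self.predict_fn = predict_fn
        self.window = deque(maxlen=SEQUENCE_LENGTH)

    def update(self, features):
        """Add one frame; return a sign name, or None if unsure or warming up."""
        self.window.append(features)
        if len(self.window) < SEQUENCE_LENGTH:
            return None  # not enough frames collected yet
        probs = list(self.predict_fn(list(self.window)))
        best = max(range(len(probs)), key=probs.__getitem__)
        return SIGNS[best] if probs[best] >= THRESHOLD else None
```

In a webcam loop, `update` would be called once per captured frame with that frame's feature vector. Returning `None` below the threshold avoids flickering labels while no sign is being performed.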

This project is licensed under the MIT License - see the LICENSE file for details.

This project was made possible by a lecture from Nicholas Renotte.

Feel free to customize and extend the script according to your specific needs!