Private and secure AI tools for everyone's productivity.

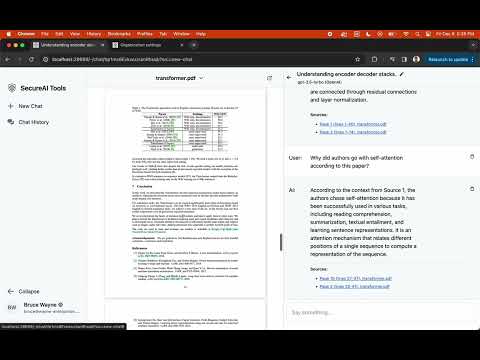

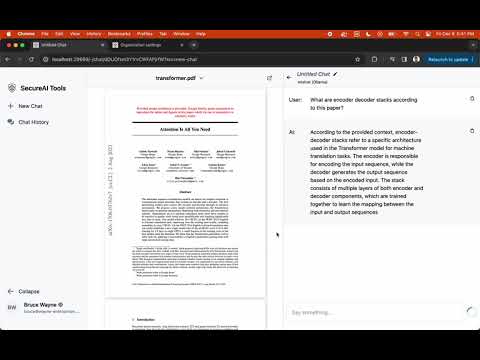

- Chat with AI: Allows you to chat with AI models (e.g. ChatGPT).

- Chat with Documents: Allows you to chat with documents (PDFs only, for now). Demo videos below.

- Local inference: Runs AI models locally. Supports 100+ open-source (and semi-open-source) AI models through Ollama.

- Built-in authentication: Simple email/password authentication, so the app can be exposed to the internet and accessed from anywhere.

- Built-in user management: So family members or coworkers can use it as well if desired.

- Self-hosting optimized: Comes with necessary scripts and docker-compose files to get started in under 5 minutes.

- Lightweight: A simple web app with a SQLite DB, so there is no need to run a separate Docker container for the database. Data is persisted on the host machine through Docker volumes.

Create a directory and change into it:

`mkdir secure-ai-tools && cd secure-ai-tools`

Run the set-up script. The script downloads `docker-compose.yml` and generates a `.env` file with sensible defaults.

`curl -sL https://github.com/SecureAI-Tools/SecureAI-Tools/releases/latest/download/set-up.sh | sh`

Customize the `.env` file created in the above step to your liking.
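Before editing, it can help to see which settings the generated file actually defines. The helper below is a minimal sketch, not part of the project: `list_env_keys` is a hypothetical name, and it assumes the file uses plain `KEY=value` lines.

```shell
# list_env_keys FILE
# Prints the variable names defined in a .env-style file, one per line.
# Assumption: settings are plain KEY=value lines; comment lines and
# blank lines do not match the pattern and are skipped.
list_env_keys() {
  grep -E '^[A-Za-z_][A-Za-z0-9_]*=' "$1" | cut -d= -f1
}
```

For example, `cp .env .env.bak && list_env_keys .env` keeps a backup copy and then lists the names you can customize.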

To accelerate inference on Linux machines, enable GPU support. This step is optional: the inference service also runs in CPU-only mode, but inference will be slow. If your machine has an Nvidia GPU, this step is recommended.

- Install the Nvidia container toolkit if it is not already installed.

- Uncomment the `deploy:` block in the `docker-compose.yml` file. It gives the inference service access to Nvidia GPUs.
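To confirm the edit took effect, one option is to print the block with line numbers before and after uncommenting it. This is a sketch only: `show_deploy_block` is a hypothetical helper, and the default window of 8 lines is a guess, since the exact length of the block in `docker-compose.yml` is not stated here.

```shell
# show_deploy_block FILE [LINES]
# Prints every line containing "deploy:" plus the LINES lines that follow it
# (default 8, an assumed window size), each prefixed with its line number,
# so you can see whether the block is still commented out.
show_deploy_block() {
  grep -n -A "${2:-8}" 'deploy:' "$1"
}
```

Running `show_deploy_block docker-compose.yml` should show lines starting with `#` before the change and none afterwards.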

Start the services with Docker Compose:

`docker compose up -d`

- Log in at http://localhost:28669/log-in using the initial credentials below, and change the password.

  - Email: `bruce@wayne-enterprises.com`

  - Password: `SecureAIToolsFTW!`

- Set up the AI model by going to http://localhost:28669/-/settings?tab=ai

- Navigate to http://localhost:28669/- and start using AI tools.
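The containers may take a little while to come up after `docker compose up -d`, so the log-in page might not respond immediately. The sketch below polls a URL with `curl` until it answers. `wait_for_url` is a hypothetical helper, and the retry count, delay, and 5-second timeout are arbitrary assumptions rather than values from the project.

```shell
# wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
# Polls URL until curl succeeds or ATTEMPTS is exhausted.
# Returns 0 once the URL responds, 1 otherwise.
wait_for_url() {
  url=$1
  attempts=${2:-30}   # assumed default: 30 tries
  delay=${3:-2}       # assumed default: 2 seconds between tries
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -s: silent, -f: treat HTTP status >= 400 as failure, -o: discard the body
    if curl -sf -o /dev/null --max-time 5 "$url"; then
      echo "up after $i attempt(s)"
      return 0
    fi
    # Sleep only if another attempt will follow
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "not reachable after $attempts attempt(s)" >&2
  return 1
}
```

For example, `wait_for_url http://localhost:28669/log-in` returns once the page responds, after which you can log in with the initial credentials.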

A set of features on our todo list (in no particular order).

- ✅ Chat with documents

- ✅ Support for OpenAI, Claude, and other APIs

- Support for markdown rendering

- Chat sharing

- Mobile friendly UI

- Specify AI model at chat-creation time

- Prompt templates library