This repository accompanies the Medical Image Analysis (Elsevier) article "Interpretable and intervenable ultrasonography-based machine learning models for pediatric appendicitis". Earlier versions of this work were presented as an oral spotlight at the 2nd Workshop on Interpretable Machine Learning in Healthcare (IMLH), ICML 2022 and at the Workshop on Machine Learning for Multimodal Healthcare Data (ML4MHD 2023), co-located with ICML 2023.

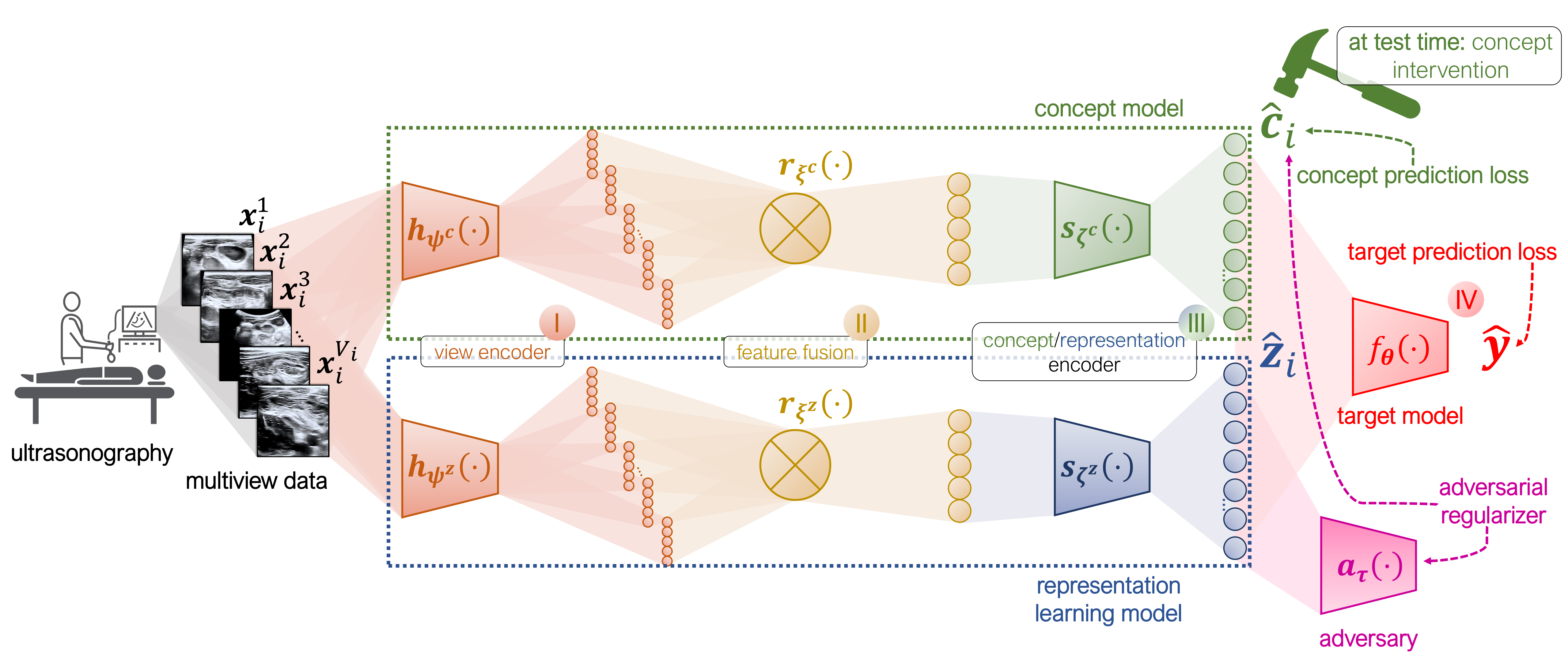

Schematic summary of the (semi-supervised) multiview concept bottleneck model.

Concept bottleneck models (CBMs) are interpretable predictive models that rely on high-level, human-understandable concepts. In practice, two models are trained: (i) one mapping the explanatory variables to the given concepts and (ii) another predicting the target variable from the previously predicted concept values.
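The two-stage structure can be illustrated with a minimal plain-Python sketch. It is not the repository's implementation (see `networks.py` for that). The logistic concept and label models, the helper names and all weights below are hypothetical. They only show that the label is computed from the predicted concepts rather than directly from the inputs. Training (which Koh et al. (2020) perform independently, sequentially or jointly) is omitted.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_concepts(x: Sequence[float],
                     weights: Sequence[Sequence[float]],
                     biases: Sequence[float]) -> List[float]:
    """Concept model g: one logistic unit per concept, mapping inputs x to concept probabilities."""
    return [sigmoid(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
            for w, b in zip(weights, biases)]


def predict_label(concepts: Sequence[float],
                  weights: Sequence[float],
                  bias: float) -> float:
    """Label model f: sees only the concept values, never the raw inputs."""
    return sigmoid(sum(w * c for w, c in zip(weights, concepts)) + bias)


# Hypothetical parameters: 3 input features -> 2 concepts -> 1 binary label.
G_WEIGHTS = [[2.0, -1.0, 0.0], [0.0, 1.0, 1.0]]
G_BIASES = [0.0, -1.0]
F_WEIGHTS = [3.0, 1.0]
F_BIAS = -2.0


def cbm_predict(x: Sequence[float]) -> Tuple[List[float], float]:
    c_hat = predict_concepts(x, G_WEIGHTS, G_BIASES)  # stage (i): x -> c
    y_hat = predict_label(c_hat, F_WEIGHTS, F_BIAS)   # stage (ii): c -> y
    return c_hat, y_hat


if __name__ == "__main__":
    concepts, label = cbm_predict([1.0, 0.5, 0.0])
    print("concepts:", [round(c, 3) for c in concepts], "label:", round(label, 3))
```

Because the label model only receives `c_hat`, every prediction can be explained, and edited, in terms of the concepts.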

This repository implements extensions of the vanilla CBM to the multiview classification setting. In brief, a multiview concept bottleneck model (MVCBM) consists of four modules: (i) per-view feature extraction, (ii) feature fusion, (iii) concept prediction and (iv) label prediction. To handle cases where the given set of concepts is incomplete, for instance, due to a lack of domain knowledge or the cost of acquiring additional annotations, we also introduce a semi-supervised MVCBM (SSMVCBM) that, in addition to utilizing the given concepts, learns an independent representation predictive of the label.
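The data flow through the four modules, and the extra label-predictive representation of the SSMVCBM, can be sketched in plain Python. This is an illustration under stated assumptions, not the code in `networks.py`. `extract_features` is a hypothetical stand-in for an image encoder (for instance a CNN; the repository ships pretrained models such as ResNet-18). Views are fused by simple averaging so that patients with different numbers of images can be handled. All weights are made up. Whatever mechanism the SSMVCBM uses to keep its representation independent of the concepts is omitted, and the representation branches off the fused features purely for brevity.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def linear(x: Sequence[float], weights: Sequence[Sequence[float]], biases: Sequence[float]) -> Vector:
    """Dense layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]


def extract_features(view: Sequence[float]) -> Vector:
    """(i) Hypothetical per-view feature extractor, shared across all views."""
    return [sum(view) / len(view), max(view)]


def fuse(features: Sequence[Vector]) -> Vector:
    """(ii) Feature fusion by element-wise mean; works for any number of views."""
    n = len(features)
    return [sum(f[j] for f in features) / n for j in range(len(features[0]))]


# Hypothetical parameters: 2 fused features -> 2 concepts (+ 1-dim representation) -> 1 label.
CONCEPT_W, CONCEPT_B = [[1.0, 0.0], [0.0, -1.0]], [0.0, 0.5]
REPR_W, REPR_B = [[0.5, 0.5]], [0.0]  # SSMVCBM only
LABEL_W_CBM, LABEL_B = [[2.0, -1.0]], [0.0]
LABEL_W_SS = [[2.0, -1.0, 0.5]]       # weights for [concepts..., representation]


def mvcbm_forward(views: Sequence[Sequence[float]],
                  semi_supervised: bool = False) -> Tuple[Vector, float]:
    fused = fuse([extract_features(v) for v in views])
    concepts = [sigmoid(z) for z in linear(fused, CONCEPT_W, CONCEPT_B)]  # (iii)
    if semi_supervised:
        # SSMVCBM: the label head also receives a learned representation
        # that is not tied to any given concept.
        rep = linear(fused, REPR_W, REPR_B)
        label_in, label_w = concepts + rep, LABEL_W_SS
    else:
        label_in, label_w = concepts, LABEL_W_CBM
    label = sigmoid(linear(label_in, label_w, LABEL_B)[0])  # (iv)
    return concepts, label


if __name__ == "__main__":
    patient_a = [[0.2, 0.8, 0.4]]                   # one "image" as a flat list
    patient_b = [[0.1, 0.3, 0.5], [0.6, 0.9, 0.2]]  # two images
    print(mvcbm_forward(patient_a))
    print(mvcbm_forward(patient_b, semi_supervised=True))
```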

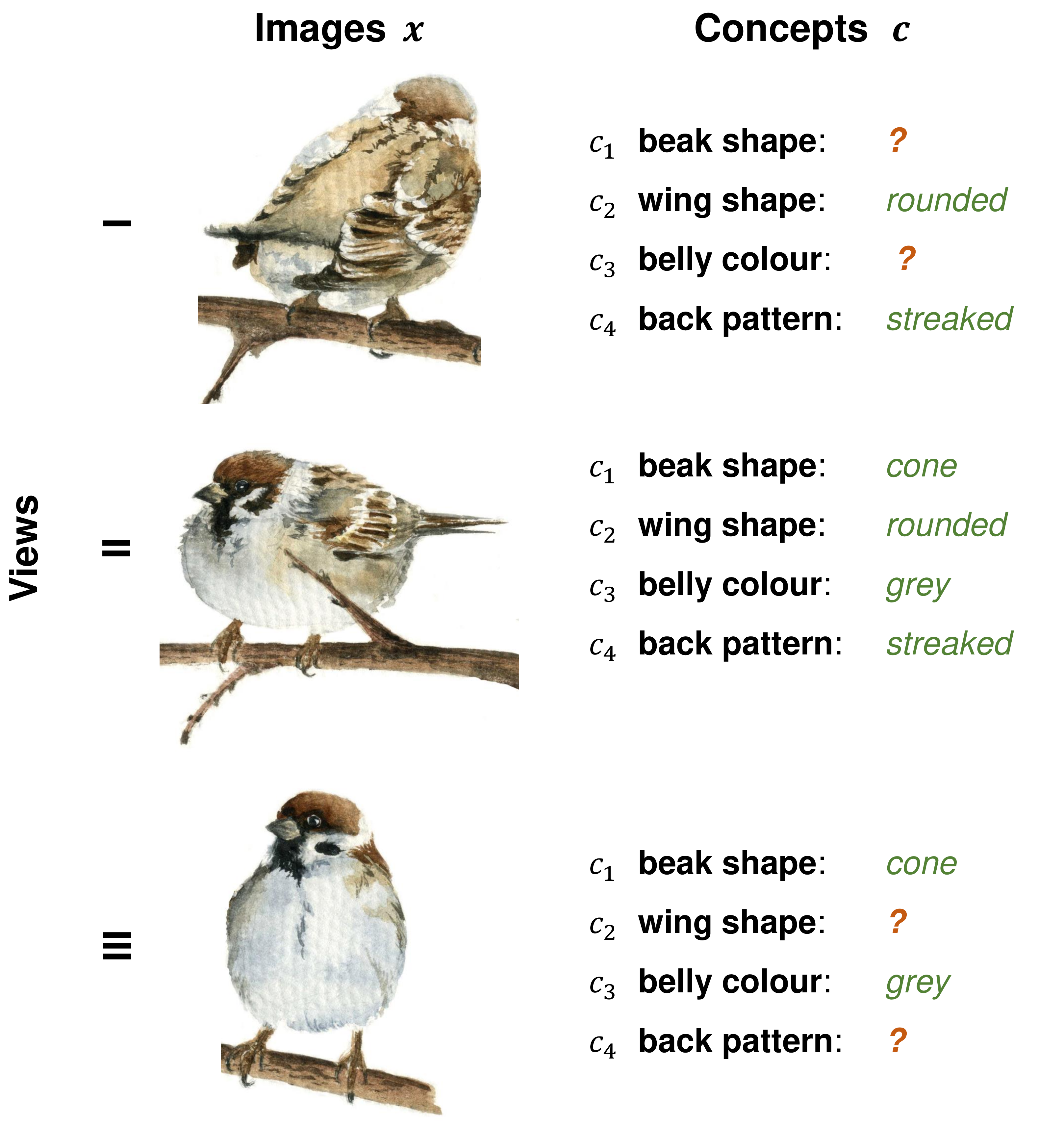

Concept-based classification in the multiview setting.

All the required libraries are listed in the conda environment file `environment.yml`. To install it, follow the instructions below:

```bash
conda env create -f environment.yml  # install dependencies
conda activate multiview-cbm         # activate environment
```

To prepare the pediatric appendicitis data, run the Jupyter notebook `notebooks/Preprocess_app_data.ipynb`, which contains the preprocessing code.

The Animals with Attributes 2 (AwA) dataset is freely available online. Before running the experiments on the multiview AwA (MVAwA), make sure to copy the file `datasets/all_classes.txt` to the dataset directory. The pediatric appendicitis dataset can be downloaded from Zenodo (doi: 10.5281/zenodo.7711412).
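On most systems the copy step is a single `cp datasets/all_classes.txt <dataset directory>`. A portable Python equivalent is sketched below. The helper name `copy_class_list` is hypothetical, and the destination is whatever directory you extracted AwA 2 into. Make sure it matches the dataset path used in your configuration.

```python
import shutil
from pathlib import Path


def copy_class_list(repo_root: str, awa_dir: str) -> Path:
    """Copy datasets/all_classes.txt from the repository into the AwA dataset directory."""
    src = Path(repo_root) / "datasets" / "all_classes.txt"
    dst_dir = Path(awa_dir)
    if not src.is_file():
        raise FileNotFoundError(f"class list not found: {src}")
    if not dst_dir.is_dir():
        raise NotADirectoryError(f"AwA dataset directory not found: {dst_dir}")
    # copy2 also preserves file metadata and returns the destination path.
    return Path(shutil.copy2(src, dst_dir / src.name))
```

For example, calling `copy_class_list(".", "data/Animals_with_Attributes2")` from the repository root copies the file, where `data/Animals_with_Attributes2` is only a placeholder for your local AwA 2 directory.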

The script `train.py` can be used to train and validate concept bottleneck models. The `bin/` folder contains example shell scripts for the different datasets and model designs. For example, to train an MVCBM on the MVAwA dataset, run:

```bash
python train.py --config configs/config_mawa_mvcbm.yaml
```

where `configs/config_mawa_mvcbm.yaml` is a configuration file specifying experiment, dataset and model parameters. See `configs/` for concrete configuration examples and explanations of the most important parameters.
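To run several experiments in a row, the same command can be scripted. The sketch below assumes only what is stated above: `train.py` takes a `--config` argument, and configurations are `.yaml` files in `configs/`. The helper names are hypothetical, and the shell scripts in `bin/` remain the reference for how the experiments are launched.

```python
import subprocess
import sys
from pathlib import Path
from typing import List


def build_train_commands(config_dir: str = "configs", pattern: str = "*.yaml") -> List[List[str]]:
    """Return one `python train.py --config <file>` command per matching config, in sorted order."""
    configs = sorted(Path(config_dir).glob(pattern))
    return [[sys.executable, "train.py", "--config", str(cfg)] for cfg in configs]


def run_all(config_dir: str = "configs", pattern: str = "*.yaml") -> None:
    """Run the commands sequentially from the repository root; stop at the first failure."""
    for cmd in build_train_commands(config_dir, pattern):
        print("Running:", " ".join(cmd))
        subprocess.run(cmd, check=True)
```

For instance, `run_all(pattern="config_mawa_*.yaml")` would train every MVAwA configuration present in `configs/`. Using `sys.executable` ensures the runs use the interpreter of the activated `multiview-cbm` environment.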

Model and architecture definitions can be found in `networks.py`. The structure of this repository is described in detail below:

```
.
├── bin                              # shell scripts
├── configs                          # experiment and model configurations
├── datasets                         # data preprocessing, loading and handling
│   ├── app_dataset.py               # pediatric appendicitis dataloaders
│   ├── awa_dataset.py               # AwA 2 dataloaders
│   ├── generate_app_data.py         # data dictionaries for the pediatric appendicitis
│   ├── mawa_dataset.py              # MVAwA dataloaders
│   ├── preprocessing.py             # preprocessing utilities for the pediatric appendicitis
│   └── synthetic_dataset.py         # synthetic dataloaders
├── DeepFill                         # DeepFill code
├── intervene.py                     # utility functions for model interventions
├── loss.py                          # loss functions
├── networks.py                      # neural network architectures and model definitions
├── notebooks                        # Jupyter notebooks
│   ├── Preprocess_app_data.ipynb    # prepares and preprocesses pediatric appendicitis data
│   ├── Radiomics.ipynb              # radiomics-based features and predictive models
│   └── radiomics_params.yaml        # configuration file for radiomics feature extraction
├── pretrained_models                # directory with pretrained models, such as ResNet-18
├── train.py                         # model training and validation script
├── utils                            # further utility functions
└── validate.py                      # model evaluation subroutines
```
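As an illustration of what a concept intervention does (the actual utilities are in `intervene.py` and may differ), the sketch below overwrites the predicted probabilities of the `k` most uncertain concepts with their true values and then recomputes the label. The selection rule (distance of the probability from 0.5), the logistic label model and its weights are assumptions made for this example only.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_label(concepts: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Hypothetical logistic label model on top of concept values."""
    return sigmoid(sum(w * c for w, c in zip(weights, concepts)) + bias)


def intervene(pred: Sequence[float], true: Sequence[float], k: int) -> List[float]:
    """Replace the k predicted concept probabilities closest to 0.5 with ground-truth values."""
    order = sorted(range(len(pred)), key=lambda i: abs(pred[i] - 0.5))
    edited = list(pred)
    for i in order[:k]:
        edited[i] = float(true[i])
    return edited


if __name__ == "__main__":
    pred = [0.9, 0.55, 0.2]  # model's concept probabilities
    true = [1, 0, 0]         # concept values provided by a clinician
    weights, bias = [2.0, 3.0, -1.0], -1.0
    edited = intervene(pred, true, k=1)
    print(edited)  # [0.9, 0.0, 0.2]
    print(predict_label(pred, weights, bias), "->", predict_label(edited, weights, bias))
```

Here the second concept is the least certain, so correcting it alone changes the label probability the most for this toy model. Other selection rules (random order, or concepts with the largest influence on the label) plug into the same `intervene` structure.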

This repository is maintained by Ričards Marcinkevičs (ricards.marcinkevics@inf.ethz.ch).

- DeepFill code was taken from the original implementation by Yu et al. (2018)

- Data loaders for the AwA dataset are based on the code from the repository by David Fan

To better understand the background behind this work, we recommend reading the following papers:

- Kumar, N., Berg, A.C., Belhumeur, P.N., Nayar, S.K.: Attribute and simile classifiers for face verification. In: 2009 IEEE 12th International Conference on Computer Vision, pp. 365–372. IEEE, Kyoto, Japan (2009). https://doi.org/10.1109/ICCV.2009.5459250

- Lampert, C.H., Nickisch, H., Harmeling, S.: Learning to detect unseen object classes by between-class attribute transfer. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, Miami, FL, USA (2009). https://doi.org/10.1109/CVPR.2009.5206594

- Koh, P.W., Nguyen, T., Tang, Y.S., Mussmann, S., Pierson, E., Kim, B., Liang, P.: Concept bottleneck models. In: Daumé III, H., Singh, A. (eds.) Proceedings of the 37th International Conference on Machine Learning. Proceedings of Machine Learning Research, vol. 119, pp. 5338–5348. PMLR, Virtual (2020)

- Marcinkevičs, R., Reis Wolfertstetter, P., Wellmann, S., Knorr, C., Vogt, J.E.: Using machine learning to predict the diagnosis, management and severity of pediatric appendicitis. Frontiers in Pediatrics 9 (2021). https://doi.org/10.3389/fped.2021.662183

- Havasi, M., Parbhoo, S., Doshi-Velez, F.: Addressing leakage in concept bottleneck models. In: Oh, A.H., Agarwal, A., Belgrave, D., Cho, K. (eds.) Advances in Neural Information Processing Systems (2022). https://openreview.net/forum?id=tglniD_fn9

If you use the models or the dataset, please cite the papers below:

```bibtex
@article{MarcinkevicsReisWolfertstetterKlimiene2024,
  title = {Interpretable and intervenable ultrasonography-based machine learning models
           for pediatric appendicitis},
  journal = {Medical Image Analysis},
  volume = {91},
  pages = {103042},
  year = {2024},
  issn = {1361-8415},
  doi = {https://doi.org/10.1016/j.media.2023.103042},
  url = {https://www.sciencedirect.com/science/article/pii/S136184152300302X},
  author = {Ri\v{c}ards Marcinkevi\v{c}s and Patricia {Reis Wolfertstetter} and Ugne Klimiene
            and Kieran Chin-Cheong and Alyssia Paschke and Julia Zerres and Markus Denzinger
            and David Niederberger and Sven Wellmann and Ece Ozkan and Christian Knorr
            and Julia E. Vogt}
}

@inproceedings{KlimieneMarcinkevics2022,
  title = {Multiview Concept Bottleneck Models Applied to Diagnosing Pediatric Appendicitis},
  author = {Klimiene, Ugne and Marcinkevi{\v{c}}s, Ri{\v{c}}ards and Reis Wolfertstetter, Patricia
            and Ozkan, Ece and Paschke, Alyssia and Niederberger, David
            and Wellmann, Sven and Knorr, Christian and Vogt, Julia E},
  booktitle = {2nd Workshop on Interpretable Machine Learning in Healthcare (IMLH), ICML 2022},
  year = {2022}
}
```

This repository is copyright © 2023 Marcinkevičs, Reis Wolfertstetter, Klimiene, Chin-Cheong, Paschke, Zerres, Denzinger, Niederberger, Wellmann, Ozkan, Knorr and Vogt.

This repository is additionally licensed under CC-BY-NC-4.0.