Haozhe Xie, Zhaoxi Chen, Fangzhou Hong, Ziwei Liu

S-Lab, Nanyang Technological University

- [2024/03/28] The testing code is released.

- [2024/03/03] The Hugging Face demo is available.

- [2024/02/27] The OSM and GoogleEarth datasets have been released.

- [2023/08/15] The repo is created.

@inproceedings{xie2024citydreamer,

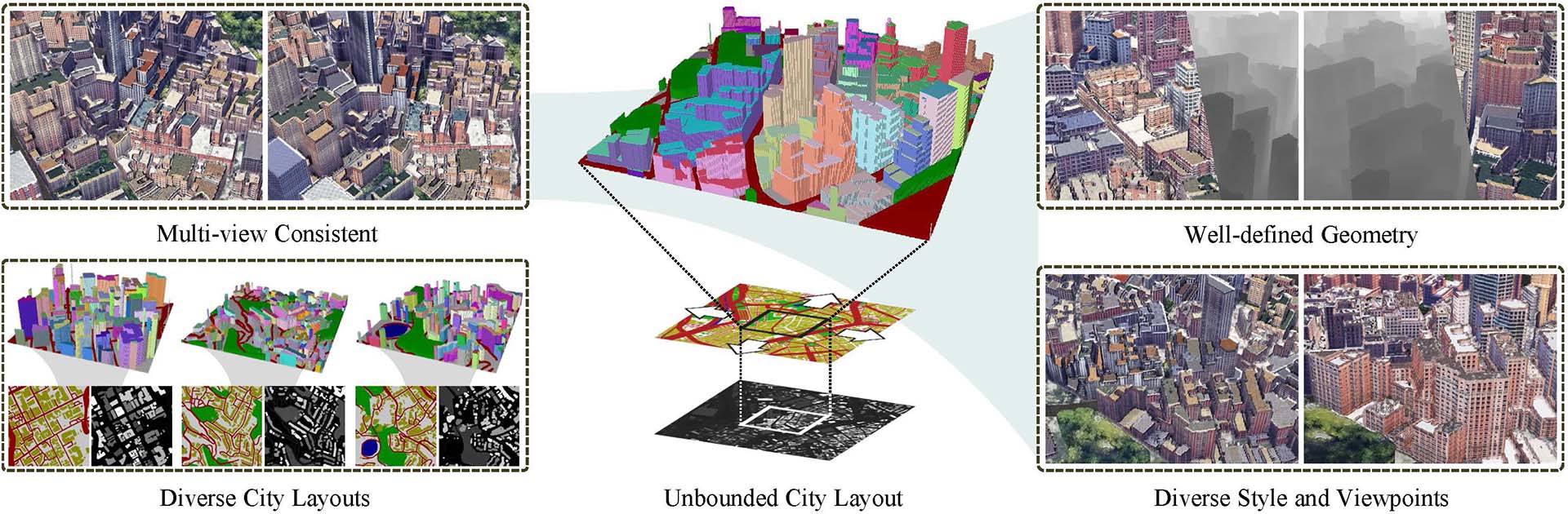

title = {City{D}reamer: Compositional Generative Model of Unbounded 3{D} Cities},

author = {Xie, Haozhe and

Chen, Zhaoxi and

Hong, Fangzhou and

Liu, Ziwei},

booktitle = {CVPR},

year = {2024}

}

The proposed OSM and GoogleEarth datasets are available below.

The pretrained models are available below.

Assume that you have installed CUDA and PyTorch in your Python (or Anaconda) environment.
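To confirm that this assumption holds, a quick check along the following lines may help. It is only a minimal sketch: `matches_tested_setup` is a hypothetical helper, not part of the CityDreamer code, and the expected versions are the ones this README reports as tested (PyTorch 1.13.1 with CUDA 11.7).

```python
# Versions this README reports as tested.
TESTED_TORCH = "1.13.1"
TESTED_CUDA = "11.7"


def matches_tested_setup(torch_version, cuda_version):
    """Return True if the given versions match the tested PyTorch/CUDA pair.

    `torch_version` may carry a local build suffix such as "+cu117";
    `cuda_version` is None for CPU-only PyTorch builds.
    """
    base_version = str(torch_version).split("+")[0]
    return base_version == TESTED_TORCH and cuda_version == TESTED_CUDA


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment.")
    else:
        print("PyTorch:", torch.__version__, "| CUDA build:", torch.version.cuda)
        print("CUDA device visible:", torch.cuda.is_available())
        print("Matches tested setup:",
              matches_tested_setup(torch.__version__, torch.version.cuda))
```

A mismatch does not necessarily mean the code will fail; it only indicates that the environment differs from the tested one.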

The CityDreamer source code is tested with PyTorch 1.13.1 and CUDA 11.7 on Python 3.8. You can use the following command to install PyTorch with CUDA 11.7.

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 --extra-index-url https://download.pytorch.org/whl/cu117

After that, the Python dependencies can be installed as follows.

git clone https://github.com/hzxie/city-dreamer

cd city-dreamer

CITY_DREAMER_HOME=`pwd`

pip install -r requirements.txt

The CUDA extensions can be compiled and installed with the following commands.

cd $CITY_DREAMER_HOME/extensions

for e in `ls -d */`
do
  cd $CITY_DREAMER_HOME/extensions/$e
  pip install .
done

Both the iterative demo and command line interface (CLI) by default load the pretrained models for Unbounded Layout Generator, Background Stuff Generator, and Building Instance Generator from output/sampler.pth, output/gancraft-bg.pth, and output/gancraft-fg.pth, respectively. You have the option to specify a different location using runtime arguments.

├── ...
└── city-dreamer
    └── demo
    |   ├── ...
    |   └── run.py
    └── scripts
    |   ├── ...
    |   └── inference.py
    └── output
        ├── gancraft-bg.pth
        ├── gancraft-fg.pth
        └── sampler.pth
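Before launching either script, it may be worth checking that the three default checkpoints are in place. The sketch below does that with the standard library only; `find_missing_checkpoints` is a hypothetical helper, not part of the repository, and it assumes it is run from the city-dreamer directory shown in the layout above.

```python
from pathlib import Path

# Default checkpoint locations named in this README, relative to the repo root.
DEFAULT_CHECKPOINTS = {
    "Unbounded Layout Generator": "output/sampler.pth",
    "Background Stuff Generator": "output/gancraft-bg.pth",
    "Building Instance Generator": "output/gancraft-fg.pth",
}


def find_missing_checkpoints(repo_root):
    """Return {component: path} for every default checkpoint not found on disk."""
    root = Path(repo_root)
    return {
        name: str(root / rel_path)
        for name, rel_path in DEFAULT_CHECKPOINTS.items()
        if not (root / rel_path).is_file()
    }


if __name__ == "__main__":
    missing = find_missing_checkpoints(".")
    if missing:
        for name, path in missing.items():
            print(f"Missing checkpoint for {name}: {path}")
    else:
        print("All default checkpoints found.")
```

If a checkpoint is stored elsewhere, the scripts' runtime arguments can point to it instead, as noted above.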

Moreover, both scripts accept the runtime arguments --patch_height and --patch_width, which divide images into patches of size patch_height × patch_width. For a single NVIDIA RTX 3090 GPU with 24 GB of VRAM, both patch_height and patch_width are set to 5. You can adjust the values to match your GPU's VRAM size.
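To make the idea of patch-wise processing concrete, here is a minimal sketch of tiling an image into non-overlapping patches. It takes the description above at face value (the two arguments give the patch size); `iter_patches` and the image size used in the example are hypothetical, and the repository's scripts may interpret these arguments differently.

```python
def iter_patches(image_height, image_width, patch_height=5, patch_width=5):
    """Yield (top, left, bottom, right) boxes that tile an image without overlap.

    Patches on the bottom and right edges are clipped to the image bounds.
    """
    if patch_height <= 0 or patch_width <= 0:
        raise ValueError("patch dimensions must be positive")
    for top in range(0, image_height, patch_height):
        for left in range(0, image_width, patch_width):
            bottom = min(top + patch_height, image_height)
            right = min(left + patch_width, image_width)
            yield top, left, bottom, right


if __name__ == "__main__":
    # Hypothetical 12 x 10 image, tiled with the default patch size.
    boxes = list(iter_patches(12, 10))
    print(len(boxes), "patches; last box:", boxes[-1])
```

Handling one patch at a time means only that patch's intermediate results need to be held in memory at once, which is presumably why these arguments are tied to VRAM size.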

python3 demo/run.py

Then, open http://localhost:3186 in your browser.

python3 scripts/inference.py

The generated video is located at output/rendering.mp4.

This project is licensed under NTU S-Lab License 1.0. Redistribution and use should follow this license.