This package contains the accompanying code for the following paper:

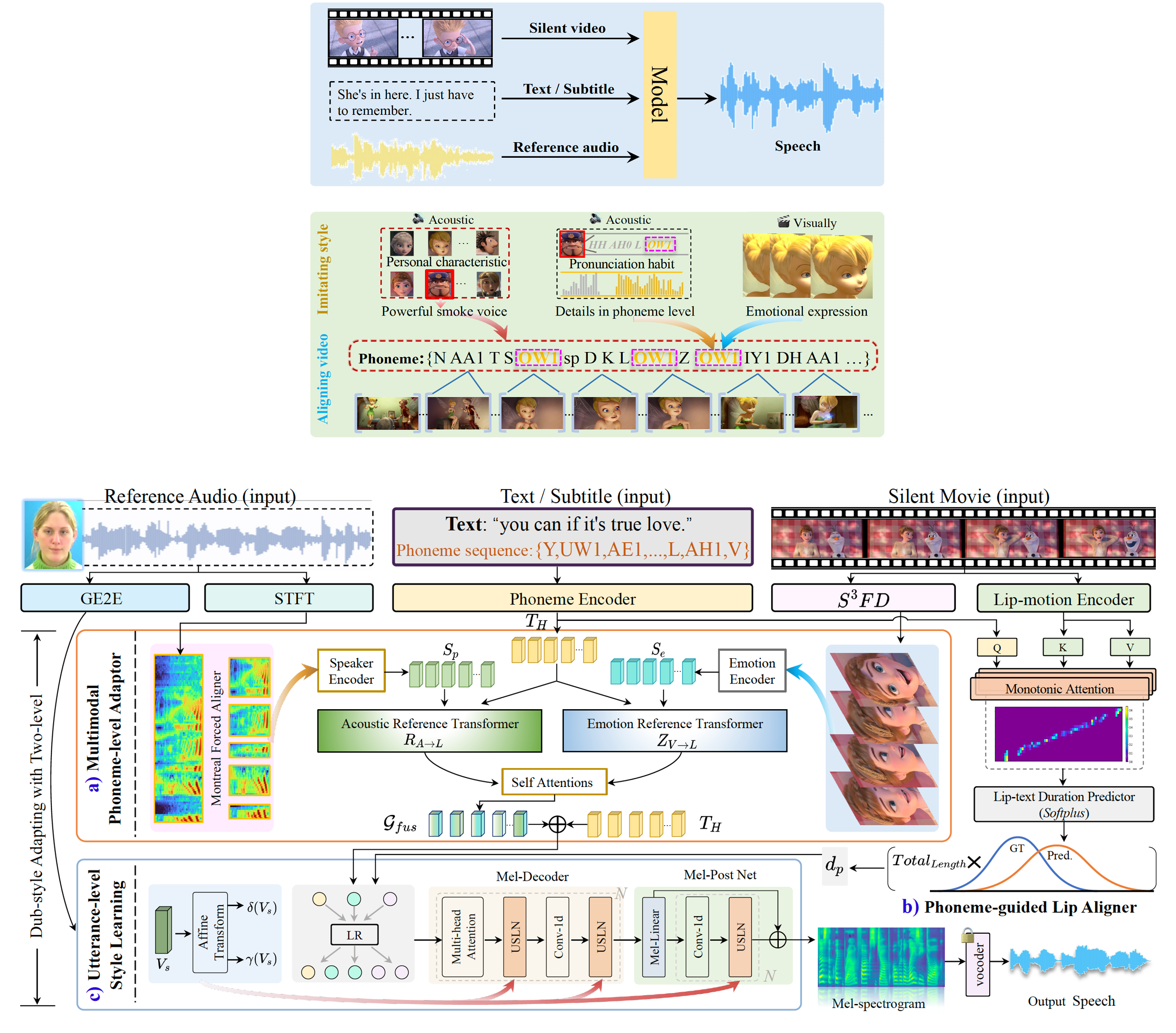

"StyleDubber: Towards Multi-Scale Style Learning for Movie Dubbing", which appeared as a long paper in Findings of ACL 2024.

- Release StyleDubber's training and inference code.

- Release pretrained weights.

- Release the raw data and preprocessed data features of the GRID dataset.

- Release metric testing scripts (SECS, WER_Whisper).

- Update README.md (How to use).

- Release the preprocessed data features of the V2C-Animation dataset (chenqi-Denoise2).
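The two metrics named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the released scripts. SECS is taken to be the cosine similarity between speaker embeddings of the generated and reference speech. The speaker encoder is not assumed here, so the function takes precomputed vectors. WER is the word-level Levenshtein distance divided by the number of reference words. For WER_Whisper, the hypothesis would be a Whisper transcript of the generated audio; transcription and any text normalization are omitted. The function names `secs` and `wer` are hypothetical.

```python
import math
from typing import Sequence


def secs(emb_a: Sequence[float], emb_b: Sequence[float]) -> float:
    """Cosine similarity between two speaker embeddings (SECS).

    Assumption: the embeddings come from some pretrained speaker encoder;
    which encoder the official scripts use is not assumed here.
    """
    dot = sum(a * b for a, b in zip(emb_a, emb_b))
    norm_a = math.sqrt(sum(a * a for a in emb_a))
    norm_b = math.sqrt(sum(b * b for b in emb_b))
    return dot / (norm_a * norm_b)


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance / number of reference words.

    Assumption: both strings are already normalized (case, punctuation).
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of reference word r
                curr[j - 1] + 1,         # insertion of hypothesis word h
                prev[j - 1] + (r != h),  # substitution, or match at no cost
            )
        prev = curr
    return prev[len(hyp)] / len(ref)
```

For example, `wer("bin blue at f two now", "bin blue at f two soon")` counts one substitution over six reference words, giving about 0.167.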

- GRID (BaiduDrive (code: GRID) / GoogleDrive)

- V2C-Animation dataset (chenqi-Denoise2)

We provide pretrained checkpoints for the GRID and V2C-Animation datasets:

- GRID: https://pan.baidu.com/s/1Mj3MN4TuAEc7baHYNqwbYQ (code: y8kb), Google Drive

- V2C-Animation dataset (chenqi-Denoise2): https://pan.baidu.com/s/1hZBUszTaxCTNuHM82ljYWg (code: n8p5), Google Drive

We use Python 3.8.18 and CUDA 11.5; other compatible versions may also work.

Both training and inference are implemented with PyTorch on a GeForce RTX 4090 GPU.
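To compare your environment with the versions listed above, a small check script like the one below may help. It is a hypothetical helper, not part of the repository. It reports the Python version and, if PyTorch is installed, the CUDA version PyTorch was built with and whether a GPU is visible, so it can be run before or after installing the requirements. Note that `torch.version.cuda` is the build-time CUDA version of PyTorch, which need not equal the system CUDA 11.5 mentioned here.

```python
import importlib.util
import sys


def check_environment() -> dict:
    """Collect Python / PyTorch / CUDA information for the current environment."""
    report = {
        "python": ".".join(str(v) for v in sys.version_info[:3]),
        # This README reports Python 3.8.18; only major.minor is compared here.
        "python_is_3_8": tuple(sys.version_info[:2]) == (3, 8),
        "torch": None,
        "torch_cuda": None,
        "cuda_available": False,
    }
    # Only query PyTorch if it is installed, so the check also works
    # before `pip install -r requirements.txt`.
    if importlib.util.find_spec("torch") is not None:
        import torch

        report["torch"] = torch.__version__
        report["torch_cuda"] = torch.version.cuda  # None for CPU-only builds
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```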

conda create -n style_dubber python=3.8.18

conda activate style_dubber

pip install -r requirements.txt

You need to replace the paths in preprocess_config (see "./ModelConfig_V2C/model_config/MovieAnimation/config_all.txt") with your own paths.
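If the paths in a config file all share one root directory, updating them amounts to a prefix substitution. The helper below is a hypothetical sketch that assumes exactly that. It does not parse `config_all.txt` or assume any particular keys; it replaces one string with another across the file and keeps a `.bak` copy of the original. The same call applies to the GRID config (`./ModelConfig_GRID/model_config/GRID/config_all.txt`).

```python
from pathlib import Path


def replace_root(config_path: str, old_root: str, new_root: str) -> int:
    """Replace every occurrence of `old_root` with `new_root` in a text config.

    Hypothetical helper: assumes the dataset paths in the config share a
    common root prefix. Writes a `.bak` copy before modifying the file and
    returns the number of replacements made.
    """
    if not old_root:
        raise ValueError("old_root must be a non-empty string")
    path = Path(config_path)
    text = path.read_text(encoding="utf-8")
    count = text.count(old_root)
    if count:
        # Keep the original next to the config so the edit can be undone.
        backup = path.with_name(path.name + ".bak")
        backup.write_text(text, encoding="utf-8")
        path.write_text(text.replace(old_root, new_root), encoding="utf-8")
    return count
```

For example, `replace_root("./ModelConfig_V2C/model_config/MovieAnimation/config_all.txt", "/old/root", "/your/data/root")`, where both roots are placeholders for the prefix found in the file and your own data directory.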

To train on the V2C-Animation dataset (153 cartoon speakers), run:

python train_StyleDubber_V2C.py

You need to replace the paths in preprocess_config (see "./ModelConfig_GRID/model_config/GRID/config_all.txt") with your own paths.

Training GRID dataset (33 real-world speakers), please run:

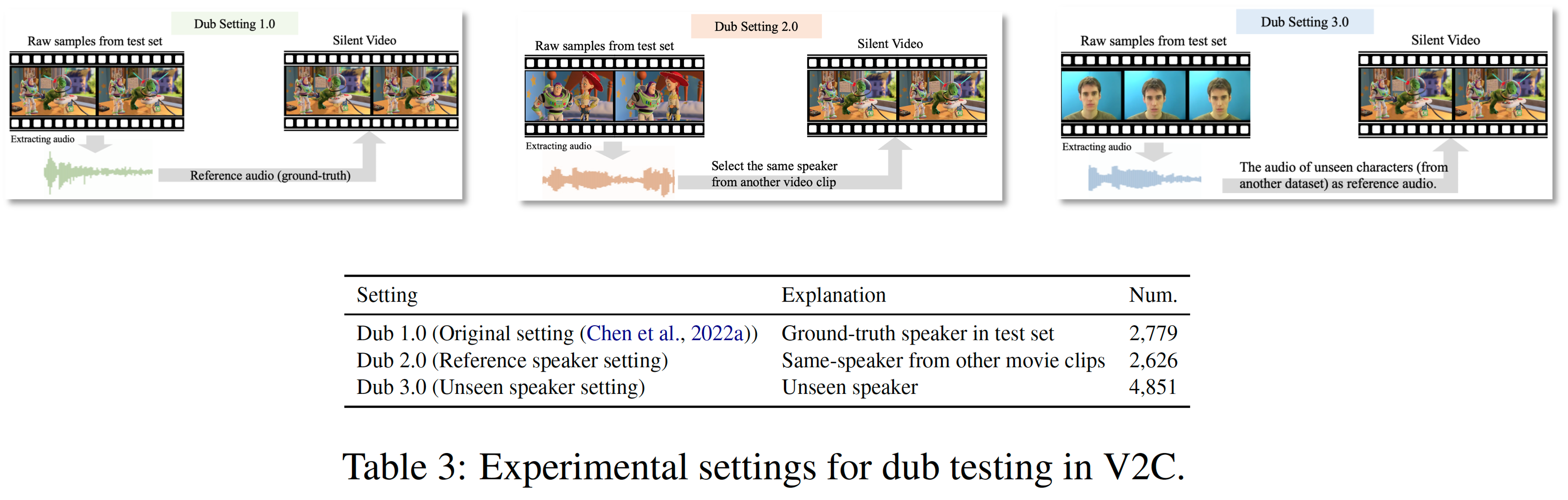

python train_StyleDubber_GRID.pypython 0_evaluate_V2C_Setting1.py --restore_step 47000python 0_evaluate_V2C_Setting2.py --restore_step 47000python 0_evaluate_V2C_Setting3.py --restore_step 47000If you find our work useful, please consider citing:

@article{cong2024styledubber,
  title={StyleDubber: Towards Multi-Scale Style Learning for Movie Dubbing},
  author={Cong, Gaoxiang and Qi, Yuankai and Li, Liang and Beheshti, Amin and Zhang, Zhedong and Hengel, Anton van den and Yang, Ming-Hsuan and Yan, Chenggang and Huang, Qingming},
  journal={arXiv preprint arXiv:2402.12636},
  year={2024}
}

We would like to thank the authors of previous related projects for generously sharing their code and insights: CDFSE_FastSpeech2, Multimodal Transformer, SMA, Meta-StyleSpeech, and FastSpeech2.