The inspiration for this project comes from ultralytics/yolov3 and AlexeyAB/darknet. Thanks!

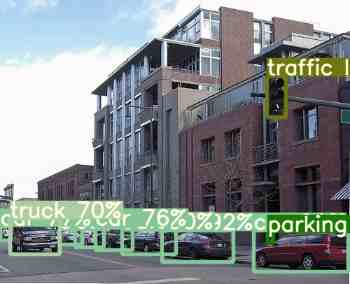

This project is a YOLOv4 object detection system built with PyTorch.

The goal of this implementation is to be simple, highly extensible, and easy to integrate into your own projects. This implementation is a work in progress; new features are currently being implemented.


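Clone the repository and install the dependencies:

```bash
git clone https://github.com/Lornatang/YOLOv4-PyTorch.git
cd YOLOv4-PyTorch/
pip install -r requirements.txt
```

Download the pretrained weights (from the repository root):

```bash
cd weights/
bash download_weights.sh
```

Download a dataset (from the repository root; run only the script for the dataset you need):

```bash
cd data/
bash get_voc_dataset.sh        # Pascal VOC 2007+2012
bash get_coco2014_dataset.sh   # COCO 2014
bash get_coco2017_dataset.sh   # COCO 2017
```

- Example (COCO2017)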

To train on COCO2014/COCO2017, run:

```bash
python train.py --config-file configs/COCO-Detection/yolov5-small.yaml --data data/coco2017.yaml --weights ""
```

- Example (VOC2007+2012)

To train on VOC07+12, run:

```bash
python train.py --config-file configs/PascalVOC-Detection/yolov5-small.yaml --data data/voc2007.yaml --weights ""
```

- Other training methods

Normal training: after downloading the COCO data with `data/get_coco2014_dataset.sh`, start training with:

```bash
python train.py --config-file configs/COCO-Detection/yolov5-small.yaml --data data/coco2014.yaml --weights ""
```

Each epoch trains on 117,263 images from the COCO train and validation sets and tests on 5,000 images from the COCO validation set.
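How many optimizer steps an epoch takes follows directly from the 117,263-image epoch size and the batch size. The README does not state the default batch size, so the values below are illustrative assumptions; `iterations_per_epoch` is a hypothetical helper, and the `drop_last` flag mirrors the behaviour of PyTorch's `DataLoader(drop_last=...)` option.

```python
import math

# Number of COCO images used per training epoch, as stated above.
TRAIN_IMAGES = 117_263


def iterations_per_epoch(num_images: int, batch_size: int, drop_last: bool = False) -> int:
    """Return how many optimizer steps one epoch takes for a given batch size.

    With drop_last=True the final incomplete batch is skipped; otherwise it
    is kept as a smaller last batch.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if drop_last:
        return num_images // batch_size
    return math.ceil(num_images / batch_size)


if __name__ == "__main__":
    # Illustrative batch sizes only; check the training config for the real value.
    for bs in (16, 32, 64):
        print(f"batch size {bs:>2}: {iterations_per_epoch(TRAIN_IMAGES, bs)} iterations/epoch")
```

For example, with a batch size of 64 an epoch is 1,833 iterations (1,832 full batches plus one batch of 15 images).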

Resume training: to resume training from `weights/checkpoint.pth`, run:

```bash
python train.py --config-file configs/COCO-Detection/yolov5-small.yaml --data data/coco2014.yaml --resume
```

All numbers were obtained on a local server with two NVIDIA GeForce RTX 2080 SUPER GPUs connected via NVLink. The software used was PyTorch 1.5.1, CUDA 10.2, and cuDNN 7.6.5.

- Example (COCO2017)

To evaluate on COCO2014/COCO2017, run:

```bash
python test.py --config-file configs/COCO-Detection/yolov5-small.yaml --data data/coco2017.yaml --weights weights/COCO-Detection/yolov5-small.pth
```

- Example (VOC2007+2012)

To evaluate on VOC07+12, run:

```bash
python test.py --config-file configs/PascalVOC-Detection/yolov5-small.yaml --data data/voc2007.yaml --weights weights/PascalVOC-Detection/yolov5-small.pth
```

Common Settings for VOC Models

- All VOC models were trained on `voc2007_trainval` + `voc2012_trainval` and evaluated on `voc2007_test`.

- The default settings are not directly comparable with the standard settings of YOLOv4 or Detectron. For example, our default training data augmentation uses scale jittering in addition to horizontal flipping.

- For YOLOv3/YOLOv4, we provide baselines based on 2 different backbone combinations:

- Darknet-53: Use a ResNet+VGG backbone with standard conv and FC heads for mask and box prediction, respectively.

- CSPDarknet-53: Use a ResNet+CSPNet backbone with standard conv and FC heads for mask and box prediction, respectively. It obtains the best speed/accuracy tradeoff, but the other backbone is still useful for research.
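The settings above mention horizontal flipping and scale jittering. A minimal sketch of how both interact with the normalized `label_idx x_center y_center width height` label format follows; it is not the repository's augmentation code, and the base size of 416, the 0.5 to 1.5 range, and the stride of 32 are assumptions chosen for illustration.

```python
import random
from typing import List, Optional, Tuple

# One label row: (label_idx, x_center, y_center, width, height), with
# coordinates normalized to [0, 1] as in the custom-dataset label format.
Label = Tuple[int, float, float, float, float]


def hflip_labels(labels: List[Label]) -> List[Label]:
    """Mirror boxes horizontally. Only x_center changes (to 1 - x_center);
    width, height and y_center are unaffected."""
    return [(c, 1.0 - x, y, w, h) for c, x, y, w, h in labels]


def jittered_size(base: int = 416, low: float = 0.5, high: float = 1.5,
                  stride: int = 32, rng: Optional[random.Random] = None) -> int:
    """Sample a square input size near base * U(low, high), rounded to the
    nearest multiple of `stride` (assumed hyperparameters).

    Resizing the whole image uniformly leaves normalized labels unchanged,
    so only the image itself must be rescaled."""
    rng = rng or random.Random()
    size = base * rng.uniform(low, high)
    return max(stride, round(size / stride) * stride)
```

Because labels are normalized, flipping is the only one of the two steps that touches label values; scale jittering only changes the image resolution fed to the network.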

| Model | Train time (s/iter) | Inference time (ms/im) | Train mem (GB) | AP (test) | AP50 | FPS | Params | FLOPs | Download |
|---|---|---|---|---|---|---|---|---|---|
| MobileNet-v1 | 9.6 | 2.5 | 4.0 | 31.2 | 61.3 | 400 | 4.95M | 11.3B | model |
| VGG16 | - | - | - | - | - | - | - | - | - |
| YOLOv3-Tiny | 10.5 | 1.5 | 3.7 | 24.3 | 53.1 | 667 | 7.96M | 10.5B | model |
| YOLOv3 | 2.4 | 6.7 | 6.4 | 57.9 | 82.6 | 149 | 61.79M | 155.6B | model |
| YOLOv3-SPP | 2.4 | 6.7 | 6.3 | 59.7 | 83.3 | 149 | 62.84M | 156.5B | model |
| YOLOv4-Tiny | 12.3 | 1.5 | 2.7 | 20.0 | 46.0 | 667 | 3.10M | 6.5B | model |
| YOLOv4 | 2.1 | 7.5 | 6.7 | 61.4 | 83.7 | 133 | 60.52M | 131.6B | model |
| YOLOv5-small | 5.4 | 2.3 | 1.7 | 49.3 | 75.9 | 435 | 7.31M | 17.0B | model |
| YOLOv5-medium | 3.6 | 3.8 | 3.1 | 56.5 | 80.3 | 263 | 21.56M | 51.7B | model |
| YOLOv5-large | 2.8 | 6.1 | 5.1 | 59.4 | 81.6 | 164 | 47.50M | 116.4B | model |
| YOLOv5-xlarge | 1.4 | 10.8 | 7.2 | 60.2 | 82.6 | 93 | 88.56M | 220.6B | model |
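Every FPS entry in the VOC table above agrees with FPS = 1000 / (inference time in ms), rounded to the nearest integer, which suggests single-image (batch size 1) throughput; the README does not state this explicitly, so treat it as an inference from the numbers. The check below copies the two columns from the table; `fps_from_latency` is a hypothetical helper.

```python
# (inference time in ms/image, FPS) pairs copied from the VOC table above.
VOC_LATENCY_MS = {
    "MobileNet-v1": (2.5, 400),
    "YOLOv3-Tiny": (1.5, 667),
    "YOLOv3": (6.7, 149),
    "YOLOv3-SPP": (6.7, 149),
    "YOLOv4-Tiny": (1.5, 667),
    "YOLOv4": (7.5, 133),
    "YOLOv5-small": (2.3, 435),
    "YOLOv5-medium": (3.8, 263),
    "YOLOv5-large": (6.1, 164),
    "YOLOv5-xlarge": (10.8, 93),
}


def fps_from_latency(ms_per_image: float) -> int:
    """Frames per second implied by a per-image latency in milliseconds."""
    return round(1000.0 / ms_per_image)


if __name__ == "__main__":
    for model, (ms, fps) in VOC_LATENCY_MS.items():
        print(f"{model:<14} {ms:>5} ms -> {fps_from_latency(ms)} FPS (table: {fps})")
```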

Common Settings for COCO Models

- All COCO models were trained on `train2017` and evaluated on `val2017`.

- The default settings are not directly comparable with the standard settings of YOLOv4 or Detectron. For example, our default training data augmentation uses scale jittering in addition to horizontal flipping.

- For YOLOv3/YOLOv4, we provide baselines based on 3 different backbone combinations:

- Darknet-53: Use a ResNet+VGG backbone with standard conv and FC heads for mask and box prediction, respectively.

- CSPDarknet-53: Use a ResNet+CSPNet backbone with standard conv and FC heads for mask and box prediction, respectively. It obtains the best speed/accuracy tradeoff, but the other two are still useful for research.

- GhostDarknet-53: Use a ResNet+Ghost backbone with standard conv and FC heads for mask and box prediction, respectively.

| Model | Train time (s/iter) | Inference time (ms/im) | Train mem (GB) | AP (test) | AP50 | FPS | Params | FLOPs | Download |
|---|---|---|---|---|---|---|---|---|---|
| MobileNet-v1 | - | - | - | - | - | - | - | - | - |
| VGG16 | - | - | - | - | - | - | - | - | - |
| YOLOv3-Tiny | - | - | - | - | - | - | - | - | - |
| YOLOv3 | - | - | - | - | - | - | - | - | - |
| YOLOv3-SPP | - | - | - | - | - | - | - | - | - |
| YOLOv4 | - | - | - | - | - | - | - | - | - |
| YOLOv4-Tiny | - | - | - | - | - | - | - | - | - |
| YOLOv5-small | - | - | - | - | - | - | - | - | - |
| YOLOv5-medium | - | - | - | - | - | - | - | - | - |
| YOLOv5-large | - | - | - | - | - | - | - | - | - |
| YOLOv5-xlarge | - | - | - | - | - | - | - | - | - |

`detect.py` runs inference on a variety of sources:

```bash
python detect.py --cfg configs/COCO-Detection/yolov5-small.yaml --data data/coco2014.yaml --weights weights/COCO-Detection/yolov5-small.pth --source ...
```

- Image: `--source file.jpg`
- Video: `--source file.mp4`
- Directory: `--source dir/`
- Webcam: `--source 0`
- HTTP stream: `--source https://v.qq.com/x/page/x30366izba3.html`
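The `--source` forms listed above can be told apart from the string alone. The sketch below shows one plausible dispatch order; it is not taken from `detect.py`, and the function name `classify_source` and the extension sets are hypothetical.

```python
from pathlib import Path

# Hypothetical extension lists; the sets actually accepted by detect.py may differ.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv"}


def classify_source(source: str) -> str:
    """Guess which kind of input a --source value refers to."""
    if source.isnumeric():
        return "webcam"  # e.g. "0" selects the first camera device
    # Check URLs before file extensions: a page URL may end in ".html".
    if source.lower().startswith(("http://", "https://", "rtsp://", "rtmp://")):
        return "stream"
    suffix = Path(source).suffix.lower()
    if suffix in IMAGE_EXTS:
        return "image"
    if suffix in VIDEO_EXTS:
        return "video"
    if source.endswith(("/", "\\")) or Path(source).is_dir():
        return "directory"
    raise ValueError(f"unrecognized source: {source!r}")
```

Checking the numeric and URL cases first keeps them from being misread as file paths.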

Run the commands below to create a custom model definition, replacing `your-dataset-num-classes` with the number of classes in your dataset.

```bash
# Move to the configs directory
cd configs/

# Create the custom model 'yolov3-custom.yaml'.
# (In fact, only two lines of parameters need to be modified; see create_model.sh.)
bash create_model.sh your-dataset-num-classes
```

Add the class names to `data/custom.yaml`. This file should have one row per class name.
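The class count passed to `create_model.sh` matters because it fixes the width of each YOLO detection layer. In standard YOLOv3/YOLOv4 heads every anchor predicts 4 box offsets, 1 objectness score, and one score per class. The sketch assumes 3 anchors per scale (the standard YOLOv3/YOLOv4 setting); which two lines `create_model.sh` actually edits is not shown here, and `head_output_channels` is a hypothetical helper.

```python
def head_output_channels(num_classes: int, anchors_per_scale: int = 3) -> int:
    """Output channels of each YOLO detection-layer conv:
    per anchor, 4 box offsets + 1 objectness score + one score per class."""
    if num_classes < 1:
        raise ValueError("num_classes must be >= 1")
    return anchors_per_scale * (num_classes + 5)


if __name__ == "__main__":
    print(head_output_channels(80))  # COCO: 3 * (80 + 5)
    print(head_output_channels(20))  # Pascal VOC: 3 * (20 + 5)
```

With 80 COCO classes this gives 255 channels; with the 20 VOC classes it gives 75.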

Move the images of your dataset to data/custom/images/.

Move your annotations to `data/custom/labels/`. The dataloader expects the annotation file for the image `data/custom/images/train.jpg` to be at `data/custom/labels/train.txt`. Each row in the annotation file defines one bounding box using the syntax `label_idx x_center y_center width height`. The coordinates should be scaled to [0, 1], and `label_idx` should be zero-indexed and correspond to the row number of the class name in `data/custom/classes.names`.
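The two conventions above, the `images/` to `labels/` path mapping and the normalized label row, can be sketched as follows. Both helpers (`label_path_for`, `to_yolo_line`) are hypothetical, and the input box format `(x_min, y_min, x_max, y_max)` in pixels is an assumption about how your source annotations are stored.

```python
from pathlib import PurePosixPath
from typing import Tuple


def label_path_for(image_path: str) -> str:
    """Map .../images/<name>.<ext> to .../labels/<name>.txt."""
    parts = list(PurePosixPath(image_path).parts)
    dirs = parts[:-1]
    # Replace the last "images" directory component (raises if absent).
    idx = len(dirs) - 1 - dirs[::-1].index("images")
    parts[idx] = "labels"
    return str(PurePosixPath(*parts).with_suffix(".txt"))


def to_yolo_line(label_idx: int, box_xyxy: Tuple[float, float, float, float],
                 img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to the row
    'label_idx x_center y_center width height', normalized to [0, 1]."""
    x_min, y_min, x_max, y_max = box_xyxy
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image with positive area")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{label_idx} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"
```

For example, a 200×200 px box at (100, 50) to (300, 250) in a 640×480 image becomes `0 0.312500 0.312500 0.312500 0.416667` for class 0.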

In data/custom/train.txt and data/custom/val.txt, add paths to images that will be used as train and validation data respectively.
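If your images are not already split, a minimal sketch for generating the two lists is shown below. The function name `write_split_lists`, the 10% validation fraction, the fixed seed, and the extension set are all assumptions for illustration, not part of the repository.

```python
import random
from pathlib import Path
from typing import Tuple

# Hypothetical set of image extensions to include in the lists.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def write_split_lists(image_dir: str, out_dir: str, val_fraction: float = 0.1,
                      seed: int = 0) -> Tuple[int, int]:
    """Randomly split the images in `image_dir` into train.txt and val.txt
    (one image path per line) inside `out_dir`; return (n_train, n_val)."""
    images = sorted(str(p) for p in Path(image_dir).iterdir()
                    if p.is_file() and p.suffix.lower() in IMAGE_EXTS)
    random.Random(seed).shuffle(images)  # fixed seed -> reproducible split
    n_val = int(len(images) * val_fraction)
    val, train = images[:n_val], images[n_val:]
    out = Path(out_dir)
    (out / "train.txt").write_text("".join(f"{p}\n" for p in train))
    (out / "val.txt").write_text("".join(f"{p}\n" for p in val))
    return len(train), len(val)
```

With the layout described above, calling `write_split_lists("data/custom/images", "data/custom")` would write `data/custom/train.txt` and `data/custom/val.txt`.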

To train on the custom dataset, run:

```bash
python train.py --config-file configs/yolov3-custom.yaml --data data/custom.yaml --epochs 100
```

YOLOv4: Optimal Speed and Accuracy of Object Detection

Alexey Bochkovskiy, Chien-Yao Wang, Hong-Yuan Mark Liao

Abstract

There are a huge number of features which are said to improve Convolutional Neural Network (CNN) accuracy. Practical testing of combinations of such features on large datasets, and theoretical justification of the result, is required. Some features operate on certain models exclusively and for certain problems exclusively, or only for small-scale datasets; while some features, such as batch-normalization and residual-connections, are applicable to the majority of models, tasks, and datasets. We assume that such universal features include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-adversarial-training (SAT) and Mish-activation. We use new features: WRC, CSP, CmBN, SAT, Mish activation, Mosaic data augmentation, CmBN, DropBlock regularization, and CIoU loss, and combine some of them to achieve state-of-the-art results: 43.5% AP (65.7% AP50) for the MS COCO dataset at a realtime speed of ~65 FPS on Tesla V100. Source code is at https://github.com/AlexeyAB/darknet.
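Two of the features named in the abstract have compact closed forms: Mish, mish(x) = x · tanh(ln(1 + eˣ)), and the CIoU loss, 1 − IoU + ρ²/c² + αv, where ρ is the distance between box centres, c the diagonal of the smallest enclosing box, v = (4/π²)(arctan(w_gt/h_gt) − arctan(w/h))² an aspect-ratio term, and α = v / ((1 − IoU) + v). The scalar pure-Python sketch below follows those published definitions for illustration only; this repository's tensor-based implementations may differ in details such as epsilon handling.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def mish(x: float) -> float:
    """Mish activation: x * tanh(softplus(x)), softplus(x) = ln(1 + e^x)."""
    # For large x, softplus(x) is ~x; skip exp() to avoid overflow.
    softplus = x if x > 20 else math.log1p(math.exp(x))
    return x * math.tanh(softplus)


def ciou_loss(pred: Box, target: Box, eps: float = 1e-9) -> float:
    """Complete-IoU loss: 1 - IoU + rho^2 / c^2 + alpha * v."""
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Plain IoU from the intersection and union areas.
    iw = max(0.0, min(px2, tx2) - max(px1, tx1))
    ih = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = iw * ih
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Squared centre distance over squared diagonal of the enclosing box.
    rho2 = ((px1 + px2 - tx1 - tx2) ** 2 + (py1 + py2 - ty1 - ty2) ** 2) / 4
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)
    c2 = cw ** 2 + ch ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    v = (4 / math.pi ** 2) * (math.atan(tw / (th + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v
```

Unlike plain IoU loss, the centre-distance term still produces a useful signal for non-overlapping boxes: two disjoint unit squares at (0, 0) and (2, 2) give a loss of 1 + 8/18 rather than a flat 1.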


```bibtex
@article{yolov4,
  title={YOLOv4: Optimal Speed and Accuracy of Object Detection},
  author={Alexey Bochkovskiy and Chien-Yao Wang and Hong-Yuan Mark Liao},
  journal={arXiv},
  year={2020}
}
```