This template makes it easy for you to manage papers.

- Add paper information by running `./add_paper_info.sh` or `./add_paper_info.sh <name>`.
- Run `./refresh_readme.sh` to refresh the README.

sparsegpt.prototxt

paper {

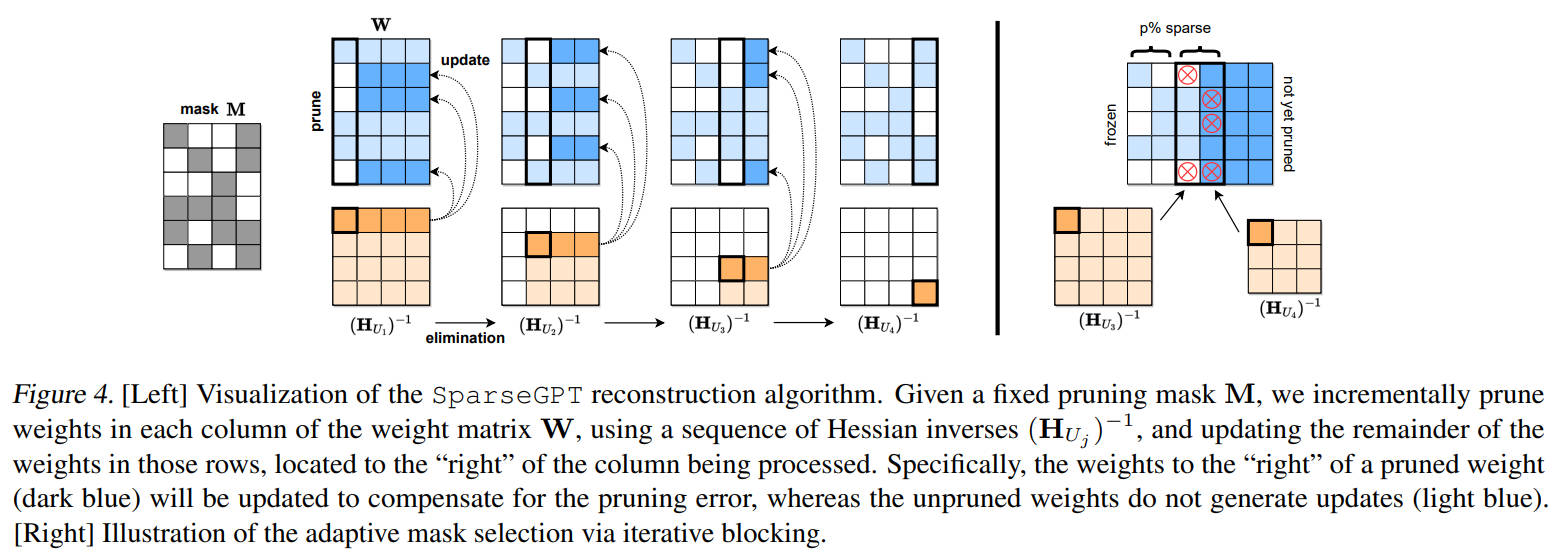

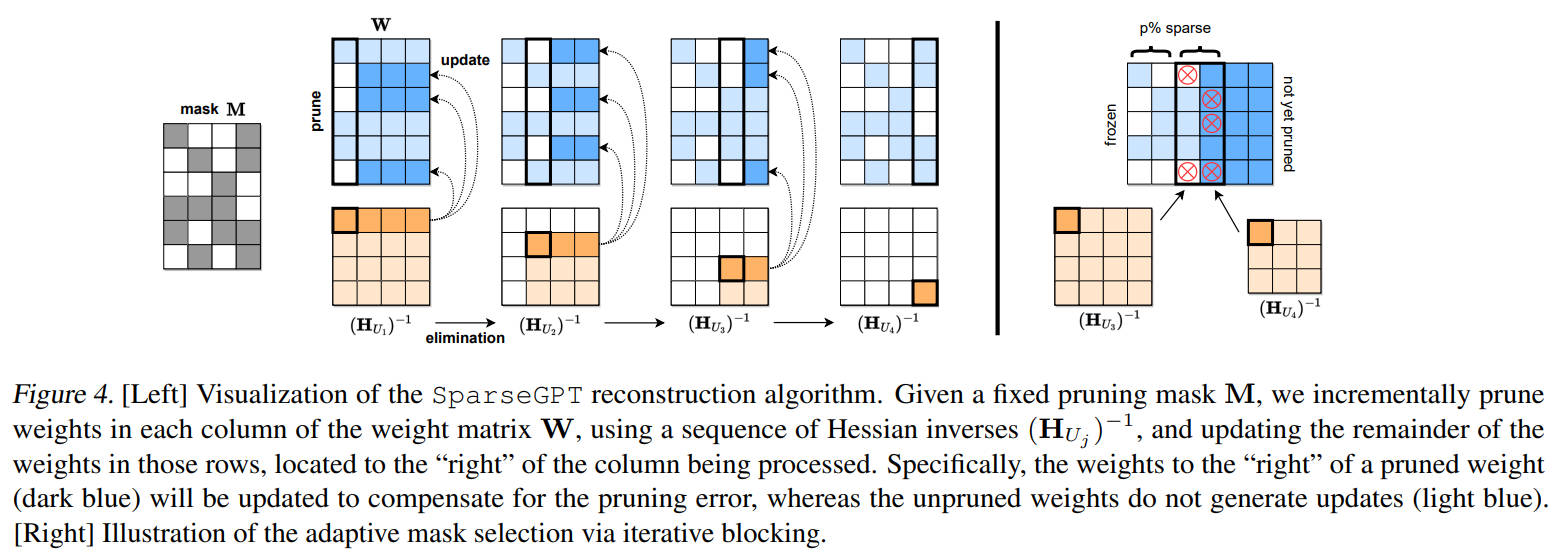

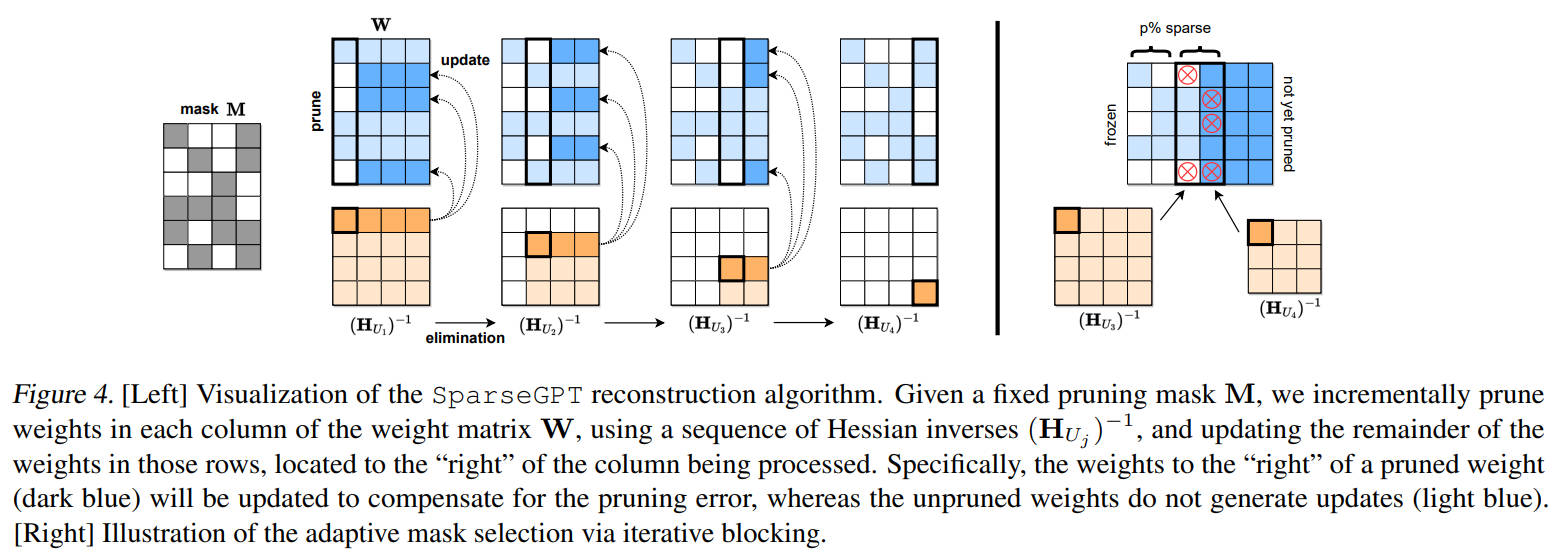

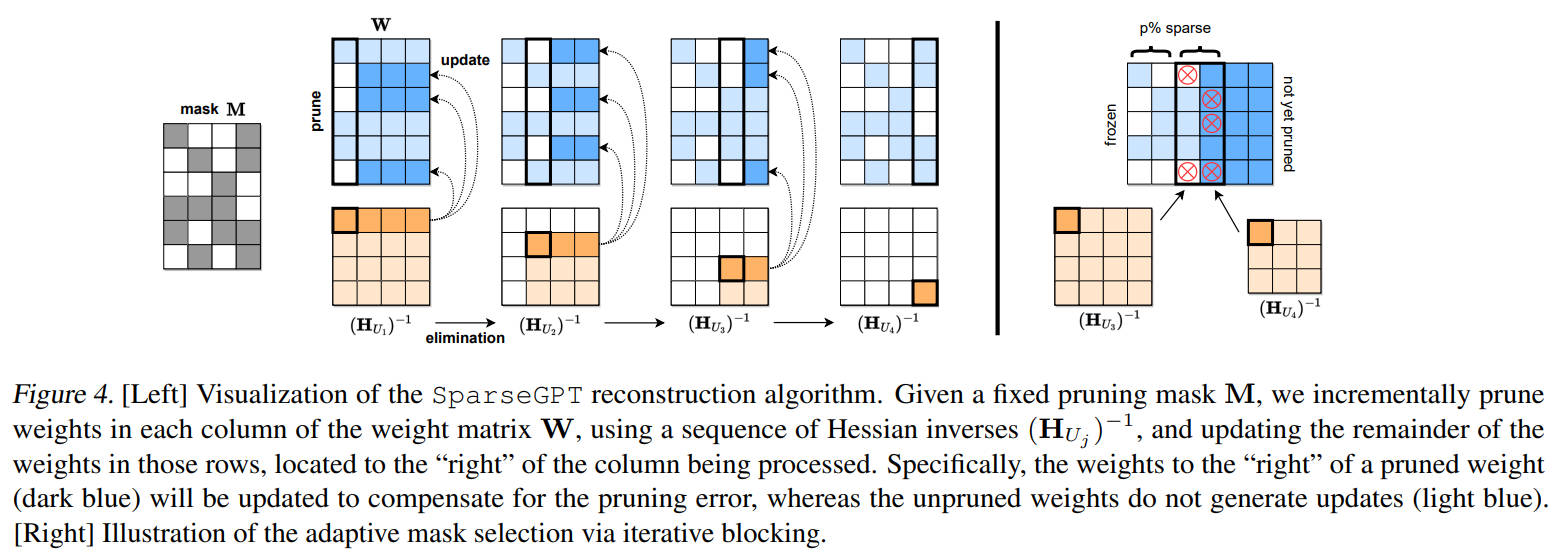

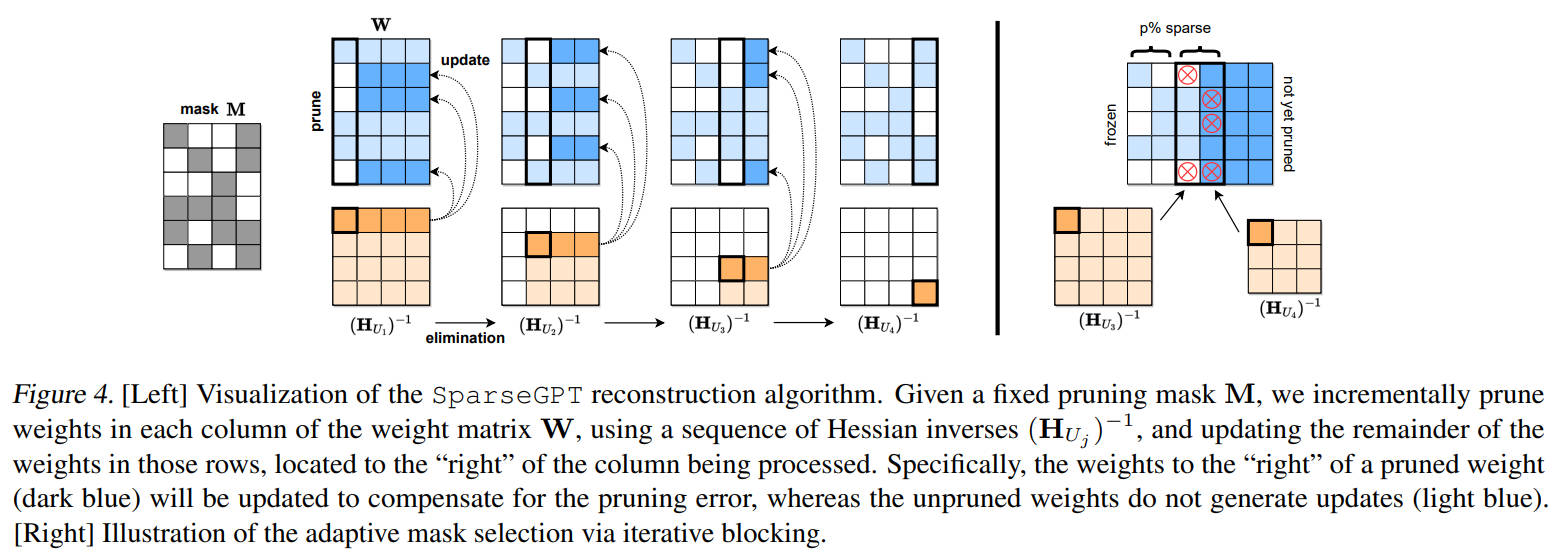

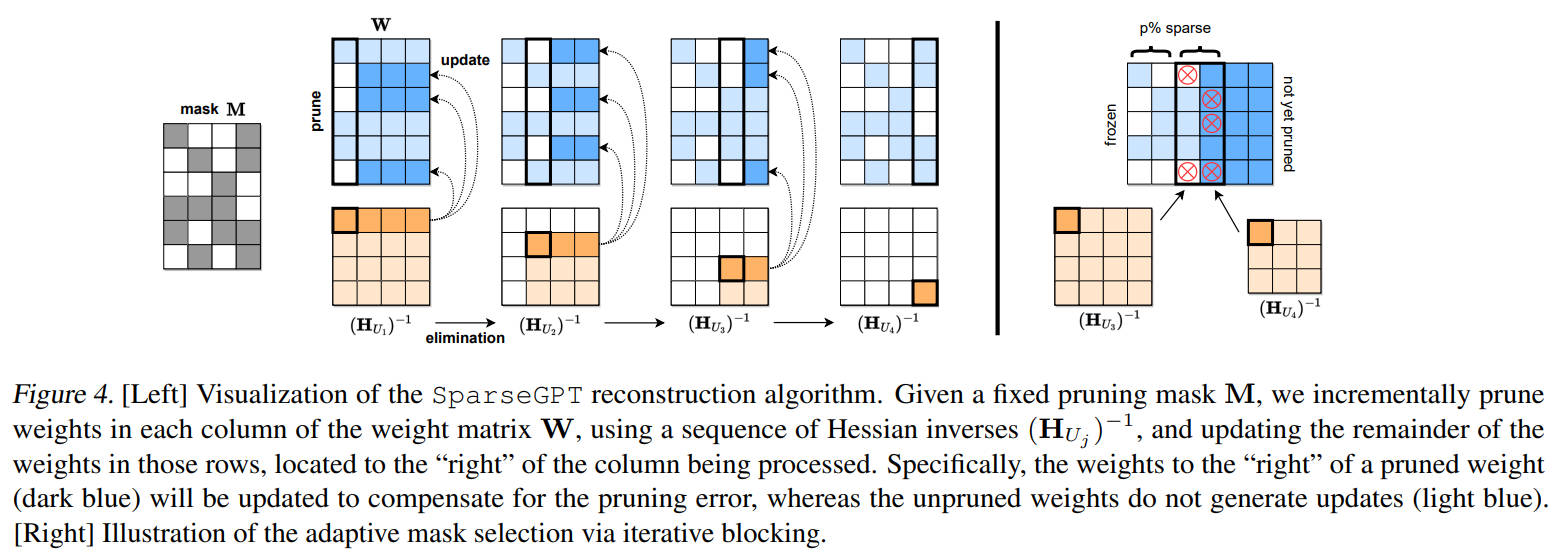

title: "SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot."

abbr: "SparseGPT"

url: "https://arxiv.org/pdf/2301.00774.pdf"

authors: "Elias Frantar"

authors: "Dan Alistarh"

institutions: "IST Austria"

institutions: "Neural Magic"

}

pub {

where: "arXiv"

year: 2023

}

code {

type: "Pytorch"

url: "https://github.com/IST-DASLab/sparsegpt"

}

note {

url: "SparseGPT.md"

}

keyword {

words: "sparsity"

}
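The example entry appears to use protobuf text format. It consists of named blocks of `key: value` pairs, and multi-valued fields such as `authors` and `institutions` are written as repeated keys.

The block below is a minimal sketch of reading such a file into nested dictionaries. It assumes only the flat layout shown above, and `parse_prototxt` is a hypothetical helper, not the repository's code. A schema-driven parser such as `google.protobuf.text_format.Parse` with a matching `.proto` message would be the sturdier choice.

```python
import re
from typing import Dict, List

# Matches one `key: value` line, e.g. `year: 2023` or `abbr: "SparseGPT"`.
FIELD = re.compile(r'^(\w+)\s*:\s*(.+)$')


def parse_prototxt(text: str) -> Dict[str, Dict[str, List[str]]]:
    """Hypothetical parser: flat `block { key: value ... }` text -> block -> key -> values.

    Every key maps to a list, so repeated fields such as `authors` keep all
    their values in file order. Nested blocks, comments and escaped quotes
    are not supported.
    """
    entry: Dict[str, Dict[str, List[str]]] = {}
    block = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines carry no data
        if line.endswith("{"):
            block = line[:-1].strip()  # e.g. "paper", "pub", "code"
            entry.setdefault(block, {})
        elif line == "}":
            block = None
        elif block is not None:
            m = FIELD.match(line)
            if m:
                key, value = m.groups()
                # Drop surrounding quotes; bare values like 2023 stay as text.
                entry[block].setdefault(key, []).append(value.strip().strip('"'))
    return entry


example = """
paper {
  abbr: "SparseGPT"
  authors: "Elias Frantar"
  authors: "Dan Alistarh"
}
pub {
  where: "arXiv"
  year: 2023
}
"""
parsed = parse_prototxt(example)
print(parsed["paper"]["authors"])  # ['Elias Frantar', 'Dan Alistarh']
print(parsed["pub"]["year"])       # ['2023']
```

Keeping every value as a list avoids special-casing which fields may repeat. The cost is that callers index `[0]` for single-valued fields such as `abbr`.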

Quantization

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |

Sparse/Pruning

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | Deep Compression | Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding | ICLR | 2016 | | | |
| 1 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |
| 2 | abbr | DFPC: Data flow driven pruning of coupled channels without data | ICLR | 2023 | | | |
| 3 | abbr | Holistic Adversarially Robust Pruning | ICLR | 2023 | | | |
| 4 | MVUE | Minimum Variance Unbiased N:M Sparsity for the Neural Gradients | ICLR | 2023 | | | |
| 5 | abbr | Pruning Deep Neural Networks from a Sparsity Perspective | ICLR | 2023 | | | |
| 6 | abbr | Rethinking Graph Lottery Tickets: Graph Sparsity Matters | ICLR | 2023 | | | |
| 7 | SMC | Sparsity May Cry: Let Us Fail (Current) Sparse Neural Networks Together! | ICLR | 2023 | SMC-Bench | | |
| 8 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |
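Each generated table appears to list one paper per row: a row index followed by meta, title, publication, year, code, note and cover. The block below is a sketch of rendering such rows, not the repository's generator.

It makes three assumptions:

- Each paper is a flat dictionary with hypothetical keys (`abbr`, `title`, `where`, `year`, `code`, `note`, `cover`).
- Cells are plain text with no links.
- `render_table` is a hypothetical name.

```python
from typing import Dict, List

# Assumed column order: a row index, then the seven labelled columns.
COLUMNS = ["abbr", "title", "where", "year", "code", "note", "cover"]
HEADER = "| | meta | title | publication | year | code | note | cover |"
SEPARATOR = "|" + "---|" * 8


def render_table(papers: List[Dict[str, str]]) -> str:
    """Render papers as a Markdown table; missing fields become empty cells."""
    lines = [HEADER, SEPARATOR]
    for index, paper in enumerate(papers):
        cells = [str(index)] + [paper.get(col, "") for col in COLUMNS]
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


print(render_table([
    {"abbr": "RPTQ",
     "title": "RPTQ: Reorder-based Post-training Quantization for Large Language Models",
     "where": "arXiv", "year": "2023", "code": "PyTorch"},
]))
```

Padding every row to the full column count keeps the Markdown table well-formed even when a paper has no code, note or cover.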

2016

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | Deep Compression | Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding | ICLR | 2016 | | | |

2021

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |

2023

ICCV workshop

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |

ICLR

arXiv

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |
| 1 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |

Eindhoven University of Technology

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SMC | Sparsity May Cry: Let Us Fail (Current) Sparse Neural Networks Together! | ICLR | 2023 | SMC-Bench | | |

Habana Labs

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | MVUE | Minimum Variance Unbiased N:M Sparsity for the Neural Gradients | ICLR | 2023 | | | |

Houmo AI

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |

IST Austria

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |

Intel Corporation

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |

Neural Magic

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |

Stanford University

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | Deep Compression | Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding | ICLR | 2016 | | | |

Tencent AI Lab

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |

University of Texas at Austin

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SMC | Sparsity May Cry: Let Us Fail (Current) Sparse Neural Networks Together! | ICLR | 2023 | SMC-Bench | | |

inst1

inst2

Bingzhe Wu

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |

Brian Chmiel

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | MVUE | Minimum Variance Unbiased N:M Sparsity for the Neural Gradients | ICLR | 2023 | | | |

Dan Alistarh

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |

Daniel Soudry

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | MVUE | Minimum Variance Unbiased N:M Sparsity for the Neural Gradients | ICLR | 2023 | | | |

Elias Frantar

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SparseGPT | SparseGPT: Massive Language Models Can be Accurately Pruned in one-shot. | arXiv | 2023 | Pytorch | note | |

Ivan Lazarevich

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |

Name1

Name2

Nikita Malinin

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | OpenVINO | Post-training deep neural network pruning via layer-wise calibration | ICCV workshop | 2021 | | | |

Shiwei Liu

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SMC | Sparsity May Cry: Let Us Fail (Current) Sparse Neural Networks Together! | ICLR | 2023 | SMC-Bench | | |

Song Han

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | Deep Compression | Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding | ICLR | 2016 | | | |

Zhangyang Wang

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | SMC | Sparsity May Cry: Let Us Fail (Current) Sparse Neural Networks Together! | ICLR | 2023 | SMC-Bench | | |

Zhihang Yuan

| | meta | title | publication | year | code | note | cover |
|---|---|---|---|---|---|---|---|
| 0 | RPTQ | RPTQ: Reorder-based Post-training Quantization for Large Language Models | arXiv | 2023 | PyTorch | | |
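In the institution and author sections above, a paper with several institutions or authors appears once under each of them. For example, SparseGPT is listed under both IST Austria and Neural Magic.

The section order is consistent with plain code-point sorting:

- "IST Austria" comes before "Intel Corporation".
- Lowercase "inst1" comes after "University of Texas at Austin".

The block below is a minimal sketch of that grouping, not a description of what `./refresh_readme.sh` actually does. It assumes each paper is a dictionary whose fields are lists of strings, and `group_by` is a hypothetical helper.

```python
from collections import defaultdict
from typing import Dict, List


def group_by(papers: List[Dict[str, List[str]]], field: str) -> Dict[str, List[str]]:
    """Hypothetical helper: map each value of a (possibly repeated) field to the abbrs that carry it."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for paper in papers:
        for value in paper.get(field, []):
            groups[value].append(paper["abbr"][0])
    # sorted() compares strings by code point, so "IST Austria" (S < n)
    # sorts before "Intel Corporation".
    return {key: groups[key] for key in sorted(groups)}


papers = [
    {"abbr": ["SparseGPT"], "institutions": ["IST Austria", "Neural Magic"]},
    {"abbr": ["OpenVINO"], "institutions": ["Intel Corporation"]},
    {"abbr": ["RPTQ"], "institutions": ["Houmo AI", "Tencent AI Lab"]},
]
for institution, abbrs in group_by(papers, "institutions").items():
    print(institution, abbrs)
```

Because a repeated field contributes one group entry per value, the same paper naturally appears in several sections. A case-insensitive order would instead need `sorted(groups, key=str.casefold)`.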