This repo aims to provide a better daily digest for newly published arXiv papers based on your own research interests and descriptions via relevancy ratings from GPT.

Staying up to date on arXiv papers can take a considerable amount of time, with on the order of hundreds of new papers each day to filter through. There is an official daily digest service; however, large categories like cs.AI still have 50-100 papers a day. Determining whether these papers are relevant and important to you means reading through each title and abstract, which is time-consuming.

This repository offers a method to curate a daily digest, sorted by relevance, using large language models. These models are conditioned based on your personal research interests, which are described in natural language.

- You modify the configuration file `config.yaml` with an arXiv Subject, some set of Categories, and a natural language statement about the type of papers you are interested in.

- The code pulls all the abstracts for papers in those categories and ranks how relevant they are to your interest on a scale of 1-10 using `gpt-3.5-turbo`.

- The code then emits an HTML digest listing all the relevant papers, and optionally emails it to you using SendGrid. You will need to have a SendGrid account with an API key for this functionality to work.
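The ranking step can be illustrated with a minimal sketch. It is not the repository's actual implementation: the `Paper` class, the prompt wording, the one-JSON-object-per-line reply format, and the `threshold` parameter are all assumptions made for this example, and the call to the language model itself is left out.

```python
import json
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    abstract: str


def build_prompt(interest: str, papers: list[Paper]) -> str:
    """Assemble a rating request for a batch of papers (hypothetical wording)."""
    lines = [
        "Rate each paper's relevance to the research interest on a scale of 1-10.",
        'Reply with one JSON object per line, e.g. {"index": 1, "score": 7}.',
        f"Research interest: {interest}",
    ]
    for i, paper in enumerate(papers, start=1):
        lines.append(f"{i}. Title: {paper.title}\n   Abstract: {paper.abstract}")
    return "\n".join(lines)


def rank_papers(papers: list[Paper], reply: str, threshold: int = 7):
    """Parse the model's reply and return (score, paper) pairs, highest first.

    Papers scoring below `threshold` (an assumed cutoff) are dropped.
    """
    scored = []
    for line in reply.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            item = json.loads(line)
            index, score = int(item["index"]), int(item["score"])
        except (ValueError, KeyError, TypeError):
            continue  # skip lines that are not well-formed ratings
        if 1 <= index <= len(papers) and threshold <= score <= 10:
            scored.append((score, papers[index - 1]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored
```

In this sketch, `build_prompt` produces the text sent to the model and `rank_papers` turns the reply into the sorted list that a digest could be rendered from. Malformed lines are skipped rather than raising, since model output is not guaranteed to follow the requested format.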

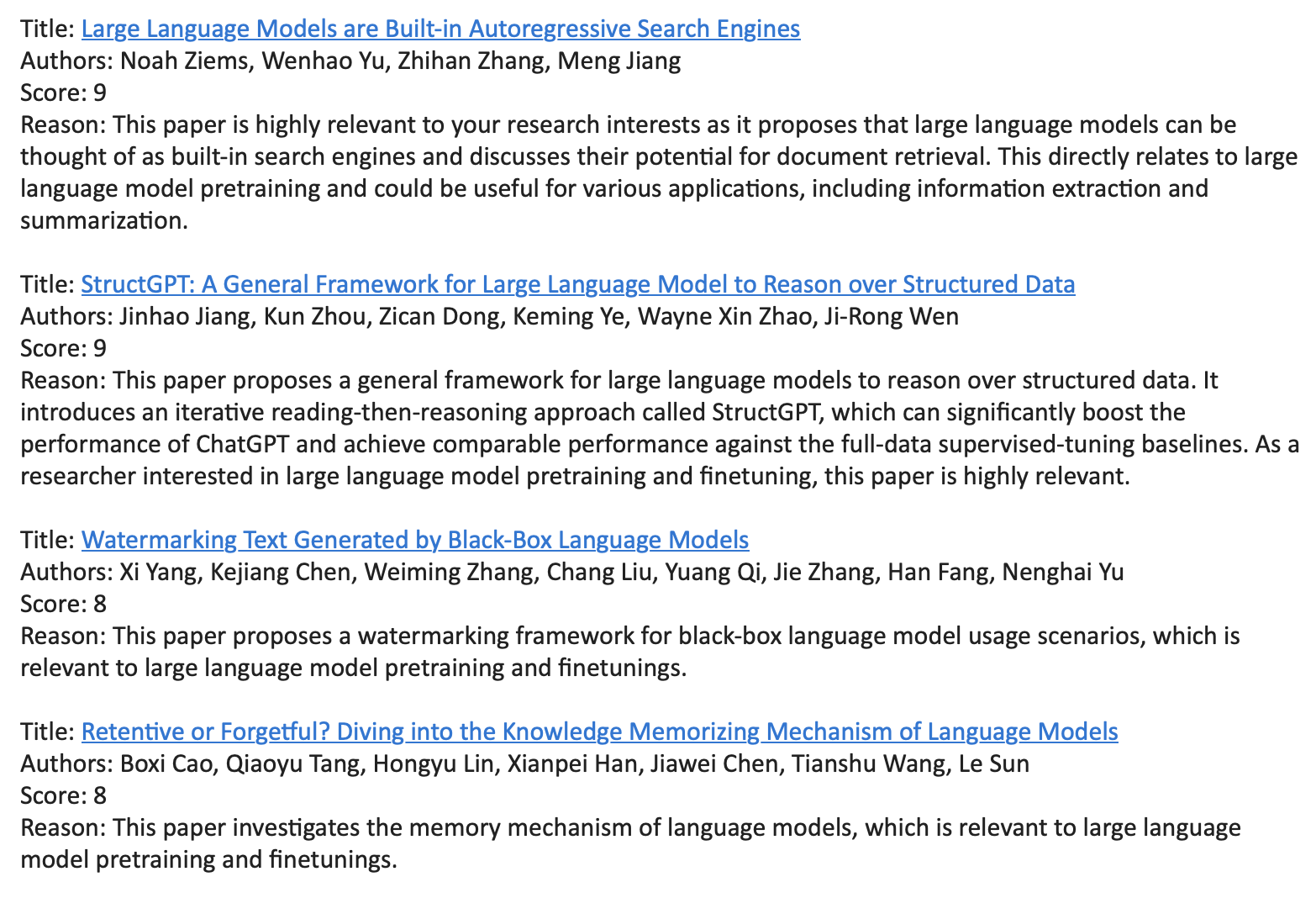

- Subject/Topic: Computer Science

- Categories: Artificial Intelligence, Computation and Language

- Interest:

- Large language model pretraining and finetuning

- Multimodal machine learning

- Do not care about specific applications, for example, information extraction, summarization, etc.

- Not interested in papers that focus on specific languages, e.g., Arabic, Chinese, etc.

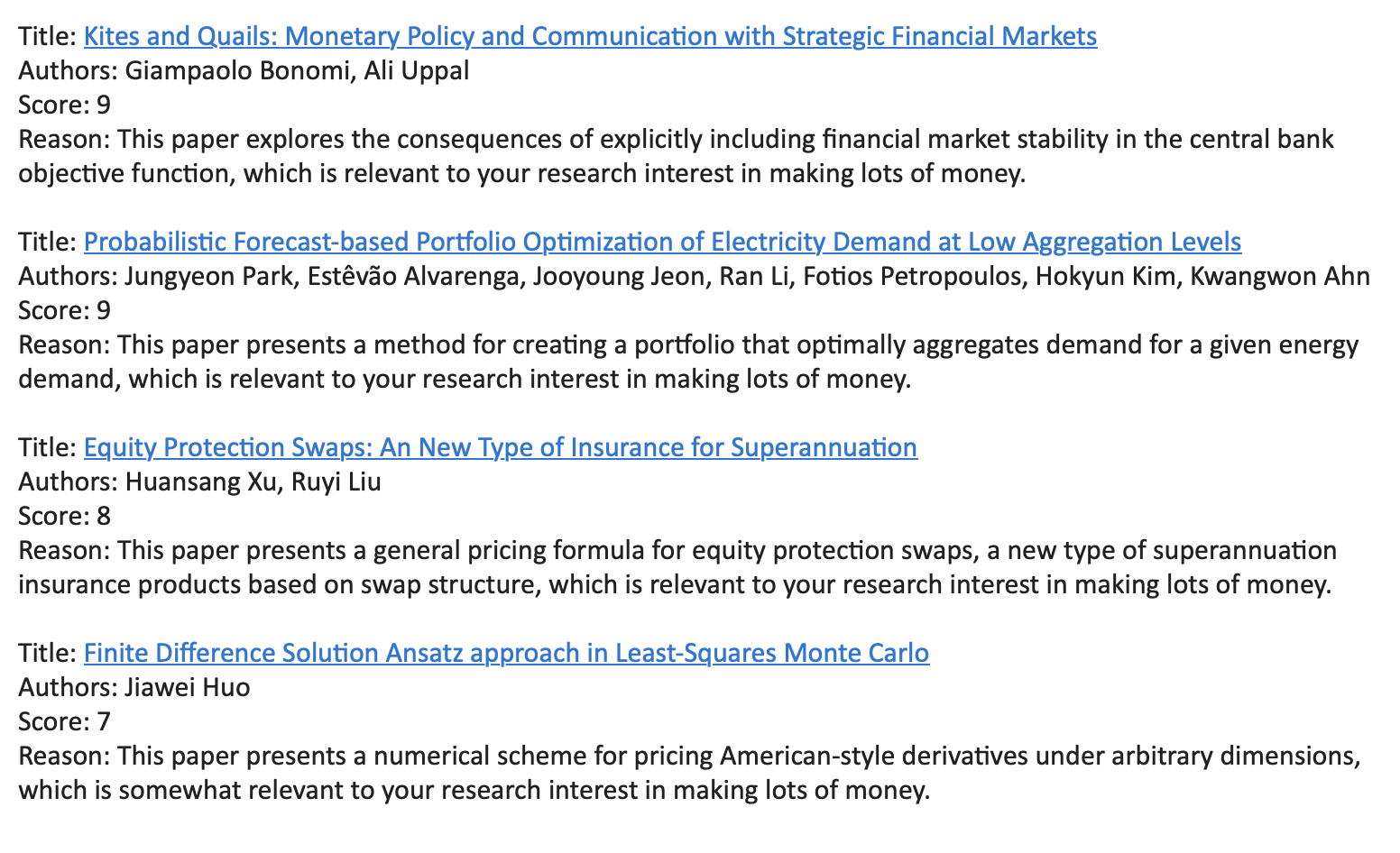

- Subject/Topic: Quantitative Finance

- Interest: "making lots of money"

The recommended way to get started using this repository is to:

- Fork the repository

- Modify `config.yaml` and merge the changes into your main branch. If you want a different schedule than Sunday through Thursday at 1:25PM UTC, then also modify the file `.github/workflows/daily_pipeline.yaml`

- Create or fetch your API key for OpenAI. Note: you will need an OpenAI account.

- Create or fetch your API key for SendGrid. You will need a SendGrid account. The free tier will generally suffice.

- Set the following secrets (under settings, Secrets and variables, repository secrets):

  - `OPENAI_API_KEY`
  - `SENDGRID_API_KEY`
  - `FROM_EMAIL`: This value must match the email you used to create the SendGrid API key. This is not needed if you have it set in `config.yaml`.
  - `TO_EMAIL`: Only if you don't have it set in `config.yaml`.

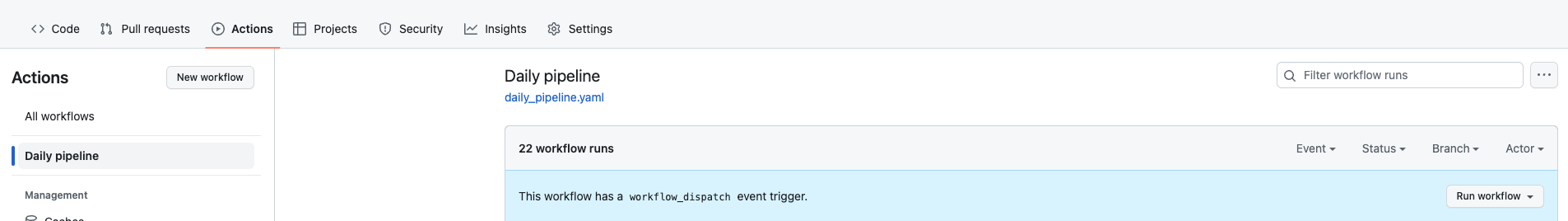

- Manually trigger the action or wait until the scheduled action takes place.

An alternative way to get started using this repository is to:

- Fork the repository

- Modify `config.yaml` and merge the changes into your main branch. If you want a different schedule than Sunday through Thursday at 1:25PM UTC, then also modify the file `.github/workflows/daily_pipeline.yaml`

- Create or fetch your API key for OpenAI. Note: you will need an OpenAI account.

- Find your email provider's SMTP settings and set the secret `MAIL_CONNECTION` to that. It should be in the form `smtp://user:password@server:port` or `smtp+starttls://user:password@server:port`. Alternatively, if you are using Gmail, you can set `MAIL_USERNAME` and `MAIL_PASSWORD` instead, using an application password.

- Set the following secrets (under settings, Secrets and variables, repository secrets):

  - `OPENAI_API_KEY`
  - `MAIL_CONNECTION` (see above)
  - `MAIL_PASSWORD` (only if you don't have `MAIL_CONNECTION` set)
  - `MAIL_USERNAME` (only if you don't have `MAIL_CONNECTION` set)
  - `FROM_EMAIL` (only if you don't have it set in `config.yaml`)
  - `TO_EMAIL` (only if you don't have it set in `config.yaml`)

- Manually trigger the action or wait until the scheduled action takes place.
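To make the `MAIL_CONNECTION` format concrete, here is a small sketch that splits such a string into its parts using Python's standard library. The function name `parse_mail_connection` and the returned dictionary layout are hypothetical and chosen for illustration; this is not how the GitHub action itself parses the secret, only a way to check locally that a connection string has the expected shape.

```python
from urllib.parse import urlsplit


def parse_mail_connection(url: str) -> dict:
    """Split an smtp:// or smtp+starttls:// connection string into its parts."""
    parts = urlsplit(url)
    if parts.scheme not in ("smtp", "smtp+starttls"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    if not parts.hostname or parts.port is None:
        raise ValueError("expected server:port in the connection string")
    return {
        "host": parts.hostname,
        "port": parts.port,
        "username": parts.username or "",
        "password": parts.password or "",
        # True when the scheme asks for a STARTTLS upgrade
        "starttls": parts.scheme == "smtp+starttls",
    }
```

For example, `parse_mail_connection("smtp+starttls://alice:secret@smtp.example.com:587")` yields host `smtp.example.com`, port `587`, and `starttls` set to `True`. The host and credentials here are placeholders.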

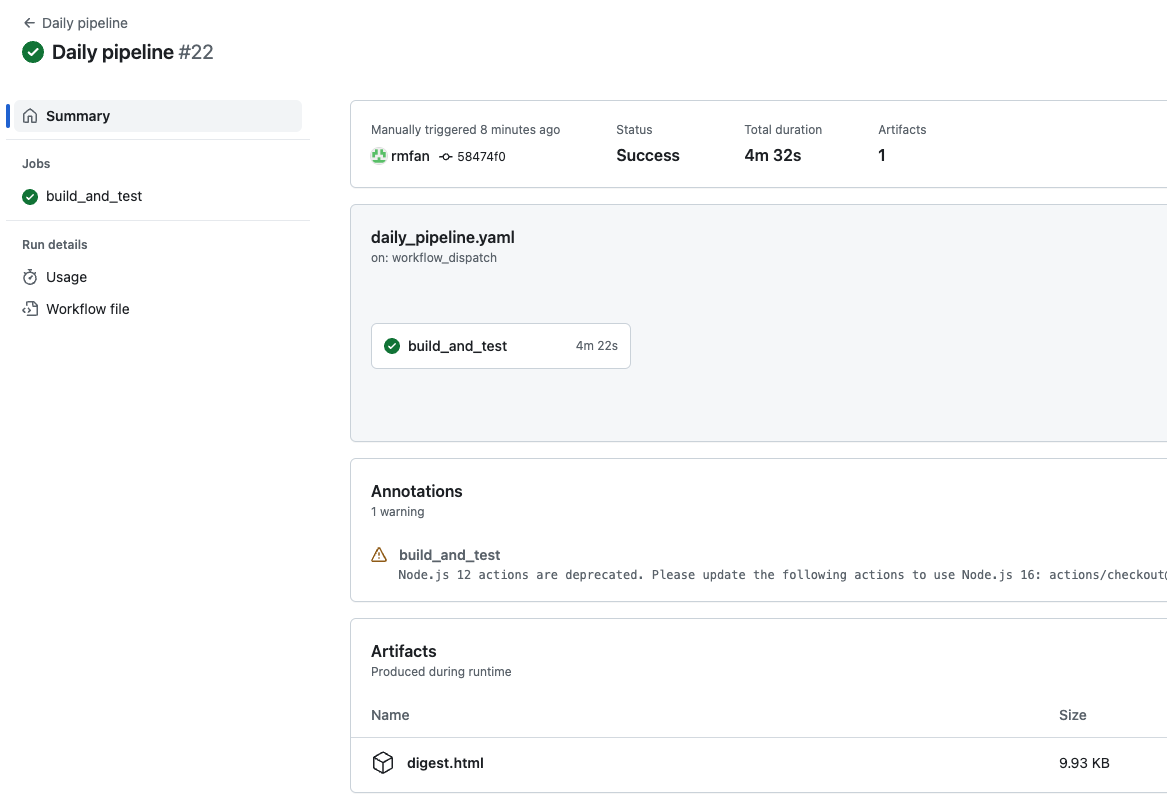

If you do not wish to create a SendGrid account or use your email authentication, the action will also emit an artifact containing the HTML output. Simply do not create the SendGrid or SMTP secrets.

You can access this digest as part of the GitHub Actions artifact.

If you do not wish to fork this repository, and would prefer to clone and run it locally instead:

- Install the requirements in `src/requirements.txt`

- Modify the configuration file `config.yaml`

- Create or fetch your API key for OpenAI. Note: you will need an OpenAI account.

- Create or fetch your API key for SendGrid (optional, if you want the script to email you)

- Set the following secrets as environment variables:

  - `OPENAI_API_KEY`
  - `SENDGRID_API_KEY` (only if using SendGrid)
  - `FROM_EMAIL` (only if using SendGrid and if you don't have it set in `config.yaml`. Note that this value must match the email you used to create the SendGrid API key.)
  - `TO_EMAIL` (only if using SendGrid and if you don't have it set in `config.yaml`)

- Run `python action.py`.

- If you are not using SendGrid, the HTML of the digest will be written to `digest.html`. You can then use your favorite web browser to view it.
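Before a local run, it can help to confirm that the environment variables listed above are present. The following is a minimal sketch of such a check, not something shipped with the repository: the helper name `missing_settings` and its parameters are hypothetical, and whether the emails are already set in `config.yaml` is passed in as a flag rather than read from the file.

```python
import os


def missing_settings(env, use_sendgrid: bool, config_has_emails: bool = False) -> list:
    """Return the names of required variables that are unset or empty in `env`."""
    required = ["OPENAI_API_KEY"]
    if use_sendgrid:
        required.append("SENDGRID_API_KEY")
        # FROM_EMAIL and TO_EMAIL are only needed when config.yaml does not set them
        if not config_has_emails:
            required += ["FROM_EMAIL", "TO_EMAIL"]
    return [name for name in required if not env.get(name)]
```

Calling `missing_settings(os.environ, use_sendgrid=True)` returns an empty list when everything needed for emailing is in place, and otherwise the names still to be exported.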

You may want to use something like crontab to schedule the digest.

Install the requirements in `src/requirements.txt` as well as `gradio`. Set the environment variables `OPENAI_API_KEY`, `FROM_EMAIL` and `SENDGRID_API_KEY`. Ensure that `FROM_EMAIL` matches the email you used to create the SendGrid API key.

Run `python src/app.py` and go to the local URL. From there you will be able to preview the papers from today, as well as the generated digests.

- Support personalized paper recommendation using LLM.

- Send emails for daily digest.

- Implement a ranking factor to prioritize content from specific authors.

- Support open-source models, e.g., LLaMA, Vicuna, MPT etc.

- Fine-tune an open-source model to better support paper ranking and stay updated with the latest research concepts.

You may (and are encouraged to) modify the code in this repository to suit your personal needs. If you think your modifications would be in any way useful to others, please submit a pull request.

These types of modifications include things like changes to the prompt, different language models, or additional ways for the digest to be delivered to you.