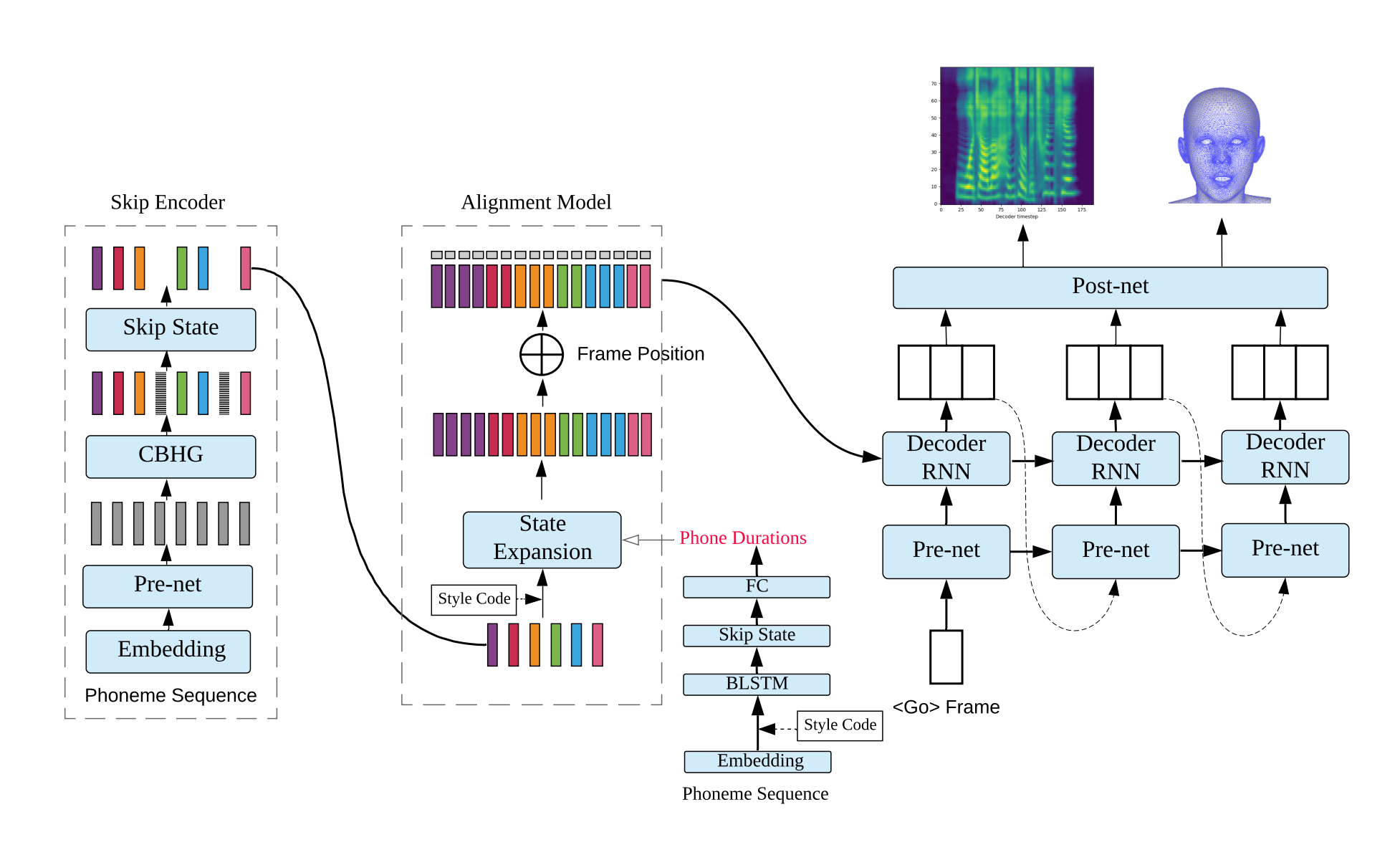

Implementation of DurIAN: Duration Informed Attention Network For Multimodal Synthesis

English:

- I use the same encoder as Tacotron 2.

- I removed the attention module from the decoder and use average pooling to implement "predicting r frames at once".

- I removed position encoding and the skip encoder in this implementation.

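Chinese (translated):

- I use the same encoder structure as Tacotron 2, but with fewer parameters.

- I removed the attention module from the decoder. Because the decoder outputs three frames per step, I average the memory over the three corresponding time steps, which appears in the code as average pooling. In my experiments, compared with a decoder that keeps the attention module, this has a very small negative effect on audio quality but greatly improves training speed.

- I dropped position encoding and the skip encoder, which has very little effect on synthesis quality.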
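The averaging step that replaces attention can be sketched as follows. This is only an illustration under stated assumptions, not the code from this repository: it uses NumPy to stay self-contained, the function name `pool_memory` is hypothetical, and padding the last group by repeating the final frame is an assumption made here so that the frame count divides evenly by r.

```python
import numpy as np


def pool_memory(memory: np.ndarray, r: int = 3) -> np.ndarray:
    """Average every r consecutive frames of a frame-level memory.

    memory: array of shape (T, D), one expanded encoder state per mel frame.
    Returns an array of shape (ceil(T / r), D), one vector per decoder step,
    so each decoder step sees the mean of the r frames it has to predict.
    """
    T, D = memory.shape
    pad = (-T) % r  # frames missing to reach a multiple of r
    if pad:
        # Assumption: repeat the last frame so the final group is complete.
        tail = np.repeat(memory[-1:], pad, axis=0)
        memory = np.concatenate([memory, tail], axis=0)
    # Group frames as (steps, r, D) and average over the r axis.
    return memory.reshape(-1, r, D).mean(axis=1)


# Toy example: 7 frames with 2 features each, r = 3 frames per decoder step.
memory = np.arange(14, dtype=np.float64).reshape(7, 2)
pooled = pool_memory(memory, r=3)
print(pooled.shape)
```

With r = 3, the 7 input frames are grouped into 3 decoder steps. In a PyTorch model the same effect is usually obtained with an average-pooling layer whose kernel size and stride both equal r.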

A sample is available here. I use WaveGlow as the vocoder. A pretrained model is available here; it was trained with a batch size of 32 for 180k steps.

training:

- `pip install -r requirements.txt`

- download and extract LJSpeech dataset

- put LJSpeech dataset in `data`

- `unzip alignments.zip`

- `python3 preprocess.py`

- `CUDA_VISIBLE_DEVICES=0 python3 train.py`

testing:

- put the Nvidia pretrained WaveGlow model in `waveglow/pretrained_model`

- `CUDA_VISIBLE_DEVICES=0 python3 test.py --step [step-of-checkpoint]`

testing using pretrained model:

- put pretrained model in `model_new`

- `CUDA_VISIBLE_DEVICES=0 python3 test.py --step 180000`

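Although DurIAN generates more slowly than FastSpeech, its samples have better audio quality and it requires less computation than FastSpeech. In practical deployment, DurIAN's generation speed fully meets real-time factor (RTF) requirements.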
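For reference, RTF is the time spent synthesizing divided by the duration of the audio produced, so a value below 1 means faster than real time. Below is a small sketch of that arithmetic. The helper name `real_time_factor` is hypothetical, the default sample rate of 22050 Hz is the LJSpeech rate, and the numbers in the example are made up for illustration, not measurements of this model.

```python
def real_time_factor(synthesis_seconds: float, num_samples: int,
                     sample_rate: int = 22050) -> float:
    """Return RTF = synthesis time / duration of the generated audio."""
    audio_seconds = num_samples / sample_rate
    return synthesis_seconds / audio_seconds


# Illustrative numbers only: 0.5 s of compute for 44100 samples (2 s of audio).
rtf = real_time_factor(0.5, 44100)
print(f"RTF = {rtf:.2f}")
```

To measure it for a real run, time the call that produces the waveform (for example with `time.perf_counter()`) and pass the elapsed seconds together with the number of generated samples.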