VTimeLLM [Paper]

Official PyTorch implementation of the paper "VTimeLLM: Empower LLM to Grasp Video Moments".

- Jan-2: Thanks to Xiao Xia, Shengbo Tong and Beining Wang, we have refactored the code to support both the LLaMA and ChatGLM3 architectures. We also translated the training data into Chinese and fine-tuned a Chinese version based on ChatGLM3-6b.

- Dec-14: Released the training code and data. All resources, including models, datasets and extracted features, are available here. 🔥🔥

- Dec-4: VTimeLLM demo released.

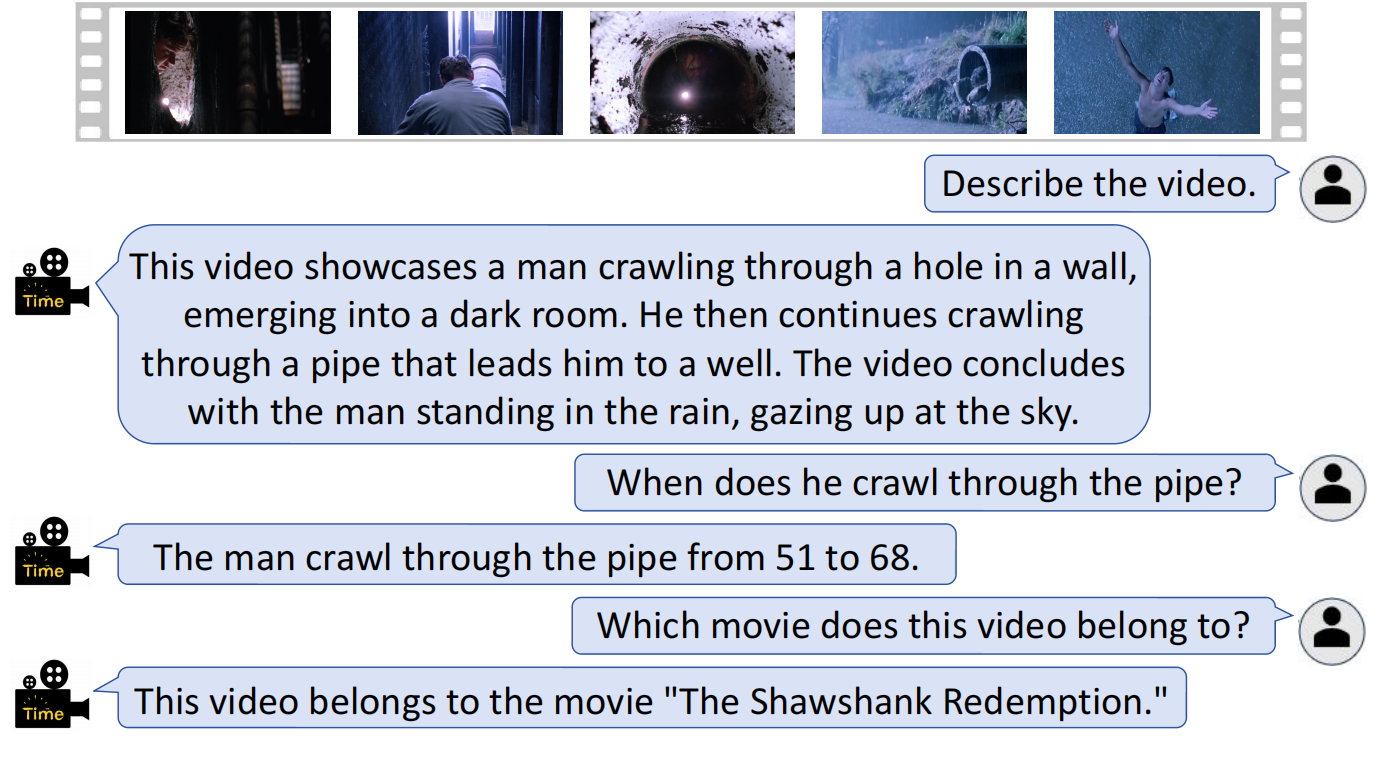

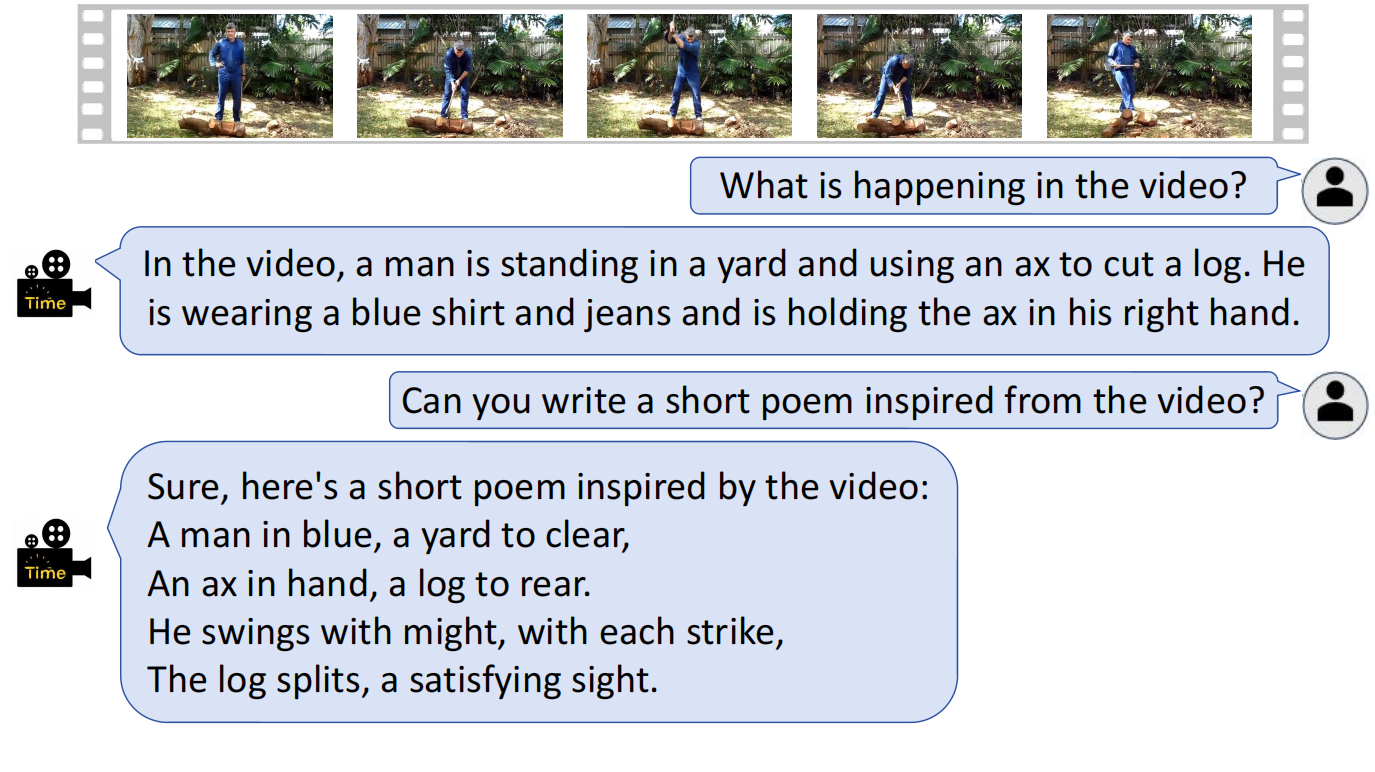

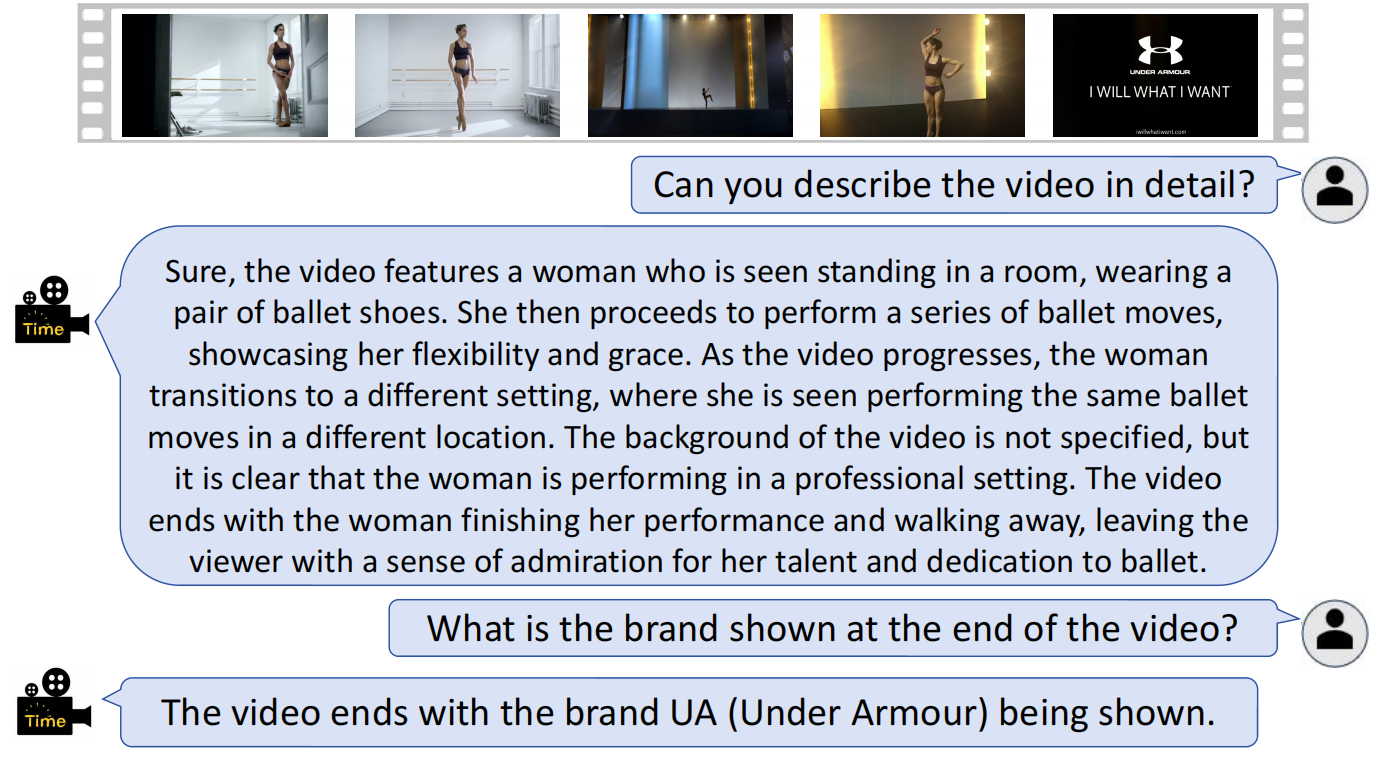

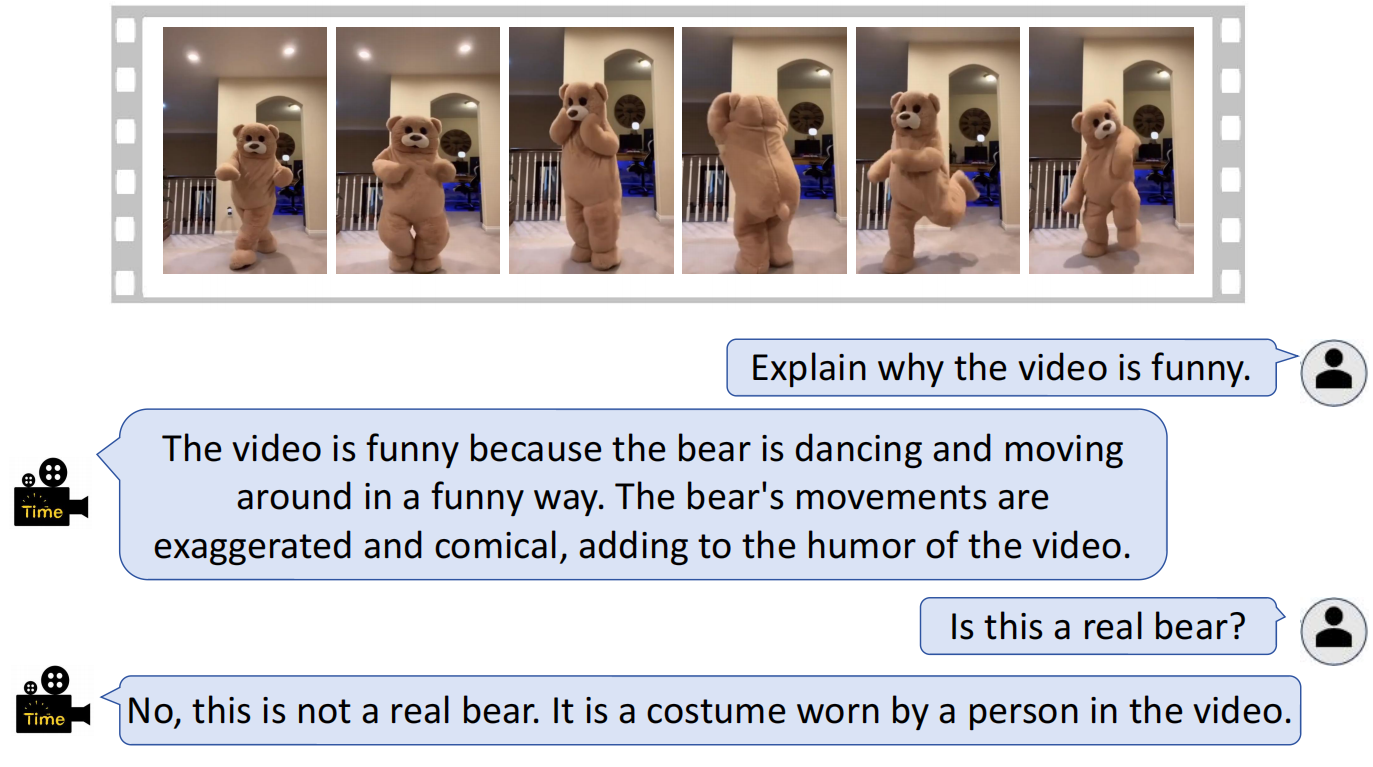

VTimeLLM is a novel Video LLM designed for fine-grained video moment understanding and reasoning with respect to time boundaries.

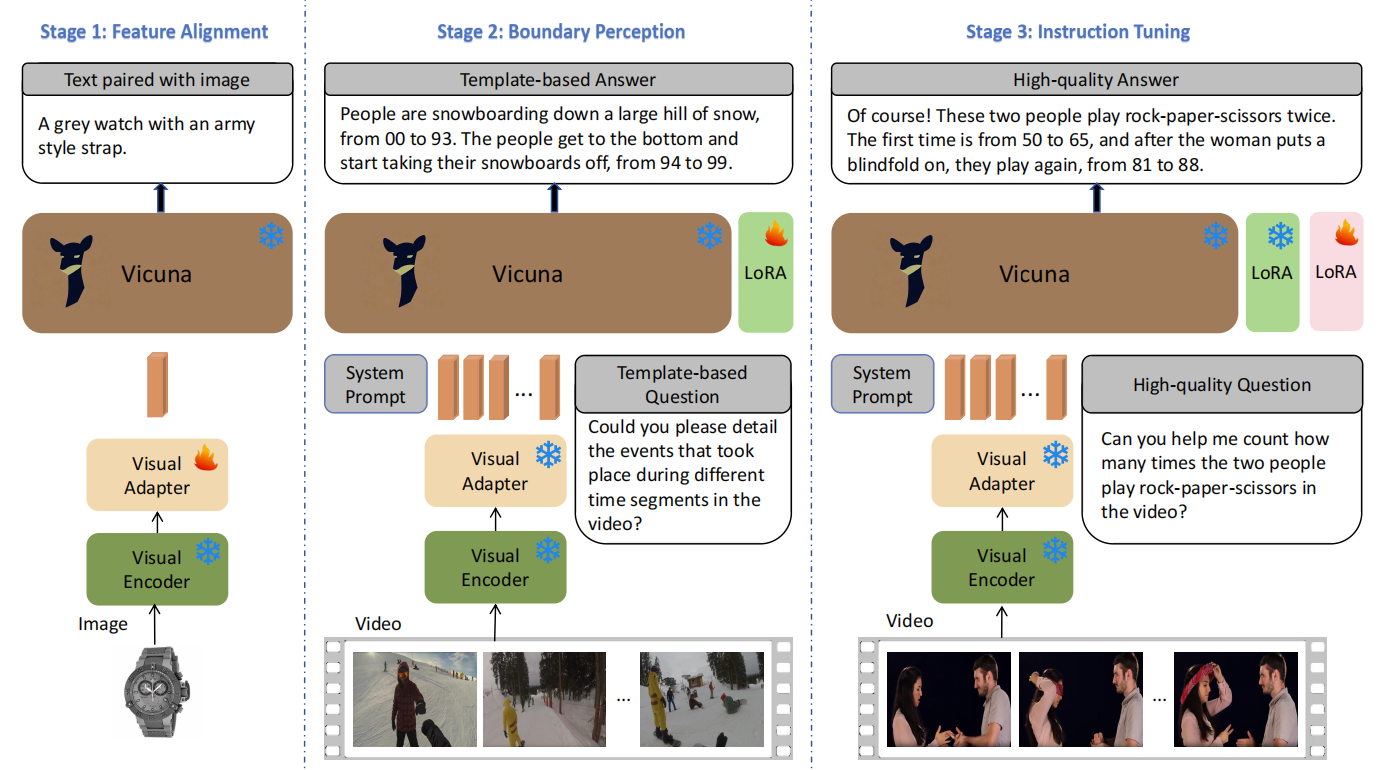

VTimeLLM adopts a boundary-aware three-stage training strategy, which uses image-text pairs for feature alignment, multi-event videos to increase temporal-boundary awareness, and high-quality video-instruction tuning to further improve temporal understanding and align with human intent.

- We propose VTimeLLM, to the best of our knowledge the first boundary-aware Video LLM.

- We propose the boundary-aware three-stage training strategy, which consecutively leverages i) large-scale image-text data for feature alignment, ii) large-scale multi-event video-text data together with temporal single-turn and multi-turn QA to enhance awareness of time boundaries, and iii) instruction tuning on a high-quality dialog dataset for better temporal reasoning.

- We conduct extensive experiments demonstrating that VTimeLLM significantly outperforms existing Video LLMs on various fine-grained temporal video tasks, showing superior video understanding and reasoning ability.
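To make the order of the three training stages easier to scan, here is a minimal sketch that records each stage's data source and goal, as stated above, in a small Python data structure. The `Stage` class, the `STAGES` tuple and the `describe` helper are hypothetical names for illustration only; they are not part of the VTimeLLM code, and the actual training setup is documented in train.md.

```python
from dataclasses import dataclass

# Hypothetical summary of the boundary-aware three-stage training strategy.
# Field values paraphrase the README; names are illustrative, not from the repo.


@dataclass(frozen=True)
class Stage:
    index: int  # position in the consecutive training order
    data: str   # training data used in this stage
    goal: str   # what the stage is meant to improve


STAGES = (
    Stage(1, "large-scale image-text pairs", "feature alignment"),
    Stage(2, "multi-event video-text data + temporal single/multi-turn QA",
          "time-boundary awareness"),
    Stage(3, "high-quality video-instruction dialog data",
          "temporal reasoning and alignment with human intent"),
)


def describe(stages=STAGES):
    """Return one human-readable line per stage, sorted by training order."""
    ordered = sorted(stages, key=lambda s: s.index)
    return [f"Stage {s.index}: {s.data} -> {s.goal}" for s in ordered]


if __name__ == "__main__":
    for line in describe():
        print(line)
```

Keeping the stages in an ordered, immutable tuple mirrors the point that they are applied consecutively rather than mixed together.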

We recommend setting up a conda environment for the project:

```shell
conda create --name=vtimellm python=3.10
conda activate vtimellm
git clone https://github.com/huangb23/VTimeLLM.git
cd VTimeLLM
pip install -r requirements.txt
```

For training, install the following additional packages:

```shell
pip install ninja
pip install flash-attn --no-build-isolation
```

To run the demo offline, please refer to the instructions in offline_demo.md.

For training instructions, see train.md.
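After installation, a quick way to confirm that the packages are visible to the active interpreter is a small check like the one below. It is a minimal sketch, assuming the import names `torch`, `ninja` and `flash_attn` for the packages installed above (`torch` is assumed to come from requirements.txt); `check_environment` is a hypothetical helper, not part of the repository. It relies on `importlib.util.find_spec`, which only locates modules without importing them, so a compiled extension such as flash-attn may still fail at import time even when reported as found.

```python
import importlib.util
import sys

# Assumed import names for the packages installed above.
REQUIRED = ("torch",)                     # assumed to be pulled in by requirements.txt
TRAINING_EXTRAS = ("ninja", "flash_attn")  # only needed for training


def check_environment(modules=REQUIRED + TRAINING_EXTRAS):
    """Map each top-level module name to whether it can be located (not imported)."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    print(f"Python {major}.{minor} (3.10 recommended)")
    for name, found in check_environment().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

Run it with the `vtimellm` environment activated, for example by saving it as `check_env.py` (the file name is arbitrary) and running `python check_env.py`.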

A Comprehensive Evaluation of VTimeLLM's Performance across Multiple Tasks.

We are grateful to the following awesome projects, on which VTimeLLM builds:

- LLaVA: Large Language and Vision Assistant

- FastChat: An Open Platform for Training, Serving, and Evaluating Large Language Model based Chatbots

- Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models

- LLaMA: Open and Efficient Foundation Language Models

- Vid2Seq: Large-Scale Pretraining of a Visual Language Model for Dense Video Captioning

- InternVid: A Large-scale Video-Text dataset

If you're using VTimeLLM in your research or applications, please cite using this BibTeX:

```bibtex
@inproceedings{huang2024vtimellm,
  title={Vtimellm: Empower llm to grasp video moments},
  author={Huang, Bin and Wang, Xin and Chen, Hong and Song, Zihan and Zhu, Wenwu},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={14271--14280},
  year={2024}
}
```

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License.

Looking forward to your feedback, contributions, and stars! 🌟