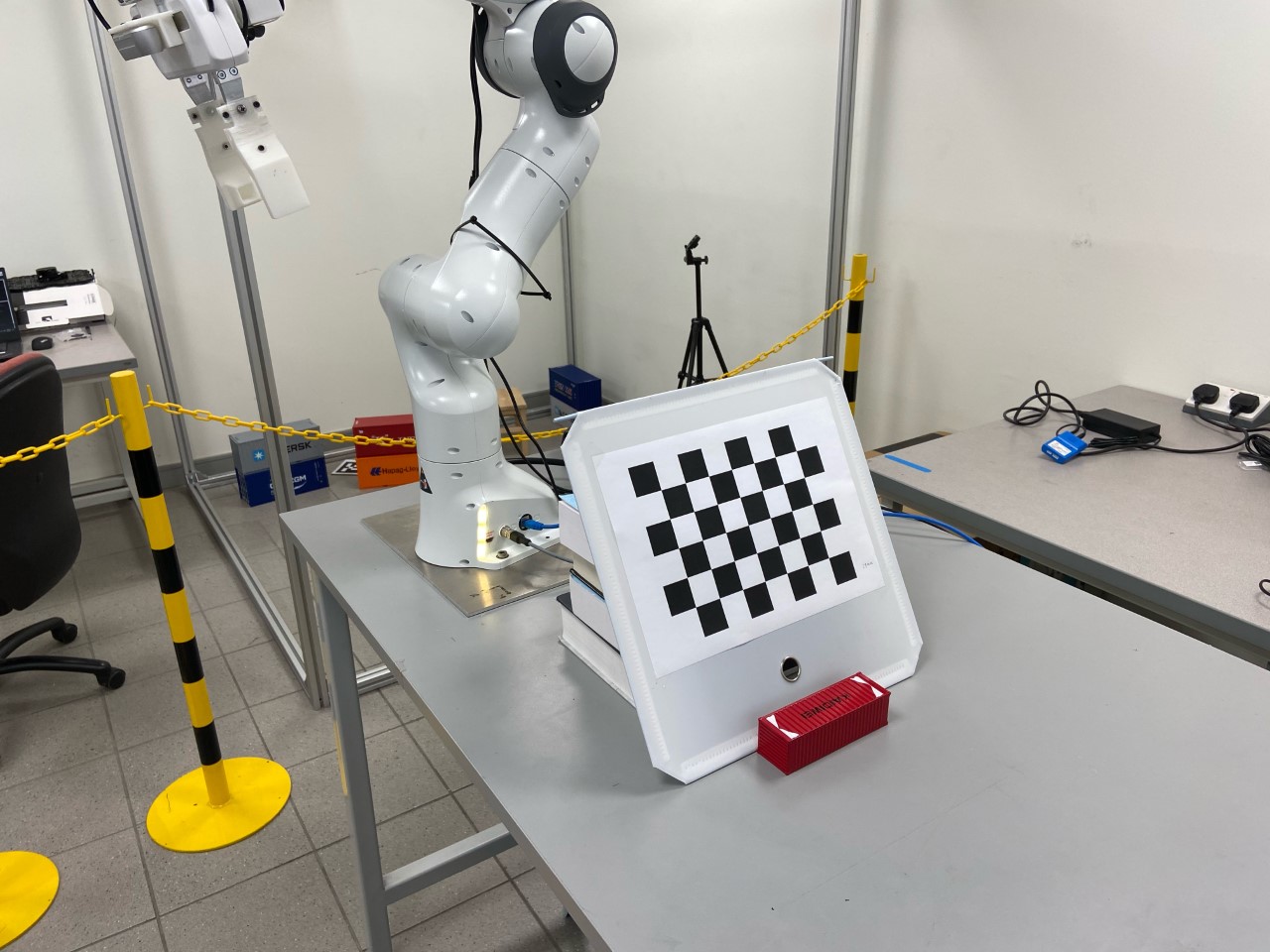

A toolbox for hand-eye calibration for camera on a manipulator. It has been tested on UR5 and Franka Emika Panda robot. Currently, it supports calibration on the Franka Emika Panda robot. To setup the connection of RGBD camera, please refer to this repository.

- libfranka & franka_ros

- MoveIt

- openni_camera & openni_launch: for PrimeSense camera

- librealsense & realsense-ros: for RealSense camera

- OpenCV

pip install opencv-python

- panda_moveit_ctrl (Optional): ROS package to control the Franka Emika Panda robot. If you are using other robots, please follow

src/calibration_toolbox/panda_robot.pyto write your own robot class. - aruco_ros (Optional): for aruco marker tracking. If you are using chessboard, this is not needed.

To calibrate instrinsic, follow the instruction in the camera_calibration ROS package. If the camera is an RGBD camera, make sure to calibrate both the RGB and IR camera.

When using the camera_calibration package, make sure to check the X, Y, Size, and Skew on the left of the window. Make sure all of them are turned green. Move the camera very closed to the chessboard. The distortion is most obvious when it is close to the chessboard.

This repository solves AXXB problem to get the extrinsic calibration problem. It has been tested on Franka Emika Robot with both Intel RealSense D435 and PrimeSense Carmine 1.09. The system we used in Ubunut 20.04. To control the Panda robot, see the panda_moveit_ctrl package. It consists of 2 steps: collecting data and solving AXXB. There are 3 options of calibration:

EH: eye-on-hand, calibrate the pose of the camera frame in the end-effector frameEBME: eye-on-base, calibrate the pose of the marker in the end-effector frameEBCB: eye-on-base, calibrate the pose of the camera in the base frame

In the data collection part, the robot moves to different configurations and collects the transformation from the hand (end effector) to base and the corresponding transformation from camera to chessboard.

-

Generate and print the calibration chessboard or aruco marker. In our

EBCMcalibration, the grid size is 0.038mm. The number of columns and rows are 5 and 6. In ourEHcalibration, the grid size is 0.029mm. The number of columns and rows are 6 and 8. In ourEBMEcalibration, the grid size is 0.010mm. The number of columns and rows are 5. -

Attach the target onto a flat rigid plane and fix it on 1) the end effector of the robot for

EBCB2) table forEH3) and hand forEBME. -

Move the robot to at least 15 configurations. Make sure in each configuration, the camera can see the whole target and the target is LARGE. Because if the target is too small, the estimation of pose will be inaccurate.

-

In

scripts/calib_collector_server_node, specify:target: chessboard / arucocalib_points_file: the txt file of the robot configuration points for data collectionimage_topic: the ROS topic of the image.

If the calibration target is the chessboard, the pose of the chessboard will be detected later. If the calibration target is the ArUco tag, the pose of the tag will be collected along the data collection.

For PrimeSense 1.09, the rgb topic is "/camera/rgb/image_raw". For RealSense D435, the rgb topic is "/camera/color/image_raw".

-

In

src/calib_collector.py, specify the base and end-effector link. -

Initialize the camera. To initialize PrimeSense 1.09,

roslaunch openni2_launch openni2.launchTo initialize RealSense D435,

roslaunch realsense2_camera rs_camera.launchTo check the image of the camera

rosrun image_view image_view image:=<image_topic> -

If you are using aruco marker for calibration,

roslaunch aruco_ros single.launch markerId:=<marker_id> markerSize:=<marker_size in meter>Otherwise, skip this step.

-

Launch the Panda robot

roslaunch panda_moveit_config panda_control_moveit_rviz.launch robot_ip:=<robot_ip> load_gripper:=<true/false> rosrun panda_moveit_ctrl panda_moveit_ctrl_server_node.py -

Run the calib collector server

rosrun calibration_toolbox calib_collector_server_node.pyIt will broadcast the ROS service "collect_data".

-

Call the service to collect data:

rosservice call /collect_data <data_directory>The robot will move through the configurations and collect the data. After the collection process is finished, each image is paired with a robot pose file. The image filename is "x_img.png"/; the robot pose filename is "x_robotpose.txt".

If your calibration target is the chessboard, the following will detect the pose of the chessboard in the camera frame in each collected image with the OpenCV. If you are using aruco marker, skip this step.

- Specify the

chessboard pattern,camera info yaml, anddata directoryinsrc/calibration_toolbox/chessboard_detection.py. - Run the chessboard detector

The chessboard poses are saved in the data directory. marker poses are saved in "x_markerpose.txt". Make sure the axis generated in all of the images are in the same corner of the chessboard.

python chessboard_detector.py

The Park & Martin's method is used to solve AXXB problem.

- Specify the

data directoryandcalibration optioninscripts/main_calib_node.py - Solve the AXXB problem

The calibrated transformation will be saved in the data directory as

rosrun calibration_toolbox main_calib_node.pypose.txtandpose.yaml. Make sure to check each of the check pose in the terminal. If the calibration is successful, they should be very close to each other.

- Add images for the three experiment settings.

- Add an example yaml file.