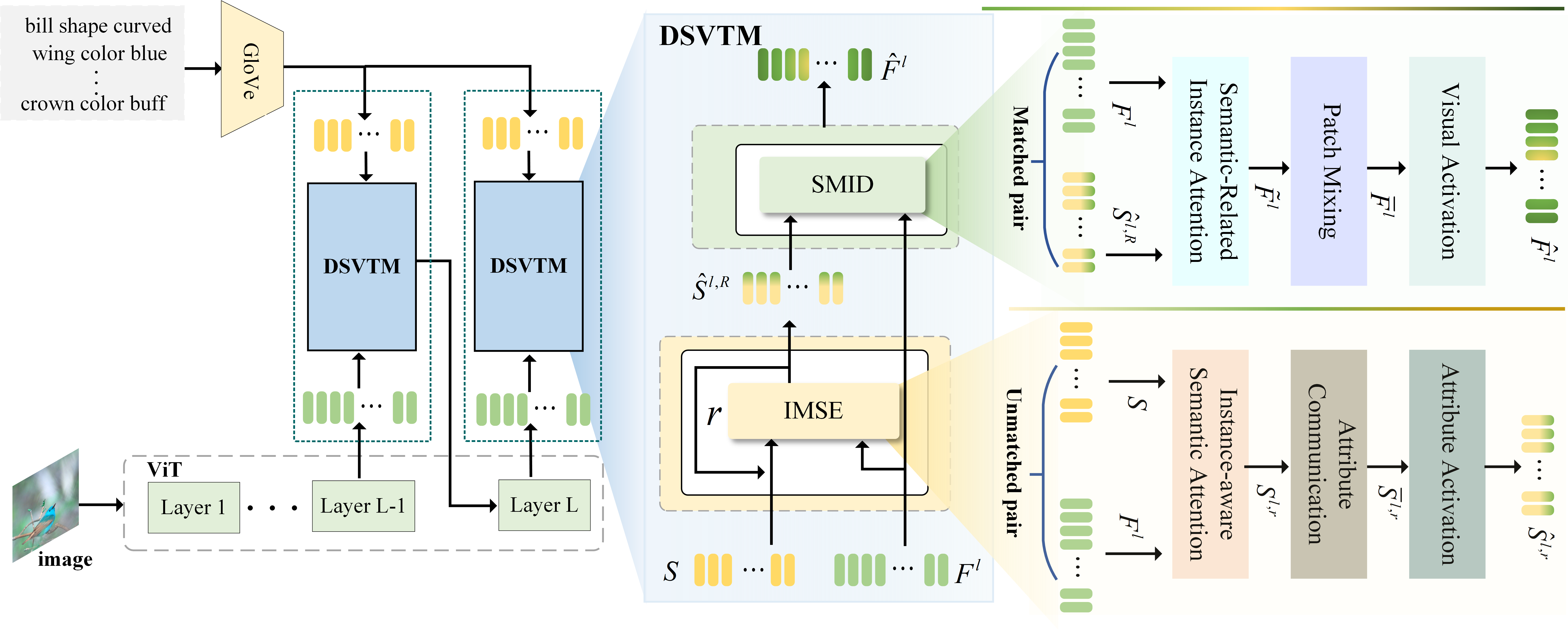

Progressive Semantic-Visual Mutual Adaption for Generalized Zero-Shot Learning

This repository contains the reference code for the paper "Progressive Semantic-Visual Mutual Adaption for Generalized Zero-Shot Learning", accepted to CVPR 2023.

- Python 3.6.7
- PyTorch = 1.7.0
- All experiments are performed with one RTX 3090Ti GPU.

- Dataset: please download the datasets (CUB, AWA2, and SUN) to the dataset root path on your machine.

- Data split: the datasets can be downloaded from Xian et al. (CVPR 2017); place them in the directory `../../datasets/`.

- Attribute w2v: run `extract_attribute_w2v_CUB.py`, `extract_attribute_w2v_SUN.py`, and `extract_attribute_w2v_AWA2.py` to generate the attribute w2v files and place them in `w2v/`.

- Download the pretrained Vision Transformer as the vision encoder.
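Before training, it can help to confirm that the preparation steps above actually left data where the code expects it. The following is a minimal sketch, not part of the repository: the helper name `missing_prerequisites` is hypothetical, and it assumes the two directories named above (`../../datasets/` and `w2v/`) are resolved relative to the directory you run the scripts from. It does not check for specific file names, since those are not listed here.

```python
from pathlib import Path

# Directory names come from the preparation steps above. Resolving them
# relative to a base directory is an assumption of this sketch.
REQUIRED_DIRS = {
    "datasets": Path("../../datasets"),
    "w2v": Path("w2v"),
}


def missing_prerequisites(base=Path("."), required=REQUIRED_DIRS):
    """Return the names of required directories that are absent or empty."""
    missing = []
    for name, rel_path in required.items():
        path = Path(base) / rel_path
        # A directory counts as ready only if it exists and has at least one entry.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(name)
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    if problems:
        print("Missing or empty:", ", ".join(problems))
    else:
        print("All prerequisite directories are present.")
```

Run it from the repository root; an empty result means both directories exist and contain at least one file or subdirectory.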

Before running the commands, you can set the hyperparameters for each dataset in the corresponding config file:

config/cub.yaml #CUB

config/sun.yaml #SUN

config/awa2.yaml #AWA2
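If you script experiments across datasets, a small lookup from dataset name to config file can avoid typos in paths. This is only an illustrative sketch: the `config_path` helper and the `CONFIG_FILES` table are hypothetical names, and how `train.py` itself selects a config file is not described here, so check the script before relying on this.

```python
from pathlib import Path

# Mapping taken from the config files listed above.
CONFIG_FILES = {
    "CUB": "config/cub.yaml",
    "SUN": "config/sun.yaml",
    "AWA2": "config/awa2.yaml",
}


def config_path(dataset):
    """Return the config file path for a dataset name (case-insensitive)."""
    key = dataset.upper()
    if key not in CONFIG_FILES:
        raise ValueError(
            f"Unknown dataset {dataset!r}; expected one of {sorted(CONFIG_FILES)}"
        )
    return Path(CONFIG_FILES[key])
```

For example, `config_path("AwA2")` resolves to the AWA2 config file regardless of how the dataset name is capitalized, and an unrecognized name raises `ValueError` instead of silently pointing at a nonexistent file.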

Train:

python train.py

Eval:

python test.py

You can test our trained models: CUB, AwA2, SUN.

If this work is helpful for you, please cite our paper.

@InProceedings{Liu_2023_CVPR,

author = {Liu, Man and Li, Feng and Zhang, Chunjie and Wei, Yunchao and Bai, Huihui and Zhao, Yao},

title = {Progressive Semantic-Visual Mutual Adaption for Generalized Zero-Shot Learning},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

pages = {15337-15346}

}

We thank the following repos for providing helpful components for our work: GEM-ZSL.